We just announced the launch of DirectFlash Fabric, which uses the NVMe-oF RoCE protocol. Our first NVMe-oF implementation uses RoCE, which stands for RDMA over Converged Ethernet (RDMA = Remote Direct Memory Access). FlashArray//X with DirectFlash technology delivers performance that exceeds that of SAS SSDs.

DirectFlash Fabric uses NVMe-oF RoCE protocol

In this blog, I would like to show you the performance benefits, as well as other advantages provided by FlashArray such as data reduction and snapshots, in the context of an Apache Cassandra cluster. I will also compare an Apache Cassandra cluster deployed on Pure Storage FlashArray with DirectFlash against one deployed on SAS DAS.

Apache Cassandra is an open-source, distributed, wide column store, NoSQL database management system designed to handle large amounts of data across many servers, providing high availability with no single point of failure.

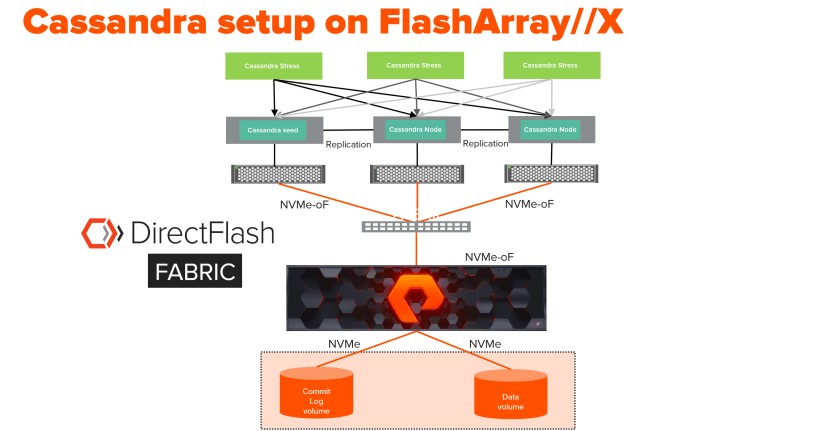

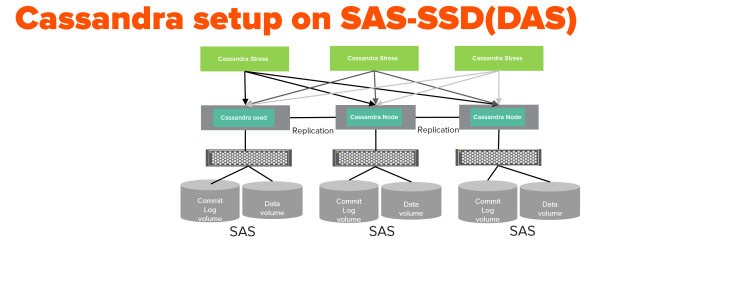

To test the performance of Apache Cassandra, I built two three-node clusters. The first cluster was deployed on FlashArray//X with DirectFlash Fabric and the other on DAS SAS SSDs.

Configuration for FlashArray DirectFlash NVMe-oF based Cassandra cluster setup:

The cluster consisted of three nodes: one seed node, used for bootstrapping the gossip process, and two peer nodes. The data and commit log volumes were FlashArray//X volumes formatted with the XFS file system. The servers connected to the FlashArray//X over the NVMe-oF protocol. FlashArray//X also provides NVMe connectivity from the disks to the host bus adapter (HBA), which gives full end-to-end NVMe connectivity from the host to the disk.

Here are the specifications of each host (x86) for the Cassandra cluster on the FlashArray//X DirectFlash Fabric setup:

- OS: Red Hat Linux 7.6

- Host to Pure Storage FlashArray//X90R2 Connectivity: Mellanox ConnectX-4 Lx (Dual Port 25 GbE)

- Apache Cassandra version: 3.11.3

Configuration for SAS DAS based Cassandra cluster setup:

This cluster also consisted of three nodes: one seed node, used for bootstrapping the gossip process for new nodes joining the cluster, and two peer nodes that joined the seed node. The data and commit log volumes were on SAS DAS SSD drives formatted with the XFS file system.

Here are the specifications of each host (x86) for the Cassandra cluster using SAS SSD DAS:

- OS: Red Hat Linux 7.6

- DAS: 4x 1.6TB 12Gb/s SAS SSD (RAID 10)

- Apache Cassandra version: 3.11.3

Performance test setup and Results:

The cassandra-stress load generator was used to generate load on each three-node cluster. The keyspace used by cassandra-stress had its replication factor set to 3 and was configured with durable writes. The testing consisted of writes (updates) only.

When the durable writes parameter is set to true, for each write I/O the Cassandra node first writes the data to an on-disk append-only structure called the commit log and then writes it to an in-memory structure called the memtable. When the memtable is full or reaches a certain size, it is flushed to an on-disk immutable structure called an SSTable.

Three cassandra-stress instances ran in parallel against the three Cassandra nodes, as shown above. To saturate the cluster, each cassandra-stress instance connected to all three nodes to generate load. The CQL (Cassandra Query Language) workload issues update statements for writes, which have identical performance characteristics to insert statements in Cassandra. For these tests, database compression was disabled.
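To make the durable write path described above more concrete, here is a minimal Python sketch. It is a toy model, not Cassandra's actual implementation: the `ToyNode` class, its row-count flush threshold, and the way the commit log is cleared after a flush are all simplifying assumptions made for illustration.

```python
class ToyNode:
    """Toy model of a Cassandra node's write path (not Cassandra's real code)."""

    def __init__(self, memtable_limit=3, durable_writes=True):
        self.memtable_limit = memtable_limit  # hypothetical flush threshold (row count)
        self.durable_writes = durable_writes
        self.commitlog = []   # stands in for the on-disk append-only commit log
        self.memtable = {}    # in-memory structure, keyed by partition key
        self.sstables = []    # each flush produces one immutable SSTable

    def write(self, key, value):
        if self.durable_writes:
            self.commitlog.append((key, value))  # 1. append to the commit log first
        self.memtable[key] = value               # 2. then update the memtable
        if len(self.memtable) >= self.memtable_limit:
            self.flush()                         # 3. flush once the memtable is full

    def flush(self):
        # Freeze the memtable contents as a sorted, immutable SSTable.
        self.sstables.append(tuple(sorted(self.memtable.items())))
        self.memtable = {}
        # Simplification: flushed data no longer needs the commit log for recovery.
        self.commitlog.clear()


node = ToyNode(memtable_limit=3)
for i in range(7):
    node.write(f"key{i}", f"value{i}")

print(len(node.sstables), len(node.memtable), len(node.commitlog))
```

With seven writes and a threshold of three rows, the toy node flushes twice and keeps the seventh row in both the commit log and the memtable. The point of the sketch is that every durable write touches the commit log on disk before it is acknowledged, which is why storage write latency matters for this workload.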

Here is the keyspace information used by Cassandra-stress:

CREATE KEYSPACE keyspace1 WITH replication = {'class': 'SimpleStrategy', 'replication_factor': '3'} AND durable_writes = true;
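With SimpleStrategy and a replication factor of 3 on a three-node cluster, every node holds a replica of every row, so each write lands on all three nodes. The sketch below illustrates the idea in Python. It assumes one token per node (no virtual nodes), uses MD5 as a stand-in token function rather than Cassandra's real partitioner, and the node names are hypothetical.

```python
import hashlib


def toy_token(value):
    # Toy token function; real clusters use a partitioner such as Murmur3.
    return int(hashlib.md5(value.encode()).hexdigest(), 16)


def simple_strategy_replicas(partition_key, nodes, replication_factor):
    """Walk the ring clockwise from the key's token, taking distinct nodes."""
    ring = sorted(nodes, key=toy_token)  # nodes ordered by their token
    key_token = toy_token(partition_key)
    # First node whose token is >= the key's token, wrapping to the ring start.
    start = next((i for i, n in enumerate(ring) if toy_token(n) >= key_token), 0)
    count = min(replication_factor, len(ring))
    return [ring[(start + i) % len(ring)] for i in range(count)]


nodes = ["node1", "node2", "node3"]  # hypothetical node names
replicas = simple_strategy_replicas("some-partition-key", nodes, 3)
print(sorted(replicas))
```

Whatever key is used, the replica set in this configuration is always the full set of nodes; only the order in which the ring is walked changes.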

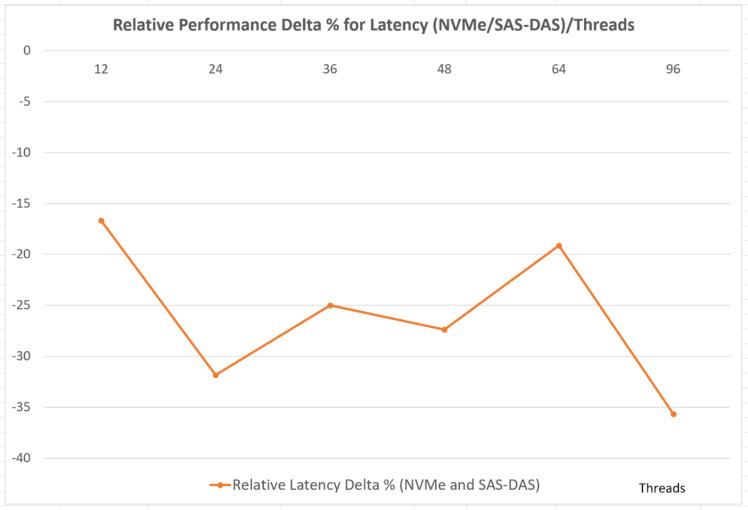

The chart below shows the relative latency delta (in percent) versus the number of threads between the Cassandra cluster on NVMe-oF FlashArray and the Cassandra cluster on SAS DAS. It shows that write/update latency on the NVMe-oF FlashArray cluster is lower than on the SAS DAS cluster (28.6% lower on average), even as the number of clients (threads) increases.

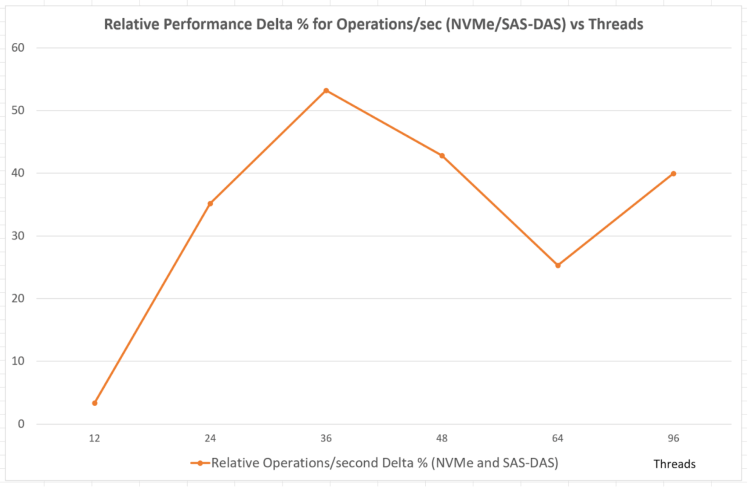

The next chart shows the relative delta of operations per second (in percent) versus the number of threads for writes/updates between the Cassandra cluster on NVMe-oF FlashArray and the Cassandra cluster on SAS DAS.

Here too, the throughput of the Cassandra cluster on NVMe-oF FlashArray is higher than that of the SAS DAS cluster (33.5% higher on average), even as the number of clients (threads) increases.
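For readers who want to reproduce this kind of comparison on their own clusters, here is a small Python sketch of how a relative delta per thread count, and its average, can be computed. I am assuming the delta is taken relative to the DAS value. The numbers in the lists are hypothetical placeholders, not the measurements behind the charts.

```python
def relative_delta_pct(flasharray, das):
    """Percentage difference of the FlashArray value relative to the DAS value."""
    return (flasharray - das) / das * 100.0


def mean(values):
    return sum(values) / len(values)


# Hypothetical placeholder measurements, NOT the results from this test.
threads = [16, 32, 64]
das_latency_ms = [1.0, 2.0, 4.0]
fa_latency_ms = [0.75, 1.5, 3.0]

deltas = [relative_delta_pct(fa, das)
          for fa, das in zip(fa_latency_ms, das_latency_ms)]

for t, d in zip(threads, deltas):
    print(f"{t} threads: {d:+.1f}%")
print(f"average: {mean(deltas):+.1f}%")
```

A negative delta means the FlashArray value is lower than the DAS value, which is the desired direction for latency. For operations per second the same function applies, and a positive delta is the desired direction.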

All tests were performed with the replication factor for the keyspace set to three, which generated optimal results.

Shared storage such as FlashArray//X also brings other benefits to Cassandra deployments, namely data reduction and data protection using FlashArray snapshots. Here are those benefits in detail:

Data reduction:

Pure Storage provides the industry’s most granular and complete data reduction, which is included with every FlashArray//X. For many applications, it delivers data reduction savings that bring the cost of all-flash below that of spinning disk.

The data reduction ratio on FlashArray for Apache Cassandra is around 2.4:1 to 3.0:1 for its data and commit log volumes. The data in the Cassandra tables was not compressed by Cassandra. This significantly reduces the storage footprint. Because Cassandra does not have to compress and decompress the data, it also saves host CPU cycles. In addition, SAN storage can be managed easily from one central management GUI.

On the other hand, DAS is inexpensive, but its node capacity is difficult to manage and scale, whereas SAN storage is more flexible and scales easily.
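To put the 2.4 to 3.0 data reduction figure in perspective, the short Python sketch below converts a logical data size into the physical capacity consumed on the array. It assumes the ratio means logical size divided by physical size, and the 12 TB logical size is a hypothetical example, not a number from this test.

```python
def physical_footprint_tb(logical_tb, reduction_ratio):
    """Physical capacity consumed, assuming ratio = logical size / physical size."""
    return logical_tb / reduction_ratio


# Hypothetical total across the data and commit log volumes of all nodes.
logical_tb = 12.0

for ratio in (2.4, 3.0):
    physical = physical_footprint_tb(logical_tb, ratio)
    print(f"{ratio}:1 -> {physical:.1f} TB physical")
```

Because a replication factor of 3 stores three copies of the data, the logical size written by the cluster is roughly three times the size of the unique data set, so array-side data reduction helps offset the cost of those extra copies.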

FlashArray snapshots:

FlashArray snapshots are instantaneous, making them well suited for protecting or cloning your Cassandra clusters. In the demo shown in my other blog, I used snapshots to recover a Cassandra cluster; they can also be used to copy or clone a Cassandra cluster for development and testing purposes. Cassandra offers native snapshots, but they are often very difficult to use. Most customers cannot take more than 2-3 native Cassandra snapshots before they run out of storage space, whereas Pure Storage FlashArray snapshots are instantaneous and consume little space to start with. We can also take thousands of Pure Storage FlashArray snapshots without impacting the array’s performance. In contrast, DAS does not provide any kind of snapshot mechanism.

Please refer to my other blog for more information on the other value propositions in detail.

Summary: With NVMe-oF performance higher than DAS, plus other benefits such as data reduction and data protection, Pure Storage FlashArray with NVMe-oF is a better choice than DAS for Apache Cassandra. I highly recommend trying this new architecture, based on the value propositions it brings to the Cassandra world over DAS. Thank you for reading our article on DirectFlash.