Containers and containerization have transformed the way applications are developed and deployed. But what are containers, and what value can modern all-flash storage add to this story?

What are Containers and Where Did They Come From?

Containers are a form of virtualization, but don’t confuse them with virtual machines. In essence, containers are like sandboxes that run on top of a host operating system without needing a full-blown guest operating system image or a hypervisor. This makes the process isolation they provide much more lightweight than virtualization with virtual machines.

The concept of a container can be traced all the way back to 1979, when chroot in Version 7 Unix first made it possible to isolate a process in its own view of the file system. Fast forward to 2013 and Docker® was born, followed by container orchestration platforms like Kubernetes, sowing the seeds of the explosion in container usage we see today. There are other ways of running containers, such as LXC on Linux or rkt by CoreOS; however, Docker is far and away the most popular container engine.

Who Is Using Containers?

Google™ probably stands out as the “container poster child”, in that it runs virtually its entire infrastructure on containers; however, Netflix®, Spotify® and eBay® are also notable container users.

Containers have since proliferated in enterprise scenarios and are the gold standard for modern, microservices-based applications. Even stateful, mission-critical legacy applications are being migrated to containers.

What Applications Are Containers Used For?

A glance at the ten most-pulled images on Docker Hub gives some indication of how Docker is used.

Note that mysql, mongo and postgres all appear among the ten most-pulled images, which indicates that people are using containers to run databases and store data.

Why Are Containers So Popular?

By virtue of their lightweight nature, container usage leads to better use of compute resources. Consider this simple Jenkins build pipeline:

```groovy
node {
    stage('git checkout') {
        // Check out the project from a local Git repository
        git 'file:///C:/Projects/SsdtDevOpsDemo'
    }
    stage('build dacpac') {
        // Build the SSDT project with MSBuild, then stash the resulting .dacpac
        bat "\"${tool name: 'Default', type: 'msbuild'}\" /p:Configuration=Release"
        stash includes: 'SsdtDevOpsDemo\\bin\\Release\\SsdtDevOpsDemo.dacpac', name: 'theDacpac'
    }
    stage('start container') {
        // Run SQL Server on Linux detached, mapping host port 15566 to 1433
        bat 'docker run -e "ACCEPT_EULA=Y" -e "SA_PASSWORD=P@ssword1" --name SQLLinuxLocal -d -i -p 15566:1433 microsoft/mssql-server-linux'
    }
    stage('deploy dacpac') {
        // Publish the stashed .dacpac to the database running in the container
        unstash 'theDacpac'
        bat "\"C:\\Program Files\\Microsoft SQL Server\\140\\DAC\\bin\\sqlpackage.exe\" /Action:Publish /SourceFile:\"SsdtDevOpsDemo\\bin\\Release\\SsdtDevOpsDemo.dacpac\" /TargetConnectionString:\"server=localhost,15566;database=SsdtDevOpsDemo;user id=sa;password=P@ssword1\" /p:ExcludeObjectType=Logins"
    }
    stage('run tests') {
        print "Tests go here"
    }
    stage('cleanup') {
        // -f stops the container if it is still running, then removes it
        bat 'docker rm -f SQLLinuxLocal'
    }
}
```

This performs the following functions:

- It checks out code from a local Git repository

- The code is built into a deployable artifact

- A container is started from the Linux® SQL Server image

- The code is then deployed to the container

- There is a stub in which some tests can be run

- Finally, the container is stopped and removed
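
The same start, work, clean-up sequence can be sketched outside Jenkins. The following is a minimal Python sketch, assuming the Docker CLI is on the PATH; the function names (`run_pipeline`, `start_container_cmd`, `remove_container_cmd`, `docker_runner`) are hypothetical and not part of the original pipeline. One design difference is deliberate: in the scripted pipeline above, a failure in an earlier stage aborts the build and skips the cleanup stage, so this sketch puts the `docker rm -f` call in a `finally` block.

```python
import subprocess
from typing import Callable, List, Sequence

# Mirrors the container used by the Jenkins pipeline above.
CONTAINER_NAME = "SQLLinuxLocal"
IMAGE = "microsoft/mssql-server-linux"

# A runner executes one command given as an argument list.
Runner = Callable[[Sequence[str]], None]


def start_container_cmd(sa_password: str, host_port: int = 15566) -> List[str]:
    """Argument list for `docker run`, using the same flags as the pipeline."""
    return [
        "docker", "run",
        "-e", "ACCEPT_EULA=Y",
        "-e", f"SA_PASSWORD={sa_password}",
        "--name", CONTAINER_NAME,
        "-d", "-i",
        "-p", f"{host_port}:1433",  # map a host port to SQL Server's port 1433
        IMAGE,
    ]


def remove_container_cmd() -> List[str]:
    """`docker rm -f` stops the container if it is running, then removes it."""
    return ["docker", "rm", "-f", CONTAINER_NAME]


def docker_runner(cmd: Sequence[str]) -> None:
    """Run a command for real; check=True raises if docker exits non-zero."""
    subprocess.run(list(cmd), check=True)


def run_pipeline(
    deploy_and_test: Callable[[], None],
    sa_password: str,
    runner: Runner = docker_runner,
) -> None:
    """Start a throwaway SQL Server container, do the work, always clean up."""
    runner(start_container_cmd(sa_password))
    try:
        deploy_and_test()  # e.g. publish the dacpac and run the tests
    finally:
        # Runs even when deploy_and_test raises, so no container is left behind.
        runner(remove_container_cmd())
```

Calling `run_pipeline(lambda: print("Tests go here"), sa_password="P@ssword1")` would execute the real Docker commands; passing a different `runner`, such as one that only records the commands, lets you exercise the control flow without Docker installed.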

Agility

Containers also provide agility. Take the step of starting the container, for example. If this were a virtual machine in an IT environment with any rigor around processes and procedures, provisioning it might involve:

- Talking to an infrastructure team about getting the virtual machine created

- Talking to a networking team about the assignment of an IP address and port numbers the virtual machine can use

- Talking to whoever manages the domain about being able to join this virtual machine to the domain

- Talking to the infrastructure team again about the requirements for the virtual machine to be patched, backed up, scanned for viruses, and so on

Compared with the pipeline above, this is anything but agile. Two lines of code are all that is required to start the container and then stop and remove it:

```groovy
bat 'docker run -e "ACCEPT_EULA=Y" -e "SA_PASSWORD=P@ssword1" --name SQLLinuxLocal -d -i -p 15566:1433 microsoft/mssql-server-linux'
bat 'docker rm -f SQLLinuxLocal'
```

Mobility

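The value that containers add to continuous integration and build processes does not end there. Because an application is packaged together with its dependencies in the container image, it executes the same way wherever the container runs, whether on a laptop, an on-premises server or a public cloud, on Linux or Windows® (provided the host supports the image’s operating system).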

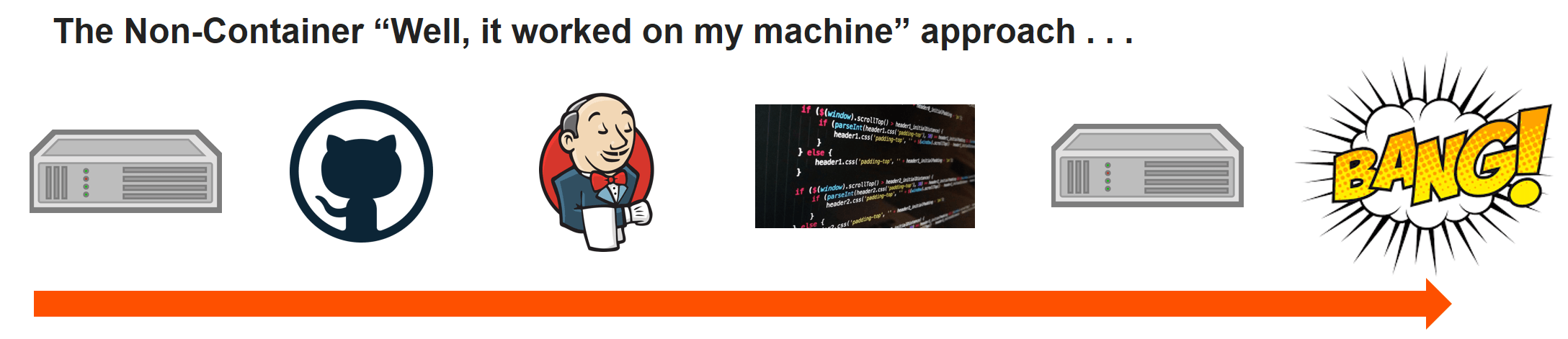

It’s common practice to run Jenkins build agents (processes that can participate in a build, historically called ‘slaves’) as containers. Consider a problem that most developers have faced at some point: the code worked in test but went ‘bang’ in production.

The main reason for this is a difference between the test/staging and production environments.

To distill this down further, the problem exists because of the tight coupling between the code and the environment it runs on. A slew of desired-state configuration tools, such as Ansible®, Puppet®, Chef® and Salt, is now available to manage that environment. With containers, however, you get consistency without the effort of managing the underlying environment the container engine is running on, because what the code needs travels with it inside the image.

Software Architectures: From 3-Tiered to Service-Oriented

The overriding trend in software design used to be structuring software into multiple tiers. Typically there would be a data tier, a business logic tier, a presentation logic tier and perhaps an orchestration tier, and it was common to implement each tier as a monolithic slab of code.

Then, service-oriented architectures (SOA) came along.

A service-oriented architecture is a technology- and platform-agnostic way of building a software architecture from components (services) exposed via APIs. Wikipedia defines the key characteristics of a service as follows:

- It logically represents a business activity with a specified outcome.

- It is self-contained.

- It is a black box for its consumers.

- It may consist of other underlying services.
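
These characteristics can be illustrated in a few lines of Python. This is a minimal, hypothetical sketch rather than any particular SOA framework: `TaxService` is a contract that consumers see only as a black box, `FlatRateTaxService` is one self-contained implementation (the 20% rate is a made-up example), and `QuoteService` represents a business activity with a specified outcome that is built on an underlying service.

```python
from typing import Protocol


class TaxService(Protocol):
    """Service contract: consumers depend on this operation, not its internals."""

    def tax_cents(self, amount_cents: int) -> int: ...


class FlatRateTaxService:
    """One self-contained implementation; the flat rate is a made-up example."""

    def __init__(self, rate_percent: int = 20) -> None:
        self._rate_percent = rate_percent

    def tax_cents(self, amount_cents: int) -> int:
        # Integer arithmetic (floor division) keeps money in whole cents.
        return amount_cents * self._rate_percent // 100


class QuoteService:
    """A business activity with a specified outcome: a total price quote."""

    def __init__(self, tax: TaxService) -> None:
        self._tax = tax  # composed of an underlying service, used as a black box

    def quote_cents(self, unit_price_cents: int, quantity: int) -> int:
        subtotal = unit_price_cents * quantity
        return subtotal + self._tax.tax_cents(subtotal)
```

Because `QuoteService` knows only the `TaxService` contract, the tax implementation could be replaced, for example by a client for a separately deployed service, without changing `QuoteService`.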

The ultimate goal of this style of software architecture is to compose systems from different components irrespective of the technology used to implement each service, and a whole middleware industry emerged off the back of this. The focus of a service-oriented architecture was exposing software via service APIs and having a homogeneous API layer across all the software assets in the architecture.

Enter Microservices

Consider a simple online bookstore application consisting of order processing, inventory management, and payment handling functionality, designed using a monolithic, layered approach.

Software designed in this way presents several challenges. First, a change to any one of the order processing, inventory management, or payment handling modules requires the whole business logic tier to be redeployed. Second, the tight coupling between the three functional areas means that they cannot be developed independently of one another. Third, a defect in one area may impact the whole business logic tier.

Microservices are an evolution of service-oriented architectures. In his talk at the GOTO 2014 conference, Martin Fowler outlines nine common characteristics of microservices:

- Componentization via services

- Organized around business capabilities

- Products not projects

- Smart endpoints and dumb pipes

- Decentralized governance

- Decentralized data management

- Infrastructure automation

- Design for failure

- Evolutionary design

Let’s take a look at the online bookstore application when it is designed using microservices, with order processing, inventory management, and payment handling each implemented as a separate service.

This removes the tight coupling between the three functional areas, so each component can be developed, deployed, and fixed independently of the others. Because a container packages a single service together with its dependencies and can be started or replaced quickly, containers are ideal for encapsulating and deploying microservices.
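
To make the decomposition concrete, here is a minimal, hypothetical Python sketch of the bookstore’s three functional areas as separate services. Each class owns its own data (decentralized data management), and the order service talks to the others only by exchanging JSON text through a plain callable, which stands in here for a network call between containers (smart endpoints and dumb pipes). The class names, message fields, and ISBN values are illustrative assumptions, not part of the original design.

```python
import json
from typing import Callable, Dict, List

# Stand-in for a network call: a "dumb pipe" that only carries JSON text.
Endpoint = Callable[[str], str]


class InventoryService:
    """Owns its stock data; no other service touches this dict directly."""

    def __init__(self, stock: Dict[str, int]) -> None:
        self._stock = dict(stock)

    def handle(self, request: str) -> str:
        msg = json.loads(request)
        isbn, qty = msg["isbn"], msg["quantity"]
        if self._stock.get(isbn, 0) < qty:
            return json.dumps({"reserved": False})
        self._stock[isbn] -= qty
        return json.dumps({"reserved": True})


class PaymentService:
    """Owns its own ledger of charges."""

    def __init__(self) -> None:
        self._charges: List[int] = []

    def handle(self, request: str) -> str:
        msg = json.loads(request)
        self._charges.append(msg["amount_cents"])
        return json.dumps({"paid": True})


class OrderService:
    """Knows only the other services' endpoints and message formats."""

    def __init__(self, inventory: Endpoint, payment: Endpoint) -> None:
        self._inventory = inventory
        self._payment = payment

    def place_order(self, isbn: str, quantity: int, price_cents: int) -> str:
        reserve = {"isbn": isbn, "quantity": quantity}
        reply = json.loads(self._inventory(json.dumps(reserve)))
        if not reply["reserved"]:
            return "out of stock"
        charge = {"amount_cents": price_cents * quantity}
        paid = json.loads(self._payment(json.dumps(charge)))
        # Simplified: reserved stock is not released if payment fails.
        return "confirmed" if paid["paid"] else "payment failed"
```

In a containerized deployment each service would run in its own container behind a real endpoint, so the inventory service could be rebuilt and redeployed without touching order processing or payments. The sketch deliberately omits failure handling such as compensating for a failed payment.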

Where Does Storage Come In?

Containerized applications clearly need to persist data, as the popularity of the mysql, mongo and postgres images shows, and that ultimately requires storage that is as agile as the containers themselves.

Agility in developing and delivering software is a key requirement for competitive and disruptive companies. In this new digital order, agile software development and delivery via containerization will become the new normal. Learn how Portworx by Pure Storage allows enterprises to evolve along with this new landscape.