Summary

IT plays a critical role in everything radiology professionals do. Why? The sheer size of medical images is a challenge. Learn how one organization overcame challenges to fuel faster discovery, treatment, and innovation.

This guest post on how data storage is revolutionizing radiology was written by Christopher Harr, senior site reliability engineer at the Radiological Society of North America (RSNA), a nonprofit organization that promotes excellent patient care and healthcare delivery through education, research, and innovative technology. Christopher is responsible for architecting and maintaining RSNA’s infrastructure on premises and in the cloud.

When your doctor orders a medical imaging exam like an X-ray, MRI, or ultrasound, you’re counting on the radiologist to be up to date on the latest science. That’s where the Radiological Society of North America (RSNA) comes in—and IT plays a critical role in everything we do. Why?

The sheer size of medical images makes our job challenging. One imaging library we built for researchers around the world contained 600,000 images totaling 80GB, and that’s just from one of several institutions that contributed data sets. To make sure researchers around the world can quickly access and download radiological images for their projects, we needed a data storage platform that scales easily and has very low latency.

Remembering the “Dark Days” of Legacy Storage

When I joined RSNA in 2013, I inherited a storage array with high latency and bottlenecks. Those were dark days. We were always on call, crossing our fingers that we wouldn’t have to go into the data center to reboot the array. Developers complained that the storage hardware slowed down their code. The final straw was when we found out that an upcoming operating system upgrade would require us to completely wipe and rebuild the array.

We needed storage that was faster, more reliable, and more scalable.

How Pure Powered 65 Million Hits, Transferring 366GB—Non-disruptively

The Pure Storage® platform blew all the other arrays out of the water. Pure was scalable, fast, and redundant, and it had monster compression, with up to 4.2:1 data reduction and 20.4:1 total reduction. But the clincher was that we wouldn’t have to migrate data during upgrades. Pure just comes in and swaps out the controllers, with zero downtime. Since making the switch, we’ve gone through several nondisruptive upgrades, and today we use the FlashArray//C device with an Evergreen™ Storage subscription.

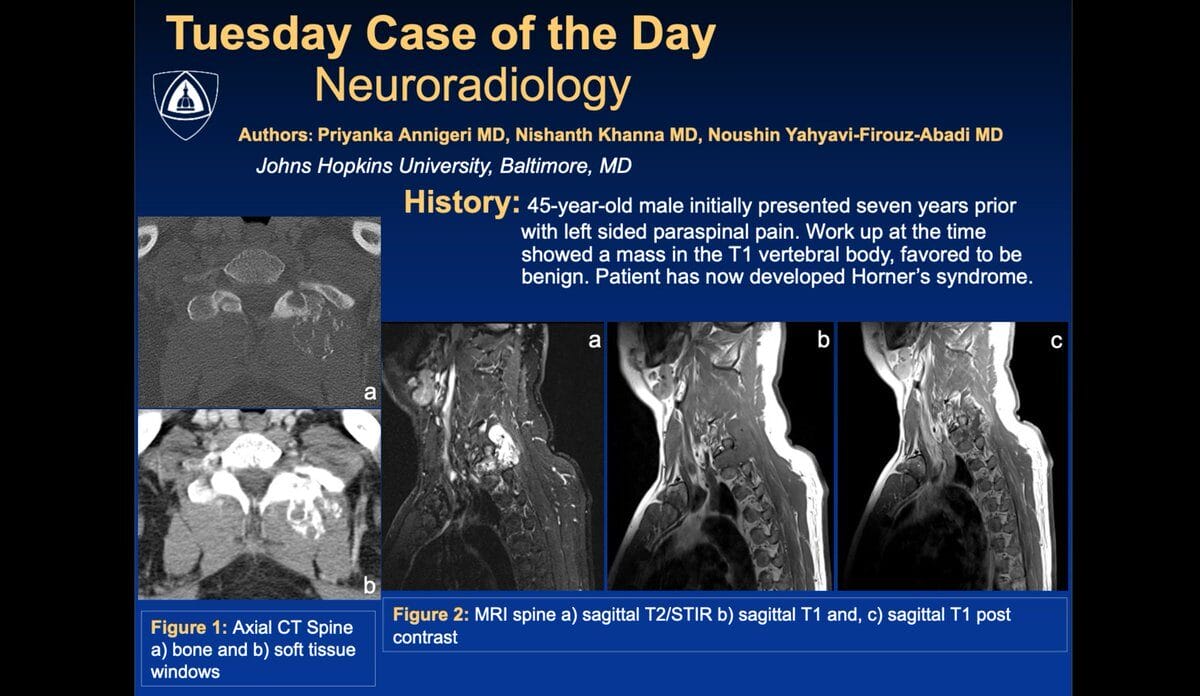

Our Case of the Day program illustrates how Pure truly delivers. Every day during our six-day annual meeting, we post an image or video for members to interpret. The images are up to 25MB, sometimes even larger. To load within our two-second target, the storage platform has to respond to requests in less than two milliseconds. Before we switched to Pure, the only way we could deliver that performance was to physically transport servers with local storage from our data center to the event venue. That was tense!
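To put those targets in perspective, here is a rough back-of-the-envelope sketch in Python. It is only an illustration, not RSNA's tooling: the function and constant names are made up for this example, and it assumes 1MB means 1,000,000 bytes and that the whole 25MB image has to arrive inside the two-second window.

```python
# Back-of-the-envelope numbers taken from the paragraph above.
# Assumptions: 1 MB = 1,000,000 bytes; all names here are illustrative.
IMAGE_SIZE_MB = 25.0        # "up to 25MB" per image
LOAD_TARGET_S = 2.0         # two-second load target
STORAGE_LATENCY_S = 0.002   # storage must respond in under two milliseconds


def min_throughput_mb_per_s(size_mb: float, budget_s: float) -> float:
    """Sustained transfer rate needed to move size_mb within budget_s."""
    return size_mb / budget_s


def latency_share(latency_s: float, budget_s: float) -> float:
    """Fraction of the load budget consumed by a single storage response."""
    return latency_s / budget_s


if __name__ == "__main__":
    rate = min_throughput_mb_per_s(IMAGE_SIZE_MB, LOAD_TARGET_S)
    share = latency_share(STORAGE_LATENCY_S, LOAD_TARGET_S)
    print(f"Minimum sustained rate: {rate:.1f} MB/s ({rate * 8:.0f} Mbit/s)")
    print(f"One storage response uses {share:.1%} of the load budget")
```

Under these assumptions, a single viewer needs about 12.5 MB/s of sustained throughput, and one two-millisecond storage response uses roughly 0.1% of the load budget. A real page load also includes network, rendering, and many concurrent requests, which this sketch ignores.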

Now that we host the Case of the Day website on Pure, we don’t need local storage at the annual meeting. The image loads quickly on any browser. In 2020, when we had to hold the annual meeting virtually, the Case of the Day website received 65 million hits and transferred 366GB of images. Pure performed flawlessly, delivering a great experience to members around the world, even during the busiest times.

Faster access to images also makes more time for potentially life-saving research. This research is game changing, and its importance cannot be overstated. Stripping protected health information (PHI) from images for HIPAA compliance is also faster and cheaper than it would be in a public cloud.

Source: Noushin YahYavi MD, MBA

For DevOps, Faster Provisioning Means Faster Innovation in Radiology

“Spooling up a new environment is expensive in the cloud and took 15 to 20 minutes on our old storage array. With Pure, developers can spin up their own server in less than a minute, test their code, and then spool it back down.” –Christopher Harr, Sr. Site Reliability Engineer, RSNA

Another big benefit of Pure for RSNA is faster software development for radiology research and education. Developers can code and test faster when they have their own environment. Spooling up a new environment is expensive in the cloud and took 15 to 20 minutes on our old storage array. With Pure, developers can spin up their own server in less than a minute, test their code, and then spool it back down. We’re working to make it even easier, with one-click creation of a development and test environment that’s automatically destroyed after 24 hours.
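The 24-hour auto-destroy idea can be sketched as a simple time-to-live check. This is a minimal illustration in Python, not RSNA's actual implementation: the environment names, the `expired_environments` helper, and the idea of tracking creation times in a dictionary are all assumptions made for the example. Actually tearing down an environment would depend on whatever provisioning tooling is in use.

```python
from datetime import datetime, timedelta, timezone

# Lifetime stated in the post; everything else here is hypothetical.
ENVIRONMENT_TTL = timedelta(hours=24)


def is_expired(created_at: datetime, now: datetime,
               ttl: timedelta = ENVIRONMENT_TTL) -> bool:
    """Return True once an environment has lived for at least ttl."""
    return now - created_at >= ttl


def expired_environments(environments: dict[str, datetime],
                         now: datetime) -> list[str]:
    """Names of environments due for teardown, in insertion order."""
    return [name for name, created_at in environments.items()
            if is_expired(created_at, now)]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Hypothetical dev/test environments and their creation times.
    environments = {
        "dev-alice": now - timedelta(hours=30),
        "dev-bob": now - timedelta(hours=2),
    }
    for name in expired_environments(environments, now):
        print(f"{name} is past its 24-hour lifetime and can be destroyed")
```

A scheduled job could run a check like this periodically and hand the expired names to the teardown step, so developers never have to remember to clean up after themselves.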

To sum it up, Pure is the heart and soul of our IT operations. It’s what makes our member services run flawlessly and fast, supporting our mission to promote excellent patient care and healthcare delivery. Pure is a phenomenal storage solution. Why pay for the cloud when we can do it ourselves—cheaper and faster?