There have been many exciting weeks at Pure Storage® in my 6.5 years with the company: feature and product releases, an IPO, acquisitions, and more. Last week felt uniquely special. Not just because we announced the impending purchase of a new business (we’ve done that twice before), but because of what it means and where it could take Pure Storage. I am referring, of course, to the acquisition of Portworx®. Now, let’s talk about what Portworx means for VMware environments.

Several posts already cover what this means in detail, and they do it well, so I won’t rehash it all here:

- Pure to Acquire Portworx, Powering the Future of Cloud Native Apps. Charlie Giancarlo, CEO and Chairman, Pure Storage

- Pure Storage and Portworx: Better Together. Simon Dodsley, Technical Director of New Stack, Pure Storage

- Welcoming Portworx to Pure Storage. Matt Kixmoeller, Vice President – Strategy, Pure Storage

- Get Ready for An Even More Exciting Future – Portworx is Being Acquired! Murli Thirumale, Portworx Co-founder and CEO

- Apps drive infrastructure. That’s why Portworx is joining Pure Storage. Goutham Rao, Portworx Co-founder and CTO

- Portworx solved an inevitable problem for cloud-native apps. By joining Pure, we are bringing it to the masses. Vinod Jayaraman, Portworx Co-founder and Chief Architect

One of the clear benefits of Portworx is that it can run almost anywhere: baremetal, AWS, Google Cloud Platform, Azure. Wherever your containers are, Portworx can be too. That provides flexibility today (I can start anywhere) and relieves anxiety about tomorrow (I can go anywhere if my decision changes).

My realm at Pure Storage is VMware, specifically VMware solutions. It’s my team’s job to make VMware + Pure a happy place, and containers are an area with a lot of opportunity to create happiness. If you have been paying attention, VMware has recently made big investments in container orchestration, specifically Kubernetes, in a variety of ways. Most recently, VMware announced the next iteration of its Tanzu portfolio.

These changes do a lot, most notably opening VMware’s Kubernetes offerings to many more of its customers, which in turn introduces many more people to Kubernetes on VMware in general.

I have spent the greater part of the inauspicious year that is 2020 speaking with customers about their container plans: whether and how those plans involve Kubernetes (they almost always do), where their problems are, what gaps they see today, and what technologies they are considering to help. When it comes to how they plan to run Kubernetes, their answers were all over the map.

OpenShift. Rancher. DIY. GKE on Anthos. And, of course, public clouds.

One fairly common theme, though, was VMware (yes, I have some selection bias... I talk to a lot of VMware users!). Many of these organizations have a ton of VMs, and those VMs aren’t going away any time soon. On analysis, the applications running in them were not great candidates for containerization. These organizations need a way to run both VMs and containers, at least for the foreseeable future.

On the other hand, there is a clear need for container support. Containers usually come into an organization by one of two roads:

- Their application developers were requiring them (or already using them with stealthy impunity), or

- Their software vendors were containerizing their off-the-shelf applications

Either way (or in some cases, both), having a practice around containers (and their orchestration) is clearly and quickly becoming a requirement. Look at the latest vRealize suite. Yes, the suite is still delivered as deployable OVAs, but inside those VMs are mini-implementations of Kubernetes (k8s) managing the container-based vRealize applications.

Having a VMware practice is, and will continue to be, important, as will the ability to manage and deploy containers. Putting those containers alongside your VMs makes a lot of sense and may in fact be required, often because of application relationships: the VMs and containers need to be physically co-located because latency between related applications can slow the business down.

So do you move everything to the public cloud, which may or may not be the right thing to do? Or do you put the workloads that make sense in the cloud there, and keep the applications that make sense on-premises, on-premises? If you don’t have a public cloud practice, which is more imminent: supporting container deployments or building a public cloud practice? Often the former. I am not saying your containers will never, or should never, go there, but your VMware environment is a very reasonable place to start.

Furthermore, the VMware ecosystem is large and mature, with a significant amount of know-how that remains important to many organizations and will continue to be. So, for a variety of reasons, running both containers and VMs on VMware is simple and logical. Customers don’t want to manage two different infrastructures (virtualization and baremetal), and they certainly don’t want to spend time learning baremetal deployment practices if the containers eventually move somewhere else (be it VMware or the public cloud).

So, for now, VMware is the simplest place for many to start with containers. Forever? Maybe. That is honestly VMware’s game to lose: they are doing a lot of interesting things with Kubernetes, and customers are taking notice. If things continue down this path, I expect VMware to be the incumbent choice for any organization that decides to run containers on-premises. Whether that means Tanzu or something else is what we will all find out.

Pure, Containers, and VMware

So when it comes to Pure, we now have three significant offerings for container storage in a VMware environment:

Cloud Native Storage

VMware’s Container Storage Interface (CSI) driver, part of what VMware calls Cloud Native Storage (CNS), takes storage policies defined in vSphere and maps them to Kubernetes storage classes. This is how you choose storage features (characteristics, properties, etc.) when provisioning storage (persistent volumes). In vSphere, a storage policy is built on either features advertised by the array (in this case, through vVols) or tags. If the array that owns a vVol datastore offers the features a policy requires, that datastore is compatible with the policy, and therefore a valid target for persistent-volume provisioning when the corresponding storage class is chosen. Alternatively (or in conjunction), you can tag datastores with custom tags and create storage policies that say “any datastore with this tag, or these tags, is compatible with this policy.” Tags work with any type of datastore: VMFS, vVols, or otherwise. For Pure Storage FlashArray in particular, both VMFS and vVols (vVols being the Pure Storage preferred option for container persistent storage) are great ways to store and protect your VMs and containers on Pure in a VMware environment.
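To make that matching rule concrete, here is a minimal Python sketch of how a storage policy could select compatible datastores. It is a simplified, hypothetical model, not vSphere’s implementation or API: the `Datastore` and `StoragePolicy` classes, the capability names, and the tag names are all invented for illustration, and it assumes a tag rule is satisfied by a datastore carrying at least one of the listed tags.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Datastore:
    """Hypothetical stand-in for a vSphere datastore."""
    name: str
    kind: str  # "vVol", "VMFS", "NFS", ...
    capabilities: set[str] = field(default_factory=set)  # features advertised by the array (vVols only)
    tags: set[str] = field(default_factory=set)  # custom tags assigned by the VMware admin


@dataclass
class StoragePolicy:
    """Hypothetical stand-in for a vSphere storage policy."""
    name: str
    required_capabilities: set[str] = field(default_factory=set)
    any_of_tags: set[str] = field(default_factory=set)


def is_compatible(ds: Datastore, policy: StoragePolicy) -> bool:
    # Capability rules: only a vVol datastore whose array advertises every required feature qualifies.
    if policy.required_capabilities:
        if ds.kind != "vVol" or not policy.required_capabilities <= ds.capabilities:
            return False
    # Tag rules work with any datastore type; assume one matching tag is enough.
    if policy.any_of_tags and not policy.any_of_tags & ds.tags:
        return False
    return True


def compatible_datastores(datastores: list[Datastore], policy: StoragePolicy) -> list[str]:
    # Only these datastores are valid targets for volumes from the matching storage class.
    return [ds.name for ds in datastores if is_compatible(ds, policy)]


stores = [
    Datastore("vvol-ds", "vVol", capabilities={"snapshots", "replication"}),
    Datastore("vmfs-gold", "VMFS", tags={"gold"}),
    Datastore("vmfs-plain", "VMFS"),
]
replicated = StoragePolicy("replicated", required_capabilities={"replication"})
gold = StoragePolicy("gold", any_of_tags={"gold", "platinum"})

print(compatible_datastores(stores, replicated))  # ['vvol-ds']
print(compatible_datastores(stores, gold))  # ['vmfs-gold']
```

The point of the model is the division of labor: the VMware admin decides what each policy means, and the container consumer only ever sees the resulting storage class.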

The CNS CSI driver integrates closely with vSphere: persistent volumes are created as First Class Disks, which are visible natively in the vSphere Client.

The integration gets even tighter with the vSphere with Kubernetes offering (and the newly announced vSphere with Tanzu), for example through automatic import of storage policies as storage classes.

VMware’s CSI driver can be used with Tanzu or other Kubernetes flavors, although the biggest impact comes from its integrations with VMware’s own offering. The key point here: in this architecture, you make the storage infrastructure choices at the vSphere layer. You use VMware tooling and features to present the options up to the container consumer or deployer. Fibre Channel (or NVMe-oF) customers, for instance, would likely choose CNS for their container storage: FC storage cannot be connected directly to guests, and the datastore concept abstracts away the physical protocol layer, so the worker-node VMs consume that storage through vSphere. This allows the use of vSphere storage features (SIOC, SDRS, vVols, snapshots, array feature integration with vSphere, etc.) while providing a standardized way to manage storage in Kubernetes, with the VMware admin holding the keys.
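As a rough illustration of the storage-policy-to-storage-class mapping, this Python sketch emits a StorageClass for VMware’s CSI driver plus a claim against it, as a JSON `List` that `kubectl apply -f` accepts. The provisioner name `csi.vsphere.vmware.com` and the `storagepolicyname` parameter are taken from the vSphere CSI driver documentation; verify them against your driver version. The class name, the policy name `pure-vvol-gold`, the claim name, and the size are placeholders.

```python
import json


def cns_storage_class(name: str, storage_policy: str) -> dict:
    # Exposes a vSphere storage policy (defined by the VMware admin) as a Kubernetes StorageClass.
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.vsphere.vmware.com",
        "parameters": {"storagepolicyname": storage_policy},
    }


def pvc(name: str, storage_class: str, size: str) -> dict:
    # The container consumer only picks a class; vSphere picks a compatible datastore.
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


sc = cns_storage_class("pure-vvol-gold", "pure-vvol-gold")
claim = pvc("db-data", sc["metadata"]["name"], "20Gi")
# A v1 List lets kubectl apply both objects from a single JSON document.
manifest = {"apiVersion": "v1", "kind": "List", "items": [sc, claim]}
print(json.dumps(manifest, indent=2))
```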

Pure Service Orchestrator™

Pure Storage’s CSI driver—called Pure Service Orchestrator—allows provisioning from FlashArray™, FlashBlade®, or Pure Cloud Block Store™ storage to containers.

Pure Service Orchestrator Explorer

It is a fully featured CSI driver that supports the latest and greatest CSI features, intelligent array provisioning, and more. Pure Service Orchestrator is supported in Kubernetes on top of VMware, baremetal, or wherever Pure Cloud Block Store can run (currently AWS, and in beta on Azure). There is no direct VMware integration today, so when you use Pure Service Orchestrator, storage is presented directly to the worker nodes:

- NFS (FlashBlade), FC (FlashArray), or iSCSI (FlashArray) for baremetal

- NFS (FlashBlade) or iSCSI (FlashArray) for VMs, or

- iSCSI (Pure Cloud Block Store) for EC2 (in AWS)

The storage is mounted and presented without VMware knowing anything about it. Snapshots, management, capacity usage, and so on are not affected by or controlled via VMware.

Pure Service Orchestrator + VMware is for Pure Storage customers who want to run their deployment on VMware (or, of course, elsewhere) and design their storage usage to be agnostic to the compute layer, hypervisor or not. Pure Service Orchestrator supports only Pure Storage platforms: FlashArray, FlashBlade, and Pure Cloud Block Store. Choosing Pure Service Orchestrator over CNS on VMware makes sense for, among others, the customer who uses Pure Storage platforms in other deployment models (baremetal, public cloud) alongside VMware and wants a consistent storage-management experience (same storage, same CSI driver) across all of them. It’s also for the Kubernetes admin who doesn’t have access to the underlying VMware environment but wants to manage Pure Storage capacity directly.
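One way to picture the “same storage, same CSI driver” argument: with Pure Service Orchestrator, a claim is written the same way whether the worker node is a VM, a baremetal host, or an EC2 instance. This Python sketch assumes the `pure-block` (FlashArray or Pure Cloud Block Store) and `pure-file` (FlashBlade) storage class names that Pure Service Orchestrator installs by default; treat those names, and the claim names and sizes, as assumptions to check against your own install.

```python
import json

# Assumed default PSO storage classes: "pure-block" is backed by FlashArray or
# Pure Cloud Block Store; "pure-file" is backed by FlashBlade (NFS), so it can be shared.
PSO_CLASSES = {
    "block": ("pure-block", "ReadWriteOnce"),
    "file": ("pure-file", "ReadWriteMany"),
}


def pso_claim(name: str, size: str, backend: str) -> dict:
    # Nothing here depends on whether the worker node is a VM, baremetal, or EC2.
    if backend not in PSO_CLASSES:
        raise ValueError(f"unknown backend {backend!r}; expected 'block' or 'file'")
    storage_class, access_mode = PSO_CLASSES[backend]
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


claims = [pso_claim("orders-db", "50Gi", "block"), pso_claim("shared-media", "1Ti", "file")]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": claims}, indent=2))
```

The platform-specific work (iSCSI, FC, or NFS plumbing on each worker node) lives in the driver, which is what keeps the claims identical across deployments.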

Portworx

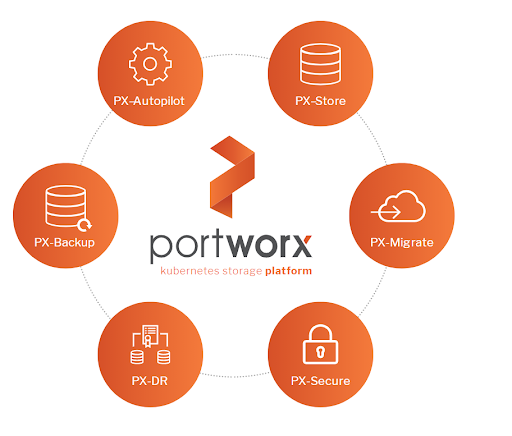

The first thing to note is that Portworx is MUCH more than a CSI driver. It includes replication, DR, backup, software-defined storage, and more.

Portworx isn’t limited to Pure Storage; it can use basically any storage. And it’s certainly not only for VMware environments; in fact, only a portion of Portworx customers use VMware. Portworx’s solution can run anywhere, on anything.

Portworx also integrates with VMware. When it runs on top of VMware, it can provision virtual disks from VMware datastores (vVols, VMFS, NFS, vSAN) and present them to the worker nodes.

Disk Provisioning on VMware vSphere

Portworx then formats that raw storage, uses it for its software-defined storage (SDS) layer within the worker nodes, and carves persistent volumes out of it. So, unlike with CNS, there is no direct relationship between virtual disks and container volumes. Instead, virtual disks are provisioned as raw capacity into a pool, persistent volumes are carved from that pool, and the pool expands by adding more underlying virtual disks. Portworx does the feature work, which lets you place fewer requirements on your VMware storage. For instance, if your current storage is not very feature-rich, resilient, or available, Portworx can make it so.
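The pool-versus-disk distinction is easier to see in a toy model. This Python sketch is a deliberately simplified, hypothetical illustration of the behavior described above, not how Portworx is actually implemented: the `StoragePool` class, the fixed 100 GiB disk size, and the grow-on-demand rule are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class StoragePool:
    """Toy model of an SDS pool built from raw virtual disks (hypothetical)."""
    disk_size_gib: int  # size of each virtual disk added from a VMware datastore
    disks: list[int] = field(default_factory=list)
    volumes: dict[str, int] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.disk_size_gib <= 0:
            raise ValueError("disk_size_gib must be positive")

    @property
    def capacity_gib(self) -> int:
        return sum(self.disks)

    @property
    def free_gib(self) -> int:
        return self.capacity_gib - sum(self.volumes.values())

    def create_volume(self, name: str, size_gib: int) -> None:
        if size_gib <= 0:
            raise ValueError("size_gib must be positive")
        # Grow the pool with more virtual disks until the volume fits.
        while self.free_gib < size_gib:
            self.disks.append(self.disk_size_gib)
        # The volume is carved from pooled capacity, not tied to any single virtual disk.
        self.volumes[name] = size_gib


pool = StoragePool(disk_size_gib=100)
pool.create_volume("postgres", 150)  # needs two 100 GiB disks
pool.create_volume("redis", 30)  # fits in the 50 GiB left over, so no new disk
print(len(pool.disks), pool.capacity_gib, pool.free_gib)  # 2 200 20
```

Note that the 150 GiB volume spans both virtual disks, which is exactly the relationship CNS would not have: there, each persistent volume maps to its own virtual disk.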

Furthermore, it can provide application integration such as application consistency, full DR and backup, and other important overall features that CSI alone cannot provide.
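Because Portworx does the feature work itself, a data service such as replication is requested on the Kubernetes side rather than through a vSphere storage policy. This Python sketch emits such a StorageClass as JSON. The `pxd.portworx.com` CSI provisioner name and the `repl` (replica count) parameter follow Portworx’s documentation, and the 1 to 3 replica range is an assumption; verify all three for your Portworx version. The class name and replica count are placeholders.

```python
import json


def portworx_storage_class(name: str, replicas: int) -> dict:
    # Assumption: Portworx accepts a replication factor of 1 to 3.
    if not 1 <= replicas <= 3:
        raise ValueError("replicas must be between 1 and 3")
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "pxd.portworx.com",
        # Portworx keeps this many copies of each volume across worker nodes,
        # regardless of which VMware datastore backs the underlying disks.
        # StorageClass parameter values must be strings.
        "parameters": {"repl": str(replicas)},
        "allowVolumeExpansion": True,
    }


sc = portworx_storage_class("px-replicated", 3)
print(json.dumps(sc, indent=2))
```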

Portworx on VMware provides full data management across clouds or deployment models that is completely agnostic to the compute layer and the physical storage layer. I think a quick chart can help:

*These are based on my opinions. **Though not for long; see this post on some possibilities.

Conclusion

I think the key here is that there is a lot going on in the VMware world around Kubernetes and containers, and Pure offers a lot of options for how you manage the storage.

At Pure, we support container storage in the public cloud, on baremetal, and in virtualized environments. With the introduction of Portworx, the scope of where and how we can help increases dramatically. If you and your organization, like many others, are looking to get started with Kubernetes on VMware, we have you covered!