One of the criteria that IT vendors have highlighted about their products since the inception of the industry is performance. Whether the subject is CPUs, servers, networks, databases, or, yes, storage, everyone seems to talk about performance. But have you ever seen anyone claim that their product was slower than a competitor’s? Almost everyone claims to be “the best!”, but obviously they can’t all be the fastest. More importantly, does it really matter?

Our belief is that when it comes to performance, what matters most is this: Are YOU able to maintain performance that consistently meets or exceeds your desired Service Level Objectives for YOUR applications and satisfies YOUR users?

We further believe that there is no substitute for your own hands-on experience when determining the value of a solution. When it comes to performance, if there is any doubt regarding viability, we recommend that you consider a Proof of Concept (PoC) evaluation.

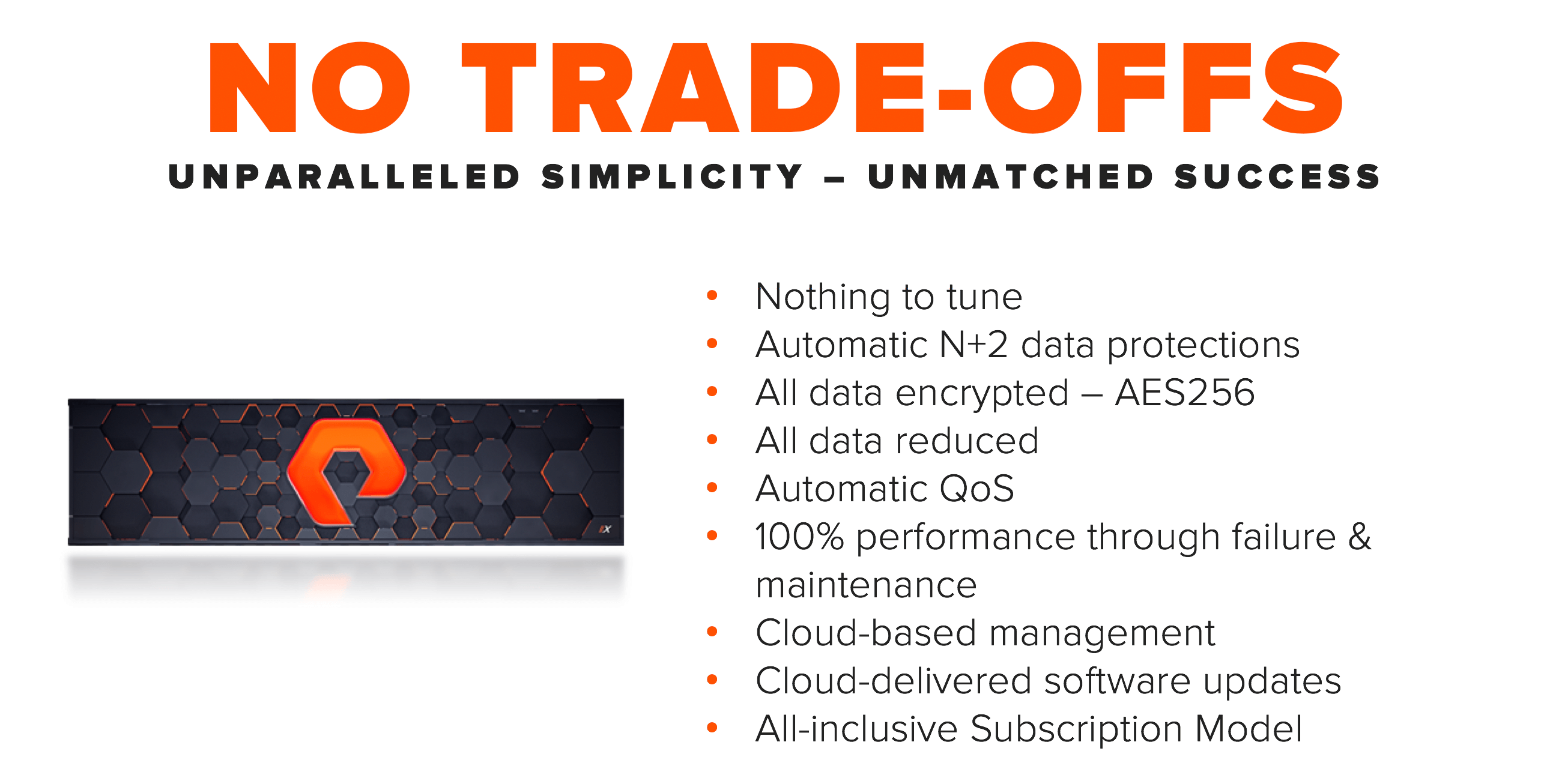

From the beginning at Pure Storage, we’ve sought to solve the issue of performance so that you don’t have to. Our FlashArray was architected on the assumption that the only storage media it would ever use is solid-state, with no conventional spinning disks, ever. There were some key assumptions and objectives:

- Flash technology is so much faster than conventional disks that we should focus on how to reduce the cost of flash and accept great raw performance rather than dwell on it.

- Make operation so simple that customers automatically get consistent high performance but don’t have to deal with traditional legacy performance management to achieve it.

- Focus on delivering the right performance for real applications and real customer workloads rather than on artificial benchmarks and quirky configurations that are not relevant to real production.

At Pure Storage, we design all our products to run with all data services on, yet still with high performance for the real world.

Pure Storage believes that your real experience with real applications is the best indicator of what can be expected for performance, rather than “hero numbers” from us or anyone. You can read about what over 180 real customers have already achieved with Pure Storage.

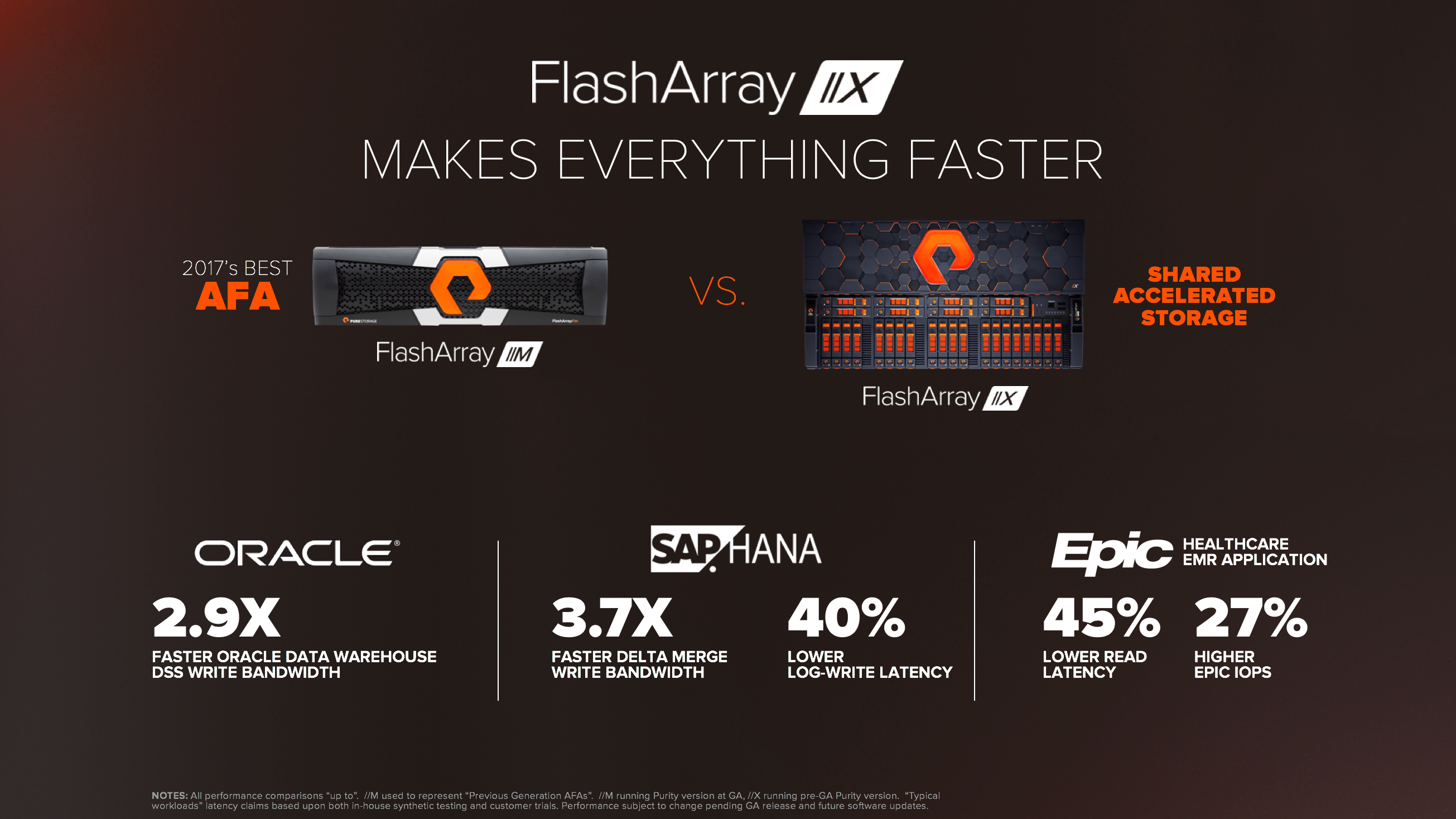

With the recent announcement of Pure’s FlashArray//X family, our 4th generation that uses NVMe, we also released some data about the performance of our new products. All of these benchmarks were associated with popular applications and databases.

If you run SAP, you might be interested in this blog about our performance with SAP HANA.

If you run Oracle, you might be interested in this blog about our performance with the Oracle 12c database.

If you are a Healthcare organization, you might be interested in this blog about our performance running Epic with the Caché database.

When Dell recently announced the newest version of its VMAX architecture, now called PowerMax, Dell made some pretty big claims about performance. Dell called PowerMax not only the “world’s fastest storage” but also claimed a “2X”* advantage over all alternatives. Dell stated: “Amazing performance It’s fast, really fast. In fact, PowerMax is the world’s fastest storage array(1) delivering up to 10 million IOPS(2) and 150 GB per second(3) with 290 microsecond response times(4)”

For those not familiar with PowerMax’s cache-centric architecture, when Dell states “Random Read Hits Max” in the small print, it means that performance is being measured a) 100% read-only (no data being written) and b) 100% from the front-end high-speed cache memory, with 0% of the data coming from the actual SSD storage devices where the data should actually live. Despite all of the attention given to the new NVMe technology in PowerMax, it seems that none of the data in these IOPS and bandwidth benchmarks uses any of PowerMax’s new NVMe paths on the back-end. You should ask yourself whether tests like that reflect what you do when running real applications in the real world.
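To see why a 100% cache-hit test says little about mixed workloads, here is a minimal sketch that treats average read latency as a weighted mean of cache-hit and cache-miss latency. The function name and both latency values are hypothetical placeholders chosen for illustration; they are not measurements of PowerMax, FlashArray, or any other product.

```python
def effective_latency_us(hit_ratio, hit_latency_us, miss_latency_us):
    """Average read latency as a weighted mean of cache hits and misses."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * hit_latency_us + (1.0 - hit_ratio) * miss_latency_us


# Hypothetical latencies for illustration only, not vendor data.
HIT_US = 100.0   # assumed latency when the read is served from cache
MISS_US = 500.0  # assumed latency when the read goes to back-end media

for ratio in (1.0, 0.9, 0.5, 0.0):
    latency = effective_latency_us(ratio, HIT_US, MISS_US)
    print(f"hit ratio {ratio:.0%}: {latency:.0f} us average read latency")
```

Under these assumed numbers, a benchmark run at a 100% hit ratio reports only the cache latency, while any workload with misses lands somewhere between the two values. A real array is more complicated (writes, queueing, data services), so treat this as a way to frame the question rather than a prediction.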

Let’s put this in context. What if we said that we had created the world’s fastest bicycle, able to achieve speeds up to 250 km/hour (!), but then put in the fine-print footnotes that it only achieves that speed when dropped out of an airplane at altitude, not for very long, and only without an actual rider on the bicycle? Oh, you wanted to ride the bicycle on a paved surface on the ground? Well, then your maximum performance will be much less than what was claimed, but we would still like you to compare our numbers to other bicycles that had riders on them on roads, because our numbers are so big!

The OLTP2 HW benchmark that was used for the latency test is a bit more interesting. You might want to ask at what I/O rate the claimed 290 microsecond latency was achieved, as the performance curves for OLTP2 HW may not be very linear, and possibly this was achieved at a very low I/O rate.
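The reason the I/O rate matters can be sketched with a textbook single-queue (M/M/1) model, where response time grows sharply as the offered load approaches capacity. This is a generic queueing illustration and not a model of OLTP2 HW or of any specific array; the function name and the 100 microsecond service time are assumptions made for the example.

```python
def mm1_response_time_us(iops, service_time_us):
    """Mean response time of an M/M/1 queue at a given I/O rate."""
    # Utilization = arrival rate (I/Os per second) * service time (seconds).
    utilization = iops * service_time_us / 1_000_000
    if utilization >= 1.0:
        raise ValueError("offered load meets or exceeds capacity")
    return service_time_us / (1.0 - utilization)


# Hypothetical service time for illustration only.
SERVICE_US = 100.0  # implies a ceiling of 10,000 IOPS in this toy model

for iops in (1_000, 5_000, 9_000, 9_900):
    latency = mm1_response_time_us(iops, SERVICE_US)
    print(f"{iops:>5} IOPS: {latency:,.0f} us mean response time")
```

In this toy model, latency at a light load looks almost identical to the raw service time, and most of the increase happens in the last few percent before saturation. That is why a single latency figure without the accompanying I/O rate is hard to interpret.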

Curiously, shortly after the public announcement, most of the online references to the latency tests running OLTP2 HW seem to have been replaced with a claim of “Up to 50% lower latency”.

The fine-print footnote for this new claim states: “Based on Dell internal testing using the Random Read Miss benchmark test in March 2018, comparing the PowerMax with SCM drives against the VMAX 950F with SAS Flash Drives. Actual response times will vary.”

So, Dell did release a performance expectation using the back-end NVMe (faster, parallel) infrastructure, but they did it using “SCM drives” (Storage Class Memory) and compared it to a legacy (slower, serial) SAS infrastructure on VMAX with NAND. In a different document, Dell provides even more detail about this expected PowerMax performance, stating “When we have implemented SCM and NVMeoF we expect a mixed workload to see about a 50% latency reduction overall.”

You might want to ask Dell what the part number, specifications, and availability are for those SCM drives that were used in the tests, since PowerMax supports only dual-ported NAND NVMe devices currently, as “SCM drives are planned to GA with PowerMax early 2019”. You might want to also ask about NVMeoF, since that is also claimed to be planned for 2019 and isn’t available today either. But if those technologies do come to market per Dell’s plans, you especially might want to ask yourself if that anticipated 50% latency reduction is worth it to you, given that Dell states: “We expect initial SCM pricing to (be) around 5-10X per GB compared to NVMe NAND drives.”

Some more questions that you might want to consider about these performance numbers:

- Why is Dell providing IOPS and bandwidth tests that are exclusively cache read “hits”, when Dell states that “the main benefit will be seen with workloads that experience many read-misses”? Isn’t that saying that the expected benefit will be for workloads that are closer to the exact opposite of what Dell has tested?

- Were data services active for these tests? Specifically, were data compression, deduplication, encryption, replication, etc. on or off? If they were off, can Dell provide tests with data services enabled?

- What would performance tests look like if they were run on a configuration that used the back-end NVMe SSDs that are available today instead of SCM drives?

- Most importantly, is there data from performance tests using real applications or databases like the ones that I use, run on a configuration that I can install now?

At Pure, we certainly believe that performance is important. But with the current pervasiveness of Flash technology, and NVMe now rapidly becoming the dominant protocol across the end-to-end storage infrastructure, for most customers and most applications there will be many products from multiple vendors in the market that will be able to meet and exceed most performance requirements. We believe this includes Pure’s FlashArray//X, but also Dell’s PowerMax, and others.

What has driven Pure FlashArray’s success with customers has often been the balance between performance and economics mentioned above. FlashArray was not built with the objective of being the fastest, but rather to provide outstanding performance at a much lower cost of ownership, with much greater efficiency and much greater simplicity than might be assumed from an all-flash storage system. Always-on data services such as data reduction, encryption, and QoS have then provided additional benefits.

Technologies that have targeted the elusive “Tier 0” ultra-high performance all-flash market segment (Dell-EMC DSSD, Fusion-IO, etc.) have mostly vanished after finding that the market for ultra-high performance at ultra-high prices was neither large nor sustainable. In contrast, our ability to give the masses Tier 1 performance at Tier 2 prices has proven to be extremely attractive for our customers across the majority of the storage market and almost all applications. Is Dell now targeting the “Tier 0” niche with PowerMax? Do you really need to wait for SCM and NVMeoF to meet your performance requirements?

So, just how fast is the new PowerMax, really? We know how fast PowerMax can deliver data when reading from cache: up to 10 million IOPS, and 150 GB/s. And we can say with reasonable confidence that if you dropped both a Pure Storage FlashArray//X90 and a PowerMax 8000 out of an airplane, they’d both fall to earth just about as fast as a bicycle.

But seriously, we suggest that you look to your own unique requirements. Contact us, and challenge Pure to do a Proof of Concept (PoC) test if appropriate to demonstrate not just our ability to meet your performance needs, but to do it with greater ease of operation and management, a smaller footprint, enterprise-class functionality standard and included, and a more attractive TCO. We gladly welcome the opportunity to show you how we’d compare to the self-proclaimed “world’s fastest storage” in YOUR environment and let you be the judge.