Today we’re pleased to announce general availability of Pure Service Orchestrator™ version 6.0. Pure Service Orchestrator delivers storage as a service for containers, giving developers the agility of public cloud with the reliability and security of on-premises infrastructure, wherever your data lives.

This release has been a long time coming, and I would first like to thank everyone on the engineering team who has worked so hard on it.

When you look at the features added in this release, you may wonder why they warrant a major version number. Good question. The answer lies in the underlying architectural changes that made this release possible.

These architectural changes will pave the way for many new, innovative features in future releases of Pure Service Orchestrator.

So what is the main architectural change?

Pure Service Orchestrator v6.0 is stateful. Where previous versions were completely stateless, Pure Service Orchestrator version 6.0 is able to retain state because it includes CockroachDB, a cloud-native SQL database designed for scalable, cloud-based services that are disaster tolerant.

Pure Service Orchestrator installs and maintains the database, which is secured using Kubernetes secrets.

Note: We continue to recommend installing Pure Service Orchestrator in a private Kubernetes namespace and applying any additional security controls to that namespace.

During the deployment of Pure Service Orchestrator v6.0, you’ll now see between five and seven CockroachDB pods spread across your cluster (managed by tolerations, annotations, or affinity). If you have fewer nodes in your cluster than CockroachDB pods, you’ll get multiple pods on a node.

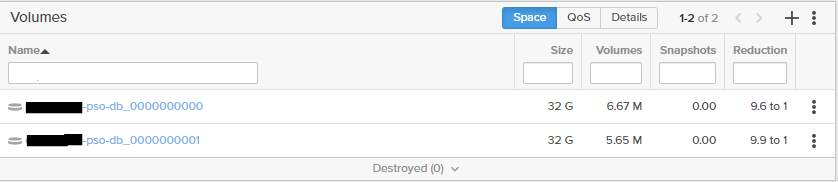

Each of the DB pods will have a different back-end volume that’s created and managed by Pure Service Orchestrator. These can be either block volumes or filesystem shares, depending on your federated storage pool configuration. Again, you may see multiple volumes/shares on your back-end storage appliances if there are fewer than five appliances. The example below shows an array with two DB volumes.

Note: Never interact with these volumes directly, as doing so could compromise the integrity of the Pure Service Orchestrator installation.

Beyond the core architectural change, there are several new features that you might find useful as you scale your Kubernetes deployments and need finer-grained control over persistent storage.

- Storage quality of service: Using parameters in the definition of a StorageClass, you can control the IOPS and bandwidth limits of all volumes created by the StorageClass. These limits apply on a per-volume basis, so you can define different classes for Gold, Silver, or Bronze QoS levels. Additionally, when a volume is cloned, the new volume retains the QoS limits of the source volume. You can also add QoS limits to volumes that you import into Pure Service Orchestrator control from external applications.

- Persistent storage filesystem options: Again, by using StorageClass parameters, you can use predefined keys to control the default filesystem type and creation options for the filesystem. Filesystem mount options can be specified using predefined keys as well.

- FlashBlade NFS export rules: While you can globally specify the default NFS export rules for all FlashBlade® filesystems created by Pure Service Orchestrator, StorageClass parameters allow you to specify per-filesystem export rules as well. These rules can help lock down specific filesystems to a subset of worker nodes in your cluster, or even expose them to external applications that can feed the share with data used by your Kubernetes-based applications.
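
To make the StorageClass-driven options above more concrete, here is a minimal sketch of what a "Gold" tier class might look like. Treat it as an illustration only: the class name and all values are hypothetical, and the provisioner name and the parameter keys `backend`, `iops_limit`, `bandwidth_limit`, and `createoptions` are assumptions that should be confirmed against the Pure Service Orchestrator documentation for your release. The `csi.storage.k8s.io/fstype` key and the `mountOptions` field are standard Kubernetes CSI StorageClass settings.

```yaml
# Illustrative sketch of a "Gold" QoS tier StorageClass; not a definitive configuration.
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: pure-block-gold             # hypothetical class name
provisioner: pure-csi               # assumed provisioner name for Pure Service Orchestrator
parameters:
  backend: block                    # assumed key: provision block volumes
  iops_limit: "30000"               # assumed key: per-volume IOPS limit (example value)
  bandwidth_limit: "10G"            # assumed key: per-volume bandwidth limit (example value)
  csi.storage.k8s.io/fstype: xfs    # standard CSI key: default filesystem type
  createoptions: -q                 # assumed key: options passed at filesystem creation
mountOptions:                       # standard StorageClass field for mount options
  - discard
```

Silver and Bronze tiers would follow the same pattern with lower limits, and a PersistentVolumeClaim would select a tier through its `storageClassName`. Because the limits are per volume, each claim against this class would get its own limits rather than sharing a pool.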

Read more about these features in the Pure Service Orchestrator documentation for Storage QoS and Filesystem Options.

Finally, I want to point out that with Pure Service Orchestrator v6.0 and higher, Pure Storage supports only Helm installations. All operator-based installations have been removed, even for Red Hat OpenShift deployments of Pure Service Orchestrator.

With the release of OpenShift 4.4, Helm 3 is a GA feature, and we require this installation method for Pure Service Orchestrator v6.0 and beyond. We’ve already approved Pure Service Orchestrator v6.0 for use with OpenShift 4.4 and 4.5 (the latest version at the time of this writing).