Continuous integration is the development practice of merging all developers' changes to a common code base into a central source code repository at least once a day. Once this ‘push’ into the central repository has taken place, a suite of automated tests is usually executed. Continuous integration has been a prevalent practice in the software development industry for well over a decade.

Taking Continuous Integration A Step Further

Continuous delivery takes continuous integration one step further by introducing the notion that software in the central repository should always be in a deployable state. Continuous delivery is also one of the key principles of the “Agile Manifesto”, the set of principles that underpins the agile software development ethos.

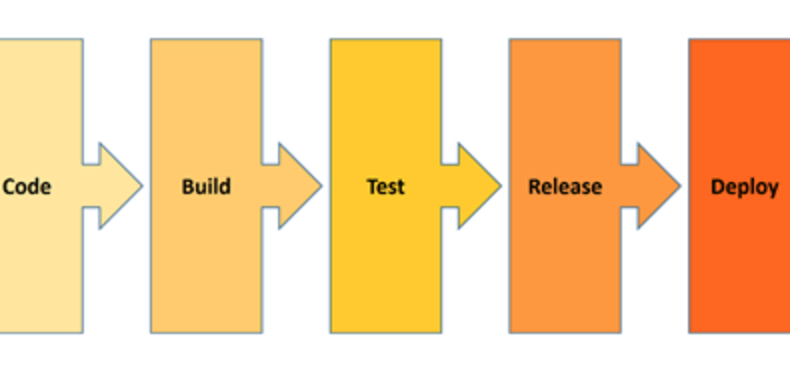

Continuous integration and continuous delivery are ubiquitous amongst the type of processes that are part of a DevOps software development and delivery culture. If you take the DevOps process flow at its highest level, it is clear to see where both continuous delivery and integration fit into DevOps development ethos:

Why Should Organisations Care About This?

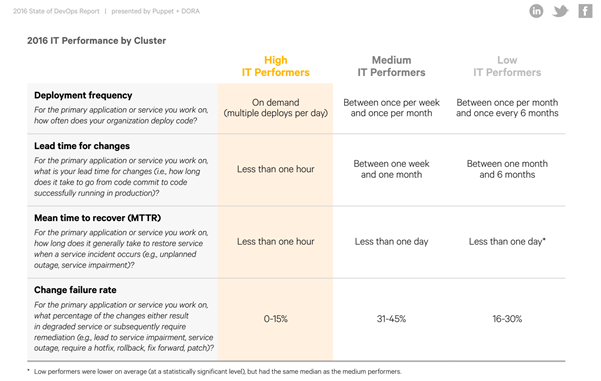

The State of DevOps 2016 report states that amongst the many things organisations classed as “High IT performers” do, frequency of software deployment is paramount:

Irrespective of the software design, architecture, stack or tools used, most applications have a fundamental requirement to store data somewhere. Taking databases as an example, some organisations struggle to back up their production databases during normal business-as-usual activity. How can deployment on demand be achieved when production-like test and development environments are required?

Software Build Pipelines

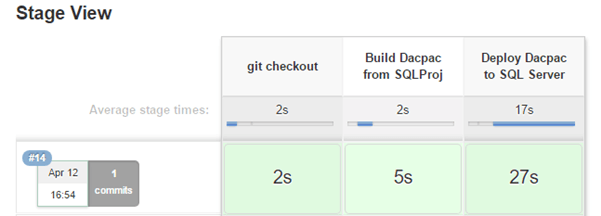

Build pipelines help automate the process of continuous integration and delivery. The most basic function of a build pipeline is to extract code from a source control repository, build the code into a deploy-able artefact and finally deploy the artefact to an environment. Jenkins is a popular software build automation engine, the Jenkins dashboard excerpt below illustrates a simple build pipeline:

The pipeline takes a SQL Server database project and builds it into a deployable artefact called a ‘Dacpac’, which is then applied to a database. Ideally, the target test database should resemble the production database as closely as possible.
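The three basic stages (extract, build, deploy) can be sketched in Python as an ordered list of steps that stops at the first failure, so a broken build never reaches the deploy step. This is a minimal illustration of the fail-fast idea, not how Jenkins works internally; `run_pipeline` and the stage functions are hypothetical placeholders for the git checkout, MSBuild and sqlpackage steps.

```python
from typing import Callable, Dict, List, Tuple

# A stage is a (name, action) pair; an action signals failure by raising.
Stage = Tuple[str, Callable[[], None]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns the names of the stages that completed.
    """
    completed: List[str] = []
    for name, action in stages:
        try:
            action()
        except Exception as exc:
            # Later stages never run, so a failed build is never deployed.
            raise RuntimeError(f"stage '{name}' failed") from exc
        completed.append(name)
    return completed


# Hypothetical placeholders standing in for git, MSBuild and sqlpackage.
workspace: Dict[str, str] = {}


def checkout() -> None:
    workspace["source"] = "SsdtDevOpsDemo"


def build() -> None:
    workspace["artefact"] = workspace["source"] + ".dacpac"


def deploy() -> None:
    workspace["deployed"] = workspace["artefact"]


done = run_pipeline([("checkout", checkout), ("build", build), ("deploy", deploy)])
print(done)  # ['checkout', 'build', 'deploy']
```

Passing the artefact between stages through `workspace` plays the role that `stash` and `unstash` play in a Jenkins pipeline.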

Where Modern All Flash Storage Comes In

What if regular copies of the production database could be taken with zero impact on the source, and with levels of data reduction high enough for the storage to handle numerous copies of even the largest production databases with ease? The dashboard below is from a customer performing development with databases cloned from snapshots: 1.4 PB of usable storage is being realised from 115 TB of raw capacity, and this is without counting thin provisioning or snapshots.
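The capacity figures quoted for this customer reduce to a single usable-to-raw ratio. A minimal sketch, assuming decimal units (1 PB = 1,000 TB) for both figures; the helper name `reduction_ratio` is illustrative only:

```python
# Assumption: decimal units for both figures, so 1 PB = 1,000 TB.
TB_PER_PB = 1000


def reduction_ratio(usable_pb: float, raw_tb: float) -> float:
    """Return usable capacity divided by raw capacity (e.g. 12.2 means 12.2:1)."""
    if raw_tb <= 0:
        raise ValueError("raw capacity must be positive")
    return usable_pb * TB_PER_PB / raw_tb


ratio = reduction_ratio(1.4, 115)
print(f"{ratio:.1f}:1")  # 1400 / 115 = 12.17..., printed as 12.2:1
```

In other words, each terabyte of raw flash is holding roughly 12 terabytes of usable database data before thin provisioning or snapshot savings are counted.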

Taking snapshots with Pure Storage® FlashArray:

- Is lightning fast

- Has zero performance impact on the source of the snapshot

- Is incredibly space efficient

The FlashArray PowerShell and Python software development kits allow snapshot creation to be integrated into any automated process that can run these scripting languages. This means a refresh of the test database from production can be added to the build pipeline script from our example:

```groovy
// Helper: run a PowerShell command, stop on the first error and pass the exit code back to Jenkins
def PowerShell(psCmd) {
    bat "powershell.exe -NonInteractive -ExecutionPolicy Bypass -Command \"\$ErrorActionPreference='Stop';$psCmd;EXIT \$global:LastExitCode\""
}

node {
    stage('git checkout') {
        git 'file:///C:/Projects/SsdtDevOpsDemo'  // fetch the SQL Server database project
    }

    stage('Build Dacpac from SQLProj') {
        bat "\"${tool name: 'Default', type: 'msbuild'}\" /p:Configuration=Release"  // build the Dacpac with MSBuild
        stash includes: 'SsdtDevOpsDemo\\bin\\Release\\SsdtDevOpsDemo.dacpac', name: 'theDacpac'  // keep the artefact for deployment
    }

    stage('Refresh test from production') {
        PowerShell(". 'C:\\scripts\\Refresh-Test-Checkpoint-2016.ps1'")  // snapshot-based refresh via the FlashArray REST API
    }

    stage('Deploy Dacpac to SQL Server') {
        // Publish the Dacpac to the freshly refreshed test database
        unstash 'theDacpac'
        bat "\"C:\\Program Files (x86)\\Microsoft SQL Server\\130\\DAC\\bin\\sqlpackage.exe\" /Action:Publish /SourceFile:\"SsdtDevOpsDemo\\bin\\Release\\SsdtDevOpsDemo.dacpac\" /TargetServerName:(local) /TargetDatabaseName:SsdtDevOpsDemo"
    }
}
```

The magic that refreshes the test database from the production database is the call to the PowerShell script on line 17, in the ‘Refresh test from production’ stage; this script in turn calls the FlashArray REST API. Sample PowerShell scripts that perform SQL Server database refreshes via FlashArray snapshots can be found in this Pure Storage® GitHub repository. For development in an environment where sensitive data cannot be exposed to developers, scripting can be added to obfuscate the data; for the sake of brevity, the example has been kept as simple as possible.
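The order of operations in a snapshot-based refresh can be sketched in Python: take the test database offline, snapshot the production volume, overwrite the test volume from that snapshot, then bring the test database back online. This is a hypothetical sketch, not the contents of the sample scripts. `StorageClient` is an assumed interface whose method names are modelled on the FlashArray Python SDK (check the real signatures and return types in its documentation), the volume names are made up, and host-side disk handling is omitted. A fake array stands in so the sketch runs without hardware.

```python
from typing import Callable, List, Protocol


class StorageClient(Protocol):
    """Hypothetical storage interface; method names are modelled on the
    FlashArray Python SDK and must be checked against its documentation."""

    def create_snapshot(self, volume: str) -> str: ...

    def copy_volume(self, source: str, dest: str, overwrite: bool) -> None: ...


def refresh_test_from_production(
    client: StorageClient,
    offline_test_db: Callable[[], None],
    online_test_db: Callable[[], None],
    prod_volume: str,
    test_volume: str,
) -> str:
    """Overwrite the test volume with a snapshot of production.

    Returns the name of the snapshot that was used.
    """
    # Take the test database offline so its volume can be overwritten safely.
    offline_test_db()
    try:
        # Snapshot the production volume; nothing is done to production itself.
        snapshot = client.create_snapshot(prod_volume)
        # Replace the contents of the test volume with the snapshot.
        client.copy_volume(snapshot, test_volume, overwrite=True)
    finally:
        # Bring the test database back online, even if a storage call failed.
        online_test_db()
    return snapshot


class FakeArray:
    """In-memory stand-in so the sketch runs without a real array."""

    def __init__(self) -> None:
        self.calls: List[str] = []

    def create_snapshot(self, volume: str) -> str:
        name = f"{volume}.refresh"
        self.calls.append(f"snapshot {volume} as {name}")
        return name

    def copy_volume(self, source: str, dest: str, overwrite: bool) -> None:
        self.calls.append(f"copy {source} to {dest} (overwrite={overwrite})")


events: List[str] = []
array = FakeArray()
snap = refresh_test_from_production(
    array,
    offline_test_db=lambda: events.append("test db offline"),
    online_test_db=lambda: events.append("test db online"),
    prod_volume="sql-prod-data",
    test_volume="sql-test-data",
)
print(snap)  # sql-prod-data.refresh
```

Injecting the storage client and the database callbacks keeps the sequencing testable; in a real pipeline they would wrap the SDK and SQL Server calls.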

When build processes can use test databases cloned from production, the quality of testing improves, as does confidence that changes will work in production, to the point where deployment frequency can increase.

The Power Of The FlashArray REST API

The FlashArray REST API is key to integrating FlashArray functionality into anything that is scripted or automated. What makes it special?

- It requires no gateway and it is an integral part of the array

- The REST API exposes the full array command surface area

- It is fast and scalable

- The REST API was written by developers, for developers

- It is always on

- Because FlashArray users do not need to worry about RAID levels, tiering or any special configuration knobs, levers or dials, the REST API is inherently simple

In short, the REST API is a first-class citizen of the FlashArray software, not a “bolt-on” or an afterthought.
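Because the API lives on the array itself, a script only needs an HTTPS client. A minimal Python sketch using only the standard library, assuming the REST 1.x style of exchanging an API token for a session cookie at `/auth/session` and then listing volumes at `/volume`. The API version `1.16`, both paths, the array name and the token are assumptions to confirm against the REST API reference for your Purity release; the code only builds the requests and sends nothing.

```python
import http.cookiejar
import json
import urllib.request

# Assumption: REST 1.x-style paths and version; confirm both against the
# REST API reference for your Purity release.
API_VERSION = "1.16"


def session_request(array: str, api_token: str) -> urllib.request.Request:
    """Build the POST that exchanges an API token for a session cookie."""
    body = json.dumps({"api_token": api_token}).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{array}/api/{API_VERSION}/auth/session",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_volumes_request(array: str) -> urllib.request.Request:
    """Build the GET that lists volumes (it relies on the session cookie)."""
    return urllib.request.Request(
        url=f"https://{array}/api/{API_VERSION}/volume",
        method="GET",
    )


def make_opener() -> urllib.request.OpenerDirector:
    """Opener that keeps the session cookie between requests."""
    return urllib.request.build_opener(
        urllib.request.HTTPCookieProcessor(http.cookiejar.CookieJar())
    )


# Hypothetical array name and token; nothing is sent here.
req = session_request("flasharray.example.com", "<api-token>")
print(req.get_method(), req.full_url)
# POST https://flasharray.example.com/api/1.16/auth/session
```

To use it, open the session request and then the volume request through the same `make_opener()` instance so the cookie is carried across; arrays with self-signed certificates may also need an explicit SSL context.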

Lead Time for Changes

Software development is expensive, which is why larger organisations often buy other organisations that have software intellectual property and strong software delivery capabilities. The high cost of software development has also led organisations to carry out development in parts of the world with lower software development overheads. However, there is one very simple way to increase developer productivity: release developers from the shackles of slow, complex and difficult-to-provision storage. Modern storage that is simple to use and provision, and designed for flash from the ground up, helps avoid a number of pitfalls associated with traditional storage architectures that can hamper developer productivity:

- Poor storage performance

- Developers being dependent on infrastructure teams to provision storage because it is too complex for the development teams to do it themselves

- Insufficient storage capacity depriving developers of the ability to develop and test against production-like environments

- Storage which does not lend itself well, if at all, to being integrated with automated software development processes

In the past, some organisations have chosen to give development teams whatever storage happens to be available. Providing developers with storage that is easy to deploy, fast and simple to use reduces the lead time for changes or, in other words, time to market.
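Lead time for changes is commonly measured as the time it takes a committed change to reach production. A minimal Python sketch, assuming a commit timestamp and a production deployment timestamp are available for each change and that the median is used as the summary statistic; the function name and sample data are made up for illustration.

```python
from datetime import datetime
from statistics import median
from typing import Iterable, Tuple


def lead_time_hours(changes: Iterable[Tuple[datetime, datetime]]) -> float:
    """Median lead time, in hours, over (commit_time, deploy_time) pairs."""
    durations = [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]
    if not durations:
        raise ValueError("no changes to measure")
    return median(durations)


# Hypothetical sample: three changes and when each reached production.
sample = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 11, 0)),   # 2 hours
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 2, 16, 0)),  # 30 hours
    (datetime(2024, 1, 2, 8, 0), datetime(2024, 1, 2, 14, 0)),   # 6 hours
]
print(lead_time_hours(sample))  # 6.0
```

The median keeps one slow change, such as the 30-hour one here, from dominating the figure; time spent waiting for storage to be provisioned shows up directly in each change's duration.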

Conclusion

Pure Storage furnishes a full set of tools and capabilities that add tremendous value to development processes and developer productivity. With NVMe-ready technology, FlashArray //X and the Data Platform For The Cloud Era, comprising more than 25 new software features, developers can leverage storage that is denser, faster and richer in data services than ever before. If traditional storage that is slow, difficult to provision and hard to integrate into automated software build processes is hampering your organisation, we would love to hear from you and have a chat about how Pure can help.