MongoDB is a popular, highly scalable NoSQL database. For fault tolerance, MongoDB supports replica sets, in which multiple copies of the same database exist on different servers. If you have MongoDB in your environment, it is most likely deployed on multiple servers with local disks. This locally attached type of storage is referred to as DAS, or Direct-Attached Storage. DAS is common because of its relatively low cost, ease of setup, and good performance characteristics. On the opposite side of the storage spectrum, just to add to your three-letter acronym vocabulary, is SAN. SAN, which stands for Storage Area Network, provides a means of connecting multiple hosts to one or more storage arrays. Storage arrays have generally been more expensive, more complex to manage, and somewhat slower than DAS, until now. Welcome to Pure Storage! With Pure's FlashArray//X and modern storage protocols such as NVMe (Non-Volatile Memory Express) and NVMe-oF (Non-Volatile Memory Express over Fabrics), we can successfully compete against and replace data center DAS devices.

MongoDB on DAS

As mentioned earlier, a common MongoDB installation consists of a replica set. The minimum recommended replica set has three data-bearing members: one primary and two secondaries. In a DAS configuration, each of those servers has local disks holding a copy of the database files. A simple MongoDB replica set with DAS is shown below.

SAS (Serial Attached SCSI) and SATA (Serial AT Attachment) are the two most popular protocols for moving data between disks and servers. The disks in each box (server) are accessible only locally. A typical deployment includes spinning drives (HDDs) or SSDs (Solid State Drives) and some type of RAID controller to protect the server from data loss in the event of a disk failure.
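For reference, the three-member replica set described at the start of this section corresponds to a replica set configuration document. The following Python sketch builds one; the set name `rs0`, the host names, and the helper `replica_set_config` are hypothetical, and the three-member check only enforces the recommendation above, not a hard MongoDB limit.

```python
def replica_set_config(name: str, hosts: list[str]) -> dict:
    """Build a replica set configuration document with one member per host.

    Hypothetical helper: rejects fewer than three hosts to follow the
    recommended minimum of three data-bearing members.
    """
    if len(hosts) < 3:
        raise ValueError("use at least three data-bearing members")
    return {
        "_id": name,  # replica set name
        "members": [{"_id": i, "host": host} for i, host in enumerate(hosts)],
    }


# Placeholder host names; storage (DAS or FlashArray) is invisible at this level.
config = replica_set_config("rs0", ["mongo1:27017", "mongo2:27017", "mongo3:27017"])
print(config)
```

With PyMongo, the resulting document could be passed to the `replSetInitiate` command on one of the members, for example `MongoClient("mongo1:27017", directConnection=True).admin.command("replSetInitiate", config)`. Nothing in the configuration depends on whether each member's data sits on local disks or on a FlashArray volume.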

MongoDB on FlashArray

The MongoDB replica set deployment with FlashArray™ may look like the diagram below:

In the FlashArray environment above, the servers communicate with FlashArray over the NVMe-oF protocol, and FlashArray communicates with its flash modules over the NVMe protocol.

Now a little bit of history. First, Pure Storage democratized NAND-based flash memory with FlashArray by delivering very high storage performance at affordable prices. Then we introduced DirectFlash™ modules to eliminate the SSD Flash Translation Layer (FTL), which hampered flash performance. Not stopping there, we integrated the NVMe protocol inside the array (from the Host Bus Adapter to the DirectFlash module) to unlock even more speed. At that point, FlashArray//X was able to deliver I/O with latency as low as 250 µs. Finally, thinking outside the box, we brought the same NVMe protocol outside of FlashArray//X to the host to deliver end-to-end, 100% NVMe connectivity. To distinguish NVMe inside the array from NVMe across the SAN (array to host), the latter is called NVMe-oF, and our first implementation uses RoCE, which is RDMA over Converged Ethernet (RDMA = Remote Direct Memory Access). We call it DirectFlash Fabric. I suppose that is enough alphabet soup for now.

A description of the technical aspects and advantages of NVMe is beyond the scope of this article; additional information can be found at nvmexpress.org. I am convinced that, just as the introduction of flash memory to storage devices was a pivotal event in the history of storage, end-to-end NVMe connectivity has the potential to revolutionize the storage industry. Finally, we have a fully functional, modern, storage-oriented data delivery protocol that takes full advantage of flash-based devices.

MongoDB on DAS vs. FlashArray/NVMe

Disk Space

As previously indicated, each MongoDB replica set member maintains a copy of the entire database. As a result, every server needs enough disk space to hold the full database, and the member with the smallest disks effectively limits how large the database can grow. So how much disk space is required? The answer depends on the selected level of failure protection and your tolerance for downtime. For optimal performance, MongoDB recommends SSDs in a RAID 10 configuration for all members of the replica set. Although RAID 5 and RAID 6 configurations may not provide adequate performance, they are also commonly deployed.

DAS

RAID 10

RAID 10 is a striped mirror and requires a minimum of four SSDs. The usable storage is half the combined capacity of all disks in the set. For example, 1.2 TB of usable disk space requires six 400 GB SSDs (6 * 400 GB / 2 = 1,200 GB).

RAID 5

RAID 5 is a stripe with parity and requires a minimum of three SSDs. The usable storage is reduced by the capacity of one drive. For example, 1.2 TB of usable disk space requires four 400 GB SSDs (4 * 400 GB - 400 GB = 1,200 GB).

RAID 6

RAID 6 is a stripe with double parity and requires a minimum of four SSDs. The usable storage is reduced by the capacity of two drives. For example, 1.2 TB of usable disk space requires five 400 GB SSDs (5 * 400 GB - 2 * 400 GB = 1,200 GB).
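The drive counts in the three RAID examples above follow from simple capacity rules. A minimal Python sketch, assuming identical drives and ignoring hot spares, controller metadata, and file system overhead; the function names are illustrative:

```python
import math

# Minimum drive counts per RAID level, as stated above.
MIN_DRIVES = {"RAID 10": 4, "RAID 5": 3, "RAID 6": 4}


def usable_gb(level: str, drives: int, drive_gb: int) -> int:
    """Usable capacity (GB) of a RAID set built from identical drives."""
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"{level} needs at least {MIN_DRIVES[level]} drives")
    if level == "RAID 10":
        if drives % 2:
            raise ValueError("RAID 10 needs an even number of drives")
        return drives * drive_gb // 2      # every block is mirrored
    if level == "RAID 5":
        return (drives - 1) * drive_gb     # one drive's worth of parity
    if level == "RAID 6":
        return (drives - 2) * drive_gb     # two drives' worth of parity
    raise ValueError(f"unsupported RAID level: {level}")


def drives_needed(level: str, target_gb: int, drive_gb: int) -> int:
    """Smallest drive count whose usable capacity is at least target_gb."""
    data_drives = math.ceil(target_gb / drive_gb)
    if level == "RAID 10":
        count = 2 * data_drives            # each data drive has a mirror
    elif level == "RAID 5":
        count = data_drives + 1            # plus one parity drive's worth
    elif level == "RAID 6":
        count = data_drives + 2            # plus two parity drives' worth
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    return max(count, MIN_DRIVES[level])


for level in ("RAID 10", "RAID 5", "RAID 6"):
    n = drives_needed(level, target_gb=1200, drive_gb=400)
    print(f"{level}: {n} x 400 GB drives, {n * 400} GB raw, "
          f"{usable_gb(level, n, 400)} GB usable")
```

Running it for the 1.2 TB target reproduces the six, four, and five 400 GB drives quoted above, which correspond to 2.4 TB, 1.6 TB, and 2 TB of raw capacity per host.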

FlashArray

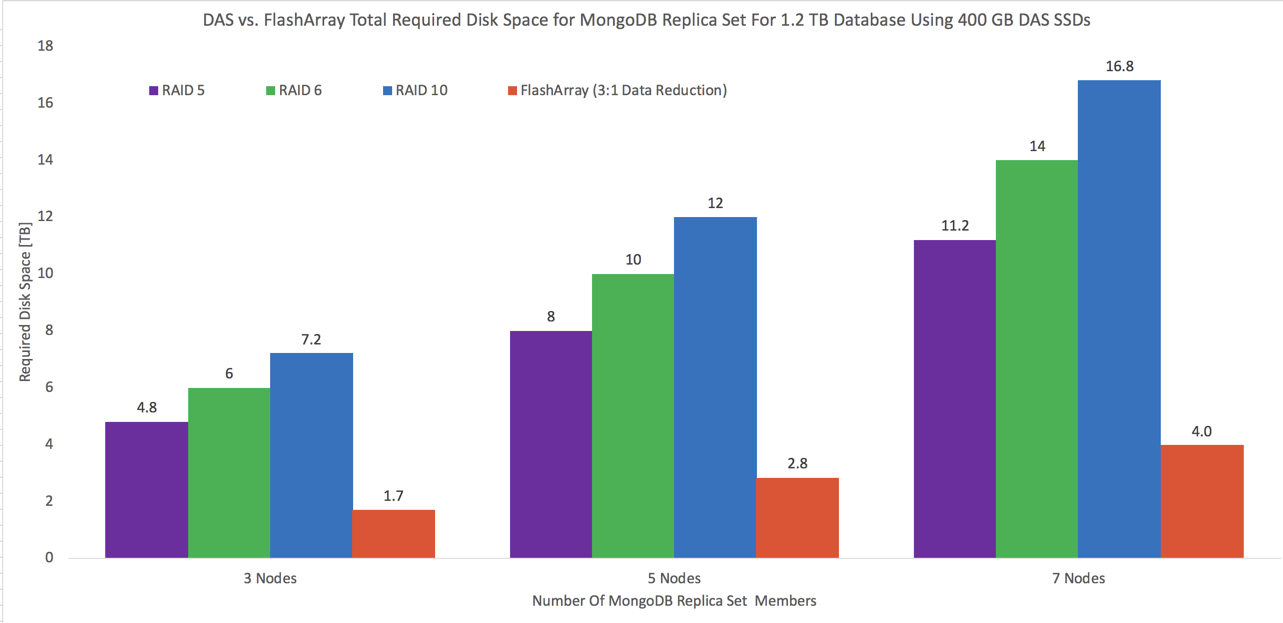

Unlike DAS, FlashArray provides essential data services such as thin provisioning, deduplication, and compression, which are always on. Data protection is provided by a proprietary dual-parity RAID-HA configuration. Let us compare the potential disk space savings on FlashArray and DAS. Using the previous 1.2 TB example, FlashArray will allocate approximately 1.7 TB of raw storage per host, assuming no thin provisioning. With 3:1 FlashArray data reduction, the same 1.7 TB shrinks to less than 570 GB. On DAS servers, 1.6 TB per host must be configured for RAID 5, 2 TB per host for RAID 6, and 2.4 TB per host for RAID 10.

The DAS disk space requirements become more drastic as the replica set grows and disk fault tolerance increases. The chart below outlines storage requirements for three-, five- and seven-member replica sets. A seven-member MongoDB replica set on RAID 10 consumes 16.8 TB of disk space (7 * 2.4 TB) just for a 1.2 TB database. The same replica set on FlashArray, assuming no thin provisioning and a conservative 3:1 data reduction ratio, would require only about 4.0 TB (7 * 1.7 TB / 3)! As the number of replica set members increases, so do the disk space savings on the array.
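The replica set comparison above is straightforward arithmetic. This sketch recomputes the totals for three, five and seven members, assuming (as the text does) that each member's volume is reduced independently at 3:1 and that thin provisioning is not counted; the constant and function names are hypothetical:

```python
# Per-member figures from the 1.2 TB example above, in GB.
DAS_RAW_GB_PER_HOST = {"RAID 5": 1600, "RAID 6": 2000, "RAID 10": 2400}
FA_RAW_GB_PER_HOST = 1700   # approximate FlashArray allocation, no thin provisioning
DATA_REDUCTION = 3.0        # conservative 3:1 data reduction ratio


def das_total_gb(members: int, level: str) -> int:
    """Each member keeps a full copy on its own local RAID set."""
    return members * DAS_RAW_GB_PER_HOST[level]


def flasharray_total_gb(members: int, reduction: float = DATA_REDUCTION) -> float:
    """Each member's volume comes from the array and is reduced by `reduction`."""
    return members * FA_RAW_GB_PER_HOST / reduction


for members in (3, 5, 7):
    das = ", ".join(f"{lvl} {das_total_gb(members, lvl) / 1000:.1f} TB"
                    for lvl in DAS_RAW_GB_PER_HOST)
    fa = flasharray_total_gb(members) / 1000
    print(f"{members} members -> DAS: {das} | FlashArray: {fa:.1f} TB")
```

For seven members it reproduces the 16.8 TB RAID 10 figure and roughly 4.0 TB on FlashArray. Because the DAS totals scale with raw capacity per host while the array total is divided by the reduction ratio, the gap widens linearly as members are added.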

Performance

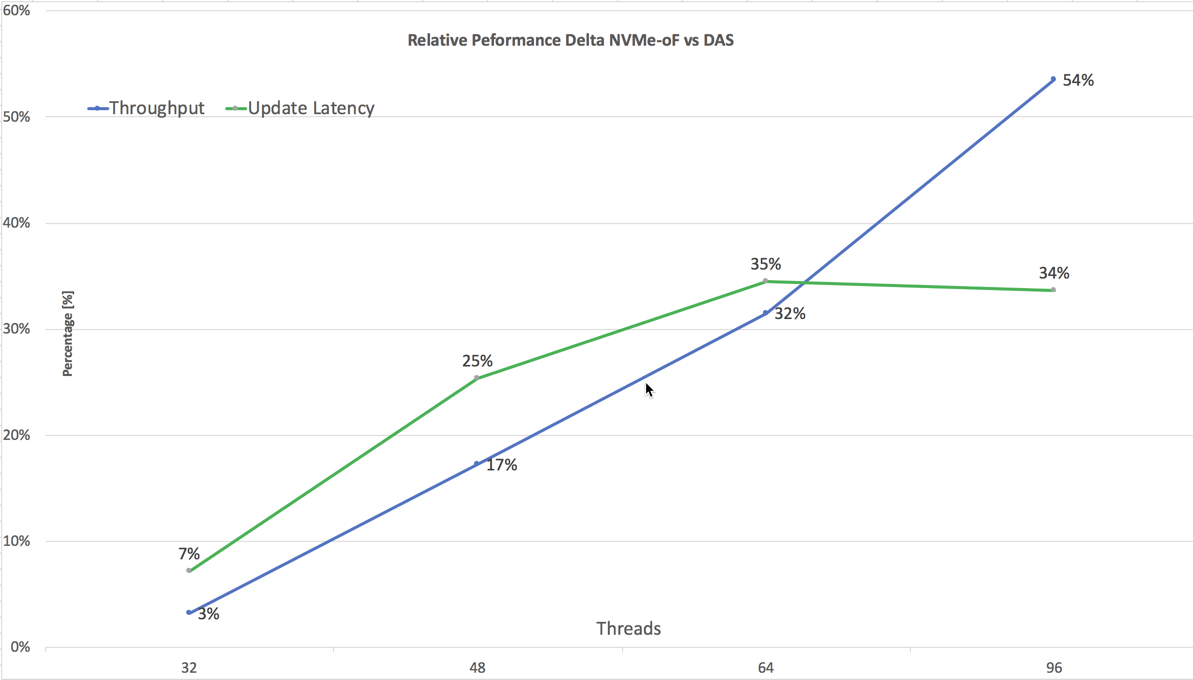

Because of the great variety of local storage solutions, it is very difficult to compare DAS performance accurately with FlashArray and NVMe-oF SAN. Nevertheless, in some of our tests MongoDB on FlashArray//X matched and even outperformed a fairly typical DAS configuration (RAID 10 with four SAS SSDs). The performance delta grows as the database workload increases, showing up as higher throughput (operations per second) and sub-millisecond update latency. The database operations were generated by the Yahoo! Cloud Serving Benchmark (YCSB) using core Workload A (update heavy) with 100,000,000 records and 100,000,000 operations. The relative performance delta between NVMe-oF (DirectFlash Fabric) and DAS is shown below. Positive values indicate better NVMe-oF performance compared to DAS.
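The text does not state exactly how the relative delta was calculated. One common convention that keeps positive values in favor of NVMe-oF for both throughput and latency is sketched below; the function name and the sample inputs are hypothetical and are not test results:

```python
def relative_delta_pct(nvme_of: float, das: float, higher_is_better: bool) -> float:
    """Percentage advantage of NVMe-oF over a DAS baseline.

    Positive means NVMe-oF is better. For throughput (ops/sec) higher is
    better: (nvme_of - das) / das. For latency lower is better, so the
    difference is flipped: (das - nvme_of) / das.
    """
    if das <= 0:
        raise ValueError("DAS baseline must be positive")
    diff = nvme_of - das if higher_is_better else das - nvme_of
    return 100.0 * diff / das


# Illustrative inputs only, not measured values.
throughput = relative_delta_pct(nvme_of=120.0, das=100.0, higher_is_better=True)
update_latency = relative_delta_pct(nvme_of=0.8, das=1.0, higher_is_better=False)
print(f"throughput: {throughput:+.1f}%  update latency: {update_latency:+.1f}%")
```

Flipping the sign for latency matters: otherwise a lower NVMe-oF latency would appear as a negative number and read as a regression.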

Storage Management

Without additional management software, DAS must be managed locally on each server. The user interface, as well as the CLI and REST APIs (if available), differs between server and RAID controller vendors, which makes management more difficult. Moreover, hot-swap disk trays are usually not interchangeable between manufacturers, forcing users to stock multiple types of replacement drives and further increasing cost.

FlashArray provides a unified user experience for provisioning, monitoring, and managing storage, whether you use the built-in GUI, REST API, Pure1, or CLI. There is also no need to maintain a replacement part inventory, since failed components are replaced by service personnel.

Failure Management

The RAID levels discussed above can all sustain at least one SSD failure. However, in some cases I/O subsystem performance may be severely impacted until the drive is replaced and the RAID set is rebuilt. On FlashArray, a DirectFlash module malfunction is a non-event with no negative performance consequences.

To elect and maintain a primary, a replica set needs a majority of its voting members available; in a three-member set, that means at least two members. A single node failure (primary or secondary) is therefore not a catastrophic incident. However, a three-member replica set with only two functioning members is exposed to downtime should another member fail. Once the failed member is brought back online, MongoDB initiates a data sync from another member. Depending on the database size, the volume of changes, utilization, how long the member was down, and network bandwidth, the sync may be a time-consuming process. FlashArray data services such as snapshots can reduce the risk associated with a failed member by shortening the time required to sync: by restoring from a volume snapshot, the failed member can be brought back quickly and resynced, applying only the most recent changes. DAS devices do not inherently provide data services such as snapshots. Furthermore, FlashArray high availability services, including replication and ActiveCluster, are available for an even greater level of data protection.
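The majority rule behind this availability discussion, and the condition under which a snapshot-restored member can catch up incrementally, can be sketched in Python. The majority and fault-tolerance arithmetic follows MongoDB's election rules for voting members; `can_catch_up_from_oplog` is a hypothetical, simplified check that treats the oplog window as a fixed number of hours:

```python
def majority(voting_members: int) -> int:
    """Votes needed to elect a primary: a strict majority of voting members."""
    return voting_members // 2 + 1


def fault_tolerance(voting_members: int) -> int:
    """Members that can fail while the set can still elect a primary."""
    return voting_members - majority(voting_members)


def can_catch_up_from_oplog(hours_since_snapshot: float, oplog_window_hours: float) -> bool:
    """Hypothetical simplified check.

    A member restored from a snapshot can catch up incrementally only if the
    changes it missed are still in a healthy member's oplog; otherwise it is
    stale and needs a full initial sync.
    """
    return hours_since_snapshot < oplog_window_hours


for n in (3, 5, 7):
    print(f"{n} members: majority {majority(n)}, "
          f"tolerates {fault_tolerance(n)} failure(s)")
```

A member restored from a snapshot taken within the oplog window only needs to replay recent oplog entries. If the snapshot is older than the window, the member is stale and requires a full initial sync, which is the slow path that the snapshot approach aims to avoid.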

Summary

With NVMe and NVMe-oF, Pure Storage and FlashArray//X extend flash performance beyond the array directly to the host. Low-latency, high-throughput SAN has become a viable, cost-effective alternative to local disks, and with a rich set of data and high availability services as well as six-nines uptime, the time to replace DAS is now.