Automated tiering of data sounds good, right? Storage arrays which aim to tier the right data to the right place at the right time have been a staple for a while. Their proponents argue that the data chunks or volumes that need low latency and high IOPS get them, and infrequently used chunks find their way onto lower-cost, higher-capacity disk after exhibiting low activity. Not too long ago, I also held that view.

There are challenges, of course. Does your workload exhibit the right attributes for this? What if these change during the life of the array? Will you unexpectedly have to buy more of the performance tier? Is there enough inactive data for you to be able to use all of the capacity tier? And then there is the compromise: are your users prepared for variable performance?

Putting all that aside, the key argument made in favor of tiering is that it works economically: to offset the cost of flash, you also have to have enough lower-cost SATA. More recently, I have seen it argued that tiering with flash and disk must be cheaper than all-flash and that this is the only way many customers can afford flash in their arrays.

Why compromise?

But what if 100% flash cost less than a tiered array? Would you still use SAS or SATA disk for infrequently accessed parts of your applications? Spinning disk is a ball and chain around your information. Run any queries that touch the cold data and you soon find it’s too slow.

“You don’t need all-flash” is the mantra of anyone who can’t offer flash for less than the cost of disk.

Ask them when they see flash reaching the price of disk, and when the all-flash data centre will be a reality, and they will likely say it’s years away. People may disagree on precisely when it will be, but you would be hard-pressed to find anyone who does not agree that it will happen and that tiering is a stop-gap until that point.

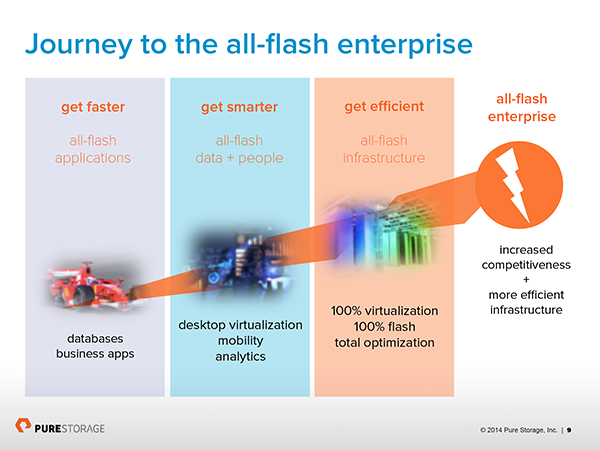

The all-flash data centre is a reality

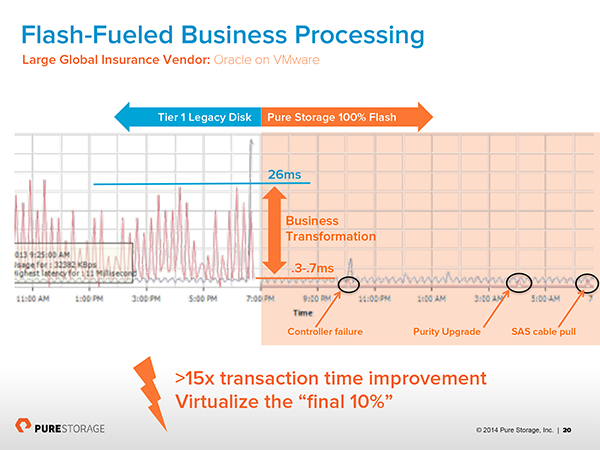

There are many organisations buying the Pure Storage FlashArray right now for less than they were paying for their tiered arrays. How is this possible? It’s enabled by innovative data reduction techniques which can only be achieved with 100% flash. These customers are unlocking the value in their data and are accelerating their business in ways which were previously unthinkable.
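The economics can be sketched with some simple arithmetic. The snippet below is only an illustration, not anyone's pricing model: the function names and every number in it are hypothetical, and it assumes, as argued above, that data reduction applies to the all-flash array but not to the tiered one. It compares the blended cost per GB of a tiered array with the effective cost per GB of flash after data reduction, and works out the reduction ratio at which the two break even.

```python
def tiered_cost_per_gb(flash_cost, disk_cost, flash_fraction):
    """Blended raw cost per GB of a two-tier array.

    flash_fraction is the share of capacity held on the flash tier (0.0 to 1.0).
    """
    if not 0.0 <= flash_fraction <= 1.0:
        raise ValueError("flash_fraction must be between 0 and 1")
    return flash_fraction * flash_cost + (1.0 - flash_fraction) * disk_cost


def effective_cost_per_gb(raw_cost, reduction_ratio):
    """Cost per GB of data stored after data reduction (e.g. 5.0 means 5:1)."""
    if reduction_ratio < 1.0:
        raise ValueError("reduction_ratio must be at least 1.0")
    return raw_cost / reduction_ratio


def break_even_reduction_ratio(flash_cost, tiered_cost):
    """Reduction ratio at which all-flash matches the tiered cost per GB."""
    return flash_cost / tiered_cost


if __name__ == "__main__":
    # Hypothetical cost units, chosen only to make the arithmetic easy to follow.
    flash, disk = 10.0, 2.0
    tiered = tiered_cost_per_gb(flash, disk, flash_fraction=0.25)
    all_flash = effective_cost_per_gb(flash, reduction_ratio=5.0)
    print(f"tiered blended cost per GB:      {tiered:.2f}")
    print(f"all-flash effective cost per GB: {all_flash:.2f}")
    print(f"break-even reduction ratio:      {break_even_reduction_ratio(flash, tiered):.2f}")
```

With these made-up inputs, any reduction ratio above the break-even figure makes all-flash the cheaper option per GB stored. Your own ratio will depend on your data, so substitute measured values before drawing conclusions.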

That data analysis which today you can only run once a week… What if it could be run daily or twice daily? What would that mean to your business?

What if the storage was so simple to manage that no training or services were needed? What if the rest of IT was free to make decisions without the restrictions usually imposed by a storage architecture?

What if the space and power needed was a fraction of what it was?

What if the architecture provided a level of resilience which meant you could upgrade the array non-disruptively to the next generation, negating the need for a tech refresh and all its associated pain? And what if that upgrade was free if you were under maintenance?

Would you still make the old compromises?

Tiering has served its purpose. Let’s lay it to rest.