I have been excited to do this series for a while! vSphere 6.7 has been released, and there is a lot in it.

So as I did for 6.5, I am going to write a few posts on some of the core new storage features in 6.7. This is not going to be an exhaustive list, of course, just the features that I find interesting.

Let’s start with UNMAP!

I wrote a while ago about how in-guest UNMAP didn’t work with snapshots:

In-Guest UNMAP and VMware Snapshots

The short story is it simply didn’t work. Let’s first remind ourselves why.

**Reminder, this works perfectly with VVols, so this fix is only relevant for VMFS**

In-guest UNMAP works if you are using a thin virtual disk. When you delete a file, the guest OS issues UNMAP to the device under the file system (in this case the thin VMDK). The VMDK is then shrunk accordingly. This is why thin virtual disks are needed–they can be shrunk and grown. Thick disks cannot do this.

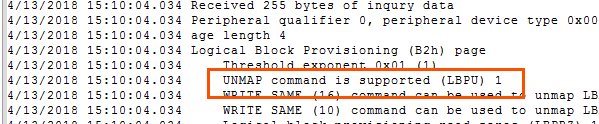

When you do a VPD inquiry on a thin virtual disk you will see the following in the logical block provisioning page:

UNMAP support is “1”, meaning it is supported. So what happens if we create a VMware snapshot?

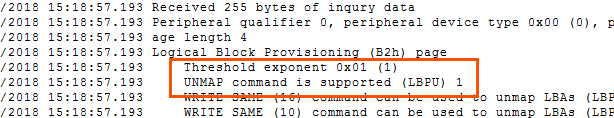

Well, when a snapshot is created (in a VMFS world), the active state of the VM is moved. A delta VMDK is created and all new writes go there. Therefore, the VM is not running off of the original thin VMDK; it is running off of the delta VMDK. This delta VMDK is not a thin virtual disk. It is a SESparse type virtual disk. But if you look at the VPD page, it still supports UNMAP.

But as I showed in the previous post, the UNMAP went through and yet the space on VMFS was not reclaimed. The SESparse VMDK did not shrink. What actually happens is that the metadata of the SESparse disk is properly marked as freed up, but that is not reflected in VMFS (the blocks allocated to the disk are not removed). In Horizon, this second step is achieved through a VMware-internal API, which then takes the disk and cleans it up by de-allocating any “freed” blocks.

This process did not occur for SESparse outside of Horizon.

Until vSphere 6.7.

What to Know About vSphere

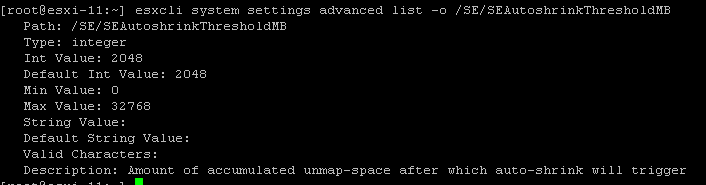

This deallocation is now tracked and is kicked off when a certain threshold is reached. There is a new setting:

/SE/SEAutoshrinkThresholdMB

The default value of this setting is 2 GB, meaning that the deallocation procedure will not run until a virtual disk has accumulated at least 2 GB of dead space.

Let’s run a test, shall we?

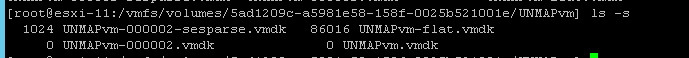

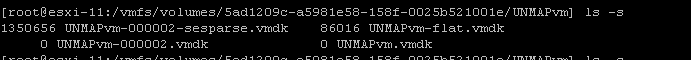

I have a snapshot created on my VM and there is a SESparse delta VMDK that is storing changes:

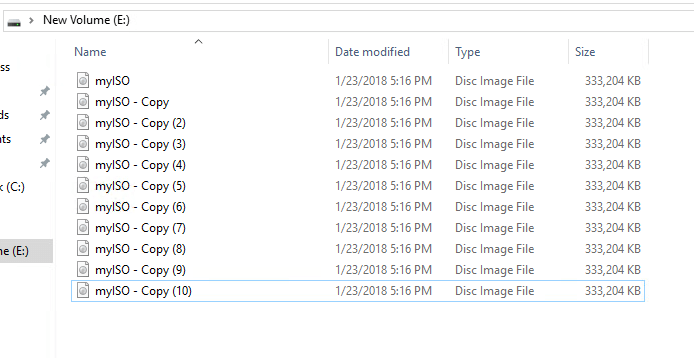

It is currently 1 MB. I will add a bunch of data to it by throwing a bunch of 300 MB ISOs on:

So now my snapshot VMDK is about 3.7 GB:

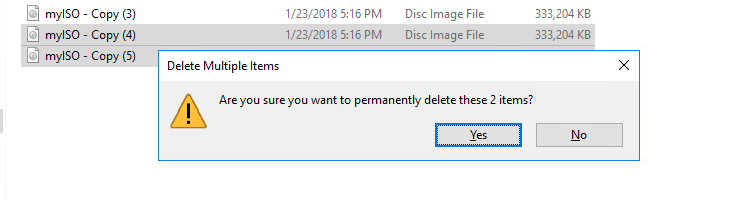

Let’s start deleting them.

I delete five of them, which is about 1,700 MB. That is below the 2 GB threshold, so this should not kick off the deallocation.

Windows (in this case) will issue UNMAP, but nothing will be reflected on the VMFS layer.

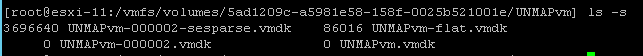

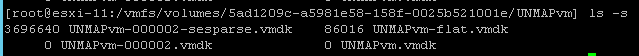

Nothing happened. Let’s delete two more so that we surpass 2 GB.

The snapshot VMDK dropped to 1.3 GB, which is what I have left in my VM. It worked perfectly and almost immediately! So this removes the limitation of not being able to take advantage of in-guest UNMAP when VMware snapshots are present.

This has the direct benefit of making your snapshots more space-efficient. It also reduces the restore time, and the consolidation time when it comes to deleting them. They are no longer storing dead space, so there is less to copy and store.
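The numbers from the test above can be checked with a small sketch. This is only an illustration of the threshold arithmetic, not VMware's implementation. It assumes each ISO is about 340 MB (1,700 MB divided by five), that 2 GB means 2,048 MB, and that the check is "greater than or equal to"; the function name is made up for the example.

```python
# Illustrative model of the auto-shrink threshold; not VMware code.
THRESHOLD_MB = 2 * 1024  # assumed MB value of the 2 GB default
ISO_MB = 340             # assumption: 1,700 MB / 5 deleted ISOs


def should_autoshrink(dead_space_mb, threshold_mb=THRESHOLD_MB):
    """Return True once accumulated dead space reaches the threshold."""
    return dead_space_mb >= threshold_mb


if __name__ == "__main__":
    for deleted in (5, 7):
        dead = deleted * ISO_MB
        print(f"{deleted} ISOs deleted: {dead} MB dead space, "
              f"shrink expected: {should_autoshrink(dead)}")
```

Five deleted ISOs stay under the threshold, while seven push the dead space past it, which matches the roughly 2.4 GB drop from 3.7 GB to 1.3 GB.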

I’m sure you might have more questions. So did I. Here are the ones I asked myself and tested out:

Q: Does this require VMFS-6?

A: It seems so. First off, VMFS-5 does not use SESparse for VM snapshots unless the VMDK is larger than 2 TB. For smaller VMDKs it uses VMFSsparse, which does not support UNMAP at all, so it will definitely not work in that case. For larger VMDKs on VMFS-5, it does use SESparse, but in my testing it still does not work. So it looks like VMFS-6 is required. I suspect it uses the UNMAP crawler, or some related crawler, that is not in effect for VMFS-5.

Q: What if my source virtual disk is NOT thin? What if it is thick?

A: Then it does not work. While I can actually get UNMAP to succeed in Linux against the file system on the VMDK, the SESparse VMDK never shrinks. My guess here is that since the source is not thin, it is ignored.
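The two answers above can be summarized as a small decision sketch. It only encodes what I observed in testing, plus the assumption that VMFS-6 always uses SESparse for snapshots; the function names are made up for illustration and nothing here queries a real host.

```python
# Summary of the observed behavior; not an official support matrix.
TWO_TB_IN_GB = 2 * 1024


def snapshot_format(vmfs_version, vmdk_size_gb):
    """Delta disk format used for a snapshot on VMFS."""
    if vmfs_version >= 6:
        return "SESparse"  # assumption: VMFS-6 uses SESparse for all sizes
    # VMFS-5 only uses SESparse for VMDKs larger than 2 TB.
    return "SESparse" if vmdk_size_gb > TWO_TB_IN_GB else "VMFSsparse"


def autoshrink_expected(vmfs_version, vmdk_size_gb, source_is_thin):
    """True when the snapshot delta shrank in my testing."""
    return (
        vmfs_version >= 6
        and source_is_thin
        and snapshot_format(vmfs_version, vmdk_size_gb) == "SESparse"
    )


if __name__ == "__main__":
    print(snapshot_format(5, 100), autoshrink_expected(5, 100, True))
    print(snapshot_format(5, 3000), autoshrink_expected(5, 3000, True))
    print(snapshot_format(6, 100), autoshrink_expected(6, 100, True))
    print(snapshot_format(6, 100), autoshrink_expected(6, 100, False))
```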

Q: Should I change the threshold with vSphere?

A: I wouldn’t. The UNMAP process is an async task: once a VM has hit 2 GB of dead space, the task will start to clean it out. It works in 512 MB chunks, doing 512 MB from one VM, then 512 MB from another VM (iterating through the VMs that have enough dead space) until the VMs are done. It has to do a fair amount of work, re-ordering the VMDK in case the deallocated segments were not contiguous. It can be fairly performance intensive, so VMware opted to do it in small chunks and only when it is needed (2 GB or more). So I would leave it alone.
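To make the chunked, round-robin behavior concrete, here is a rough simulation. It is a toy model of the description above, not the actual ESXi task: the VM names, the use of a simple queue, and the exact ordering are assumptions.

```python
from collections import deque

CHUNK_MB = 512        # chunk size described above
THRESHOLD_MB = 2048   # assumed MB value of the 2 GB default threshold


def reclaim_order(dead_space_mb):
    """Simulate round-robin reclamation.

    dead_space_mb maps a (hypothetical) VM name to its dead space in MB.
    Returns a list of (vm, mb_reclaimed) steps in processing order.
    """
    # Only VMs at or above the threshold are queued for cleanup.
    queue = deque(
        (vm, mb) for vm, mb in dead_space_mb.items() if mb >= THRESHOLD_MB
    )
    steps = []
    while queue:
        vm, remaining = queue.popleft()
        chunk = min(CHUNK_MB, remaining)
        steps.append((vm, chunk))
        remaining -= chunk
        if remaining > 0:
            queue.append((vm, remaining))  # back of the line for the next pass
    return steps


if __name__ == "__main__":
    for vm, mb in reclaim_order({"vm-a": 2048, "vm-b": 2560, "vm-c": 1000}):
        print(f"{vm}: reclaim {mb} MB")
```

In this model vm-c is never touched because it is below the threshold, and vm-a and vm-b alternate 512 MB at a time until both are done.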

Q: How granular is this setting with vSphere?

A: This is a host wide setting.

Q: What host runs it on what VMs?

A: The host that is running the VM.

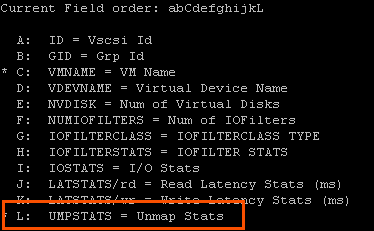

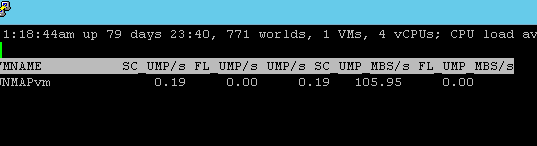

Q: How do I know if it is working with vSphere?

A: It is a good question. As far as I can tell, there is nothing historical listed. But in ESXi 6.7 they did add a live UNMAP tracker to esxtop: go to the VM view (press “v”), then add the UNMAP stats (press “f”, then “L”).

This doesn’t show historical stats, but does show it live. So if you suspect it isn’t working, you can either watch or run it in batch mode and store the results to a CSV.
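If you go the batch-mode route (for example `esxtop -b -d 5 -n 12 > unmap.csv`), a short script can pull out just the UNMAP-related columns afterwards. This is a minimal sketch: the function name is made up, the column headers in the sample are hypothetical placeholders, and matching on the substring "unmap" is my assumption, so check the header of your own CSV first.

```python
import csv
import io


def unmap_columns(csv_text):
    """Return (headers, rows) restricted to columns whose name contains 'unmap'."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    wanted = [i for i, name in enumerate(header) if "unmap" in name.lower()]
    rows = [
        [row[i] for i in wanted]
        for row in reader
        if len(row) == len(header)  # skip truncated lines
    ]
    return [header[i] for i in wanted], rows


if __name__ == "__main__":
    # Hypothetical header names, for illustration only.
    sample = (
        "Timestamp,VM Example\\Reads/sec,VM Example\\UNMAP Ops,VM Example\\UNMAP MB\n"
        "10:00:00,12,0,0\n"
        "10:00:05,9,4,512\n"
    )
    headers, rows = unmap_columns(sample)
    print(headers)
    for row in rows:
        print(row)
```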