This article originally appeared on Medium.com and has been republished with permission from the author.

Two common benchmarks used in HPC environments are IOR and mdtest, which focus on throughput and filesystem metadata respectively. Both are built on MPI for multi-node processing. Because MPI long predates cloud-native architectures, running these benchmarks in Docker containers presented a challenge. Thankfully, with a few Docker tricks, mostly focused on networking, I was able to build an image that can easily be used to run distributed MPI jobs.

The rest of this article will present the software, storage, and networking challenges I needed to overcome to run IOR/mdtest on a modern Docker environment with Ansible. But why go through this exercise in running older programs on Docker instead of bare metal as they were intended?

- Application isolation in a set of shared physical resources

- Predictable management of library dependencies and software versions

- Portable environment that can easily be moved to a different set of servers

Kubernetes and Docker Swarm offer even more sophisticated capabilities for running a cluster of containers, but they are not required for these benchmarks, so I leave them as future work.

Software Challenges

Building and installing MPI was handled by the great base image by Nikyle Nguyen. Downloading, compiling, and installing IOR inside the image was then as easy as:

RUN git clone https://github.com/hpc/ior.git \
&& cd ior && ./bootstrap && ./configure && make && sudo make install

The rest of my Docker image and scripts are dedicated to connecting storage and networking so that the resulting binaries can be run in a distributed cluster.

Storage Challenges

This section title is misleading; the storage components are straightforward!

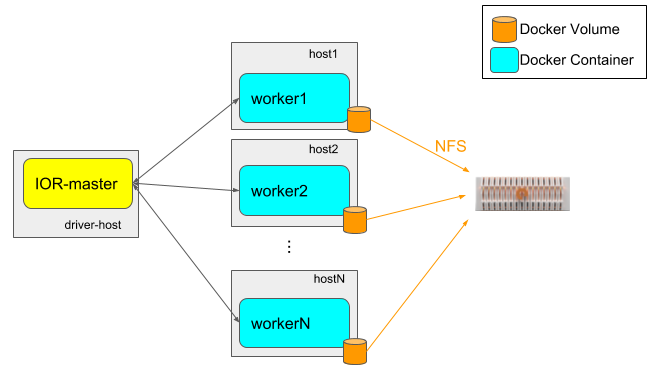

I use the Docker local volume driver to ensure the test NFS filesystem is mounted on each MPI worker container. By using Docker volumes, the dependency on the mount is explicitly encoded, instead of relying on a separate process to ensure the host node mount exists.

docker volume create --driver local --opt type=nfs \
--opt o=addr=$DATAHUB_IP,rw \
--opt device=:/$DATAHUB_FS iorscratch

The local volume driver handles mounting and unmounting with the creation and deletion of the docker volume, but assumes the filesystem ($DATAHUB_FS) has been previously created and configured on the FlashBlade ($DATAHUB_IP). A simple volume mapping when starting each container then attaches this Docker volume to the running container.
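Because the volume definition depends on two environment variables, an unset value would silently produce a broken mount option such as `addr=,rw`. The following is a minimal sketch of a guard that prints the command rather than running it. The function name `volume_create_cmd` and the example address and filesystem name are hypothetical placeholders.

```shell
# Sketch: print (not run) the volume-creation command after checking inputs.
# volume_create_cmd is a hypothetical helper, not part of the original scripts.
volume_create_cmd() {
    vc_ip="$1"   # data address of the NFS server ($DATAHUB_IP in the article)
    vc_fs="$2"   # filesystem name ($DATAHUB_FS in the article)
    if [ -z "$vc_ip" ] || [ -z "$vc_fs" ]; then
        echo "error: both the server address and the filesystem name are required" >&2
        return 1
    fi
    printf 'docker volume create --driver local --opt type=nfs --opt o=addr=%s,rw --opt device=:/%s iorscratch\n' \
        "$vc_ip" "$vc_fs"
}

# Example with placeholder values; pipe the output to sh to create the volume.
volume_create_cmd "10.0.0.10" "iortest"
```

A container would then attach the volume with a mapping such as `-v iorscratch:/scratch`, where `/scratch` is an assumed mount point inside the container.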

Creating the filesystem on the FlashBlade® can be done manually with the GUI or CLI and automated with the REST API. The recreate-test-filesystem.py script demonstrates the process for creating and deleting filesystems via the REST API.

Networking Challenges

As with most applications that I have containerized, the challenges with IOR lie in networking. Specifically, most of the complexity of the Docker image is to enable communication between master and workers. There are multiple challenges: 1) hostfile and IP mappings, 2) correctly exposed ports, and 3) passwordless SSH access between all pairs of nodes.

My objective was to avoid baking any networking details tied to my particular environment into the Docker image so that it is reusable elsewhere.

The topology used consists of:

Master: Single node containing the master container which launches the benchmark on all workers. I launch all scripts from this.

Workers: Multiple physical nodes with a single worker container with access to all CPU/DRAM resources. Workers are defined by an Ansible host group.

How to Set Up Passwordless SSH

In order to coordinate creation and running of an MPI job, we need connectivity between the master and all workers. This seamless connectivity requires us to set up passwordless SSH for communication between any pair of containers.

A word of warning: The resultant container contains secure keys that can be used to access another running instance of the same Docker image. Either secure access to the Docker repository or do not use this approach in a security-sensitive environment!

The first Dockerfile command generates keys and tells the ssh server to allow passwordless connections:

RUN cd /etc/ssh/ && ssh-keygen -A -N '' \
&& echo "PasswordAuthentication no" >> /etc/ssh/sshd_config \
# Unlock for passwordless access. \
&& passwd -u mpi

To configure the client and server sides to have matching keys is more involved:

RUN mkdir -p /home/mpi/.ssh \
&& ssh-keygen -f /home/mpi/.ssh/id_rsa -t rsa -N '' \
&& cat /home/mpi/.ssh/id_rsa.pub >> /home/mpi/.ssh/authorized_keys \
# Disable host key checking and direct all SSH to a custom port. \
&& echo "StrictHostKeyChecking no" >> /home/mpi/.ssh/config \
&& echo "LogLevel ERROR" >> /home/mpi/.ssh/config \
&& echo "host *" >> /home/mpi/.ssh/config \
&& echo "port 2222" >> /home/mpi/.ssh/config

Several things happen here. First, a key pair is generated and its public key is added to the authorized keys, so every instance of the image trusts every other instance. Second, the warnings and prompts that hinder automation are disabled. The last two lines are the most interesting, as they direct the ssh client inside the container to always use port 2222 for outgoing ssh connections. We do this to avoid conflicts between the ssh server running on the Docker host and the one inside the container!
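To see what the client configuration ends up looking like, the four `echo` lines can be replayed against a scratch file. This is only an illustration: it writes to a temporary directory (an assumption made for safety) rather than to /home/mpi/.ssh/config.

```shell
# Replay the four echo commands against a scratch file and show the result.
cfg_dir=$(mktemp -d)
cfg="$cfg_dir/config"
echo "StrictHostKeyChecking no" >> "$cfg"
echo "LogLevel ERROR" >> "$cfg"
echo "host *" >> "$cfg"
echo "port 2222" >> "$cfg"
cat "$cfg"
```

In an ssh client config, settings that appear before the first `host` line apply to every destination, and `host *` also matches every destination, so all four settings are effectively global for the mpi user. Keywords are case-insensitive, which is why the lowercase `host` and `port` work.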

There will now be two SSH ports open on each host: 22 and 2222. Port 22 will continue to be the standard SSH port for the Docker host, while the MPI container running on that host will have a mapping from port 2222 on the host to port 22 inside the container.

-p 2222:22

In this way, we can have two different SSH servers running on the same machine and successfully differentiate between them. This works because the MPI process inside the container always uses port 2222.

Finally, I start the worker containers with the command to run the sshd server process, which will then listen for connections on port 22 inside the container (port 2222 externally).

sudo /usr/sbin/sshd -D

Port Mappings

The previous section describes what is necessary for the control traffic from mpiexec on the master to successfully coordinate all workers, but the MPI processes themselves also need to communicate via custom ports.

We need the MPI binary to use a deterministic port range so that it can be correctly exposed by the Docker container for external access. The slightly magic way to do this with MPICH is by using a lightly documented environment variable, MPIR_CVAR_CH3_PORT_RANGE, inside the container:

ENV MPIR_CVAR_CH3_PORT_RANGE=24000:24100

And then all containers (workers and master) need to be run with the following port mappings exposed:

-p 24000-24100:24000-24100
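The range appears twice, once in the image (colon-separated) and once on the docker command line (hyphen-separated), so the two can drift apart. One way to keep them in sync is to derive the flag from the variable. The helper below is a sketch; `port_mapping_flag` is a hypothetical name.

```shell
# Sketch: turn an MPICH-style "low:high" range into a docker -p flag.
# port_mapping_flag is a hypothetical helper name.
port_mapping_flag() {
    pm_range="$1"
    case "$pm_range" in
        *[!0-9:]*|*:*:*) echo "error: expected low:high, got '$pm_range'" >&2; return 1 ;;
        ?*:?*) ;;  # digits, one colon, digits
        *) echo "error: expected low:high, got '$pm_range'" >&2; return 1 ;;
    esac
    pm_low="${pm_range%%:*}"
    pm_high="${pm_range##*:}"
    if [ "$pm_low" -gt "$pm_high" ]; then
        echo "error: range start exceeds range end" >&2
        return 1
    fi
    printf '%s\n' "-p ${pm_low}-${pm_high}:${pm_low}-${pm_high}"
}

MPIR_CVAR_CH3_PORT_RANGE=24000:24100
port_mapping_flag "$MPIR_CVAR_CH3_PORT_RANGE"
```

Mapping each port to the same number on the host matters here, because the peers connect to whatever port number the MPI process advertises.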

Hostfile Mappings

The hostfile is a simple text file with one hostname or IP address per line for each worker that will participate in the MPI job. In my scenario, I want an Ansible host group to be used by mpiexec. To translate the group into a hostfile, I use ansible-inventory to output each hostname in the $HOSTGROUP group and then dig to find the IP address of each host.

ansible-inventory --list $HOSTGROUP | jq ".$HOSTGROUP.hosts[]" | xargs dig +short +search > hostfile
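A pipeline like this can fail quietly: `dig +short` prints nothing for a name that does not resolve, and prints the intermediate name as well when a host resolves through a CNAME. A small check before launching the job can catch both cases. The sketch below assumes the workers are addressed by IPv4 only; `validate_hostfile` and the sample addresses are hypothetical.

```shell
# Sketch: reject a hostfile that is empty or contains anything but IPv4 addresses.
# validate_hostfile is a hypothetical helper; IPv4-only workers are an assumption.
validate_hostfile() {
    vh_file="$1"
    if [ ! -s "$vh_file" ]; then
        echo "error: $vh_file is missing or empty" >&2
        return 1
    fi
    # Collect every line that is not a dotted-quad address.
    vh_bad=$(grep -Ev '^([0-9]{1,3}\.){3}[0-9]{1,3}$' "$vh_file" || true)
    if [ -n "$vh_bad" ]; then
        echo "error: unexpected hostfile entries:" >&2
        echo "$vh_bad" >&2
        return 1
    fi
    return 0
}

# Example with placeholder addresses.
hostfile_example=$(mktemp)
printf '10.0.0.11\n10.0.0.12\n' > "$hostfile_example"
validate_hostfile "$hostfile_example" && echo "hostfile looks valid"
```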

Then, when running the master container, we inject the hostfile we created so that it can be referenced on the mpiexec command line.

-v ${PWD}/hostfile:/project/hostfile

Note that to volume map a single file correctly, the full (absolute) path of the source file must be specified, which is why ${PWD} is used here.

The MPI jobs are then invoked with the set of workers as follows:

mpiexec -f /project/hostfile -n 1000 mdtest ...
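The process count passed to `-n` is usually chosen relative to the number of workers. As a sketch, it can be derived from the hostfile instead of being hard-coded. The helper name `rank_count` and the figure of 16 processes per host are assumptions for illustration, not values from my runs.

```shell
# Sketch: compute a total process count as (hosts in hostfile) x (processes per host).
# rank_count is a hypothetical helper name.
rank_count() {
    rc_hostfile="$1"
    rc_per_host="$2"
    if [ ! -r "$rc_hostfile" ]; then
        echo "error: cannot read $rc_hostfile" >&2
        return 1
    fi
    # Count non-empty lines; grep exits non-zero when the count is 0.
    rc_hosts=$(grep -c . "$rc_hostfile" || true)
    echo $((rc_hosts * rc_per_host))
}

# Example: three placeholder workers at 16 processes each.
rc_example=$(mktemp)
printf '10.0.0.11\n10.0.0.12\n10.0.0.13\n' > "$rc_example"
rank_count "$rc_example" 16
```

Inside the master container this could be used as `mpiexec -f /project/hostfile -n "$(rank_count /project/hostfile 16)" mdtest ...`.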

Also, the worker containers need a host mapping to be able to connect back to the master. For this, we inject a hostname mapping into each worker container using the Docker option "--add-host":

--add-host=ior-master:$(hostname -i)

With these two approaches for managing host mappings and injecting them into a container at runtime, we avoid the Docker image containing any details specific to one particular networking environment.
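Putting the pieces together, a worker launch might look roughly like the sketch below, which prints the command instead of running it. The image name `my-ior-image`, the container name `ior-worker`, the `/scratch` mount point, and the master address are placeholders of mine. The port, volume, and host-mapping flags are the ones described in this article.

```shell
# Sketch: assemble (and print) a worker launch command from the flags above.
# worker_run_cmd, the image name, the container name, and /scratch are hypothetical.
worker_run_cmd() {
    wr_image="$1"       # image built from the Dockerfile
    wr_master_ip="$2"   # address of the node running the master container
    if [ -z "$wr_image" ] || [ -z "$wr_master_ip" ]; then
        echo "usage: worker_run_cmd IMAGE MASTER_IP" >&2
        return 1
    fi
    printf '%s\n' "docker run -d --name ior-worker \
-p 2222:22 -p 24000-24100:24000-24100 \
-v iorscratch:/scratch \
--add-host=ior-master:${wr_master_ip} \
${wr_image} sudo /usr/sbin/sshd -D"
}

worker_run_cmd "my-ior-image" "10.0.0.5"
```

Everything specific to one environment arrives as an argument or a runtime flag, so the same image can be started unchanged on a different set of servers.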

Summary

I presented here a Dockerfile and script useful for running MPI-based benchmarks IOR and mdtest using Ansible and Docker. As with many Docker problems, most of the challenges lie in enabling communications between different containers.
