Legacy vendors offering Retrofit All-Flash Arrays (AFAs) have been at the forefront of supporting “Big SSDs” – 1.92TB and 3.84TB SSDs over the last couple of years and have now announced support for 15TB SSDs, an impressive 4X increase. Key benefits promoted in these announcements are improved rack density, workload consolidation and overall scale. It is pretty incredible to have 360TB of raw flash now fitting in just 2 rack units and tens of PBs on a single AFA!

Will Big SSDs alone unlock the full value of flash for your business?

Let’s dig a little deeper into this question and examine a few considerations, one at a time.

Currently, a very small portion of the market requires the raw capacity of Big SSD systems. AFA systems with data reduction tend to be smaller in raw capacity than their disk counterparts. Our experience and market analysis from industry analysts suggest that only 5% of the AFA market today consists of arrays over 200TB raw. As with any AFA, and especially with Big SSDs, customers will want dual-drive failure protection to avoid data loss exposure. Assuming a reasonable RAID stripe width, the minimum viable AFA configuration with 15TB SSDs is 180TB of raw capacity (12 x 15TB SSDs). This would seem to exceed the size requirements of 95% of the AFA market.

Also, Big SSDs can reduce the reliability of these systems. A 180TB AFA configuration built from 15TB SSDs contains just 12 drives, which dramatically increases the exposure when one of those SSDs fails. A single Big SSD is roughly 8% of this system (1 of 12 drives), so upon an SSD failure a significant amount of capacity is lost and a lot of data needs to be rebuilt. In proportion, this is like losing more than 80 drives simultaneously on a 1,000-drive disk array!
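The proportions behind this comparison can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes equal-sized drives and uses the 12 x 15TB configuration quoted above, and the function name `failure_exposure` is invented for this example. One drive in twelve works out to about 8.3% of raw capacity, in the same ballpark as the round figures used in the text.

```python
def failure_exposure(drive_count: int, failed: int = 1) -> float:
    """Fraction of raw capacity lost when `failed` equal-sized drives fail."""
    if drive_count <= 0 or not 0 <= failed <= drive_count:
        raise ValueError("need drive_count > 0 and 0 <= failed <= drive_count")
    return failed / drive_count


# Configuration taken from the text: 12 drives of 15TB each.
DRIVES = 12
DRIVE_TB = 15

raw_tb = DRIVES * DRIVE_TB
exposure = failure_exposure(DRIVES)

# Scale the same fraction to a 1,000-drive disk array for comparison.
equivalent_on_1000 = round(exposure * 1000)

print(f"raw capacity: {raw_tb} TB")                      # raw capacity: 180 TB
print(f"lost per failed drive: {exposure:.1%}")          # lost per failed drive: 8.3%
print(f"equivalent on a 1000-drive array: about {equivalent_on_1000} drives")
```

The same function shows why smaller drives soften the blow: the more drives it takes to reach a given raw capacity, the smaller the fraction any single failure removes.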

So, what about the 5% of the market that wants Big SSD systems?

Are Big SSDs inside Retrofit AFAs well suited for large-capacity configurations? The short answer is no!

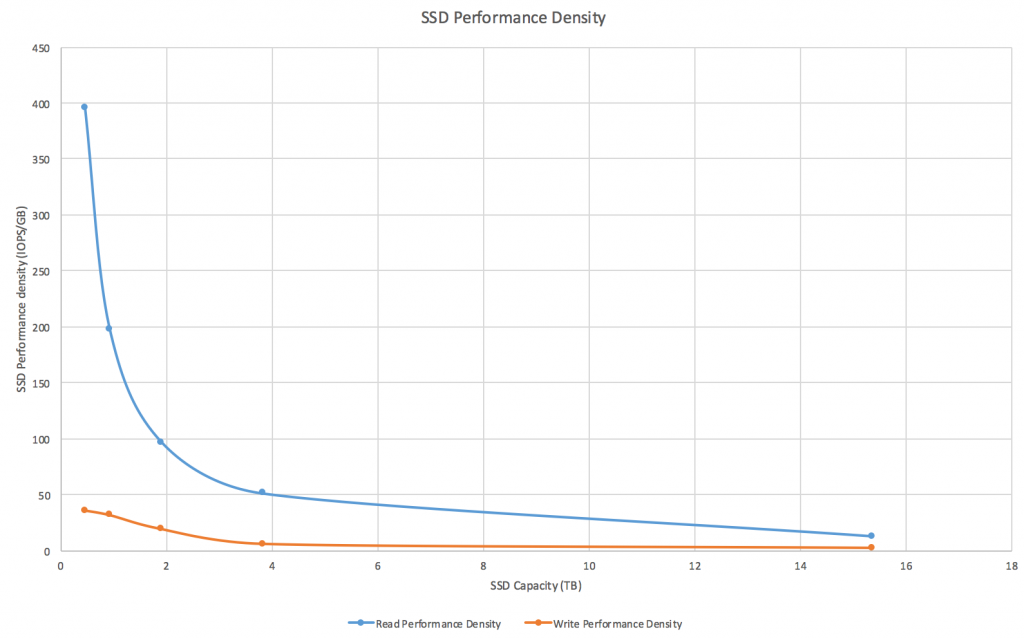

AFAs, especially in larger configurations, are typically deployed to accelerate and consolidate mission-critical application workloads. While rack density is important, delivering high and consistent all-flash performance is the bedrock for enabling massive workload consolidation. Yet a Big SSD performs about the same as a smaller SSD, so its performance per unit of capacity (performance density, measured in IOPS/GB) is lower, as the graph illustrates.

Lower performance density increases the likelihood of performance variability as the SSD capacity is consumed. Gaining rack density should not come at the expense of performance density.
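To make the performance density argument concrete, here is a minimal sketch of the IOPS/GB calculation. The per-drive IOPS figure is a made-up placeholder, not a measured or vendor-quoted number; the only assumption carried over from the text is that a Big SSD delivers roughly the same IOPS as a smaller one.

```python
# Hypothetical per-SSD IOPS, assumed identical for every capacity point.
# This is an illustrative placeholder, not a benchmark result.
ASSUMED_IOPS_PER_SSD = 100_000


def performance_density(iops: float, capacity_gb: float) -> float:
    """Performance density in IOPS per GB of raw capacity."""
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    return iops / capacity_gb


# Capacity points mentioned in the text, expressed in GB.
ssd_sizes_gb = {"1.92TB": 1920, "3.84TB": 3840, "15TB": 15000}

for label, capacity_gb in ssd_sizes_gb.items():
    density = performance_density(ASSUMED_IOPS_PER_SSD, capacity_gb)
    print(f"{label:>7}: {density:6.1f} IOPS/GB")
```

Whatever IOPS value is plugged in, the ratio between the rows stays the same: with constant per-drive performance, density falls in direct proportion to capacity.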

Retrofit AFAs are 20-year-old architectures reconfigured with all-flash media. Originally built for disk, these architectures lack a rich, always-fast metadata architecture, with the metadata speed and granularity needed to implement advanced data reduction and flash management. In the absence of compelling data reduction, Retrofit AFAs are often forced to drive rack density gains by seeking to support Big SSDs.

At Pure, our strategy is to deliver all-flash with purpose-built hardware and software: purpose-built AFAs that scale down and scale up without making a customer choose between rack density and performance density (IOPS/GB).

We custom-designed Flash Modules for FlashArray, based upon industry-standard consumer MLC and 3D TLC SSDs. Each Flash Module combines the power of two SSDs in the same space as a typical 2.5” drive, so you get double the capacity with double the performance per drive slot compared to competitors’ single drives of the same capacity. Coupling the density of our 3.8TB Flash Modules with Pure’s industry-leading data reduction, which averages 5.2-to-1 across our install base, means that we’re delivering nearly the effective capacity of 15TB SSDs, at the performance density of 1.92TB SSDs. You get the rack density advantage without trading off performance density (IOPS/GB).
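The effective-capacity claim is simple multiplication, sketched below using the 3.8TB module size and the 5.2-to-1 install-base average quoted above. Data reduction varies by workload, so treat the result as an average-case illustration; the function name and the break-even calculation are additions for this example, not figures from Pure.

```python
def effective_tb(raw_tb: float, reduction_ratio: float) -> float:
    """Effective capacity after data reduction (ratio of 5.2 means 5.2-to-1)."""
    if raw_tb < 0 or reduction_ratio <= 0:
        raise ValueError("raw_tb must be >= 0 and reduction_ratio must be > 0")
    return raw_tb * reduction_ratio


# Figures quoted in the text.
MODULE_RAW_TB = 3.8
AVERAGE_REDUCTION = 5.2
BIG_SSD_RAW_TB = 15

module_effective = effective_tb(MODULE_RAW_TB, AVERAGE_REDUCTION)

# Reduction ratio a 15TB SSD would need to match one module per slot.
break_even_ratio = module_effective / BIG_SSD_RAW_TB

print(f"effective capacity per Flash Module: {module_effective:.2f} TB")
print(f"break-even reduction on a 15TB SSD:  {break_even_ratio:.2f}-to-1")
```

How the two options compare in practice depends on the reduction ratio actually achieved on each array, which is why it is left as a parameter here.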

So, is there a future for Big SSDs in the Pure Storage FlashArray?

Of course! However, at the 15TB SSD size, and potentially at larger capacities in the future, we believe that there is too much flash locked away as a black box behind an ordinary SSD controller. Inside an SSD there are effectively 64 small drives that could be driven in parallel if they were not constrained by SATA/SAS protocols. To use the flash effectively at higher NAND densities, we need to break through the old disk access methods and gain direct access and visibility to NAND. This enables centralized, system-level decisions to optimize performance, efficiency and economics, thereby delivering both rack and performance density.

NVMe is a technology that promises to unlock the full value of flash. It offers parallel access and direct visibility into NAND. We had this foresight and designed dual-fabric connectivity (SAS and PCIe/NVMe) into FlashArray//m from the start. We also invested in developing dual-ported, hot-pluggable NV-RAM Modules that connect via NVMe within the FlashArray//m. And we have gone even further in our recently announced FlashBlade product, where we have invested in internal software that communicates directly with NAND flash and performs all flash management. The benefit: performance density is not compromised while rack density increases.

With closely coupled, flash-optimized hardware and software, we’re just getting started in exploiting the advantages of flash. Best of all, Evergreen™ Storage means that customers will have a non-disruptive path to those advantages, including future gains in rack density and performance density. Interested in learning more? Stay tuned!