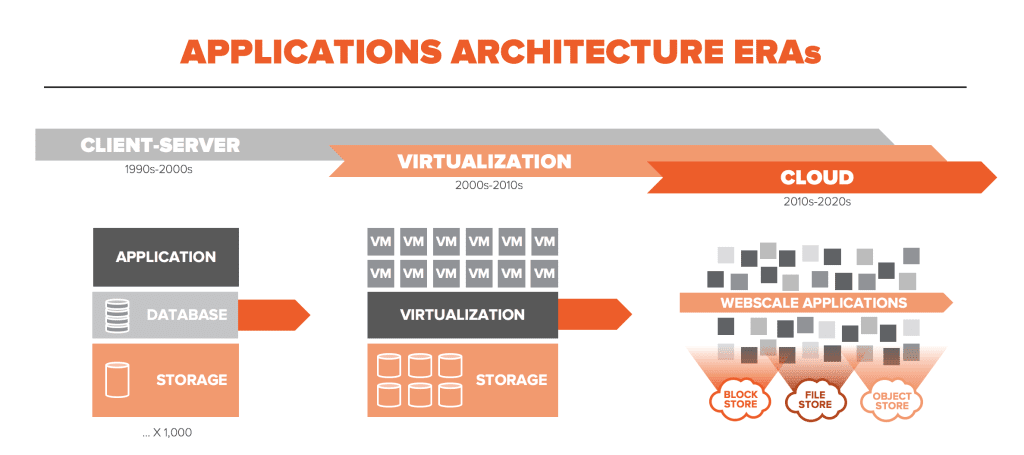

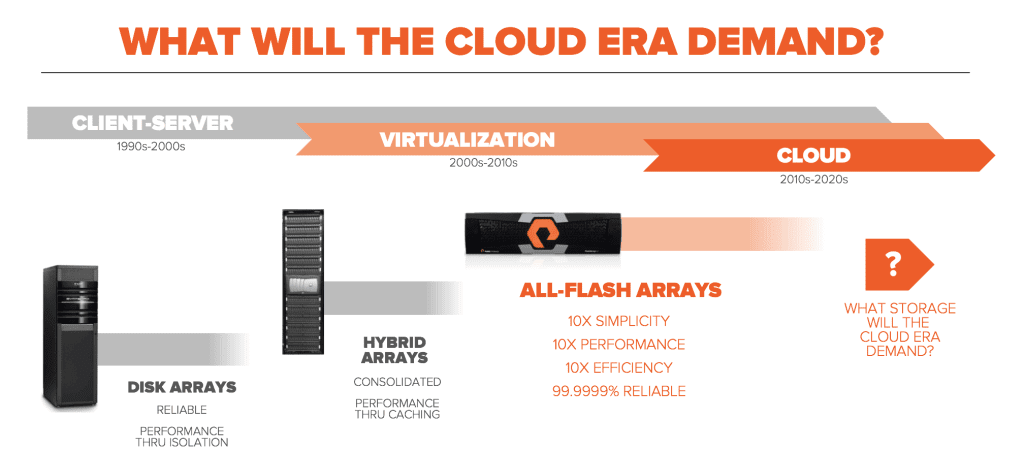

At Pure, innovation is Job 1. When we started in 2009, we saw an incredible opportunity to rethink storage for the era of virtualization using flash. Virtualization was increasingly prevalent and driving new levels of mixed IO, and flash was emerging as the obvious solution.

But at the time, most vendors weren’t architecting for the true potential of flash as a mainstream storage medium. Companies like Violin and Fusion-IO were focused on building exotic flash “race cars,” and disk vendors like EMC (now subsumed into Dell) and NetApp were convinced that flash was just a caching tier in the architecture. So we created a new recipe for flash – combining consumer-grade flash with sophisticated, purpose-built storage software to drive down cost and deliver enterprise-class resilience – thereby creating the all-flash array (AFA) category. While it’s been fun leading the AFA transformation, a new era is upon us – the Cloud Era – and it’s time to update the recipe once again.

New Needs, New Ingredients

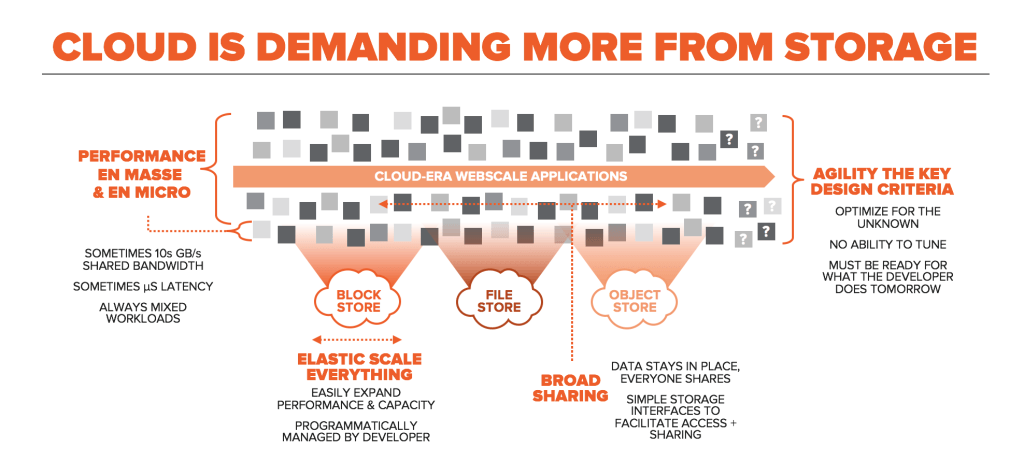

Cloud applications are different, and they require more from storage. When a developer builds a web-scale application at a public cloud provider, they program to a well-defined set of storage services: block, file, and object. These services are assumed to be endless and elastic, to facilitate broad sharing of data, and to be easy to automate and integrate into the application itself.

Cloud-era applications are far more distributed than their predecessors. Facebook has remarked that its “East/West” traffic between machines can be 1000-fold that of the “North/South” traffic between end user and machine. Programming models have necessarily evolved to make distributed systems more efficient. Remote direct memory access (RDMA), for example, can double CPU performance and quadruple memory bandwidth. NVMe, both within the storage system and transported over fabrics (NVMe over Fabrics, or NVMeF; think RDMA + NVMe), will provide a similar leap forward for data storage, especially when coupled with ubiquitous fast Ethernet (now growing to 25/50/100 Gb/s speeds). NVMe allows each CPU core within a storage array to have a direct and dedicated queue to each flash device, and NVMeF allows data stored on remote systems to be accessed more efficiently than local storage (the local system is doing less work). By extending intra-server protocols across the network, RDMA and NVMeF are expanding the notion of locality from the server chassis to the LAN.
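To make the per-core queue idea concrete, here is a minimal toy model in Python. It is a sketch under stated assumptions, not a driver and not Pure's implementation: the class and method names (`QueuePair`, `NvmeStyleArray`, `submit`, `process`) are hypothetical. In real NVMe, each submission/completion queue pair is a circular buffer in host memory signaled through doorbell registers, and the specification permits up to roughly 64K I/O queues. The point the model shows is structural: every (core, device) combination owns its own queue pair, so no two cores ever contend for the same queue.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class QueuePair:
    """Hypothetical stand-in for one NVMe submission/completion queue pair."""
    core: int
    device: int
    submission: deque = field(default_factory=deque)
    completion: deque = field(default_factory=deque)


class NvmeStyleArray:
    """Toy model (assumption): one dedicated queue pair per (core, device),
    so cores never share, and therefore never lock, a queue when issuing I/O."""

    def __init__(self, num_cores: int, num_devices: int):
        self.queues = {
            (core, device): QueuePair(core, device)
            for core in range(num_cores)
            for device in range(num_devices)
        }

    def submit(self, core: int, device: int, command: str) -> None:
        # A core only ever touches its own queue for this device.
        self.queues[(core, device)].submission.append(command)

    def process(self) -> None:
        # Device side: drain each submission queue and post a result
        # to the completion queue paired with it.
        for qp in self.queues.values():
            while qp.submission:
                cmd = qp.submission.popleft()
                qp.completion.append(("done", cmd))


if __name__ == "__main__":
    array = NvmeStyleArray(num_cores=4, num_devices=2)
    array.submit(3, 0, "write lba 8")
    array.process()
    print(len(array.queues), list(array.queues[(3, 0)].completion))
```

For example, a 4-core controller with 2 drives gets 8 independent queue pairs, and a command issued by core 3 to drive 0 only ever passes through queue pair (3, 0). A single shared queue, by contrast, would force all cores to serialize on one structure.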

If one wants to understand more about how these technologies are being assembled to create cloud-era storage, one can look no further than the hyperscale cloud providers themselves. A common misconception about the cloud is that it runs on hyper-converged, software-defined direct-attached storage (DAS) infrastructure. In actuality, the hyperscalers leverage a multi-tier architecture (separating data from compute for greater efficiency), and combine custom software with specialized hardware to create multiple highly optimized and independent compute and data tiers within their infrastructure. Reflecting this architectural shift, Facebook has released Open Compute Project specifications for an NVMe JBOF (Just a Bunch of Flash) appliance called Lightning. Azure has publicly talked about its heavy use of FPGAs in its infrastructure, and AWS purchased stealth startup Annapurna Labs, focused on silicon for NVMe. If you’ve rented a VM, used a database service, or instantiated a block store recently in the public cloud using flash, then you’ve likely already used RDMA, NVMeF, and NVMe flash.

A New Recipe for Cloud-Era Flash

We’re all-in on leveraging these ingredients to create a new recipe for flash for the cloud era. Doing so requires building an engineering organization that can innovate from software to silicon, deeply integrating all the layers to make complex technology simple.

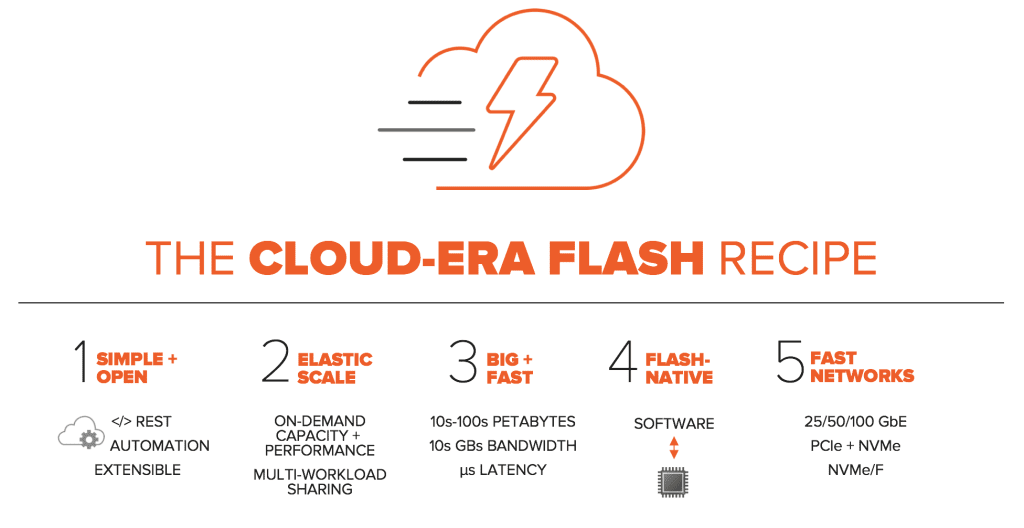

We believe flash for the cloud era will be defined by five key principles:

First, the cloud demands simplicity. To deliver true infrastructure as code, you must automate, and you can’t automate what’s complex. This next generation will be automation-centric, to make storage a natural extension of the application.

Second – cloud-era storage scales elastically. This means we need to create an experience for developers that is just like the public cloud – block, file, and object storage services that simply feel endless.

Third – the cloud demands new levels of big and fast: tens to hundreds of petabytes of storage, tens of GB/s of bandwidth, microsecond latency, and the ability to share this performance broadly between application microservices without copying data.

And finally – we believe there are two key technical underpinnings of this next generation: Storage intelligence must be flash-native. In the early AFA recipe, we started by purpose-building storage software for flash, but in the next generation we need to push forward to integrate this intelligence with the flash directly to get to the next level of performance and density. And the last piece is fast networks running fast protocols. There’s no room in the cloud era for legacy disk protocols like SCSI, and we must design to assume a world of fast interconnects, RDMA, and NVMe.

It’s these last two bits that we expect legacy vendors, who have been focused on retrofitting flash to 20+ year-old storage systems, to stumble over. Retrofits can’t make disk-era software speak natively to flash, and can’t exploit the massive parallelism of these new protocols without purpose-building the software and hardware for it. We expect other vendors to adopt NVMe in some form, but ultimately, we view technologies like NVMe, much as we viewed flash, as an enabler – one key ingredient in delivering a generational advance.

FlashBlade: We’ve Already Begun

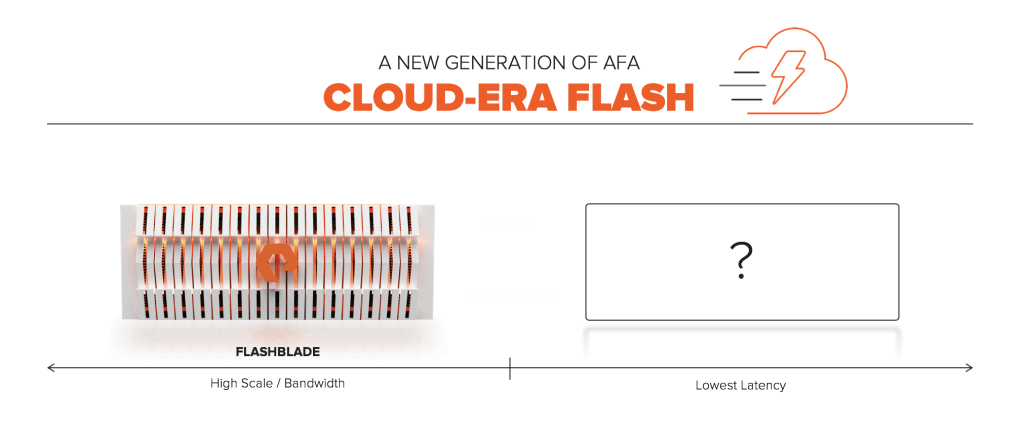

Although the recipe is new, you can already see it in action at Pure Storage. Last year, we announced FlashBlade, the first all-flash array designed from the ground up to tackle cloud-era unstructured data use cases.

We built FlashBlade from three simple ingredients: flash, scale-out software, and fast networking (both 40 Gb/s Ethernet and PCIe). Today, it’s resetting the bar for performance (>15 GB/s of bandwidth), density (1.5 PB in 4 rack units), and economics for big data. FlashBlade has already won some of the most data-intensive workloads in the industry: vehicle automation, genomic sequencing, facial recognition, rocket simulation, textual analysis, software development, chip design, targeted advertising, geophysics, and more. Moreover, FlashBlade has allowed multiple customers to consolidate 20 or more racks (think refrigerator-sized) of legacy storage down to a single 4U FlashBlade (about the size of a microwave), yielding huge power and space savings.

FlashBlade is designed for when big + fast matters, and in particular when high bandwidth over a very large, multi-PB data set matters. That describes many cloud-era workloads, but not all. Other workloads need the very lowest latency – think fast transactions, index lookups, or real-time interaction with users. For those, we have something new on the horizon.

Join us tomorrow at 10am Pacific Time for our launch event – and see how we apply what we’ve learned with FlashBlade to create a new recipe for low-latency cloud-era block storage.

Delivering the Data Platform for the Cloud Era

At Pure, our mission is simple – we help our customers put their data to work to drive innovation. This means bringing their businesses three attributes critical to succeeding in their own digital transformations:

- Speed: we help customers be faster, everywhere, by democratizing flash and enabling it to run every business process and speed every innovation project;

- Insight: we help customers gain insight from data at scale, bringing analytics to real-time and changing what is possible when you can look at all your data at once;

- Agility: we help simplify hybrid cloud and empower developers, making it possible for CIOs to leverage the best of both cloud worlds and to deliver on-demand infrastructure to their developers.

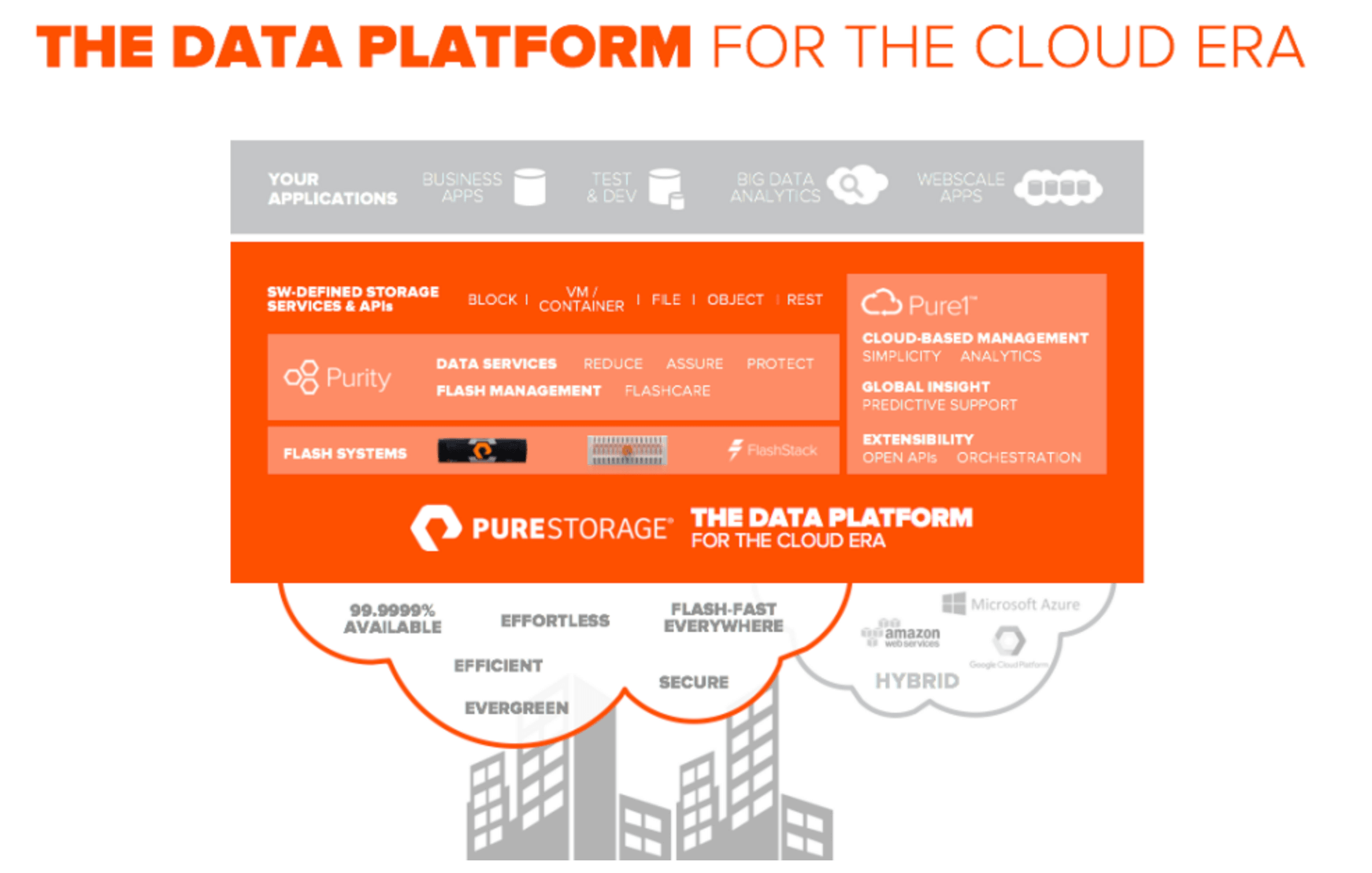

We do this by delivering the Data Platform for the Cloud Era – a well-integrated set of software-defined data services (block, file, object, and VM/container), delivered by our flash systems, software, and cloud-based management, that together enable our customers to put their data to work to gain business advantage.

Our Data Platform is meant to accelerate everything – from traditional databases and business applications to big data analytics to web-scale applications – because you have it all. Our Data Platform is built on software: the Purity Operating Environment, which takes the flash in our systems and turns it into software-defined storage services, ready to host your applications. And our Data Platform is driven by APIs and managed from the cloud with Pure1 – a whole new level of simplicity and sophistication. In the coming months you’ll hear a lot more about our Data Platform – both what it can do for you today, and where we’re taking it tomorrow.

Getting Storage’s Mojo Back

It’s been a tumultuous, change-driven decade for storage – but a sea change is underway. Storage has rapidly become one of the most critical enablers of innovation in the new cloud-era stack. Legacy vendors who took incumbency for granted and didn’t invest in innovation are being pushed aside, and technology change, coupled with innovative new entrants, is bringing the mojo back to the storage industry. And the vendors who are innovating are being rewarded, which funds more innovation, in a self-sustaining cycle.

Hopefully this post gives you a sense of how Pure is innovating, why we’re winning, and what the next decade will bring. We’re excited to see the transformation of all-flash solutions to meet the needs of the cloud era, and we’re investing to lead that transformation. Tune in tomorrow to see what’s next!