Introducing vSphere Virtual Volumes

Cloud-Era Flash: “The Year of Software” Launch Blog Series

- The Biggest Software Launch in Pure’s History

- Purity ActiveCluster – Simple Stretch Clustering for All

- FlashBlade – Now 5X Bigger, 5X Faster

- Fast Object Storage on FlashBlade

- Purity CloudSnap – Delivering Native Public Cloud Integration for Purity

- Simplifying VMware VVols – Storage Designed for Your Cloud (THIS BLOG)

- Purity Run – Opening FlashArray to run YOUR VMs & Containers

- Extending our NVMe Leadership: Introducing DirectFlash Shelf and Previewing NVMe/F

- Windows File Services for FlashArray: Adding Full SMB & NFS to FlashArray

- Policy QoS Enables Multi-Tier Consolidation

- Introducing Pure1 META: Pure’s AI Platform to enable Self-Driving Storage

—

This is a blog post I have been waiting to write for quite some time. I cannot even remember exactly how long ago I saw Satyam Vaghani present this as a concept at VMworld, back when what is now called a protocol endpoint (more on that later) was called an I/O Demultiplexer. A mouthful for sure. Finally it’s time! With pleasure, I’d like to introduce VVols on the FlashArray!

Virtual Volumes solve a lot of the problems VMware and/or storage admins face today. The central problem is one of granularity. If I want to leverage array-based features, I don’t have the option to apply them at a VMDK or even VM level. I set them on a volume (a VMFS datastore), so it is all or nothing for every VM on that VMFS. If I want to replicate (for instance) a single VM, I have to either replicate the volume and everything else on it, or move the VM to a datastore that is replicated.

But how do I know that volume is replicated? How do I make sure that volume STAYS replicated?

Well, with VMFS you can use plugins and extra software to handle this. But that intelligence still isn’t built into VMware, and once again the granularity isn’t particularly useful.

So then comes VVols!


What are Virtual Volumes?

At a basic level, VVols provide virtual disk granularity at the array level. They allow you to create, provision, manage, and report on your VMs and their virtual disks at a VM or virtual disk level. But in reality, VVols mean much more than that. They mean:

- VM granularity. Of course.

- Storage Policy Based Management

- Direct and standardized integration from an array level to vCenter

- Tighter control and reporting on your VMware infrastructure

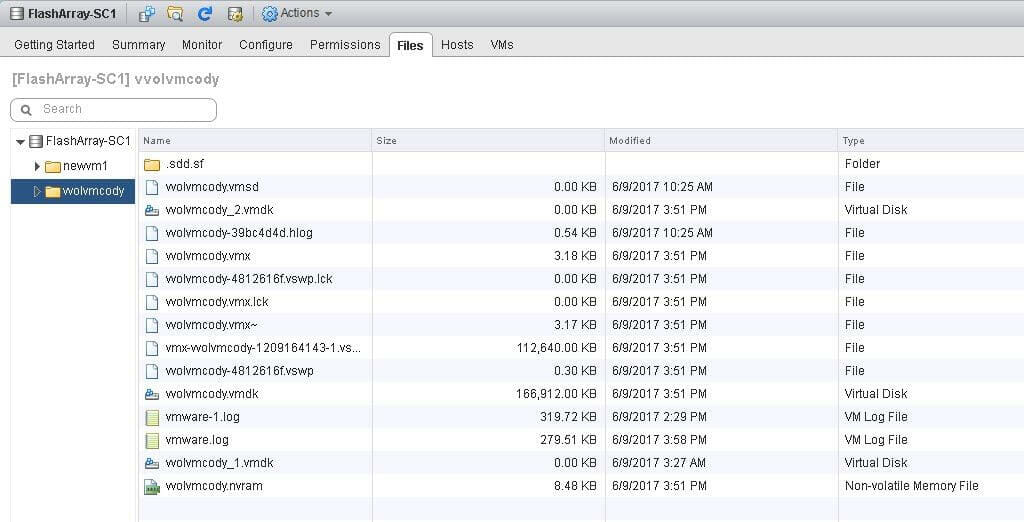

First off, what is a VVol? A VVol is a standard SCSI volume, just like an RDM in a sense, except it goes through the vSCSI stack of ESXi. This grants you the ability to use things like Storage vMotion, SDRS, SIOC, and other virtual storage features in vSphere without sacrificing efficient use of array-based features. There are a few types of VVols. The main three are:

- Config VVol--every VM has one of these and it holds the VMX file, logs, and a few other descriptor files. 4 GB in size.

- Swap VVol--every VM has a swap VVol that is created automatically upon power-on of the VM and deleted upon power-off.

- Data VVol--for every virtual disk, there is a respective volume on the array that is created. Different configurations and policies can be applied to each one.
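Here is a minimal Python sketch of how these three types add up for a single VM. It is a simplified model, not a Pure or VMware API. `VVol` and `vvols_for_vm` are hypothetical names. Only the 4 GB config size comes from the text; swap and data sizes are left out.

```python
from dataclasses import dataclass
from typing import List, Optional

CONFIG_VVOL_GB = 4  # config VVol size stated above

@dataclass(frozen=True)
class VVol:
    kind: str                       # "config", "swap", or "data"
    name: str
    size_gb: Optional[int] = None   # only the config size is given in the text

def vvols_for_vm(vm_name: str, disk_count: int, powered_on: bool) -> List[VVol]:
    """Return the VVols a VM would have in this simplified (hypothetical) model."""
    # Every VM has exactly one config VVol.
    vvols = [VVol("config", f"{vm_name}-config", CONFIG_VVOL_GB)]
    # One data VVol per virtual disk.
    vvols += [VVol("data", f"{vm_name}-disk{i}") for i in range(disk_count)]
    # The swap VVol exists only between power-on and power-off.
    if powered_on:
        vvols.append(VVol("swap", f"{vm_name}-swap"))
    return vvols

off = vvols_for_vm("web01", disk_count=2, powered_on=False)
on = vvols_for_vm("web01", disk_count=2, powered_on=True)
print([v.kind for v in off])  # ['config', 'data', 'data']
print([v.kind for v in on])   # ['config', 'data', 'data', 'swap']
```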

What a VVol looks like, and how it is reported, depends to a certain point on the underlying array. On the FlashArray, it is no different from any other volume, so it can be used with the features you use today and managed in the same way. The only major difference between a VVol and a “regular” volume (like one hosting a VMFS) is how a host addresses it. A “regular” volume is presented with a normal LUN ID, say 255. A VVol is a sub-LUN addressed through a primary volume, so it would be 255:4, for instance.
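The addressing difference fits in a few lines of Python. This is a hypothetical sketch: `LunAddress` is not a real API type, and the two addresses reuse the example numbers above as alternatives, not as two volumes on one host. The `255:4` string mirrors the notation in this post, not the output of any particular tool.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class LunAddress:
    lun_id: int                        # LUN ID of the volume presented directly to the host
    sub_lun_id: Optional[int] = None   # set only for a VVol reached through a primary volume

    @property
    def is_vvol(self) -> bool:
        return self.sub_lun_id is not None

    def __str__(self) -> str:
        # Use the "primary:secondary" notation from the text for sub-LUNs.
        if self.sub_lun_id is None:
            return str(self.lun_id)
        return f"{self.lun_id}:{self.sub_lun_id}"

regular = LunAddress(255)
vvol = LunAddress(255, 4)
print(str(regular), regular.is_vvol)  # 255 False
print(str(vvol), vvol.is_vvol)        # 255:4 True
```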

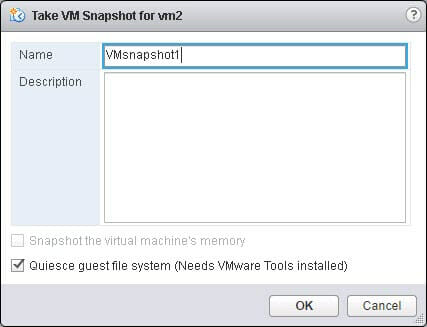

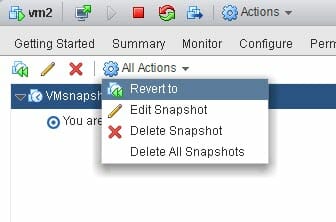

Snapshots

Now that we have virtual disk granularity on the array, we can ditch VMware snapshots. VMware snapshots do well in a pinch, but they aren’t meant to be kept long term. They can also hurt performance, both while they exist and during consolidation when they are deleted. Since we have this granularity and the connection through VASA, we can use FlashArray snapshots instead!

Benefits of FlashArray snapshots are:

- Created instantly, no matter how large the volume

- 100% deduped when created, so no capacity consumption upon creation.

- No performance impact. They are not copy-on-write or redirect-on-write, so they will not affect the performance of the VM when created or throughout their life

See the difference in a great post by Cormac here:

So now when you click on a VM that uses VVols and choose create snapshot, it actually creates a FlashArray snapshot.

If you have VMware Tools installed, you can have it quiesce the guest filesystem before taking the FlashArray snapshot. That gives you the benefit of per-virtual disk granularity AND file system consistent snapshots.

There are also two “types” of snapshots. The first is a managed snapshot. This is a snapshot that VMware “knows” about, in other words one created via VMware (whether that be the GUI, PowerCLI, etc.). The second is an unmanaged snapshot. This is a snapshot that VMware doesn’t “know” about, essentially one that was created directly on the array. Both can be used to restore from. VMware only knows about the managed ones, while the FlashArray knows about both managed and unmanaged snapshots.
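A tiny model of who sees what, assuming each snapshot is tagged by whether it was created through VMware. `Snapshot`, `vmware_visible`, and `array_visible` are hypothetical names used for illustration only.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Snapshot:
    name: str
    managed: bool  # True if created through VMware (GUI, PowerCLI, ...)

def vmware_visible(snaps: List[Snapshot]) -> List[str]:
    """vCenter only knows about managed snapshots."""
    return [s.name for s in snaps if s.managed]

def array_visible(snaps: List[Snapshot]) -> List[str]:
    """The FlashArray knows about managed and unmanaged snapshots alike."""
    return [s.name for s in snaps]

snaps = [Snapshot("before-patch", managed=True), Snapshot("array-hourly-0100", managed=False)]
print(vmware_visible(snaps))  # ['before-patch']
print(array_visible(snaps))   # ['before-patch', 'array-hourly-0100']
```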

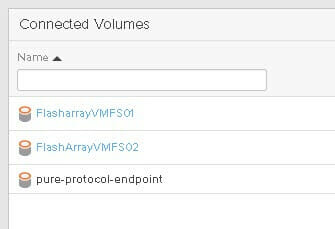

Protocol Endpoints

So what is this primary volume? It is called a protocol endpoint. A PE is essentially a mount point for a VVol. This is the only volume you need to manually provision to an ESXi server. Multipathing is configured on this volume, as are things like queue depth limits. On the FlashArray, one protocol endpoint is automatically created when the Purity Operating Environment is upgraded to the version (5.0) that includes VVols. This volume is called “pure-protocol-endpoint”.

You can connect this PE to the hosts or host groups to which you plan to provision VVols. When a new VM (or a new virtual disk) is created, the VVols are created and the array “binds” them to the appropriate PE that is connected to that host.

Do we support more than one PE? Yup. Do you need more than one PE on the FlashArray? Like, ever? I would say a pretty emphatic no. The auto-created one should suffice for all hosts. Look for a blog in the near future where I will go into more detail on that.
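Binding can be pictured as a lookup. Find a PE connected to the host that will run the VM, then associate each VVol with it. The sketch below makes that assumption. `bind` and the dictionary layout are hypothetical, and only the default PE name `pure-protocol-endpoint` comes from the text. The real binding is performed by the array through VASA.

```python
from typing import Dict, Set

def bind(vvol: str, host: str, pe_connections: Dict[str, Set[str]],
         bindings: Dict[str, str]) -> str:
    """Bind `vvol` to a protocol endpoint connected to `host`.

    pe_connections maps PE name -> hosts the PE is connected to.
    bindings maps VVol name -> PE name and is updated in place.
    """
    for pe in sorted(pe_connections):  # deterministic choice if several PEs qualify
        if host in pe_connections[pe]:
            bindings[vvol] = pe
            return pe
    raise LookupError(f"no protocol endpoint is connected to host {host!r}")

pe_connections = {"pure-protocol-endpoint": {"esxi-01", "esxi-02"}}
bindings: Dict[str, str] = {}
print(bind("web01-disk0", "esxi-01", pe_connections, bindings))  # pure-protocol-endpoint
try:
    bind("web01-disk1", "esxi-03", pe_connections, bindings)
except LookupError as err:
    print(err)  # no protocol endpoint is connected to host 'esxi-03'
```

This is why a single PE connected to every host in a cluster is enough: every VVol finds the same endpoint regardless of which host runs the VM.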

Storage Containers

So with no VMFS anymore, what are you choosing when you provision a VM? What are you choosing from if there is no more datastore?

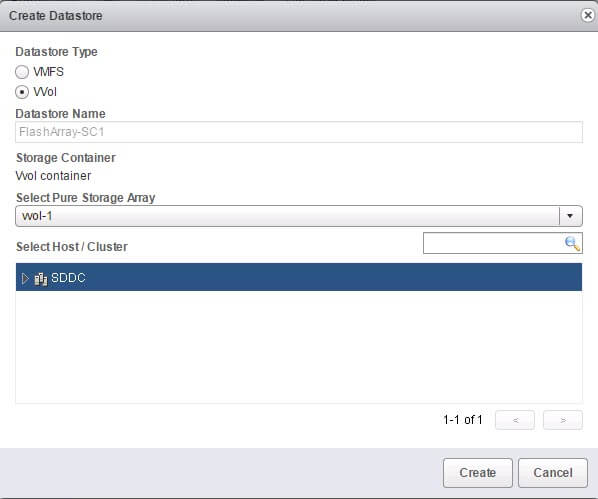

A very nice aspect of VMware’s VVol design is that it does not change the look, feel, and experience of VM provisioning and management too much. VMware admins don’t really need to learn new processes to use VVols instead of VMFS. With VVols there still is a datastore; it is just not a filesystem or a physical object. A VVol datastore, also called a storage container, is what you choose now. A storage container doesn’t really have configurations applied to it. How a VVol is configured is based on the capabilities assigned to it in a VMware storage policy. More on that later.

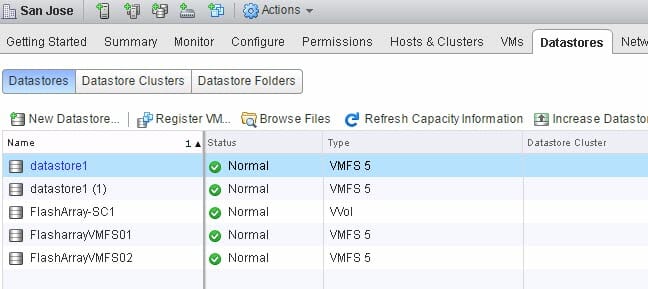

A storage container represents one array and one array only; this is a requirement of the VMware VVol design.

Currently the FlashArray auto-creates your storage container, which defaults to 64 TB, though this size is configurable. It is important to note that at present we only support one storage container per FlashArray. This generally should not be a big deal, because array-based features are applied at the VVol level, not the datastore level. The past concerns that drove people to create multiple datastores (replication granularity, snapshot granularity, restores, reporting, etc.) are exactly that: things of the past.

That being said, we will be introducing multiple storage container support in the near future. We decided to delay it mainly because we have a feature coming out a bit later than VVols that will fit nicely with the storage container object type.

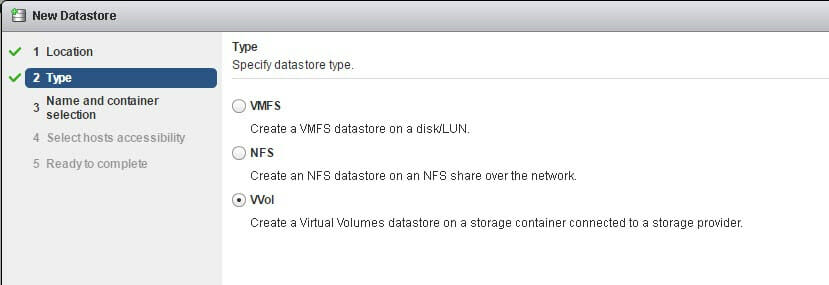

Note that an SC shows up like a datastore and is mounted using the same process as a VMFS: through the add storage wizard.

Lastly, you can still navigate an SC just like a VMFS if you want to. An SC is basically an abstraction of your VVol elements and relationships that “fakes” a file system.

VASA Provider

So that takes care of the physical connectivity and datastores, but what about communication between vCenter and the FlashArray? Well this is where VASA comes in. The vSphere API for Storage Awareness (VASA) was introduced back in vSphere 5.0 and provided some basic communication between an array and VMware to indicate how a VMFS volume was configured (RAID level, tiering, replication etc).

A few vendors supported VASA 1.0 and very few customers actually used it (in my experience). In vSphere 6.0, VASA 2.0 was released. This included the initial release of VVols (version 1). In vSphere 6.5, VASA version 3.0 was released and VVols version 2.0 came with it. The main difference between VVols 1.0 and VVols 2.0 is replication. Before VVols 2.0, array-based replication was not supported by VMware. You could technically use array-based replication if the array supported it, but there was no good way to actually implement disaster recovery, because it wasn’t in the VASA specification. This missing feature slowed down VVol adoption for version 1 quite a bit.

VASA 3.0 adds specifications for replication and SDKs for DR of replicated virtual volumes. See the post below about using PowerCLI for DR automation of VVols:

The FlashArray VASA Provider is version 3, so to deploy the FlashArray VVol implementation you need to be on vSphere 6.5. There are quite a few benefits to 6.5, so I think moving to it as soon as possible is a good idea regardless.
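Deployment tooling could encode this requirement as a simple pre-flight check. This is a minimal sketch, assuming dotted numeric version strings. The function and constant names are hypothetical.

```python
from typing import Tuple

# Minimum vSphere release for the FlashArray VASA 3.0 provider, per the text.
MIN_VSPHERE_FOR_FLASHARRAY_VVOLS = "6.5"

def parse_version(version: str) -> Tuple[int, ...]:
    """Turn "6.5" or "6.5.0" into a tuple of ints for ordered comparison."""
    return tuple(int(part) for part in version.split("."))

def vsphere_supports_flasharray_vvols(vsphere_version: str) -> bool:
    return parse_version(vsphere_version) >= parse_version(MIN_VSPHERE_FOR_FLASHARRAY_VVOLS)

for v in ["6.0", "6.5", "6.5.0"]:
    print(v, vsphere_supports_flasharray_vvols(v))
# 6.0 False
# 6.5 True
# 6.5.0 True
```

Comparing integer tuples rather than raw strings avoids surprises such as "6.10" sorting before "6.5". The check only encodes the minimum stated here. Whether a particular later release is supported is outside the scope of this post.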

The FlashArray VASA service has the following architectural features:

- Runs inside of the FlashArray controllers. There is no need to deploy a VM and configure HA for our VASA Provider. Each controller runs a copy of the provider.

- Our provider is active-active. Either VASA instance can accept calls from vCenter.

- There is no VASA “database” with the FlashArray. As far as the controllers are concerned, the VASA service is stateless. All of the binding information, VVol identifiers, etc. are stored in the same place as your data: on the flash. So if you somehow lose both controllers, just plug in two new controllers and turn them on. The VASA service will start and pick up where it left off. No need to restore or rebuild anything.
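A toy model shows why statelessness makes controller replacement trivial. Provider instances hold no state of their own; they read and write metadata that lives with the data. All names here (`ArrayMetadata`, `VasaProviderInstance`, the controller labels) are hypothetical. This is a conceptual sketch, not how Purity implements the service.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class ArrayMetadata:
    """Stand-in for VVol identifiers and bindings persisted on the flash itself."""
    bindings: Dict[str, str] = field(default_factory=dict)

class VasaProviderInstance:
    """Hypothetical stateless provider: all state lives in ArrayMetadata."""

    def __init__(self, controller: str, metadata: ArrayMetadata):
        self.controller = controller
        self.metadata = metadata

    def bind(self, vvol: str, pe: str) -> None:
        self.metadata.bindings[vvol] = pe

    def lookup(self, vvol: str) -> str:
        return self.metadata.bindings[vvol]

flash = ArrayMetadata()
ct0 = VasaProviderInstance("CT0", flash)
ct1 = VasaProviderInstance("CT1", flash)

# Active-active: a call handled by one instance is visible through the other.
ct0.bind("web01-disk0", "pure-protocol-endpoint")
print(ct1.lookup("web01-disk0"))  # pure-protocol-endpoint

# Replace both controllers: new instances over the same flash pick up where the old ones left off.
replacement = VasaProviderInstance("CT0-new", flash)
print(replacement.lookup("web01-disk0"))  # pure-protocol-endpoint
```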

When you register our VASA provider with vCenter, the FlashArray will tell vCenter about all of the possible FlashArray capabilities. What these capabilities are is entirely up to the vendor, in this case Pure.

Storage Policy Based Management and Storage Profiles

We have the following capabilities:

| Capability | Value | Description |
| --- | --- | --- |
| FlashArray | Yes or no | I want a VM/VMDK to be (or not be) on a FlashArray |
| FlashArray Group | Names of FlashArrays | I want a VM/VMDK to be on any one of these particular FlashArrays |
| QoS | Yes or no | I want a VM/VMDK to be (or not be) on a FlashArray with QoS enabled |
| Local Snapshot Capable | Yes or no | I want a VM/VMDK to be on a FlashArray that has one or more snapshot policies enabled |
| Local Snapshot Interval | Frequency in minutes, hours, days, months, or years | I want a VM/VMDK to be protected by a snapshot policy of a specified interval |
| Local Snapshot Retention | Duration in minutes, hours, days, months, or years | I want local snapshots for a VM/VMDK to be retained for a specified duration |
| Replication Capable | Yes or no | I want a VM/VMDK to be on a FlashArray that has one or more replication policies enabled |
| Replication Interval | Frequency in minutes, hours, days, months, or years | I want a VM/VMDK to be protected by a replication policy of a specified interval |
| Replication Retention | Duration in minutes, hours, days, months, or years | I want replication point-in-times for a VM/VMDK to be retained for a specified duration |
| Target Sites | Names of FlashArrays | I want a VM/VMDK to be replicated to any one of these particular FlashArrays |
| Minimum Replication Concurrency | Number of targets | I want a VM/VMDK to be replicated to at least this number of FlashArrays. If you have three target sites listed and a concurrency of two, the VVol must be replicated to two of those three to be compliant |
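The Minimum Replication Concurrency rule in the last row reduces to a set intersection. This is a minimal sketch with hypothetical array names. `replication_compliant` is an illustrative function, not part of the VASA provider.

```python
from typing import Set

def replication_compliant(target_sites: Set[str], replicated_to: Set[str],
                          min_concurrency: int) -> bool:
    """Compliant if the VVol is replicated to at least `min_concurrency`
    of the listed target sites (replicas elsewhere do not count)."""
    return len(target_sites & replicated_to) >= min_concurrency

targets = {"array-b", "array-c", "array-d"}
print(replication_compliant(targets, {"array-b", "array-c"}, 2))  # True
print(replication_compliant(targets, {"array-b"}, 2))             # False
```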

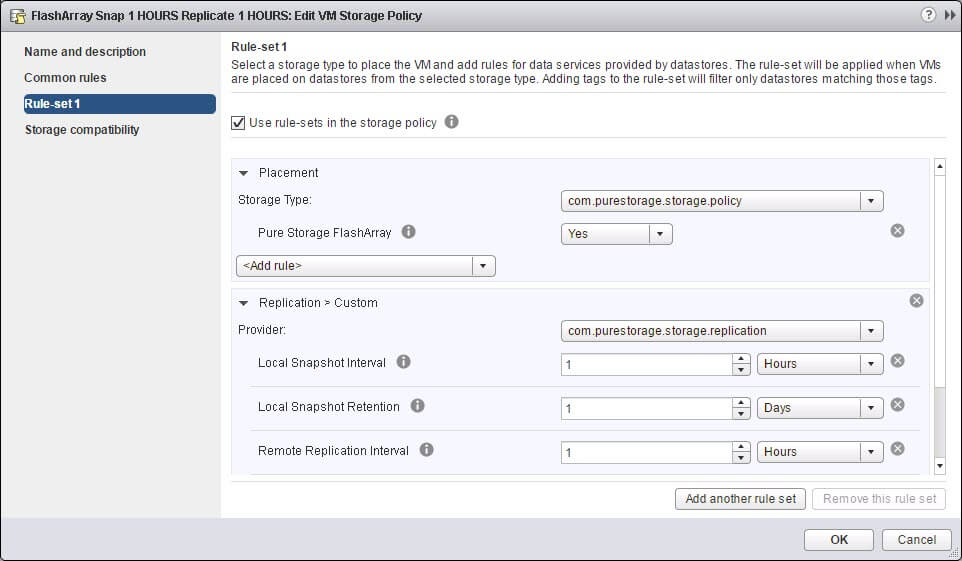

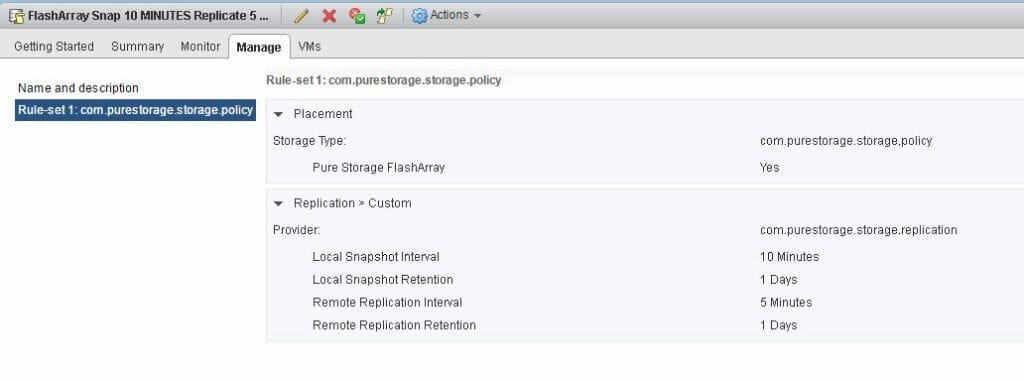

So what do these capabilities mean? Once you have registered the provider, you can create storage policies inside vCenter. A policy can use a mixture of these capabilities and, if you want, VMware capabilities (like IOPS limits).

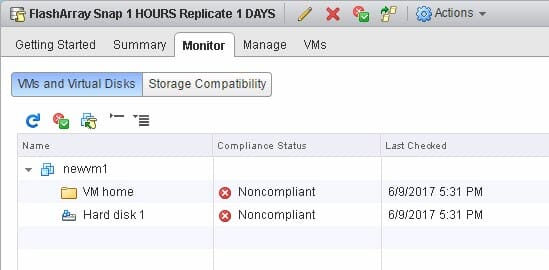

So the above policy has the following items:

- Must be on a FlashArray

- Must be locally snapshotted every 1 hour

- Those snapshots must be retained for 1 day

- Must be replicated with an interval of 1 hour

Once you create a policy you can assign it to a VM. You can do this after a VM has been created or when you are provisioning a new one. Below I am deploying a VM from a template and I am assigning the policy above. vCenter automatically tells me which storage is capable of fulfilling this policy by marking them compliant.

When you choose a policy, vCenter will filter out all datastores that are not compliant with that policy. So what does it mean to be compliant? The storage container for the FlashArray is compliant if the respective FlashArray has one or more protection groups that match the policy. A FlashArray protection group is essentially a consistency group with a local snapshot and/or replication policy applied to it.

The match is only as specific as your policy. If you just specify a 1 hour snapshot and nothing else, all protection groups that have a 1 hour snapshot policy will be returned. Some might also be replicated, some may not. If you want compliance for 1 hour snapshots but NOT replication, set Replication Capable to No.
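The matching rule described above can be sketched as follows. The sketch assumes intervals are expressed in minutes. It also assumes a capability left out of the policy (`None` here) does not constrain the match. `ProtectionGroup`, `Policy`, `matches`, and `container_compliant` are hypothetical names; the real compliance check happens between vCenter and the VASA provider.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class ProtectionGroup:
    name: str
    snapshot_interval_min: Optional[int]     # None = no local snapshot schedule
    replication_interval_min: Optional[int]  # None = not replicated

@dataclass(frozen=True)
class Policy:
    # None means "not specified in the policy", so it does not constrain the match.
    snapshot_interval_min: Optional[int] = None
    replication_capable: Optional[bool] = None
    replication_interval_min: Optional[int] = None

def matches(pg: ProtectionGroup, policy: Policy) -> bool:
    if (policy.snapshot_interval_min is not None
            and pg.snapshot_interval_min != policy.snapshot_interval_min):
        return False
    is_replicated = pg.replication_interval_min is not None
    if policy.replication_capable is not None and is_replicated != policy.replication_capable:
        return False
    if (policy.replication_interval_min is not None
            and pg.replication_interval_min != policy.replication_interval_min):
        return False
    return True

def container_compliant(pgroups: List[ProtectionGroup], policy: Policy) -> bool:
    """The storage container is compliant if at least one protection group matches."""
    return any(matches(pg, policy) for pg in pgroups)

pgroups = [
    ProtectionGroup("pg-hourly-local", 60, None),
    ProtectionGroup("pg-hourly-repl", 60, 60),
]
hourly = Policy(snapshot_interval_min=60)
hourly_not_replicated = Policy(snapshot_interval_min=60, replication_capable=False)
print([pg.name for pg in pgroups if matches(pg, hourly)])
# ['pg-hourly-local', 'pg-hourly-repl']
print([pg.name for pg in pgroups if matches(pg, hourly_not_replicated)])
# ['pg-hourly-local']
```

The same check explains the drift behavior described further on. If the replicated group's schedule changes from 60 to 30 minutes, a policy that requires a 60-minute replication interval no longer matches it.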

Since I am choosing a policy that has a snapshot/replication capability in it, I have to choose a replication group. For the FlashArray, a replication group is a protection group. This is how I can choose which protection group I want it to be in.

When I finish the provisioning wizard, the FlashArray will:

- Create the VVols (config and data)

- Add them to the appropriate protection group(s) if specified

- Bind them to the PE presented to the ESXi host that will run the VM

A really nice benefit of policy-based provisioning is not just initial placement, but ensuring your VVols stay that way. Say I go to a protection group that a VVol is in and change the replication schedule from 1 hour to 30 minutes. VMware will then mark that VM or virtual disk as non-compliant.

Now you can either fix the configuration, or re-apply the policy to the VM so it can be properly configured by VASA. Cool stuff.

Other Benefits

In a nutshell, that is the FlashArray VVol architecture. But there are some additional benefits I haven’t gotten into yet. Here are just a few.

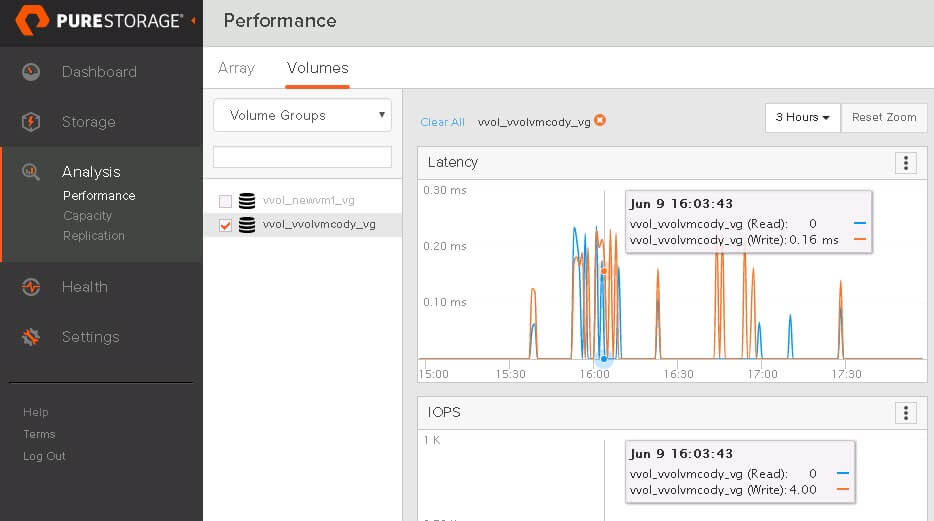

VM reporting. When a new VM is created, the FlashArray creates the volumes for its VVols. We also introduced a new object called a volume group. Nothing particularly profound, but a volume group represents a virtual machine. So you can report on the VM as a whole by reporting on the group, or report individually on the VVols, since they are just volumes.

This includes both real-time and historical reporting on the VM or its volumes. With the FlashArray, you can also now see how well each virtual disk is reducing.
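Each VVol is an ordinary volume, so VM-level numbers are just aggregates over the volume group. This is a minimal sketch with made-up sample figures. It assumes data reduction is computed as logical data written divided by physical space consumed. `VolumeStats` and `reduction_ratio` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VolumeStats:
    name: str
    logical_gb: float   # data written by the host (sample value)
    physical_gb: float  # space consumed after data reduction (sample value)

def reduction_ratio(logical: float, physical: float) -> float:
    """Logical data written divided by physical space used."""
    return logical / physical if physical else float("inf")

# Hypothetical volume group for one VM: one volume per VVol, made-up numbers.
web01 = [
    VolumeStats("web01-config", 0.5, 0.1),
    VolumeStats("web01-disk0", 40.0, 8.0),
    VolumeStats("web01-disk1", 100.0, 50.0),
]

# Per-virtual-disk view: each VVol is just a volume.
for vol in web01:
    print(vol.name, round(reduction_ratio(vol.logical_gb, vol.physical_gb), 1))

# VM view: aggregate across the whole volume group.
total_logical = sum(v.logical_gb for v in web01)
total_physical = sum(v.physical_gb for v in web01)
print("web01", round(reduction_ratio(total_logical, total_physical), 1))
```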

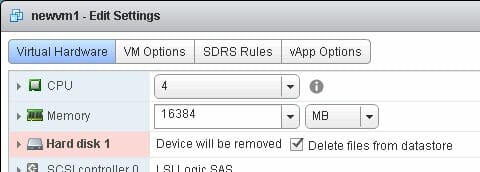

Virtual disk undelete. When you deleted a virtual disk from a VM on VMFS, you had to fall back on a backup that was hopefully configured. With the FlashArray, even today, a deleted volume goes into our destroyed volumes folder, where it can be recovered for 24 hours. You get this same benefit with VVols because they are volumes. If you delete a virtual disk from a VM, you have one day to revert the deletion without any pre-configured backup. Of course you should still use backup appliances for important VMs, but this gives you some “oops” protection.
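The recovery window boils down to a time comparison. This is a minimal sketch assuming the 24-hour window from the text. It treats exactly 24 hours as expired, but that boundary behavior is an assumption. `recoverable` is a hypothetical helper, and the dates are arbitrary.

```python
from datetime import datetime, timedelta

RECOVERY_WINDOW = timedelta(hours=24)  # destroyed volumes stay recoverable for 24 hours

def recoverable(destroyed_at: datetime, now: datetime) -> bool:
    """True while a destroyed volume (and so a deleted VVol) can still be recovered."""
    return now - destroyed_at < RECOVERY_WINDOW

deleted = datetime(2017, 6, 1, 9, 0)
print(recoverable(deleted, datetime(2017, 6, 1, 18, 0)))  # True: 9 hours later
print(recoverable(deleted, datetime(2017, 6, 2, 10, 0)))  # False: 25 hours later
```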

Simplicity. Seems like an obvious thing, but this really makes a big difference with VVols. VMware leaves a lot of room for vendors to make decisions and this can lead to complexity. We have strived to make our VVol implementation as simple as possible, and I think we really have. After your Purity upgrade, you can configure and start provisioning VVols from scratch to your VMware environment in a few minutes.

Flexibility. This is a big one. With VMFS, moving data from a VMware VM to a physical server, to another hypervisor, or to anywhere else required conversions or some kind of host-based copy. That is why people still use Raw Device Mappings even today, and RDMs have their own set of drawbacks. With VVols on the FlashArray, this is no longer an issue. A VVol on the FlashArray is just a regular old volume; the only major difference is that it is addressed as a sub-LUN. So if you have an RDM today, it can be a VVol: just add it as a sub-LUN. Bam! Now it is a VVol. Have a volume presented to a physical server? Present it as a sub-LUN. Bam! Now it is a VVol. No conversions, no copying. Want to share a volume between a VM and something else? Connect it to your ESXi host as a sub-LUN (VVol) and at the same time to another non-ESXi host in the regular fashion. Bam! It’s a VVol and a “regular” volume at the same time. The process of importing an existing volume or snapshot as a VVol is described generically in this post (look for more FlashArray specifics in the future):
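The key point is that one volume object can carry both kinds of connection at once, with no data copied. Here is a hypothetical model: `Volume` and its fields are illustrative names, and the host names and LUN numbers are arbitrary.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

@dataclass
class Volume:
    name: str
    # Ordinary connections: host -> LUN ID.
    regular: Dict[str, int] = field(default_factory=dict)
    # Connections through a protocol endpoint: ESXi host -> (PE LUN ID, sub-LUN ID).
    sub_lun: Dict[str, Tuple[int, int]] = field(default_factory=dict)

    @property
    def is_vvol(self) -> bool:
        return bool(self.sub_lun)

db = Volume("sql-data")
db.regular["linux-01"] = 10       # presented to a physical host as usual
print(db.is_vvol)                 # False
db.sub_lun["esxi-01"] = (255, 7)  # same volume, now also addressed as a sub-LUN
print(db.is_vvol, sorted(db.regular))  # True ['linux-01']
```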

So moving from VMFS to VVols on the FlashArray actually gives you better data mobility, not worse. That is the opposite of the additional lock-in many feared VVols would bring. Because there is no proprietary file system (VMFS), this issue goes away.

FlashArray vSphere Web Client Plugin

And just like with VMFS, there is value add to be had (yay rhymes) with a vSphere Web Client Plugin. We have updated our plugin to include some new VVols features:

Storage Container/Protocol Endpoint provisioning. Before you mount a storage container, you need to have a protocol endpoint provisioned to the ESXi hosts you want to mount it on. Without automation, this requires you to use the GUI/CLI/REST to add the PE to the host or host group and then mount the SC inside vSphere. With our plugin this whole process is automated. Choose the cluster, and we will provision a PE to the hosts (if needed) and then mount the SC, all in a few clicks in one spot.
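The plugin workflow is essentially idempotent: do each step only where it is missing. Here is a minimal sketch of that logic. The host names are hypothetical, and `connect_cluster` is a stand-in for whatever calls the plugin actually makes.

```python
from typing import Iterable, List, Set, Tuple

def connect_cluster(cluster_hosts: Iterable[str], pe_hosts: Set[str],
                    mounted_hosts: Set[str]) -> Tuple[List[str], List[str]]:
    """Connect the PE where missing, then mount the storage container where missing.
    Returns the hosts touched by each step; both sets are updated in place."""
    hosts = list(cluster_hosts)
    # Step 1: connect the protocol endpoint only to hosts that lack it.
    needs_pe = [h for h in hosts if h not in pe_hosts]
    pe_hosts.update(needs_pe)
    # Step 2: mount the storage container wherever it is not yet mounted.
    needs_mount = [h for h in hosts if h not in mounted_hosts]
    mounted_hosts.update(needs_mount)
    return needs_pe, needs_mount

cluster = ["esxi-01", "esxi-02", "esxi-03"]
pe_hosts = {"esxi-01"}
mounted: Set[str] = set()
print(connect_cluster(cluster, pe_hosts, mounted))
# (['esxi-02', 'esxi-03'], ['esxi-01', 'esxi-02', 'esxi-03'])
print(connect_cluster(cluster, pe_hosts, mounted))
# ([], [])
```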

VVol Tab for a VVol-based VM. This shows the underlying volume information from the FlashArray for the virtual disks of that VM.

There are also a few workflows, which you can access on this tab or through the right-click menu of the VM.

VM Undelete. We automate the process mentioned earlier in this blog to restore a deleted virtual disk. The plugin finds any VVols in the destroyed volumes folder that belong to the selected VM. You choose the VVol, and we import it and attach it back to the VM.

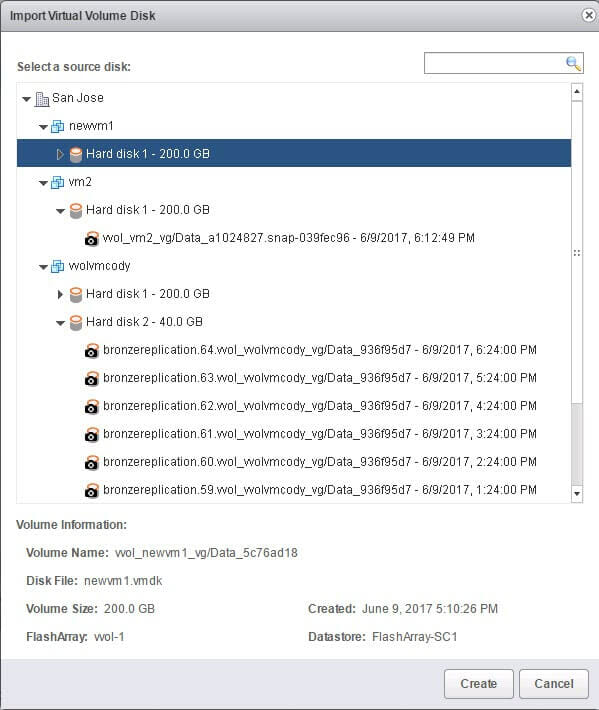

Import Existing VVol or VVol Snapshot. This action allows you to take a VVol-based virtual disk (or any snapshot of it) of a VM and present a copy of it to another VM on the same array. This is a nice feature for dev/test of a database, for instance.

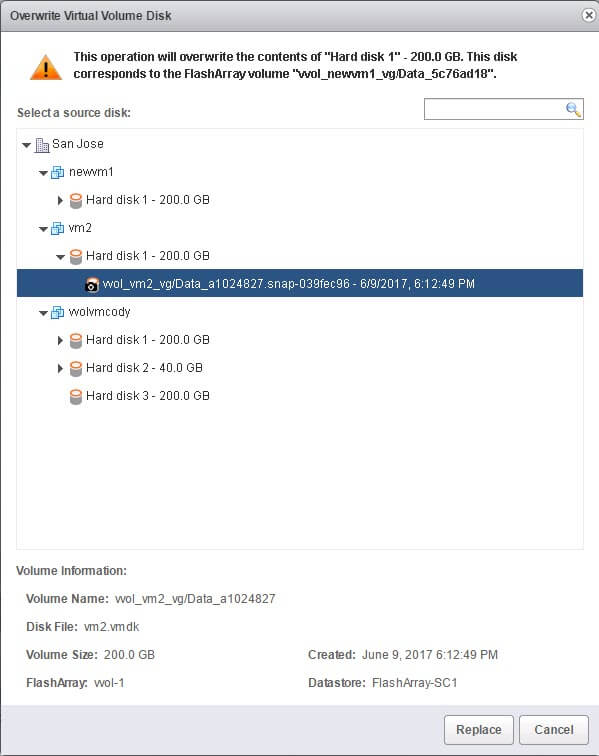

Overwrite an Existing VVol with a VVol or a VVol Snapshot. This action allows you to take a VVol-based virtual disk (or any snapshot of it) of a VM and use it to overwrite an existing VVol-based virtual disk on the same array. It is similar to the previous case, but it overwrites instead of creating a new copy.

Configuration of VASA and Storage Policies. The home screen of the FlashArray vSphere Web Client Plugin has a few new features that will help configure your VVol and FlashArray environment.

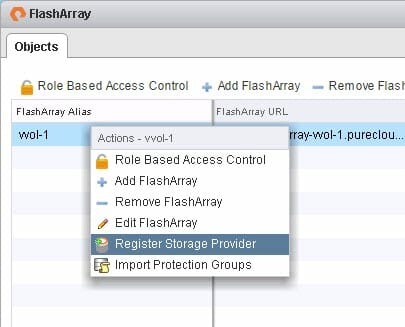

Register VASA Provider. Click on a FlashArray and choose register storage provider. This will register both VASA providers on that array with vCenter.

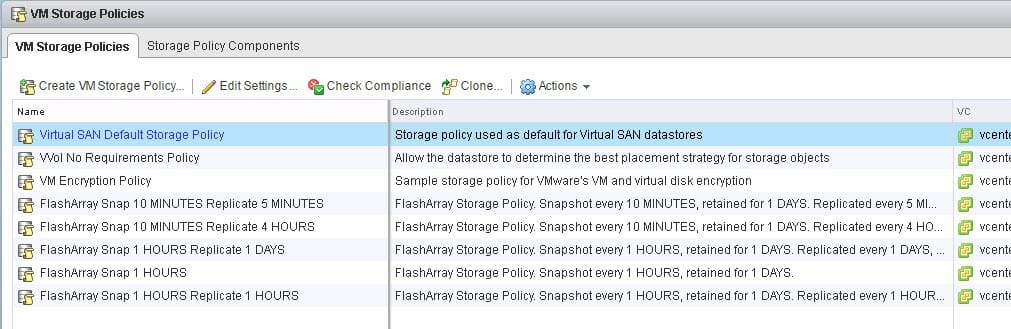

Auto-creation of Storage Policies. To automatically create some storage policies, you can import the protection groups on your FlashArray. The plugin converts each group's configuration into a storage policy. That configuration includes its local snapshot interval and retention and its replication interval and retention.
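The conversion can be sketched as a mapping from a protection group's schedule to the capability names in the table above. The input dictionary keys and `policy_from_pgroup` are hypothetical. The capability names come from the table, and the example values match the sample policy shown earlier (1 hour snapshots kept for 1 day, replicated every hour).

```python
from typing import Dict

def policy_from_pgroup(pg: Dict[str, str]) -> Dict[str, str]:
    """Translate a protection group's schedule into storage policy capabilities."""
    policy = {"FlashArray": "Yes"}
    if pg.get("snapshot_interval"):
        policy["Local Snapshot Capable"] = "Yes"
        policy["Local Snapshot Interval"] = pg["snapshot_interval"]
        policy["Local Snapshot Retention"] = pg.get("snapshot_retention", "")
    else:
        policy["Local Snapshot Capable"] = "No"
    if pg.get("replication_interval"):
        policy["Replication Capable"] = "Yes"
        policy["Replication Interval"] = pg["replication_interval"]
        policy["Replication Retention"] = pg.get("replication_retention", "")
    else:
        policy["Replication Capable"] = "No"
    return policy

gold = {"snapshot_interval": "1 hour", "snapshot_retention": "1 day",
        "replication_interval": "1 hour", "replication_retention": "1 day"}
local_only = {"snapshot_interval": "1 hour", "snapshot_retention": "1 day"}
print(policy_from_pgroup(gold)["Replication Interval"])        # 1 hour
print(policy_from_pgroup(local_only)["Replication Capable"])   # No
```

Setting a capable flag to No when a schedule is absent is a design choice in this sketch. Per the matching behavior described earlier, it keeps a local-only policy from also matching replicated groups.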

Pure Storage Leads as the Most Deployed vVols Platform

Pure Storage FlashArray is now the most deployed platform for VMware vVols, reflecting deep integration with VMware and a long-standing commitment to simplifying virtual infrastructure.

Recent advancements include:

- Full support for vSphere 8.x, including features like vVols replication and NVMe-oF.

- Streamlined policy-based management using Storage Policy-Based Management (SPBM), enabling precise control of SLAs at the VM level.

- Improved snapshot and clone operations with per-VM granularity and no performance impact.

- Tight integration with the Pure vSphere Plugin, enabling end-to-end visibility and control directly from vCenter.

- Seamless hybrid cloud support via VMware Cloud Foundation and Pure Cloud Block Store.

These enhancements make Pure’s vVols implementation one of the most robust, scalable, and easy-to-use solutions available for VMware environments.

So that’s it for now! We are finishing up our beta, so depending on feedback there still might be a few minor changes here and there. Look for release in Q3! Will be blogging a lot more details in the coming weeks and months.
