Sun Microsystems used to say “the network is the computer.” At the time, they were referring to the distributed Unix RISC servers that underpinned the early growth of the Internet, but I see a more fundamental milestone happening today: Ethernet is now so fast (in terms of bandwidth as well as latency) that the PCIe bus within the server chassis holds a negligible performance advantage over it. With the latency of a fast SSD at 100µs and the cost of a LAN round trip below 5µs, effectively all of the storage media in a rack is now equidistant from all of the compute!
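To put rough numbers on that claim, here is a back-of-the-envelope sketch that uses only the two figures quoted above (about 100 microseconds for a fast SSD and 5 microseconds as an upper bound for a LAN round trip). The function name and the simplification of adding the two latencies together are illustrative assumptions, not a measurement methodology.

```python
def remote_access_overhead(ssd_latency_us: float, lan_roundtrip_us: float) -> float:
    """Fraction of extra latency added by reaching the SSD over the network."""
    return lan_roundtrip_us / ssd_latency_us


# Figures quoted in the text; 5 us is treated as an upper bound.
SSD_LATENCY_US = 100.0
LAN_ROUNDTRIP_US = 5.0

overhead = remote_access_overhead(SSD_LATENCY_US, LAN_ROUNDTRIP_US)
remote_total_us = SSD_LATENCY_US + LAN_ROUNDTRIP_US

print(f"local: {SSD_LATENCY_US:.0f} us, remote: {remote_total_us:.0f} us, "
      f"overhead: {overhead:.0%}")
```

Under these assumptions the network adds at most about 5% to each access, which is why rack-local and server-local media look nearly equidistant from compute.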

Going on twenty years ago, Google and others pioneered scale-out data center architecture by realizing mechanical disk was hopelessly overmatched by CPU, necessitating the colocation of many hard drives in the same server chassis to achieve better balance—that is, they resurrected direct attached storage (DAS) for large scale distributed computing (distributed DAS or DDAS). We believe a similar sea change is now underway: High performance Ethernet is disaggregating the server chassis, making racks rather than servers the building blocks of the data center, and enabling a new generation of shared rack storage, which is both faster and easier than DDAS, to serve the needs of data-driven computing, including artificial intelligence.

Rich predictive analytics including AI need rack storage. This architectural shift arrives just in time. Big data applications, including predictive analytics, Internet of Things (IoT) and especially deep learning, depend upon amassing ever larger “lakes” of data with ever more programs, orchestrated within pipelines (workflows), processing that data. Machine learning most often entails alternating between data ingest/transformation and model execution (on commodity compute like Intel CPUs), and model training (on highly-parallel compute like NVIDIA GPUs). Shared storage allows switching between tasks without copying the large training data set between systems. In fact, with shared storage the pipeline stages can be run simultaneously. More importantly, shared storage makes all data available to all compute, enabling CPUs, GPUs and storage capacity to each be scaled and refreshed independently.

With high performance commodity networks, today’s compute and storage are being optimized for rack-local access instead of CPU-local access. This is why AI and other data-driven approaches are driving the adoption of new rack-optimized storage at the expense of server-optimized DDAS.

Making rack storage faster than DDAS. Investments in distributed systems performance at hyperscalers have made disaggregation feasible. Scale-out applications are far more distributed than their predecessors. Facebook has remarked that its “East/West” traffic between machines can be 1000-fold that of the “North/South” traffic between end user and machine. Infrastructure has necessarily evolved to make distributed applications more efficient: Remote direct memory access (RDMA), for example, can double CPU capacity and quadruple memory bandwidth by offloading work to smart network interface cards (NICs), freeing up increasingly scarce compute capacity for the application.

Traditional storage block protocols can similarly leverage RDMA for dramatic performance acceleration. To date, we have talked about how NVMe accelerates parallelism and performance within all-flash storage (and massively parallel AI needs massively parallel storage). And now we can finish the story by reinforcing how NVMe over fabrics (NVMe-oF, think RDMA + NVMe) allows data stored on remote systems to be accessed more efficiently than server-local storage, delivering the performance at scale essential for today’s data-driven applications. In June, Cisco and Pure provided a technology preview of our FlashStack all-NVMe Converged Infrastructure.

Acceleration via RDMA and similar techniques is rapidly moving into the file and object storage space as well, introducing similar opportunities for a quantum leap in parallelism and performance (e.g., Pure’s FlashBlade leverages such technology to scale out).

Making rack storage lower cost than DDAS. Once again, the re-emergence of shared storage arrives just in time. Scaling and load balancing of data-driven applications on DDAS is far too complicated. (Outsourcing the complexity of managing big data applications is arguably one of the principal growth drivers for public cloud.) With shared storage, there is no need to arbitrarily partition and repartition data across individual nodes. Plus, capacity and compute can be independently scaled and refreshed as needed. Since most IoT data is too big to fit in the public cloud, these challenges must be solved with on-premises infrastructure.

Shared storage also allows us to save storage media. DDAS generally depends on mirroring across nodes to protect data from failures (particularly when performance is crucial), and mirroring requires roughly 2.5X more storage media than the dual parity erasure coding employed by Pure. Moreover, it is very hard to get effective data deduplication and compression in a DDAS architecture, because each node sees only a fraction of the data. For example, best in class DDAS solutions tend to top out at 2:1 data reduction on virtual machines (at a cost of degraded performance), whereas Pure Storage averages greater than 5:1. The result can be three- to six-fold more storage media for the same workload, which leads to sprawl and cost (particularly if the storage is all-flash). Infrastructure guru Howard Marks recently showed how DDAS and Hyper-Converged Infrastructure (HCI), which is built on DDAS, can be substantially more expensive than converged infrastructure (compute + shared storage) at scale.
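The media arithmetic above can be sketched as follows. The model is a simplifying assumption: raw media scales with the protection overhead and inversely with the data reduction ratio, and the two effects multiply. The inputs are the figures quoted in the text (roughly 2.5X for mirroring versus dual parity erasure coding, 2:1 versus 5:1 data reduction); the function and constant names are hypothetical.

```python
def relative_media(protection_overhead: float,
                   ddas_reduction: float,
                   shared_reduction: float) -> float:
    """Raw media DDAS needs relative to shared storage for the same logical data."""
    return protection_overhead * (shared_reduction / ddas_reduction)


MIRRORING_VS_ERASURE = 2.5   # "roughly 2.5X more storage media"
DDAS_REDUCTION = 2.0         # "top out at 2:1"
SHARED_REDUCTION = 5.0       # "greater than 5:1"

ratio = relative_media(MIRRORING_VS_ERASURE, DDAS_REDUCTION, SHARED_REDUCTION)
print(f"DDAS needs about {ratio:.2f}x the raw media")
```

With these rounded inputs the combined factor comes out near the upper end of the three- to six-fold range; workloads that reduce less well on shared storage, or DDAS deployments that skip mirroring, would land lower.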

(HCI is an architecture which attempts to colocate application compute, data compute, storage and networking all within a single server chassis. The architecture makes great sense for small-scale deployments, such as for remote and branch office or small business. However, in scaled data centers (including the public cloud), greater efficiency and performance are afforded by partitioning application and data processing/storage into multiple tiers, which is the converged rather than hyper-converged model. Indeed, it is not clear what HCI will mean in the data center as Ethernet rather than the server chassis serves as the “backplane.” More on HCI vs. all-flash shared storage can be found here.)

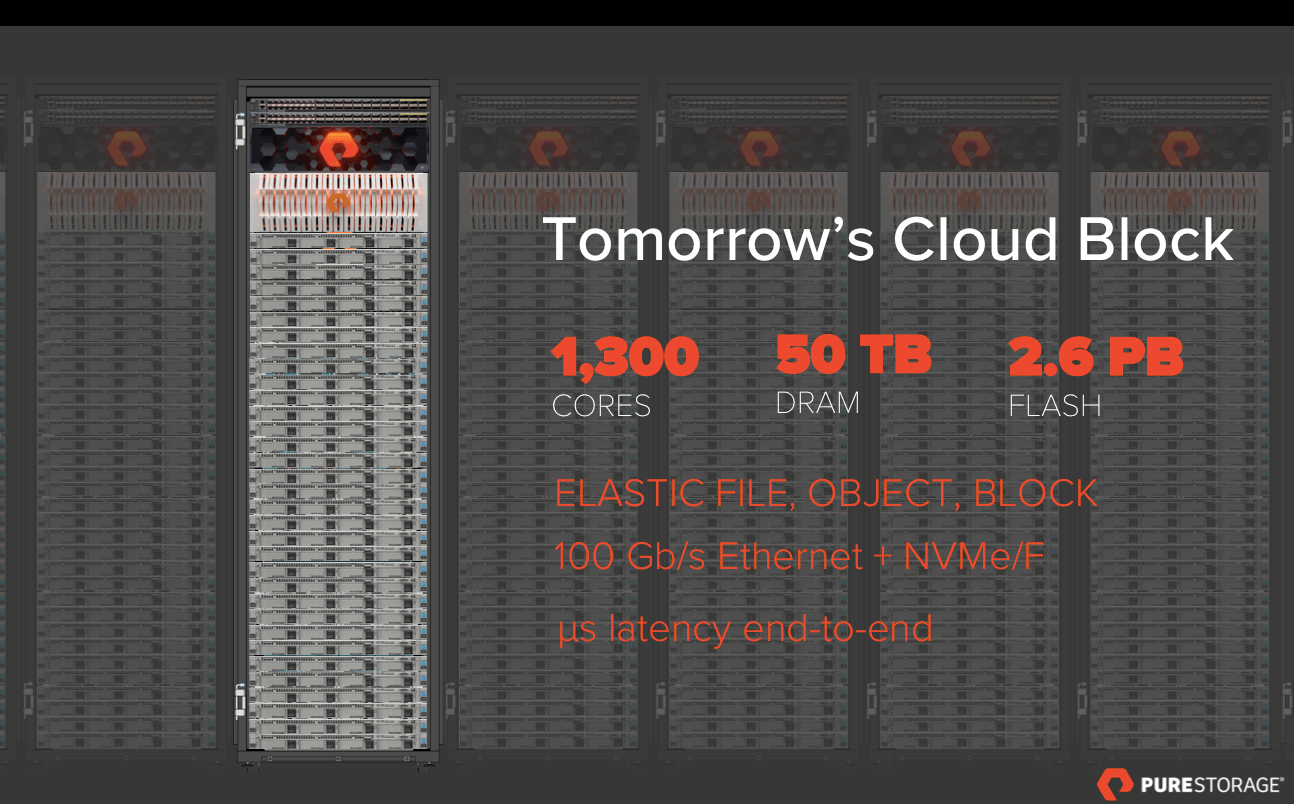

Rack shared storage for the cloud era. The result of these innovations is a faster, radically denser, radically easier to manage architecture. One of our SaaS customers found the increased density jaw-dropping: they have already doubled rack capacity while unleashing a five-fold performance improvement for certain applications, and they expect to compound these savings yet again with future advances. Their DDAS architecture is more costly in that they must overprovision flash (to accommodate uneven data growth), write capacity (for hotspots) and CPU (for uneven workloads). With shared storage, all data is addressable by all compute, and writes are evenly amortized across the much larger pool of flash, to the point that Pure can guarantee the flash in perpetuity (via our evergreen subscription model). Coming from DDAS, the availability of higher level data services like rollbacks (snapshots), fault tolerance (replication), authentication (LDAP/Kerberos), and non-disruptive upgrade, all for no additional cost and near zero impact on application performance, is “pure” upside. If you’re a SaaS provider, the next generation architecture could look like this:

Pre-cloud, disk-centric storage will not make this leap. All of this is bad news for the mainframe- and client/server-era technology that Pure Storage predominantly competes against. The disruption extends far beyond fast networks and protocols like RDMA and NVMe-oF, all the way through the storage software and hardware to the flash devices. Pure’s first FlashArray was built with 250GB SSDs. Today there are 50TB SSDs on the market, but in legacy storage they are still accessed with the same sequential disk-oriented protocols through similarly sized pipes, resulting in a 200-fold reduction in I/O per GB of data. This is problematic for AI and other GPU-based applications, as the latest generation of NVIDIA chips demands 2-3X more data bandwidth.
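The 200-fold figure follows directly from the capacity growth if the access path stays the same size. A minimal sketch, assuming decimal units and a per-device I/O rate that is purely a placeholder (the `ASSUMED_DEVICE_IOPS` value is hypothetical and cancels out of the ratio):

```python
GB_PER_TB = 1000  # decimal units, as drive capacities are usually quoted


def io_per_gb(device_iops: float, capacity_gb: float) -> float:
    """I/O operations per second available per GB of stored data."""
    return device_iops / capacity_gb


OLD_CAPACITY_GB = 250              # SSDs in Pure's first FlashArray
NEW_CAPACITY_GB = 50 * GB_PER_TB   # 50TB SSDs on the market today
ASSUMED_DEVICE_IOPS = 100_000      # hypothetical; same pipe for both drives

old_density = io_per_gb(ASSUMED_DEVICE_IOPS, OLD_CAPACITY_GB)
new_density = io_per_gb(ASSUMED_DEVICE_IOPS, NEW_CAPACITY_GB)
reduction = old_density / new_density

print(f"I/O per GB falls by a factor of {reduction:.0f}")
```

Because the per-device rate is held constant, the reduction equals the capacity ratio regardless of the placeholder chosen.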

Shifting to NVMe-capable hardware helps by providing a lower latency, higher bandwidth path to read and write the SSD. However, hardware changes alone do nothing to address limitations inherent in legacy storage software designed for sequential “one bit at a time” devices like hard drives. As a result our legacy competitors get limited value from retrofitting with all-flash—within their storage, 15+TB SSDs perform no faster than mechanical disk! Pure’s software was instead crafted to maximize parallelism within as well as across flash devices. This is why traditional storage vendors do not appear to be getting traction for cloud, AI, and other next-generation applications.

Pure vision. As the Economist has said, the world’s most valuable resource is no longer oil, but data. With the growth in data-driven applications, the center of gravity in the data center is shifting away from compute and toward data storage. With disaggregation, fast networking, RDMA, NVMe-oF et al., these trends will only accelerate in the months and years ahead. All of this is happening just in time to provide a far more effective data platform for rich, data-driven prediction including AI and deep learning, and it positions Pure as an ideal partner to help organizations derive maximal value from their data.