AI and Machine Learning

AI and machine learning workloads typically rely on GPUs, specialized processors that handle massive amounts of data from structured and unstructured sources. Maximize GPU efficiency and ROI with high-performance, architecturally optimized solutions. The Pure Blog will keep you up to date on the latest AI and machine learning trends, what they mean for IT, and how your data infrastructure can adopt them to be ready for whatever comes next.


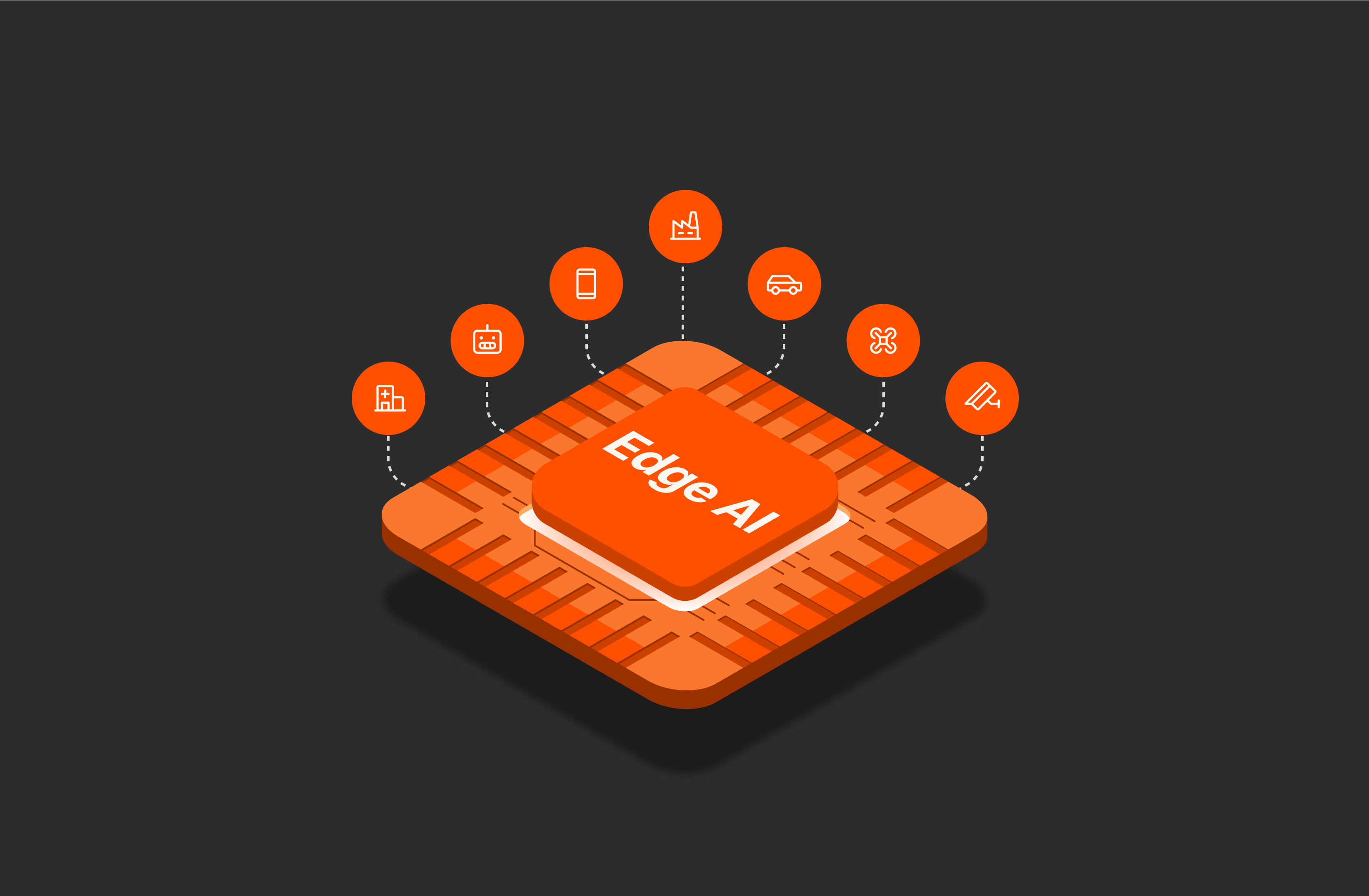

How Edge AI Can Revolutionize Industries with On-device Intelligence

From robotic devices to self-driving cars, edge AI has exciting potential. Learn more about what it is, how it works, its benefits, and the challenges it presents.

By: