We recently wrote about a new category of storage: Unified Fast File and Object (UFFO). We believe UFFO is the optimal platform for all the key challenges and requirements of modern data, because modern data requires modern storage.

UFFO addresses the needs of modern data. At Pure Storage, our goal is always to solve the hard problems so that you don’t have to, today or into the future. It’s why we’ve designed a modern architecture from the ground up, one that addresses the fundamental shifts in how to simplify, manage, and consolidate unstructured data.

It’s not enough to simply build a storage platform that can address the needs of modern data and its requirements. A UFFO platform must also account for and deliver the characteristics of modern cloud computing.

The ability to provide deep analytic insights into data usage and performance, and to take proactive action or alert management teams when issues arise, is paramount. Hardware and software upgrades and updates must be non-disruptive to both the teams that manage them and the data itself. Furthermore, flexible consumption models must deliver cloud-like consumption and adoption on-premises, cost-effectively and seamlessly, combining CAPEX infrastructure dynamics with the simplicity of an OPEX purchase structure.

Some may ask: why can’t legacy storage systems meet these same needs? Can’t you just add a set of features and new hardware media to an existing legacy system to meet the simplicity, performance, and consolidation requirements? In short, no. And there’s a good reason for it.

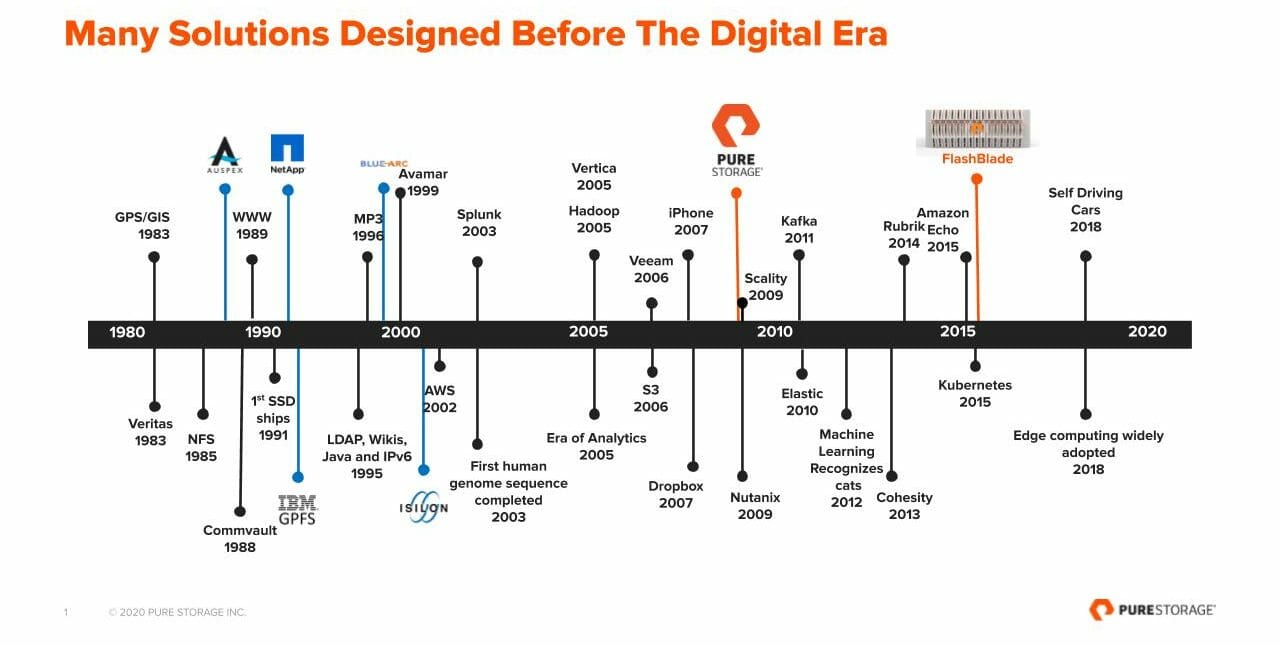

Technology disruption over the last 15 years has been massive. At the same time, it’s easy to forget that internet ubiquity, public cloud, virtualization, containers, and so many other mainstream technologies didn’t exist in the early 2000s, and some hadn’t even been conceived yet.

When it comes to storage, many of the technologies considered innovative over the last 15 years were architected to solve challenges associated with trends from the 1980s and 1990s.

Importantly, the scale-out NAS and object systems of the time were designed to handle specific workloads with specific characteristics. The number of files may have been moderate or low (at least in comparison to the hundreds of billions of files we see today). Metadata performance also wasn’t as critical as it is today. Small files (around 1KB) weren’t handled individually; they were more likely lumped into a much larger file to compensate for the limited CPU performance of the day. The biggest challenge was managing hundreds of thousands of objects and terabytes of capacity while keeping pace with the rapid growth of 1Gb networks. This would all be acceptable if we were still dealing with the data challenges of the early 2000s. But we’re not.

Many legacy vendors are trying to bridge the gap to modern data with retrofits and gussied-up features rather than designing from scratch with an architecture-first approach. Here are some questions customers should consider:

- Is your legacy vendor’s offering still solving for data challenges of the 2000s?

- How quick was your legacy vendor to embrace the shift to all-flash?

- Does your current solution enable your business to tackle unstructured data needs?

- Are the technologies being offered today truly net new to address the ever-changing modern data landscape?

- What if you didn’t have to sacrifice performance, consolidation, or simplicity? What kind of outcomes could you drive with all three enabled at full tilt, 100% of the time?

3 Reasons Legacy Storage Struggles to Be UFFO

In today’s marketplace, you find a hodgepodge of solutions that may help in some specific scenarios where customers need fast file and object storage. But these ultimately end up being point solutions: dedicated to a specific workload or workflow, or focused on object but not file, and vice versa.

Bolting an Amazon S3 protocol onto a storage platform architected two decades ago for all-HDD capacity, and then claiming it suddenly addresses the needs of UFFO all-flash storage, is an approach doomed to fail. It also leaves customers underserved (and potentially exposed to risk) in their digital transformation. Solutions that aim to deliver UFFO need to be performant, simple, and capable of consolidating the varied and growing data sets that modern organizations require. The list of reasons legacy storage struggles to be UFFO is long, but in the spirit of being direct, here are three major ones.

Reason 1: Modern Demands Native

Legacy solutions don’t support native file and native object storage. Native features always perform better than bolt-on technologies that are not implemented using native functions. Putting an object store on top of a legacy NAS platform essentially means layering one storage system on top of another, which is the opposite of modern architecture. Users of such retrofitted storage platforms are left stranded on a platform that will never be able to provide a highly compliant and feature-rich object store. UFFO is not a rebranding exercise with some additional functionality. It requires designing and delivering software and hardware from the ground up, using modern development principles, for modern applications.

Reason 2: Customers Lose When Infrastructure Limitations Are Grandfathered In

Modern data requirements have exposed some of the inherent limitations of leading legacy storage platforms that were architected for all-HDD capacity. Beyond the typical weakness of meeting performance requirements for only one specific data profile, the list of legacy storage limitations includes complexity, lack of reliability, inefficiency in storing small files, and the inability to support large numbers of files. UFFO addresses these limitations natively, without shortcuts and without imposing complexity or trade-offs on customers.

Reason 3: Retrofits Will Never Ride the Long Tail of Modern

A UFFO storage platform remains modern over time, combining the best of traditional on-prem storage with a subscription to continuous innovation. Additionally, the rise of the cloud-like experience means that a UFFO platform must provide flexible consumption choices and satisfaction guarantees. Legacy infrastructure incurs disruptive forklift upgrades, imposes à la carte licensing for individual enterprise features, and perpetuates complex management and operations. These characteristics cannot coexist with a UFFO world of rapidly growing volumes of modern data.

What’s Next for Unified Fast File and Object?

As the world’s first UFFO storage platform, Pure Storage FlashBlade® is designed to enable you to tackle your most challenging modern data requirements. FlashBlade delivers multi-dimensional file and object performance via a highly parallelized architecture purpose-built for today’s workloads. This architecture is modern in its design and was built specifically to address the challenges that lie ahead for modern data.

We’ve always welcomed competition because more competition ultimately makes for better outcomes for customers. At the same time, anytime there’s a launch of something that’s supposedly “new” or “modern” but is based on technologies from the past, we urge customers to remember that they have a choice: the choice to consider a newer, more modern approach. We’d love to partner with you to talk it through. Reach out today.

Look for future posts that dive into the design elements and technical reasoning behind unifying fast file and object storage into a single platform—and why that architectural choice is the only choice for organizations looking to address the needs of modern data and modern applications.