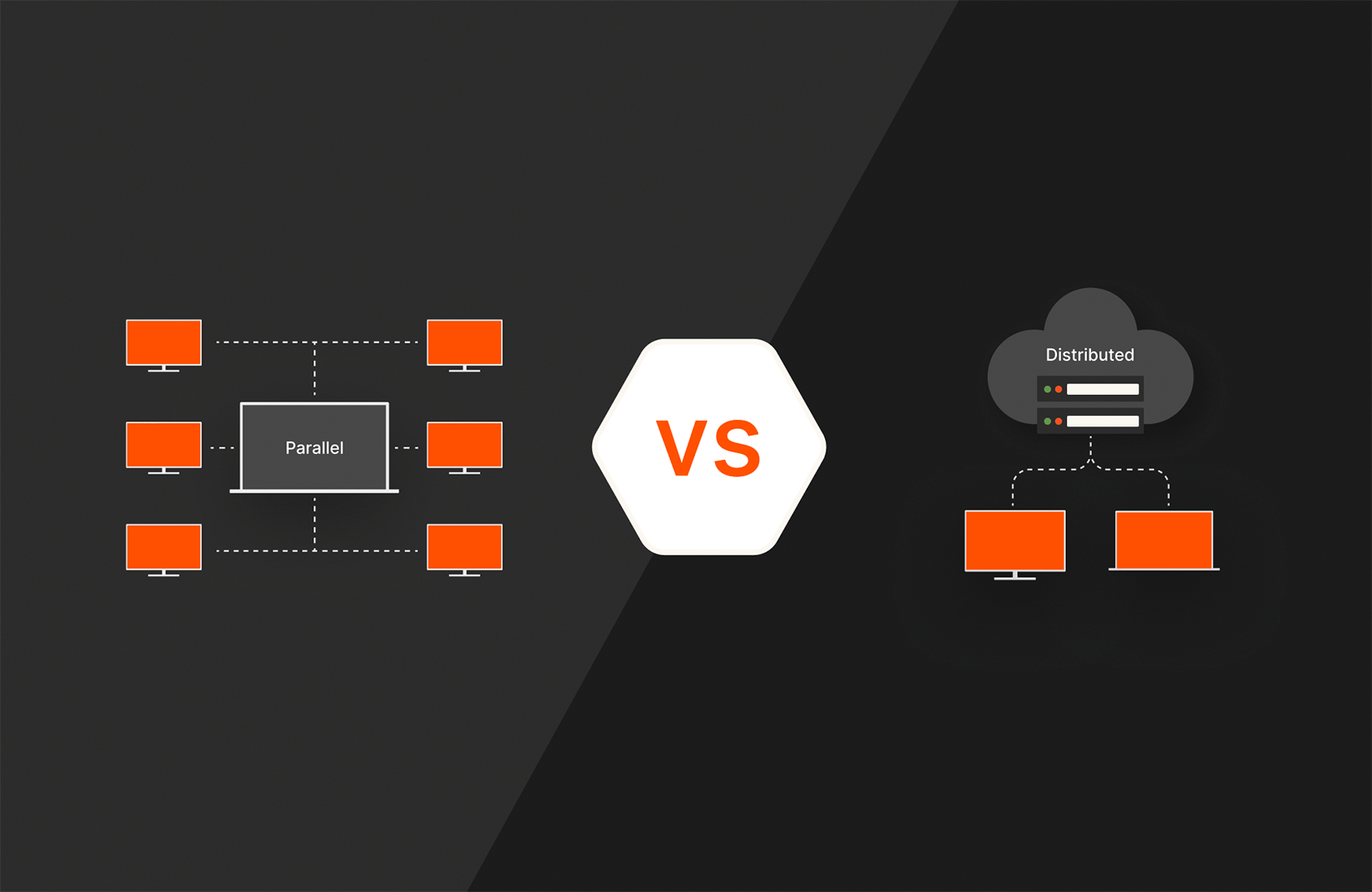

Both parallel and distributed computing have been around for a long time and both have contributed greatly to the improvement of computing processes. However, they have key differences in their primary function.

Parallel computing, also known as parallel processing, speeds up a computational task by dividing it into smaller jobs that run at the same time on multiple processors inside one computer. Distributed computing, on the other hand, uses a distributed system, such as the internet, to increase the available computing power and enable larger, more complex tasks to be executed across multiple machines.

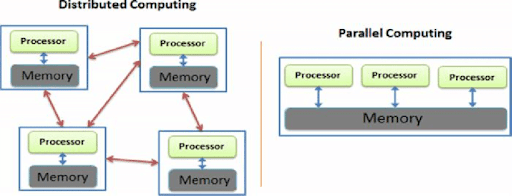

Figure 1: A distributed computing system compared to a parallel computing system.

Source: ResearchGate

What Is Parallel Computing?

Parallel computing is the process of performing computational tasks across multiple processors at once to improve computing speed and efficiency. It divides tasks into sub-tasks and executes them simultaneously through different processors.
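
The divide-and-execute pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names (`sum_of_squares`, `split`, `parallel_sum_of_squares`) and the `executor_cls` parameter are made up for this example, and the workload (summing squares) is chosen only because it splits cleanly into independent sub-tasks.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def sum_of_squares(chunk):
    """Sub-task: sum the squares of one slice of the input."""
    return sum(n * n for n in chunk)


def split(numbers, parts):
    """Divide the input into at most `parts` contiguous chunks."""
    size = max(1, -(-len(numbers) // parts))  # ceiling division
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def parallel_sum_of_squares(numbers, workers=4, executor_cls=ProcessPoolExecutor):
    """Run one sub-task per chunk on a pool of workers, then combine results.

    `executor_cls` is a hypothetical knob for this sketch: the default uses
    separate processes (one per processor core, in the best case), and
    ThreadPoolExecutor can be swapped in when processes are not available.
    """
    chunks = split(numbers, workers)
    with executor_cls(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))
```

When the default process pool is used from a script, the call is normally placed under an `if __name__ == "__main__":` guard so that worker processes can import the module safely.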

There are three main types, or “levels,” of parallel computing: bit, instruction, and task.

- Bit-level parallelism: Uses larger “words” (a word is a fixed-size piece of data that the processor's instruction set or hardware handles as a unit) to reduce the number of instructions the processor needs to perform an operation on data wider than one word.

- Instruction-level parallelism: Overlaps independent instructions from a single instruction stream so that a processor can execute more than one instruction per clock cycle (one oscillation between the high and low states of the processor's clock signal).

- Task-level parallelism: Runs different tasks on multiple processors at the same time, on the same data or on different data.
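
To make the bit-level case concrete, the sketch below simulates adding two unsigned integers on a processor with a given word size and counts how many word-sized add instructions are needed. The function name `add_in_words` and its parameters are illustrative assumptions; real hardware does this with add and add-with-carry instructions rather than Python arithmetic.

```python
def add_in_words(a, b, total_bits, word_bits):
    """Add two unsigned integers one word at a time, carrying between words.

    Returns (sum modulo 2**total_bits, number of word-sized add steps).
    """
    mask = (1 << word_bits) - 1
    result, carry, adds = 0, 0, 0
    for shift in range(0, total_bits, word_bits):
        # Add the matching words of a and b, plus the carry from the last step.
        word_sum = ((a >> shift) & mask) + ((b >> shift) & mask) + carry
        result |= (word_sum & mask) << shift
        carry = word_sum >> word_bits
        adds += 1
    return result & ((1 << total_bits) - 1), adds
```

Under these assumptions, adding two 16-bit values takes two steps with 8-bit words (`add_in_words(0x1234, 0x0FF0, 16, 8)`) but only one step with 16-bit words (`add_in_words(0x1234, 0x0FF0, 16, 16)`), which is the saving that wider words provide.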

What Is Distributed Computing?

Distributed computing is the process of connecting multiple computers via a local network or wide area network so that they can act together as a single ultra-powerful computer capable of performing computations that no single computer within the network would be able to perform on its own.

Distributed computers offer two key advantages:

- Easy scalability: Just add more computers to expand the system.

- Redundancy: Since many different machines are providing the same service, that service can keep running even if one (or more) of the computers goes down.
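
Both advantages can be illustrated with a small in-process simulation. Everything here is hypothetical: `make_replica` stands in for a machine on the network, `ReplicaDown` for a failed connection, and `call_with_failover` for whatever client or load balancer routes requests in a real system.

```python
class ReplicaDown(Exception):
    """Raised by a replica that cannot serve the request."""


def make_replica(name, healthy=True):
    """Return a stand-in for one machine that provides the service."""
    def handle(request):
        if not healthy:
            raise ReplicaDown(name)
        return f"{name} handled {request}"
    return handle


def call_with_failover(replicas, request):
    """Try each replica in turn; succeed as long as one of them is up."""
    for replica in replicas:
        try:
            return replica(request)
        except ReplicaDown:
            continue  # this machine is down, try the next one
    raise ReplicaDown("all replicas are down")
```

With `replicas = [make_replica("node-a", healthy=False), make_replica("node-b")]`, a call to `call_with_failover(replicas, "GET /status")` is served by `node-b` even though `node-a` is down. Scaling out, in this sketch, is just appending another replica to the list.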

Parallel Computing: A Brief History

Early designs for parallel computers appeared in the 1950s, when leading researchers and computer scientists, including a few from IBM, published papers about the possibilities of (and need for) parallel processing to improve computing speed and efficiency. The 1960s and ’70s brought the first supercomputers, which were also the first computers to use multiple processors.

The motivation behind developing the earliest parallel computers was to reduce the time it took for signals to travel across computer networks, which are the central component of distributed computers.

Parallel computing went to another level in the mid-1980s when researchers at Caltech started using massively parallel processors (MPPs) to create a supercomputer for scientific applications.

Today, multi-core processors have made it mainstream, and the focus on energy efficiency has made using parallel processing even more important, as increasing performance through parallel processing tends to be much more energy-efficient than increasing microprocessor clock frequencies.

Key Differences Between Parallel Computing and Distributed Computing

While parallel and distributed computers are both important technologies, there are several key differences between them.

Difference #1: Number of Computers Required

Parallel computing typically requires one computer with multiple processors. Distributed computing, on the other hand, involves several autonomous (and often geographically separate and/or distant) computer systems working on divided tasks.

Difference #2: Scalability

Parallel computing systems are less scalable than distributed computing systems because the shared memory of a single computer can serve only so many processors at once. A distributed computing system scales by adding more computers to the network.

Difference #3: Memory

In parallel computing, all processors share the same memory and communicate with each other through it. In a distributed computing system, on the other hand, each computer has its own memory and processors, and the computers communicate by passing messages over the network.
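
The two communication styles can be contrasted in a short sketch. Both functions below run threads inside one Python process, so this only models the difference: in `shared_memory_sum` the workers coordinate through a shared variable, while in `message_passing_sum` a `queue.Queue` stands in for the network and each worker touches only its own data. The function names are assumptions made for this example.

```python
import queue
import threading


def _run(workers, target, chunks):
    """Start one thread per chunk and wait for all of them to finish."""
    threads = [threading.Thread(target=target, args=(chunk,)) for chunk in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def shared_memory_sum(values, workers=4):
    """Workers add into one shared total, guarded by a lock."""
    total = [0]                       # state visible to every worker
    lock = threading.Lock()

    def work(chunk):
        partial = sum(chunk)
        with lock:                    # coordinate through the shared variable
            total[0] += partial

    _run(workers, work, [values[i::workers] for i in range(workers)])
    return total[0]


def message_passing_sum(values, workers=4):
    """Workers keep private state and send their result as a message."""
    inbox = queue.Queue()             # stands in for the network

    def work(chunk):
        inbox.put(sum(chunk))         # no shared variable is touched

    _run(workers, work, [values[i::workers] for i in range(workers)])
    return sum(inbox.get() for _ in range(workers))
```

Both functions return the same total for the same input. The difference is in what has to be protected: the shared-memory version needs a lock around the common variable, while the message-passing version needs a reliable channel between workers.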

Difference #4: Synchronization

In parallel computing, all processors share a single master clock for synchronization, while distributed computing systems use synchronization algorithms.
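
The article does not name a specific synchronization algorithm. One well-known example is the Lamport logical clock, which orders events across machines without a shared physical clock. The class below is a minimal sketch of that idea; the class and method names are chosen for this illustration.

```python
class LamportClock:
    """Logical clock: a counter that only moves forward."""

    def __init__(self):
        self.time = 0

    def tick(self):
        """Local event: advance the counter by one."""
        self.time += 1
        return self.time

    def send(self):
        """Sending is an event; the returned value travels with the message."""
        return self.tick()

    def receive(self, message_time):
        """Jump past the sender's timestamp, counting the receive as an event."""
        self.time = max(self.time, message_time) + 1
        return self.time
```

If machine A has counted two local events and then sends a message, the message carries timestamp 3. Machine B, whose own counter is still at 0, moves to 4 on receipt, so the receive is ordered after the send even though the two machines never compared wall-clock time.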

Difference #5: Usage

Parallel computing is used to increase computer performance and for scientific computing, while distributed computing is used to share resources and improve scalability.

When to Use Parallel Computing: Examples

This computing method is ideal for anything involving complex simulations or modeling. Common applications for it include seismic surveying, computational astrophysics, climate modeling, financial risk management, agricultural estimates, video color correction, medical imaging, drug discovery, and computational fluid dynamics.

When to Use Distributed Computing: Examples

Distributed computing is best for building and deploying powerful applications running across many different users and geographies. Anyone performing a Google search is already using distributed computing. Distributed system architectures have shaped much of what we would call “modern business,” including cloud-based computing, edge computing, and software as a service (SaaS).

Which Is Better: Parallel or Distributed Computing?

It’s hard to say which is “better,” parallel or distributed computing, because it depends on the use case (see the examples above). If you need raw computational power and work in a scientific or other highly analytics-based field, then you’re probably better off with parallel computing. If you need scalability and resilience and can afford to support and maintain a computer network, then you’re probably better off with distributed computing.
