Introduction

MariaDB has evolved steadily since it was introduced as a fork of MySQL back in 2009 (random trivia: that was the same year Pure Storage was founded). It was initially used primarily on single hosts or in small clusters by small and medium businesses. As it has matured, it now runs across many platforms and in large-scale enterprise deployments that support business-critical functions. We have seen this evolution firsthand with many of our customers, so we thought the release of DirectFlash™ Fabric was a good opportunity to investigate and challenge some of the standard maxims that surround MariaDB deployments.

Challenge: Performance

Probably the first question on many readers’ minds is why they should consider a Pure Storage FlashArray™ when Direct Attached Storage (DAS) has long been the de facto standard for on-prem MariaDB deployments. It’s a valid question: for applications such as MariaDB that lean heavily on host memory, keeping the analytics pipeline full is critical. The time it takes to finish a query or analysis and get tangible results has major business ramifications at any scale. As a result, businesses have traditionally given up feature-rich shared storage in exchange for the local performance of DAS, in both single-instance and clustered deployments.

However, DirectFlash delivers efficiencies at the array level, and DirectFlash Fabric connects the FlashArray directly to host compute and memory. Given both, we figured it was time to run some new experiments to see how the latest storage and networking innovations compare against the status quo.

MariaDB Performance Test Setup

We ran a series of experiments on a subset of applications that are poised to take advantage of the new DirectFlash Fabric paradigm (see more on the technology and the other applications here). MariaDB was among the applications that responded most favorably.

The primary goal of this testing was to build a static MariaDB configuration so that HammerDB and its TPC-C workload could be executed consistently against the same hardware, with storage connectivity as the primary variable. Between benchmark runs, the main change we made was at the storage layer, switching between NVMe-oF (DirectFlash Fabric), iSCSI and DAS.

We used HammerDB as our load generation utility against MariaDB v10.3.12, running on a single host with the following characteristics:

OS: Red Hat Enterprise Linux 7.6

Host to Pure Storage //X90R2 Connectivity: Mellanox ConnectX-4 Lx (Dual Port 25 GbE) and 10Gb iSCSI

DAS: 4x 1.6TB 12Gb/s SAS SSD (RAID 10)

We configured HammerDB to build a TPC-C schema with 5,000 warehouses and to run the workload against MariaDB with 100 virtual users.

Finally, we set vm.swappiness to 0 on the Linux host. To drive more concurrent operations, we also added the following InnoDB parameters to /etc/my.cnf.d/server.cnf, which MariaDB reads at startup:

```ini
[mysqld]
innodb_buffer_pool_size=8G
innodb_buffer_pool_instances=8
```
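The Python sketch below is a quick sanity check on these values. It is our own illustration and was not part of the test harness. It parses a server.cnf-style fragment and computes the buffer pool size per instance. The MySQL reference manual, whose InnoDB options MariaDB 10.3 shares, recommends keeping each instance at least 1 GB, and 8G spread across 8 instances just meets that guideline. The helper names are hypothetical. The parser assumes a simple file with no `!include`/`!includedir` directives and with options spelled using underscores.

```python
import configparser

# Multipliers for size suffixes (this sketch handles only K, M, G and T).
_SUFFIXES = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(value: str) -> int:
    """Convert a MariaDB-style size such as '8G' into bytes."""
    value = value.strip().upper()
    if value and value[-1] in _SUFFIXES:
        return int(value[:-1]) * _SUFFIXES[value[-1]]
    return int(value)


def buffer_pool_per_instance(cnf_text: str, section: str = "mysqld") -> int:
    """Return innodb_buffer_pool_size // innodb_buffer_pool_instances, in bytes."""
    # allow_no_value tolerates bare flags (e.g. 'skip-name-resolve');
    # interpolation=None stops '%' in values from being treated specially.
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.read_string(cnf_text)
    opts = parser[section]
    size = parse_size(opts["innodb_buffer_pool_size"])
    instances = int(opts["innodb_buffer_pool_instances"])
    return size // instances


cnf = """
[mysqld]
innodb_buffer_pool_size=8G
innodb_buffer_pool_instances=8
"""
print(buffer_pool_per_instance(cnf) / 1024**3)  # 1.0 (GiB per instance)
```

MariaDB 10.5 and later use a single buffer pool instance, so `innodb_buffer_pool_instances` only matters on earlier releases such as the 10.3.12 used here.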

MariaDB Performance Results

Running the HammerDB benchmark gave us a Transactions Per Minute (TPM) metric, which we used to compare the test cases described above. We took several steps before gathering results:

- Let each run continue for at least 8 minutes to reach steady state.
- Confirmed that no virtual users were in an error state and that the test case had no other problems.
- Repeated each run several times to ensure consistent results.

Impressively, DirectFlash Fabric delivered 25-30% higher TPM than 10 Gb iSCSI and roughly 30% higher TPM than DAS. We also tracked how much TPM varied over the course of each test (peaks and valleys). DirectFlash Fabric and iSCSI both held TPM within 7% of the average, while DAS varied significantly more (~15%).
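The comparisons above come down to two simple calculations, and the sketch below shows one way to compute them from TPM samples. We assume that "within 7% of the average" means the largest deviation of any sample from the mean. The function names are illustrative only, and the numbers in the example are hypothetical, not our measured results.

```python
from statistics import mean
from typing import Sequence


def relative_gain_pct(new: float, baseline: float) -> float:
    """How much higher `new` is than `baseline`, as a percentage of baseline."""
    return (new - baseline) * 100.0 / baseline


def max_deviation_pct(samples: Sequence[float]) -> float:
    """Largest distance of any sample from the mean, as a percentage of the mean.

    Assumption: this is how a "within X% of the average" figure is derived.
    """
    avg = mean(samples)
    return max(abs(s - avg) for s in samples) * 100.0 / avg


# Hypothetical TPM values for illustration only:
print(relative_gain_pct(130_000, 100_000))            # 30.0
print(max_deviation_pct([93_000, 100_000, 107_000]))  # 7.0
```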

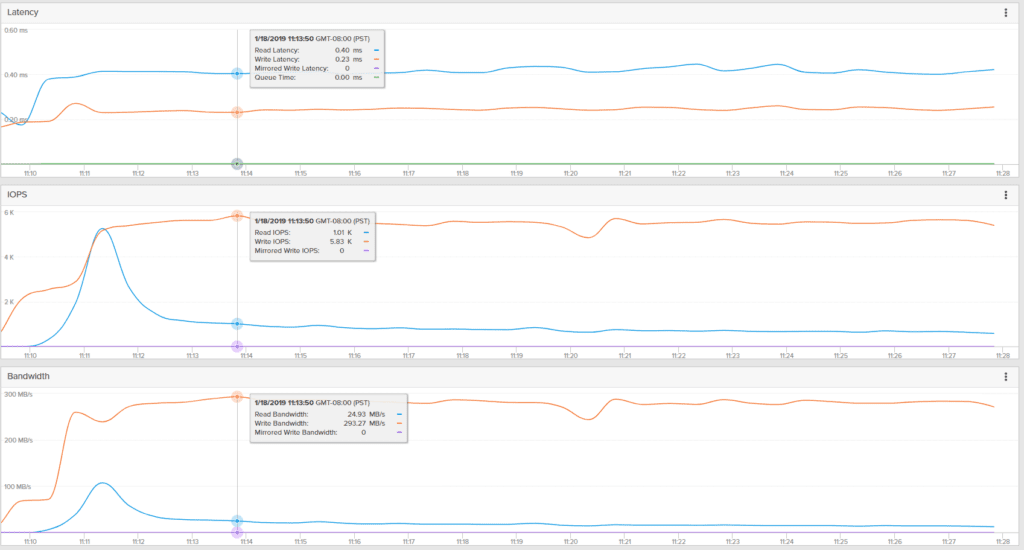

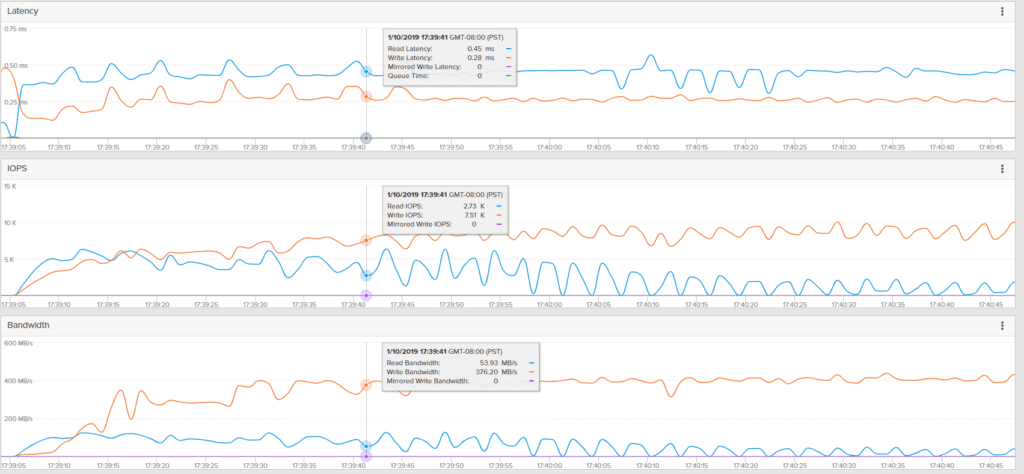

Another way to compare iSCSI and DirectFlash Fabric performance is through the FlashArray//X90R2 GUI, and the results are telling. With DirectFlash Fabric we drove nearly 30% more bandwidth and IOPS than with iSCSI, at comparable latency. Keep in mind that this was a small test with a single database and host, so the metrics shown are modest relative to the array's total performance capacity.
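Bandwidth and IOPS move together for a simple reason: throughput is IOPS multiplied by average I/O size. At an unchanged I/O size, a ~30% IOPS gain therefore shows up as a ~30% bandwidth gain. Little's Law offers a second view. The average number of I/Os in flight equals IOPS multiplied by latency, so more IOPS at comparable latency means the host kept more requests outstanding. The sketch below expresses both relationships in Python. The function names and all numbers in it are hypothetical.

```python
def bandwidth_mib_s(iops: float, avg_io_kib: float) -> float:
    """Throughput (MiB/s) implied by an IOPS rate and an average I/O size (KiB)."""
    return iops * avg_io_kib / 1024.0


def ios_in_flight(iops: float, latency_us: float) -> float:
    """Little's Law: average outstanding I/Os = completion rate x time in system."""
    return iops * latency_us / 1_000_000


# Hypothetical: 30% more IOPS at the same 16 KiB I/O size and 500 us latency.
base_iops, dff_iops = 20_000, 26_000
print(bandwidth_mib_s(base_iops, 16), bandwidth_mib_s(dff_iops, 16))  # 312.5 406.25
print(ios_in_flight(base_iops, 500), ios_in_flight(dff_iops, 500))    # 10.0 13.0
```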

Pure Storage //X90R2 iSCSI Testing Metrics for Single MariaDB Host

Challenge: Scaling, Sizing and Resiliency

Now that we’ve shown some compelling HammerDB results for MariaDB, we can expand on the additional value that Pure Storage unlocks for this and similar workloads. We will cover a couple of key advantages here:

Sizing and Data Reduction: A fundamental challenge for DAS is by no means a new one: proper sizing. Customers are often stuck selecting a ‘best guess’ storage device based on database growth projections that seem reasonable at the time. Unforeseen changes in the business can render that estimate obsolete at any point. If the business or database grows faster than expected, a suddenly undersized set of disks can become a capacity bottleneck. The host must then either be throttled in some fashion or be taken out of service entirely for an unplanned upgrade to a larger, more expensive set of drives. The problem compounds as MariaDB Galera clusters grow, because keeping a uniform host composition becomes inflexible or impossible as the needs of the business change.

Pure Storage solves this problem in two main ways. First, it gives all appropriate hosts shared access to the database storage. Second, it lets you increase datastore size in just a few clicks from our GUI. Furthermore, FlashReduce inline deduplication and compression maximize your effective capacity, with an average of 3:1 data reduction for database workloads across our install base.
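The Python sketch below makes the sizing problem concrete. It computes usable capacity for a RAID 10 set and estimates how many months of compound growth that capacity can absorb. It also shows how much physical capacity a logical dataset needs at a given data reduction ratio. For example, the 4 × 1.6 TB RAID 10 set from our DAS tests yields 3.2 TB usable. The function names are hypothetical, and you supply the growth rate as an assumption. The 3:1 ratio is an install-base average, not a guarantee for any particular database.

```python
def raid10_usable_tb(drives: int, drive_tb: float) -> float:
    """RAID 10 mirrors every drive, so usable capacity is half the raw total."""
    if drives < 4 or drives % 2:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    return drives * drive_tb / 2


def months_until_full(current_tb: float, capacity_tb: float,
                      monthly_growth: float) -> int:
    """Months of compound growth before the data set exceeds capacity.

    monthly_growth is a fraction you estimate, e.g. 0.05 for 5% per month.
    """
    if current_tb <= 0 or monthly_growth <= 0:
        raise ValueError("current size and growth rate must be positive")
    months, size = 0, current_tb
    while size <= capacity_tb:
        size *= 1 + monthly_growth
        months += 1
    return months


def physical_tb_needed(logical_tb: float, reduction_ratio: float) -> float:
    """Physical capacity consumed at a given reduction ratio (3.0 means 3:1)."""
    return logical_tb / reduction_ratio


usable = raid10_usable_tb(4, 1.6)            # the DAS set tested above
print(round(usable, 2))                      # 3.2
print(months_until_full(1.0, usable, 0.05))  # hypothetical 1 TB at 5%/month: 24
```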

Resiliency and Mobility: DAS provides the bare minimum set of data services needed to keep this and other workloads online. It does not, however, provide seamless access to the rich ecosystem of data protection, hybrid cloud and availability services that Pure Storage FlashArray customers have enjoyed for years. These services include on-demand or scheduled snapshots, replication to the cloud, and true active-active replication with ActiveCluster. Best of all, these and future innovations are delivered to our customers at no additional cost through our Evergreen Storage Program.

Setting up DAS in a RAID configuration and running Mariabackup and/or mysqldump jobs is the expected approach to data protection, and it has worked reasonably well. However, backup jobs can degrade application performance. Recovering a corrupt database or an entire cluster also carries long recovery time and recovery point objectives (RTO and RPO), which can mean an outage and lost revenue for the business. Pure Storage snapshots, on the other hand, can be restored instantly to any point at which a snapshot was taken, and they can easily be replicated for use in test/dev instances. Put simply, Pure lets you recover faster and put those backup datasets to work in new ways that were not easy before.
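Many teams snapshot MariaDB 10.3 at the storage layer using a common pattern. They briefly block writes with `FLUSH TABLES WITH READ LOCK`, snapshot the volumes, and then release the lock with `UNLOCK TABLES` from the same session. When MariaDB later starts on a restored copy, InnoDB crash recovery brings it to a consistent state. The Python sketch below assumes a DB-API style cursor. It also assumes a hypothetical `take_snapshot` callable that stands in for whatever triggers the array snapshot; this is not a Pure Storage API. The lock belongs to the session, so both statements must go through the same connection. The `finally` clause releases the lock even if the snapshot call fails.

```python
from typing import Callable, Protocol


class Cursor(Protocol):
    """The single DB-API cursor method this sketch relies on."""

    def execute(self, operation: str) -> object: ...


def snapshot_with_read_lock(cursor: Cursor, take_snapshot: Callable[[], str]) -> str:
    """Hold a global read lock only while the (hypothetical) snapshot runs.

    take_snapshot is a placeholder for whatever asks the array to snapshot
    the volume(s) holding the MariaDB datadir; it returns a snapshot name.
    """
    cursor.execute("FLUSH TABLES WITH READ LOCK")  # blocks writes in this session's scope
    try:
        return take_snapshot()
    finally:
        cursor.execute("UNLOCK TABLES")  # always release, even on failure
```

With a real driver such as PyMySQL or mysql-connector-python, you would pass `connection.cursor()`. Keep the locked window short, because writes stall while the lock is held. MariaDB 10.4 and later also offer `BACKUP STAGE` statements for finer-grained locking.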

Conclusion

New stack applications like MariaDB are natively compatible with both on- and off-premises solutions. When coupled with Pure Storage DirectFlash Fabric performance, they now have the potential to unlock previously unavailable data services. Business requirements keep changing, and the flexibility and agility to scale this and other workloads on the appropriate tier allow corporations to spend wisely while getting maximum ROI from their data.

As always, we encourage customers to test their own unique applications and workloads against the capabilities shown in this post to learn how these new technologies can benefit them. Stay tuned as well for the next part of this blog post. It will focus on performance results from a larger Galera-based cluster, where we expect DirectFlash Fabric to show even greater performance advantages.

Finally, if you’re interested in reading about some of the other exciting applications we’ve been testing as part of this launch, please check out one of the links below:

DirectFlash Fabric vs iSCSI with Epic Workload

Oracle 18c

MongoDB