Oracle ASM stripes data across all disks in a disk group regardless of redundancy mode. With NORMAL or HIGH redundancy it also mirrors the data, while with EXTERNAL redundancy it only stripes and leaves data protection to the storage array. We are often asked whether customers can migrate their Oracle data from their current storage to Pure Storage® when the ASM disk group uses EXTERNAL redundancy.

The answer is yes: the migration is possible, and it can be done online.

This post walks through migrating an ASM disk group that uses EXTERNAL redundancy.
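To make the striping and mirroring distinction concrete, here is a simplified Python model (not ASM's actual allocator): primary extents are striped round-robin across every disk, and NORMAL and HIGH redundancy add one or two mirror copies on other disks. Real ASM places mirror copies in separate failure groups and uses its own allocation logic; the round-robin rule and disk names here are illustrative assumptions.

```python
from __future__ import annotations

# Simplified model: number of copies ASM keeps per extent in each redundancy mode.
# EXTERNAL keeps one copy and relies on the storage array for protection.
COPIES_PER_MODE = {"EXTERNAL": 1, "NORMAL": 2, "HIGH": 3}


def place_extents(num_extents: int, disks: list[str], mode: str) -> list[list[str]]:
    """Return, for each extent, the disks holding its copies (illustrative only).

    Primary copies are striped round-robin across all disks; mirror copies go to
    the following disks so no two copies of one extent share a disk.
    """
    copies = COPIES_PER_MODE[mode]
    if copies > len(disks):
        raise ValueError(f"{mode} redundancy needs at least {copies} disks")
    placement = []
    for extent in range(num_extents):
        primary = extent % len(disks)  # striping: rotate the starting disk
        placement.append([disks[(primary + i) % len(disks)] for i in range(copies)])
    return placement


if __name__ == "__main__":
    print(place_extents(4, ["DATA1", "DATA2"], "EXTERNAL"))
    print(place_extents(3, ["A", "B", "C"], "NORMAL"))
```

With EXTERNAL redundancy each extent has a single copy, so the four extents simply alternate between the two disks; with NORMAL redundancy every extent also gets a mirror on a different disk.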

ASM migration on an EXTERNAL redundancy disk group

Test environment: Oracle 12c running on a VM with ASM and ASMLib.

Test sequence

1. Create two Pure Storage volumes, attach them to the ESX host, and rescan the storage.
2. Create a new datastore on the first volume.
3. Provision a VMFS disk from the datastore and attach it to the VM as a new disk.
4. Using ASMLib, run createdisk to stamp the disk header as an ASM disk.
5. Create an ASM disk group with EXTERNAL redundancy on this disk.
6. Set up the Oracle database on that disk group.
7. Set up SLOB and run a load.
8. Add a new RDM disk to the VM using the second volume attached to the ESX host.
9. Using ASMLib, run createdisk on the new disk's partition to stamp its header as an ASM disk.
10. Add the new disk to the disk group and drop the old disk in the same command, which triggers a rebalance.
11. Validate the database.

For brevity, we start after step 3, where the VMFS disk is attached to the Oracle VM as /dev/sdc and a partition has been created on it with fdisk.

At this point we installed SLOB on the PROD database in the +DATA disk group, started a SLOB run with 8 users, and left it running throughout the following steps. Treating this as a customer's existing setup, with databases running on their current storage, we moved on to step 8: we added the second volume to the VM as an RDM disk (/dev/sdd) to mimic adding a new Pure Storage volume. We then followed the same procedure as before, creating a partition and invoking oracleasm to stamp the disk header.
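The stamping step can be sketched as a small Python helper that builds the ASMLib commands for the new partition. It assumes sdX-style device naming, so the first partition created with fdisk on /dev/sdd is /dev/sdd1, and uses the label DATA2; the function name is hypothetical, and the commands would be run as root on the database VM.

```python
import shlex
from typing import List


def asmlib_stamp_commands(label: str, device: str, partition: int = 1) -> List[str]:
    """Hypothetical helper: build ASMLib commands to stamp a partition as an ASM disk.

    Assumes sdX-style naming, where partition 1 of /dev/sdd is /dev/sdd1.
    """
    part_path = f"{device}{partition}"
    return [
        # Write the ASM disk header and ASMLib label onto the partition.
        shlex.join(["oracleasm", "createdisk", label, part_path]),
        # Rescan for labeled disks (needed on any other nodes that share the disk).
        "oracleasm scandisks",
        # List the ASMLib disks to confirm the new label is visible.
        "oracleasm listdisks",
    ]


if __name__ == "__main__":
    for cmd in asmlib_stamp_commands("DATA2", "/dev/sdd"):
        print(cmd)
```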

Next, we issued a single ALTER DISKGROUP command that added the new disk DATA2, dropped the old disk DATA1, and rebalanced the disk group.
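A minimal sketch of that single statement, built in Python. The disk group name DATA comes from the +DATA disk group and the disk names from the step above; the 'ORCL:DATA2' discovery path assumes ASMLib's default ORCL: prefix, DROP DISK takes the ASM disk name (which defaults to the ASMLib label), and the optional REBALANCE POWER value is an illustrative assumption, not the setting used in this test. The helper name swap_disk_sql is hypothetical.

```python
from typing import Optional


def swap_disk_sql(diskgroup: str, new_disk_path: str, old_disk_name: str,
                  power: Optional[int] = None) -> str:
    """Hypothetical helper: one ALTER DISKGROUP statement that adds the new disk,
    drops the old one, and rebalances in a single operation."""
    sql = (f"ALTER DISKGROUP {diskgroup} "
           f"ADD DISK '{new_disk_path}' "   # ASMLib discovery path of the new disk
           f"DROP DISK {old_disk_name}")    # ASM disk name of the disk being retired
    if power is not None:
        # Optional rebalance speed; omit to use the instance default.
        sql += f" REBALANCE POWER {power}"
    return sql + ";"


if __name__ == "__main__":
    print(swap_disk_sql("DATA", "ORCL:DATA2", "DATA1", power=8))
```

The statement would be run from SQL*Plus connected to the ASM instance. The dropped disk stays in the DROPPING state until the rebalance completes, so it should only be detached from the VM after that.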

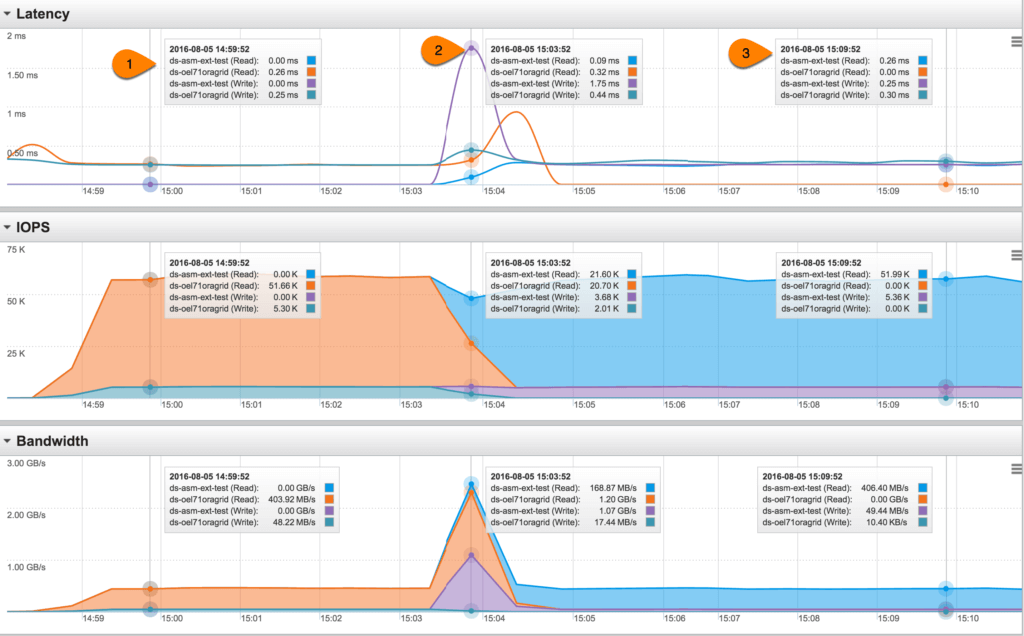

Throughout this time, SLOB kept running without any issues. The Pure Storage GUI below shows that performance remained the same before and after the disk addition and removal.

1) SLOB was running on the disk group with DISK1 from the VMFS datastore (ds-oel71oragrid), performing over 51K read IOPS and 5K write IOPS with latencies under 0.26 ms and total bandwidth over 450 MB/s.
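The figures in point 1 allow a quick sanity check: dividing bandwidth by total IOPS gives the average I/O size. Because the reported values are lower bounds ("over"), the result is approximate, and treating MB as 10^6 bytes is an assumption about the GUI's units.

```python
def avg_io_size_kib(bandwidth_mb_per_s: float, read_iops: float, write_iops: float) -> float:
    """Average I/O size in KiB, assuming MB means 10**6 bytes."""
    total_iops = read_iops + write_iops
    bytes_per_io = bandwidth_mb_per_s * 1_000_000 / total_iops
    return bytes_per_io / 1024


if __name__ == "__main__":
    # Figures from point 1: ~450 MB/s, ~51K read IOPS, ~5K write IOPS.
    print(round(avg_io_size_kib(450, 51_000, 5_000), 1))
```

The result is just under 8 KiB per I/O, which is consistent with mostly single-block I/O if the database uses the common 8 KB block size (the block size is not stated for this test).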

2) This is when the ALTER DISKGROUP command was executed: the new RDM disk (ds-asm-ext-test) was added to the disk group, the prior disk was dropped, and the rebalance ran. As the Bandwidth section shows, the rebalance read additional data from the old disk and wrote it to the new disk, on the order of 1 GB/s.
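Because this swap moves every allocated extent from the old disk to the new one, the rebalance time scales with the amount of data in the disk group. A back-of-the-envelope estimate at the roughly 1 GB/s observed here is sketched below; the 600 GB size is hypothetical, and the actual rate depends on the rebalance power and the concurrent workload.

```python
def rebalance_minutes(data_gb: float, rate_gb_per_s: float = 1.0) -> float:
    """Rough rebalance time when all data moves from one disk to another."""
    seconds = data_gb / rate_gb_per_s
    return seconds / 60


if __name__ == "__main__":
    # Hypothetical 600 GB of allocated data at the ~1 GB/s seen in the GUI.
    print(rebalance_minutes(600))
```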

3) SLOB is now running on the disk group with DISK2 from the RDM, with performance similar to before.

ASM is one of the best features Oracle has ever provided (at no extra cost), and it enables seamless migration whether the disk group uses NORMAL or EXTERNAL redundancy.

ASM Migration Scripts

Conclusion

Oracle ASM’s flexibility and robustness shine when it comes to seamless storage migrations, as demonstrated by the process outlined in this blog. Migrating an ASM disk group with EXTERNAL redundancy to Pure Storage volumes is not only possible but can also be performed online with minimal disruption. This process ensures continuity of database operations, as evidenced by SLOB’s uninterrupted performance during migration, with consistent IOPS and low latency metrics.

Pure Storage further complements ASM’s capabilities by delivering high-performance storage with exceptional reliability and efficiency. The ability to handle demanding workloads while maintaining consistent performance during migration highlights the synergy between Oracle ASM and Pure Storage. This partnership empowers businesses to achieve seamless migrations, enhanced operational efficiency, and improved scalability in their storage environments, whether for development, testing, or production systems.
