Most Pure FlashArray customers are used to the snapshot functionality, which lets them take a crash-consistent or application-consistent snapshot in a flash (literally and figuratively), irrespective of the database size. Snapshots help protect the database from accidental changes and make it easy to create clones for development and test use, but they cannot be treated as backups. By definition, a backup has to reside on a second medium, and local snapshots do not meet this requirement. Customers can certainly extend the snapshots to a second FlashArray using asynchronous replication and treat those copies as backups. Alternatively, they can offload the snapshots to NFS targets such as FlashBlade using Snap to NFS, or to the cloud using CloudSnap.

Meanwhile, many Oracle customers still use RMAN backups, specifically to validate the backup before offloading it to a secondary medium, and do not want to rely solely on snapshot functionality. They generally run RMAN backups from their production database to the secondary medium (disk or sbt). This becomes a challenge when the database is very large and a full backup takes a long time to complete. One option is to increase the number of RMAN channels if the production system has enough bandwidth for the data transfer, but adding more channels can introduce performance overhead on the production system.
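As a rough illustration of the channel option, the sketch below prints an RMAN run block with a configurable number of disk channels. The function name, the channel count of 4, and the backup piece naming are assumptions made for illustration; /x01/backup is the sample location used later in this post. It only prints the script, so nothing runs against a database.

```shell
#!/bin/sh
# Print an RMAN run block that allocates N disk channels.
# $1 = number of channels, $2 = backup destination directory (both placeholders).
rman_parallel_backup() {
  n="$1"
  dest="$2"
  echo "run {"
  i=1
  while [ "$i" -le "$n" ]; do
    # One channel per loop iteration; %d is the database name, %U a unique piece name.
    echo "  allocate channel c$i device type disk format '$dest/%d_%U';"
    i=$((i + 1))
  done
  echo "  backup database;"
  echo "}"
}

rman_parallel_backup 4 /x01/backup
```

The printed script could be piped into an RMAN session on the production host. Each extra channel adds read load there, which is the overhead this post tries to avoid.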

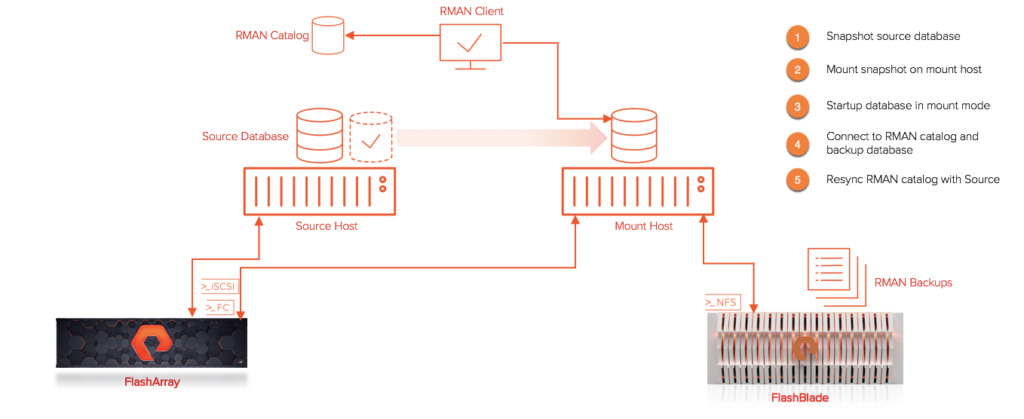

This blog covers the process to take a full database backup of a huge database using Pure FlashArray snapshots as a source and backing it up using Oracle RMAN from a mount host (secondary host; some backup vendors refer them as proxy host) into the secondary medium.

The following picture shows the overall process for backing up an Oracle database using RMAN from a secondary host. In our case, for rapid backup and restore, we are using FlashBlade as the target medium for the RMAN backup.

Prerequisites

- The mount host should be configured the same as the production host.

- The mount host should have the same Oracle binary code path as the production host.

- The mount host should have the same oracle (or grid) user ID and group ID as production.

- The mount host should have the same OS level as production.

- An RMAN recovery catalog is required to store backup and recovery details of the Oracle databases.

- The production and mount hosts should have SQL*Net connectivity to the RMAN catalog.

- The mount host should have a copy of the database init.ora and password file (if one exists).

- Datafiles should be placed on separate volume(s).

- The backup sets from the mount host should be made accessible to the production host for recovery.

- An ASM instance is set up on the mount host.

- Empty volumes of the same size as the production volumes (for data and FRA) are created, mounted on the mount host, and discovered.

Sample Diskgroups

Production Host

Mount Host

Note: If you have different LUN designs, adjust the script accordingly.

Full backup – Steps

1. On the production host, take a snapshot of the datafile volume(s). If the datafiles are spread across multiple volumes, use a protection group (pgroup) to group them so the snapshot can be taken at the pgroup level.

2. On the production host, force the current log to be archived.

3. On the production host, take two backups of the control file. One will be used to start the database on the mount host, and the other will be part of the RMAN backup set. (The CONTROL_FILES parameter in the mount host init.ora will point to the control_start control file.)

4. On the production host, resynchronize the RMAN catalog with the production database. This adds the most recent archived log information to the RMAN catalog.

5. On the production host, take a snapshot of the FRA volume.

6. On the mount host, shut down the prod database if it is mounted.

7. On the mount host, dismount the +DATA and +FRA diskgroups from ASM if they are mounted.

8. On the mount host, force copy the snapshot of +DATA that was taken in step 1. Pass the full volume name with snapshot details to overwrite the volume on the mount host.

9. On the mount host, force copy the snapshot of +FRA that was taken in step 5. Pass the full volume name with snapshot details to overwrite the volume on the mount host.

10. On the mount host, mount the diskgroups +DATA and +FRA.

11. On the mount host, start the database in mount mode. Make sure the init.ora file points to +FRA/PROD/CONTROLFILE/control_start as specified in step 3.

12. On the mount host, back up the database using RMAN, including the archived logs and the backup control file. Connect to the RMAN recovery catalog and back up the database. This post uses disk as the target media; in your case it may be of type sbt, so locations such as /x01/backup should be updated accordingly.

13. On the production host, delete obsolete archived log files from the +FRA area. Make sure to update the device type, as we used 'disk' for testing.
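Steps 1 through 12 above can be sketched as a dry-run script that prints the commands in order without executing anything. This is a minimal sketch under stated assumptions, not the exact script behind this post: the array login, the volume names, the snapshot suffix, the control_backup file name, and the backup piece formats are all hypothetical placeholders, and the single-volume `purevol snap` form is used instead of a pgroup snapshot. The `--suffix` and `--overwrite` options should be checked against your Purity CLI version before use.

```shell
#!/bin/sh
# Dry-run sketch of the full-backup flow: prints commands, executes nothing.
# Every name below is a placeholder (assumption), not a value from a real setup.
ARRAY="pureuser@flasharray"          # FlashArray SSH login
PROD_DATA_VOL="oracle-prod-data"     # production datafile volume
PROD_FRA_VOL="oracle-prod-fra"       # production FRA volume
MNT_DATA_VOL="oracle-mnt-data"       # mount host volume behind +DATA
MNT_FRA_VOL="oracle-mnt-fra"         # mount host volume behind +FRA
CTL_DIR="+FRA/PROD/CONTROLFILE"      # control file location named in the steps
SUFFIX="${SUFFIX:-bkp-$(date +%Y%m%d%H%M)}"

# Steps 1 and 5: snapshot a production volume.
snap_cmd() { echo "ssh $ARRAY purevol snap --suffix $SUFFIX $1"; }

# Steps 8 and 9: overwrite a mount host volume ($2) with the snapshot of $1.
copy_cmd() { echo "ssh $ARRAY purevol copy --overwrite $1.$SUFFIX $2"; }

# Steps 2 and 3: SQL for the production instance (sqlplus / as sysdba).
prod_sql() {
cat <<EOF
alter system archive log current;
alter database backup controlfile to '$CTL_DIR/control_start' reuse;
alter database backup controlfile to '$CTL_DIR/control_backup' reuse;
EOF
}

# Steps 7 and 10: SQL for the mount host ASM instance; $1 is dismount or mount.
asm_sql() {
  for dg in DATA FRA; do echo "alter diskgroup $dg $1;"; done
}

# Step 12: RMAN script for the mount host (rman target / catalog ...).
rman_full() {
cat <<EOF
run {
  allocate channel c1 device type disk;
  backup database format '/x01/backup/%d_db_%U';
  backup archivelog all format '/x01/backup/%d_arch_%U';
  backup controlfilecopy '$CTL_DIR/control_backup' format '/x01/backup/%d_ctl_%U';
}
EOF
}

full_backup_plan() {
  echo "## production host"
  snap_cmd "$PROD_DATA_VOL"                  # step 1
  prod_sql                                   # steps 2 and 3
  echo "resync catalog;"                     # step 4, in an RMAN session
  snap_cmd "$PROD_FRA_VOL"                   # step 5
  echo "## mount host"
  echo "shutdown immediate;"                 # step 6
  asm_sql dismount                           # step 7
  copy_cmd "$PROD_DATA_VOL" "$MNT_DATA_VOL"  # step 8
  copy_cmd "$PROD_FRA_VOL" "$MNT_FRA_VOL"    # step 9
  asm_sql mount                              # step 10
  echo "startup mount;"                      # step 11
  rman_full                                  # step 12
}

full_backup_plan
```

Keeping each step as a function that only prints its command makes the ordering easy to review before wiring the output into ssh, sqlplus, or rman on the respective hosts.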

Periodic archived logs backup – Steps

Perform the following steps periodically, as your requirements dictate. The only difference from the full database backup is that we do not take a snapshot of the +DATA volume.

1. On the production host, force the current log to be archived.

2. On the production host, take two backups of the control file. One will be used to start the database on the mount host, and the other will be part of the RMAN backup set. (The CONTROL_FILES parameter in the mount host init.ora will point to the control_start control file.)

3. On the production host, resynchronize the RMAN catalog with the production database. This adds the most recent archived log information to the RMAN catalog.

4. On the production host, take a snapshot of the FRA volume.

5. On the mount host, shut down the prod database if it is mounted.

6. On the mount host, dismount the +DATA and +FRA diskgroups from ASM if they are mounted.

7. On the mount host, force copy the snapshot of +FRA that was taken in step 4. Pass the full volume name with snapshot details to overwrite the volume on the mount host.

8. On the mount host, mount the diskgroups +DATA and +FRA.

9. On the mount host, start the database in mount mode. Make sure the init.ora file points to +FRA/PROD/CONTROLFILE/control_start as specified in step 2.

10. On the mount host, back up the archived logs and control files using RMAN. Connect to the RMAN recovery catalog and perform the backup. This post uses disk as the target media, but this can be of type sbt, so locations such as /x01/backup should be updated accordingly.

11. On the production host, delete obsolete archived log files from the +FRA area. Make sure to update the device type, as we used 'disk' for testing. (Note: Follow your standard operating procedure for deleting archived logs rather than the example below, where we delete archived logs after they have been backed up twice.)

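```shell
# Run on the production host against the prod instance.
export ORACLE_SID=prod
# noprompt skips the confirmation prompt, which cannot be answered inside a here-document.
rman target / catalog user/pwd@rmancatalogdb << EOF
delete noprompt archivelog all backed up 2 times to device type disk;
exit
EOF
```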
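The FRA-only part of the periodic flow (steps 4, 7, and 10) can be sketched the same way, as commands that are printed rather than executed. As before, this is a hedged sketch: the array login, volume names, snapshot suffix, control_backup file name, and backup piece formats are hypothetical placeholders, and the Purity CLI options should be verified for your release.

```shell
#!/bin/sh
# Dry-run sketch of the periodic archived-log flow: prints commands only.
# All names are placeholders (assumptions), not values from a real setup.
ARRAY="pureuser@flasharray"        # FlashArray SSH login
PROD_FRA_VOL="oracle-prod-fra"     # production FRA volume
MNT_FRA_VOL="oracle-mnt-fra"       # mount host volume behind +FRA
CTL_DIR="+FRA/PROD/CONTROLFILE"    # control file location named in the steps
SUFFIX="${SUFFIX:-arch-$(date +%Y%m%d%H%M)}"

# Step 10: RMAN script for the mount host; $1 is the backup destination.
rman_archivelogs() {
cat <<EOF
run {
  allocate channel c1 device type disk;
  backup archivelog all format '$1/%d_arch_%U';
  backup controlfilecopy '$CTL_DIR/control_backup' format '$1/%d_ctl_%U';
}
EOF
}

archivelog_plan() {
  # Step 4: snapshot only the FRA volume; +DATA is left untouched.
  echo "ssh $ARRAY purevol snap --suffix $SUFFIX $PROD_FRA_VOL"
  # Step 7: overwrite the mount host FRA volume with that snapshot.
  echo "ssh $ARRAY purevol copy --overwrite $PROD_FRA_VOL.$SUFFIX $MNT_FRA_VOL"
  # Step 10: back up archived logs and the control file copy.
  rman_archivelogs /x01/backup
}

archivelog_plan
```

Unlike the full backup, no `backup database` command appears here, so the run time depends on the volume of archived logs generated since the last run rather than on the database size.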

Conclusion

Pure FlashArray enhances backup and recovery processes by providing a flexible, efficient, and scalable platform for managing large Oracle databases. Its snapshot functionality allows for rapid creation of crash-consistent or application-consistent snapshots, making it ideal for protecting databases and enabling quick cloning for development and testing. While snapshots alone may not meet traditional backup requirements, extending them to secondary storage using features like asynchronous replication, Snap to NFS, or CloudSnap ensures robust backup solutions.

For Oracle RMAN users, leveraging FlashArray snapshots as a backup source minimizes production system overhead, significantly reduces backup times, and enhances data recovery capabilities. Combined with FlashBlade as the target medium, FlashArray supports rapid data access, ensuring reliable performance during backup and restore operations. This streamlined integration not only accelerates database management workflows but also strengthens disaster recovery strategies, making it an invaluable tool for enterprises handling critical data.
