Summary

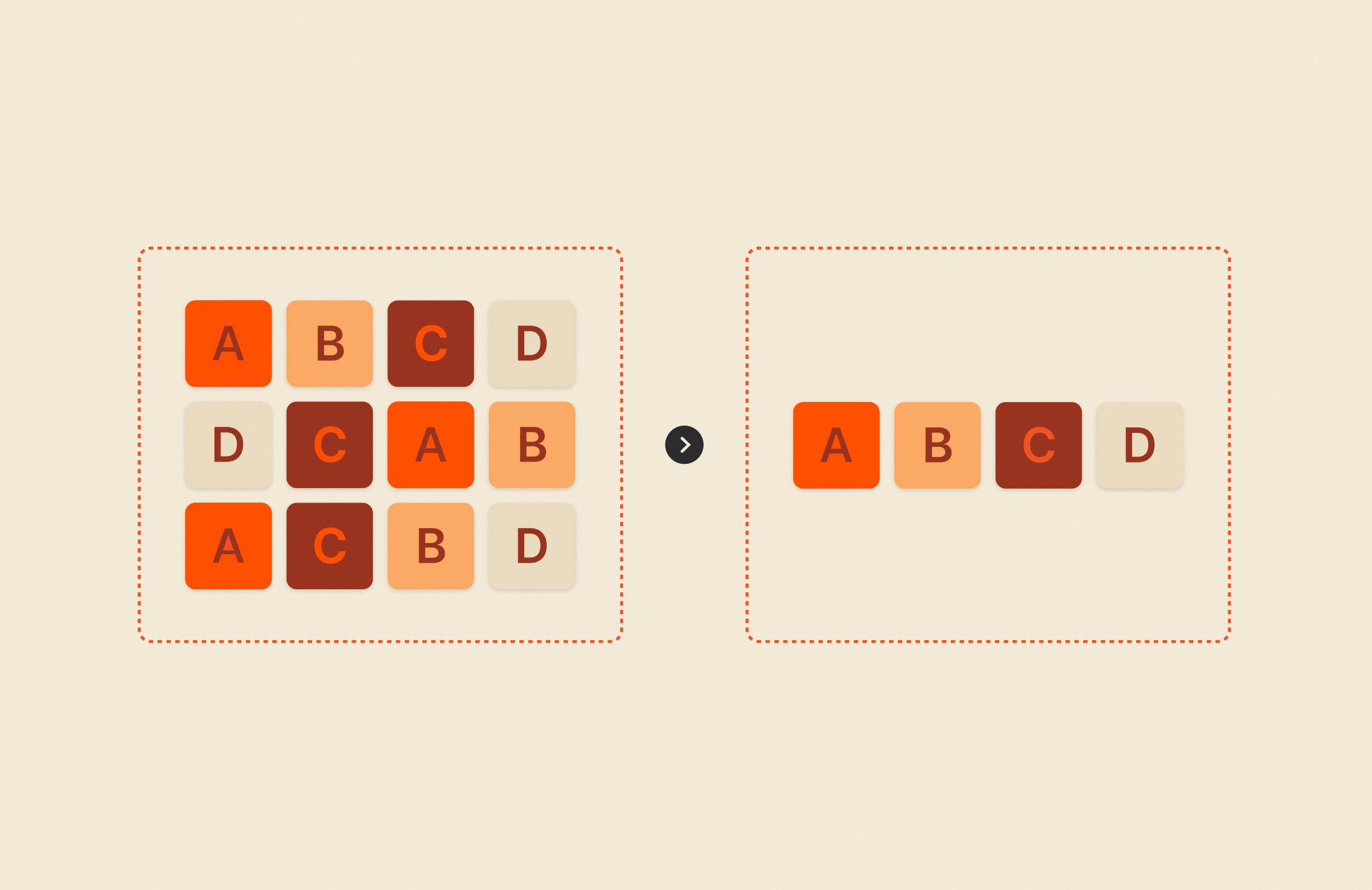

Deduplication is a process that prevents the same data from being stored again. Deduplication ratios are an important calculation that can help you size the expected data footprint.

It’s super important to understand where deduplication ratios, in relation to backup applications and data storage, come from.

Deduplication prevents the same data from being stored again, lowering the data storage footprint. In terms of hosting virtual environments, like FlashArray//X™ and FlashArray//C™, you can see tremendous amounts of native deduplication due to the repetitive nature of these environments.

Backup applications and targets have a different makeup. Even still, deduplication ratios have long been a talking point in the data storage industry and continue to be a decision point and factor in buying cycles. Data Domain pioneered this tactic to overstate its effectiveness, leaving customers thinking the vendor’s appliance must have a magic wand to reduce data by 40:1.

I wanted to take the time to explain how deduplication ratios are derived in this industry and the variables to look for in figuring out exactly what to expect in terms of deduplication and data footprint.

Built to Scale

with Evergreen

See how Evergreen and Pure Storage simplify

storage management across any environment.

Let’s look at a simple example of a data protection scenario.

Example:

A company has 100TB of assorted data it wants to protect with its backup application. The necessary and configured agents go about doing the intelligent data collection and send the data to the target.

Initially, and typically, the application will leverage both software compression and deduplication.

Compression by itself will almost always yield a decent amount of data reduction. In this example, we’ll assume 2:1, which would mean the first data set goes from 100TB to 50TB.

Deduplication doesn’t usually do much data reduction on the first baseline backup. Sometimes there are some efficiencies, like the repetitive data in virtual machines, but for the sake of this generic example scenario, we’ll leave it at 50TB total.

So, full backup 1 (baseline): 50TB

Now, there are scheduled incremental backups that occur daily from Monday to Friday. Let’s say these daily changes are 1% of the aforementioned data set.

Each day, then, there would be 1TB of additional data stored. 5 days at 1TB = 5TB.

Let’s add the compression in to reduce that 2:1, and you have an additional 2.5TB added.

50TB baseline plus 2.5TB of unique blocks means a total of 52.5TB of data stored.

Let’s check the deduplication rate now. 105TB/52.5TB = 2x

You may ask: “Wait, that 2:1 is really just the compression? Where is the deduplication?”

Great question and the reason why I’m writing this blog. Deduplication prevents the same data from being stored again. With a single full backup and incremental backups, you wouldn’t see much more than just the compression.

Where deduplication measures impact is in the assumption that you would be sending duplicate data to your target. This is usually discussed as data under management.

Data under management is the logical data footprint of your backup data, as if you were regularly backing up the entire data set, not just changes, without deduplication or compression.

For example, let’s say we didn’t schedule incremental backups but scheduled full backups every day instead.

Without compression/deduplication, the data load would be 100TB for the initial baseline and then the same 100TB plus the daily growth.

Day 0 (baseline): 100TB

Day 1 (baseline+changes): 101TB

Day 2 (baseline+changes): 102TB

Day 3 (baseline+changes): 103TB

Day 4 (baseline+changes): 104TB

Day 5 (baseline+changes): 105TB

Total, if no compression/deduplication: 615TB

This 615TB total is data under management.

Now, if we looked at our actual, post-compression/post-dedupe number from before (52.5TB), we can figure out the deduplication impact:

615/52.5 = 11.714x

Looking at this over a 30-day period, you can see how the dedupe ratios can get really aggressive.

For example: 100TB x 30 days = 3,000TB + (1TB x 30 days) = 3,030TB

3,030TB/65TB (actual data stored) = 46.62x dedupe ratio

In summary:

100TB, 1% change rate, 1 week:

Full backup + daily incremental backups = 52.5TB stored, and a 2x DRR

Full daily backups = 52.5TB stored, and an 11.7x DRR

That is how PBBA deduplication ratios really work—it’s a fictional function of “what if dedupe didn’t exist, but you stored everything on the disk anyway” scenarios. They’re a math exercise, not a reality exercise.

Front-end data size, daily change rate, and retention are the biggest variables to look at when sizing or understanding the expected data footprint and the related data reduction/deduplication impact.

In our scenario, we’re looking at one particular data set. Most companies will have multiple data types, and there can be even greater redundancy when accounting for full backups across those as well. So while it matters, consider that a bonus.

White Paper, 7 pages

Learn What’s Helping CISOs Sleep Better at Night

And how you can too.

Dynamic Storage

Learn more about our high-performance, all-flash storage systems.

![]()