vSphere 6.7 core storage “what’s new” series:

- What’s New in Core Storage in vSphere 6.7 Part I: In-Guest UNMAP and Snapshots

- What’s New in Core Storage in vSphere 6.7 Part II: Sector Size and VMFS-6

- What’s New in Core Storage in vSphere 6.7 Part III: Increased Storage Limits

- What’s New in Core Storage in vSphere 6.7 Part IV: NVMe Controller In-Guest UNMAP Support

- More to come…

Another feature added in vSphere 6.7 is the ability for a guest to issue UNMAP to a virtual disk that is presented through the virtual NVMe controller.

The NVMe controller doesn’t use traditional UNMAP. It isn’t SCSI, so it uses NVMe commands instead. If you want to learn more about NVMe, this is my favorite post that explains it:

In the first release of this controller, VMware only supported the basic mandatory admin and I/O command sets. UNMAP is referred to as DEALLOCATE in NVMe (see the SCSI translation reference here and a deep dive here and the full spec here), and it is part of the Dataset Management command. VMware did not support Dataset Management in that first release, so DEALLOCATE was not available to the guest.
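
To make DEALLOCATE a little more concrete, here is a minimal sketch of what a Dataset Management deallocate request carries, based on my reading of the NVMe spec: opcode 0x09, the deallocate attribute in bit 2 of command dword 11, and a list of 16-byte range entries. The helper names (`dsm_range`, `deallocate_dwords`) and the LBA values are made up for illustration; this only packs bytes and does not talk to a device.

```python
import struct
from typing import Tuple

DSM_OPCODE = 0x09             # NVM command set: Dataset Management
DSM_ATTR_DEALLOCATE = 1 << 2  # "AD" bit in command dword 11


def dsm_range(start_lba: int, num_blocks: int, context_attrs: int = 0) -> bytes:
    """Pack one 16-byte range entry: attributes, length in blocks, starting LBA."""
    return struct.pack("<IIQ", context_attrs, num_blocks, start_lba)


def deallocate_dwords(num_ranges: int) -> Tuple[int, int]:
    """Return (CDW10, CDW11) for a deallocate request.

    CDW10 holds the number of ranges as a zero-based value.
    """
    if not 1 <= num_ranges <= 256:
        raise ValueError("one command carries between 1 and 256 ranges")
    return num_ranges - 1, DSM_ATTR_DEALLOCATE


# Hypothetical example: free 8192 blocks starting at LBA 2048.
payload = dsm_range(start_lba=2048, num_blocks=8192)
cdw10, cdw11 = deallocate_dwords(1)
print(len(payload), hex(DSM_OPCODE), cdw10, hex(cdw11))
```

The guest OS builds these requests itself when files are deleted; the point is only that the virtual controller has to accept this command before any space can be reclaimed.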

Let’s walk through this.

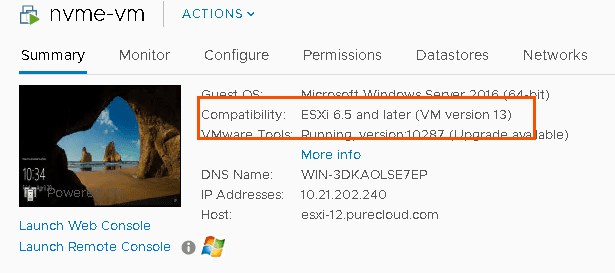

I have a Windows 2016 Server virtual machine:

You will notice that my VM is currently hardware version 13. This is not the latest version, which is 14, but let’s not upgrade yet.

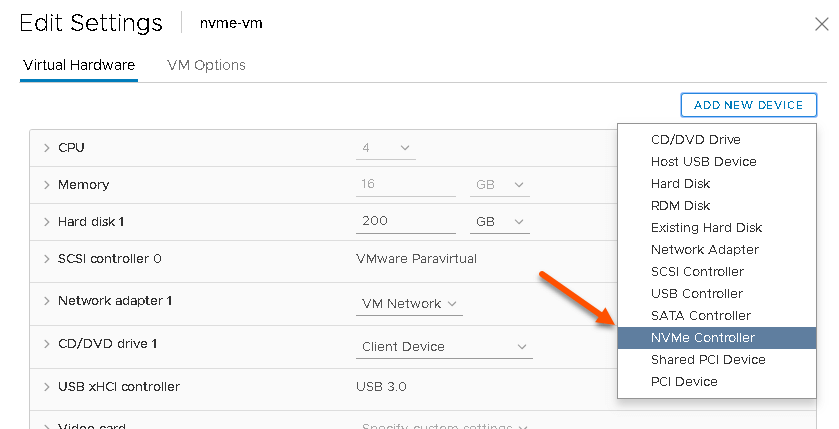

First, I will add an NVMe controller:

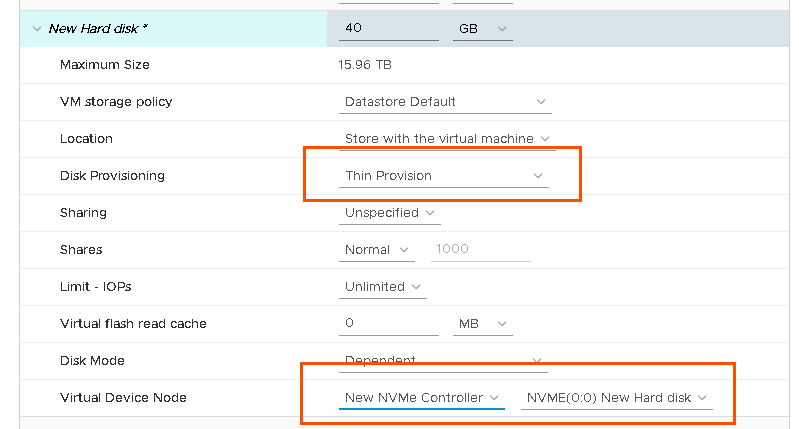

Then I will add a new virtual disk. It must be:

- Thin

- Added to the NVMe adapter

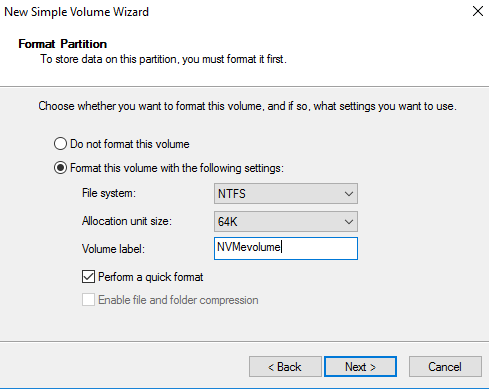

I will now format it with NTFS:

I will use the Windows-based tool SCSI Explorer to look at the device inquiry information. As you can see, in the LBPV (Logical Block Provisioning VPD) page, UNMAP support is listed as 0, which means it is not supported:

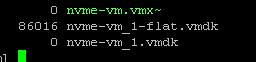
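
If you want to check that flag without a GUI tool, the decoding is simple. This is a sketch that assumes the standard SCSI layout of the Logical Block Provisioning VPD page (page code B2h), where UNMAP support is the LBPU bit, bit 7 of byte 5. I am assuming that is the field SCSI Explorer reports. The function name and the sample byte strings are made up for illustration and were not captured from this VM.

```python
def unmap_supported(vpd_page: bytes) -> bool:
    """Return True if the LBPU bit is set in a Logical Block Provisioning VPD page."""
    if len(vpd_page) < 6:
        raise ValueError("page is too short to contain the LBPU byte")
    if vpd_page[1] != 0xB2:
        raise ValueError("not the Logical Block Provisioning page (B2h)")
    # Byte 5, bit 7 is LBPU: the device accepts the UNMAP command.
    return bool(vpd_page[5] & 0x80)


# Made-up sample pages: same header, only byte 5 differs.
page_without_unmap = bytes([0x00, 0xB2, 0x00, 0x04, 0x00, 0x00, 0x00, 0x00])
page_with_unmap = bytes([0x00, 0xB2, 0x00, 0x04, 0x00, 0x80, 0x00, 0x00])

print(unmap_supported(page_without_unmap))
print(unmap_supported(page_with_unmap))
```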

Furthermore, if you use the Optimize Drives tool, which allows you to manually run UNMAP/TRIM/DEALLOCATE in Windows, the drive is listed as no optimization available.

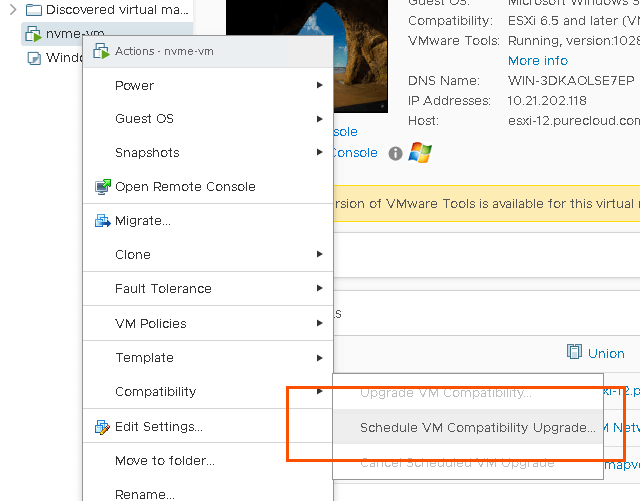

Now, let’s upgrade to VM hardware version 14.

Now we are upgraded:

Note that this is a VM hardware version upgrade. It does not require upgrading the VMware Tools software.

When back in Windows, we can see UNMAP support is now listed as 1, meaning it is supported.

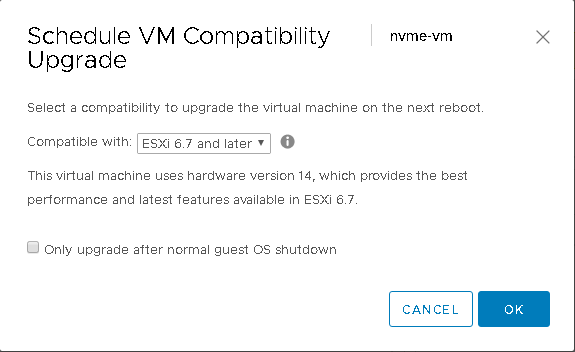

Optimize Drives also reports that it works:

So let’s actually test it.

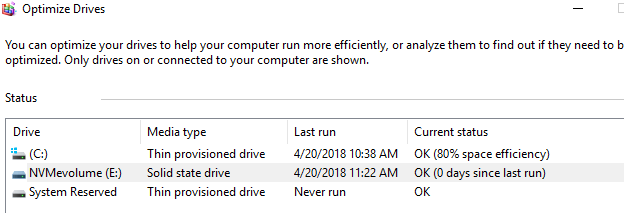

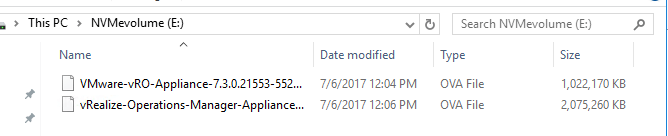

My virtual disk is currently 80 MB in size:

Now I will put some data on it:

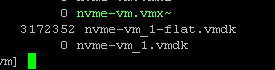

And my VMDK is now 3 GB:

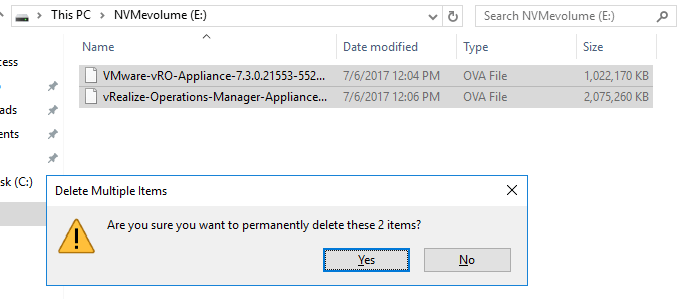

Now to delete the files from the volume:

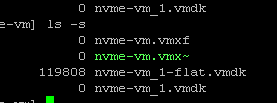

The VMDK shrinks accordingly about 10 seconds later, down to 120 MB, which is close to its original size:

So it works!
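
For a rough sense of how much came back, here is the arithmetic on the numbers above. It assumes the 3 GB figure is a round binary 3072 MB, which is only an approximation of what the datastore browser showed; the function name is just for illustration.

```python
MB_PER_GB = 1024


def reclaimed(before_mb: int, after_mb: int):
    """Return (MB freed, percentage of the pre-delete size that was freed)."""
    freed = before_mb - after_mb
    return freed, 100 * freed / before_mb


freed_mb, pct = reclaimed(3 * MB_PER_GB, 120)
print(f"{freed_mb} MB reclaimed ({pct:.1f}% of the VMDK)")
```

The remaining 120 MB versus the starting 80 MB is likely file system metadata and blocks that simply were not handed back.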

Conclusion

So what are the final requirements?

In my testing, Windows 2012 R2 and 2016 both work. I have not tested Linux yet, so I will try it out and report back. But to confirm:

- ESXi 6.7

- VM hardware 14

- Thin virtual disk
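
If you want to sanity-check a batch of VMs against that list, the logic is easy to express. This is a minimal sketch with a made-up `DiskConfig` record; how you populate it (PowerCLI export, an inventory API, a spreadsheet) is up to you and is not shown here. It also checks that the disk sits on the NVMe controller, since that is the path tested in this post.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DiskConfig:
    """Hypothetical record describing one virtual disk and its VM."""
    esxi_version: Tuple[int, int]  # e.g. (6, 7)
    hw_version: int                # VM hardware version, e.g. 14
    controller: str                # "nvme", "pvscsi", ...
    thin: bool                     # True if the virtual disk is thin


def nvme_unmap_blockers(cfg: DiskConfig) -> List[str]:
    """Return the unmet requirements; an empty list means all are satisfied."""
    problems = []
    if cfg.esxi_version < (6, 7):
        problems.append("host must run ESXi 6.7 or later")
    if cfg.hw_version < 14:
        problems.append("VM hardware version must be 14 or later")
    if cfg.controller != "nvme":
        problems.append("disk is not attached to the NVMe controller")
    if not cfg.thin:
        problems.append("virtual disk must be thin")
    return problems


# The VM from this post before the hardware upgrade.
before_upgrade = DiskConfig((6, 7), 13, "nvme", True)
print(nvme_unmap_blockers(before_upgrade))
```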

So what does this mean? Should you start using this instead of PVSCSI? Maybe. There are potential performance benefits, but VMware does warn that it might not help for every workload. This doc says:

“Because the vNVMe virtual storage adapter has been designed for extreme low latency flash and non-volatile memory based storage, it isn’t best suited for highly parallel I/O workloads and slow disk storage. For workloads that primarily have low outstanding I/O, especially latency-sensitive workloads, vNVMe will typically perform quite well.”

This seems a bit strange to me, but I need to look into this more. The introduction of reclamation support opens it up to be a contender over PVSCSI, but I am not quite willing to give it the champion title until I know more and do more testing.

In short though, keep it on your radar. VMware introduced a lot more NVMe support into 6.7 and my guess is this is just the start. So I imagine this controller will only continue to become more important moving forward. Stay tuned for more.