In ESXi 6.0 and earlier, a total of 256 devices and 1,024 logical paths to those devices were supported. While this may seem like a tremendous number of devices to some, there were plenty who hit the limit. In vSphere 6.5, ESXi was enhanced to support double both of those numbers, moving to 512 devices and 2,048 logical paths.

In vSphere 6.7, those numbers have doubled again to 1,024 devices and 4,096 logical paths. See the limits here:

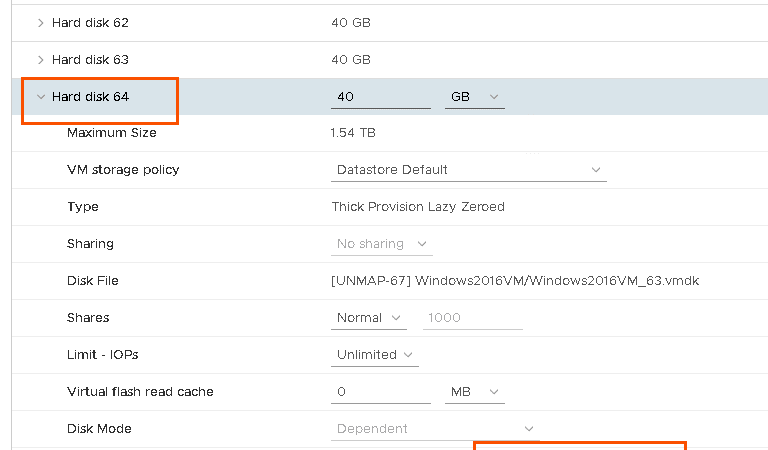

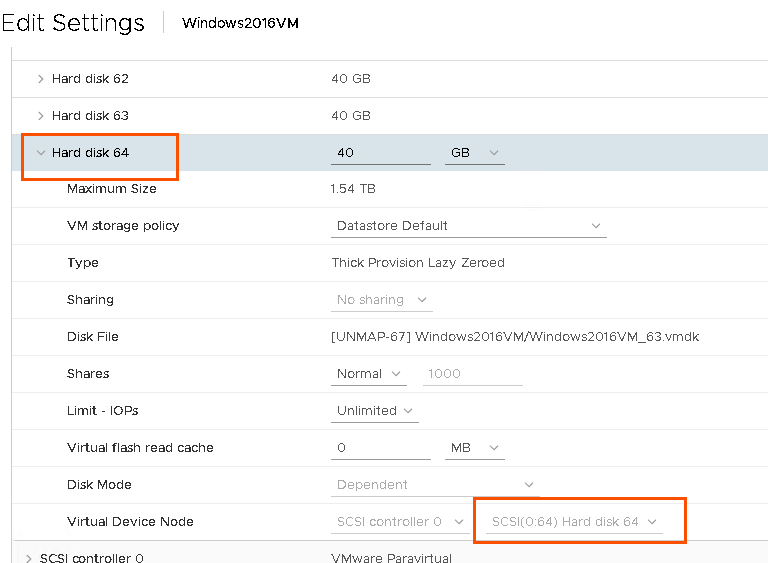
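One thing worth noticing is that the ratio between the two limits has not changed: at the maximum device count, every release works out to an average of four logical paths per device. Here is a minimal sketch of that arithmetic in Python, using only the numbers quoted above. The `LIMITS` table and both function names are mine, made up for illustration; this is not a VMware API.

```python
# Host limits as quoted in this post: (max devices, max logical paths).
# "6.0" stands in for "6.0 and earlier".
LIMITS = {
    "6.0": (256, 1024),
    "6.5": (512, 2048),
    "6.7": (1024, 4096),
}

def paths_per_device_at_max(version):
    """Average logical paths per device if the host is at its device limit."""
    devices, paths = LIMITS[version]
    return paths / devices

def fits(version, device_count, paths_per_device):
    """Check a planned layout against both the device and the path limit."""
    max_devices, max_paths = LIMITS[version]
    total_paths = device_count * paths_per_device
    return device_count <= max_devices and total_paths <= max_paths

for version in LIMITS:
    print(version, paths_per_device_at_max(version))
```

So if you run eight paths per device, for example, `fits("6.7", 1024, 8)` comes back false: you would run out of paths at 512 devices, well before you hit the device limit.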

That is all pretty straightforward. Another limit that has been raised is the number of virtual disks per VM. For as long as I can remember, a VM could have up to 4 vSCSI adapters and each could have up to 15 virtual disks, for a total of 60 virtual disks per VM. Each adapter had 16 slots (0:0 through 0:15), but slot 7 was reserved, so only 15 of them were usable by virtual disks.

In vSphere 6.7 the limit has been raised to 64 virtual disks per adapter. There are some requirements though:

- ESXi 6.7

- Virtual Machine HW version 14

- Paravirtual SCSI (PVSCSI) adapter

So, in other words, this is not supported with the LSI adapters, the NVMe adapter or others.

Note that slot 7 is still reserved; that does not change. But the rest are free: 0:0 through 0:6 and then 0:8 through 0:64.
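To make the slot math concrete, here is a small Python sketch that lists the usable slots on one adapter, both under the old 15-disk limit and the new 64-disk PVSCSI limit. The function and constant names are my own, for illustration only. The per-VM totals assume the limit of four vSCSI adapters per VM still applies.

```python
RESERVED_UNIT = 7   # slot 7 is reserved on every adapter
MAX_ADAPTERS = 4    # assumption: still four vSCSI adapters per VM

def usable_slots(bus, disks_per_adapter):
    """Return the 'bus:unit' slots available to virtual disks on one adapter."""
    # The reserved slot is skipped, so the unit numbers run one higher
    # than the disk count (0..15 for 15 disks, 0..64 for 64 disks).
    return [
        f"{bus}:{unit}"
        for unit in range(disks_per_adapter + 1)
        if unit != RESERVED_UNIT
    ]

legacy = usable_slots(0, 15)      # 0:0 through 0:6, then 0:8 through 0:15
pvscsi_67 = usable_slots(0, 64)   # 0:0 through 0:6, then 0:8 through 0:64

print(len(legacy) * MAX_ADAPTERS)      # 60 virtual disks per VM
print(len(pvscsi_67) * MAX_ADAPTERS)   # 256 virtual disks per VM
```

With four PVSCSI adapters, that works out to 256 virtual disks per VM, up from 60.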

So should you consolidate your virtual disks onto one adapter if you have them spread out over more than one? Well, I guess that's up to you. There isn't really any technical reason to do so. As far as I can tell, the maximum queue depth limit of the PVSCSI adapter has not changed (it is still at 256 per disk and 1,024 for the whole adapter), so all disks on an adapter share that one adapter-wide queue. If you have them separated out for performance reasons, then I would keep it that way. Though we are talking about a pretty serious workload to eat all of that up.

If you have virtual disks spread out only because you ran out of slots, then consolidating them onto one adapter would be okay.