This is the first post in a three-post series on VM performance on Flash storage, where I explore various provisioning scenarios for VMs on Flash. This first post discusses the impact of VM/array block alignment, the second will compare the performance of various VM types, and the third will explore the performance impact of different LUN and datastore sizes. Enjoy!

As most VM admins have learned from painful experience, ensuring proper block alignment between your VMs, vmfs, and disk array is critical to getting the best possible virtual machine performance. The issue has been well documented by virtualization and storage bloggers; you may have already read about it at Yellow Bricks, Virtual Geek, or Virtual Storage Guy. VM misalignment is not a virtualization problem or a specific storage vendor's problem per se: all vendors acknowledge it and publish best practices for aligning virtual machine disks with storage LUNs, and there are even third-party tools to detect and correct misalignment. Alignment is therefore a mandatory extra step in VM provisioning, because a misaligned volume leads to noticeable performance degradation (50%+ for large I/O sizes) and higher storage capacity utilization.

In this post we will examine this problem and explain why disk alignment is a non-issue on the Pure Storage FlashArray. That’s right, Pure Storage allows you to haphazardly and recklessly pick any VM alignment you want; they all perform the same. Read on to understand why.

VM–to–Storage Block Alignment

VM/disk misalignment occurs when a file system block does not start on a storage array block boundary. A single file system block read or write from the VM can then straddle a boundary and require accessing two blocks on the array instead of one. Those unnecessary extra reads and writes lengthen response times and make queries take longer.

This is a common problem in both virtualized and non-virtualized environments. Virtualized environments add a second file system layer that must be aligned in addition to the guest file system. In VMware vSphere, for example, the vmfs volume (or datastore) has to be correctly aligned to both the storage array blocks and the guest file system. Provisioning datastores with the VMware vSphere Client or vCenter takes care of vmfs alignment, but guest OS alignment remains an issue. Note that Windows Server 2008 and Windows 7 guests are aligned by default (the default partition offset is 1024KB), whereas Windows Server 2003 and Windows XP create MBR partitions at an unaligned offset of 32,256 bytes (63 sectors).
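As a quick illustration, the short Python sketch below checks whether a partition's starting offset falls on a given boundary. The helper `is_aligned` and the 4 KB and 64 KB boundaries are hypothetical choices for illustration, not the chunk sizes of any particular array.

```python
SECTOR = 512

# Partition starting offsets in bytes.
WIN2003_MBR_OFFSET = 63 * SECTOR        # 32,256 bytes (63 sectors)
WIN2008_DEFAULT_OFFSET = 1024 * 1024    # 1024KB


def is_aligned(offset_bytes: int, boundary_bytes: int) -> bool:
    """Return True if a partition starting at offset_bytes begins on a boundary_bytes boundary."""
    return offset_bytes % boundary_bytes == 0


if __name__ == "__main__":
    # Hypothetical array chunk sizes to check against: sector, 4 KB, 64 KB.
    for boundary in (SECTOR, 4 * 1024, 64 * 1024):
        print(
            f"boundary={boundary:>6}  "
            f"2003 offset aligned={is_aligned(WIN2003_MBR_OFFSET, boundary)}  "
            f"2008 offset aligned={is_aligned(WIN2008_DEFAULT_OFFSET, boundary)}"
        )
```

The 63-sector offset is a multiple of 512 bytes but not of 4 KB or 64 KB, so it lines up with sectors but not with larger array chunks; the 1024KB default lines up with all three.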

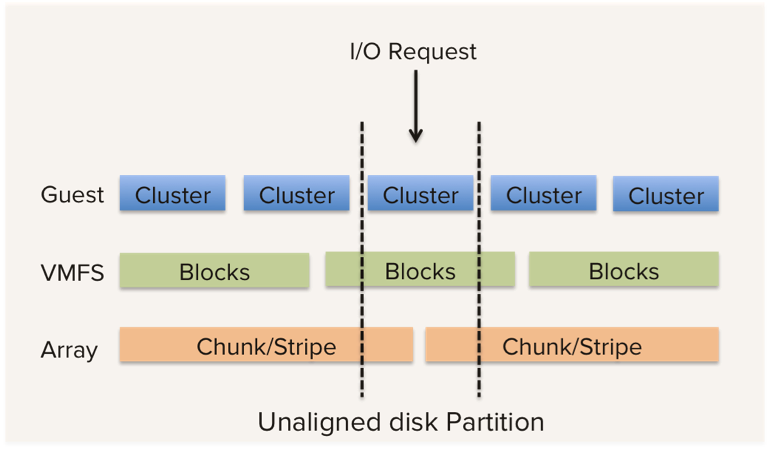

Figure 1: Unaligned partitions resulting in accessing multiple chunks/blocks on the storage array for a single I/O request

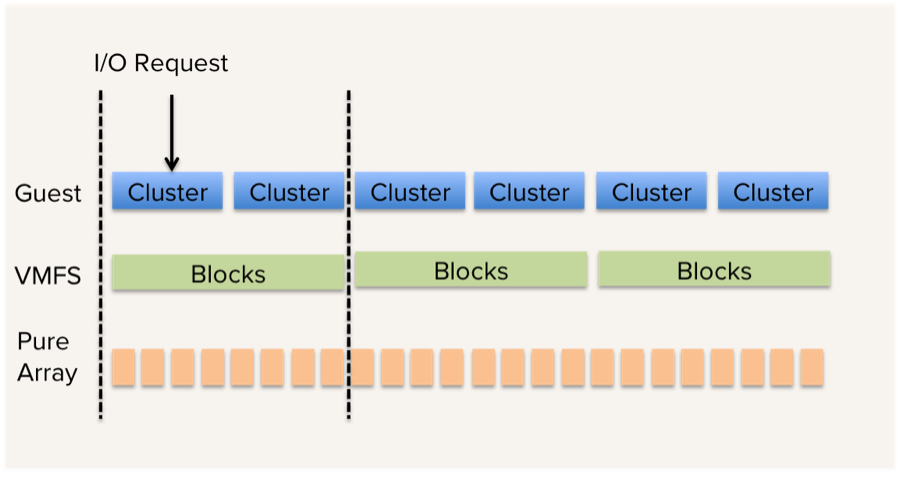

Figure 2: Aligned partitions resulting in accessing single chunk/block on the storage array

Figures 1 and 2 above illustrate the need for alignment. On an unaligned partition (Figure 1), a single read spans two array chunks, so I/Os from such a guest generate extra back-end I/Os. In a disk-aligned guest (Figure 2), a single block read results in one vmfs block read and only one array block read.
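The arithmetic behind Figures 1 and 2 can be sketched in a few lines of Python. This is a simplified model, assuming a fixed array chunk size (4 KB here, a hypothetical value) and counting only how many chunks a single guest I/O spans; `chunks_touched` is an illustrative helper, not a vendor tool.

```python
def chunks_touched(partition_offset: int, io_offset: int, io_size: int, chunk_size: int) -> int:
    """Count the array chunks spanned by one guest I/O.

    partition_offset: byte offset where the guest partition starts on the LUN
    io_offset: byte offset of the I/O within the partition
    io_size: length of the I/O in bytes (must be positive)
    chunk_size: array chunk size in bytes
    """
    first_byte = partition_offset + io_offset
    last_byte = first_byte + io_size - 1
    return last_byte // chunk_size - first_byte // chunk_size + 1


if __name__ == "__main__":
    CHUNK = 4 * 1024  # hypothetical array chunk size
    IO = 4 * 1024     # one 4 KB guest file system block
    for label, offset in (("unaligned (63 sectors)", 63 * 512), ("aligned (1024KB)", 1024 * 1024)):
        print(f"{label}: {chunks_touched(offset, 0, IO, CHUNK)} chunk(s) per 4 KB read")
```

In this model every 4 KB block of the 63-sector partition straddles two 4 KB chunks, which is the doubled I/O shown in Figure 1.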

The problem is exacerbated when a customer performs a physical-to-virtual (P2V) conversion of an existing application server and ends up with misaligned volumes. Fixing this means recreating the data with the proper alignment, which is painful enough that it is usually not done.

VM Alignment on the Pure Storage FlashArray

As stated earlier, none of these alignment issues matter on the Pure Storage FlashArray: you can deploy VMs with any block geometry. Three key architectural features make this possible:

1. 512-byte Virtualization Geometry

The FlashArray is internally virtualized down to a 512-byte chunk size, significantly smaller than that of most traditional disk arrays, which typically virtualize at chunk sizes ranging from several KB to several MB. Host geometries are always a multiple of 512 bytes, so any sector-aligned host I/O maps exactly onto a whole number of 512-byte chunks on the FlashArray. The FlashArray therefore never reads extra blocks because of misalignment.

Figure 3: I/O request from guest always aligned regardless of the guest alignment

All VM I/Os are sector-aligned (on a 512-byte boundary), whether the partition starts at 32,256 bytes, as with Windows 2003 MBR-formatted NTFS volumes, or at any other offset. This effectively eliminates all alignment requirements at the guest and virtualization layers.
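The claim that 512-byte chunks make any sector-aligned geometry behave as if it were aligned can be checked exhaustively with a small Python model. It assumes every host I/O starts on a 512-byte boundary and is a whole number of sectors long; the 4 KB and 64 KB chunk sizes stand in for a hypothetical traditional array, and the helper names are illustrative.

```python
SECTOR = 512


def chunks_touched(lun_offset: int, io_size: int, chunk_size: int) -> int:
    """Number of chunk_size chunks covered by an I/O of io_size bytes starting at lun_offset."""
    first = lun_offset // chunk_size
    last = (lun_offset + io_size - 1) // chunk_size
    return last - first + 1


def extra_chunks(lun_offset: int, io_size: int, chunk_size: int) -> int:
    """Chunks touched beyond the minimum needed to hold io_size bytes."""
    minimum = -(-io_size // chunk_size)  # ceiling division
    return chunks_touched(lun_offset, io_size, chunk_size) - minimum


def worst_case_extra(chunk_size: int, max_offset: int, io_sizes) -> int:
    """Largest overhead across every sector-aligned starting offset below max_offset."""
    return max(
        extra_chunks(offset, size, chunk_size)
        for offset in range(0, max_offset, SECTOR)
        for size in io_sizes
    )


if __name__ == "__main__":
    io_sizes = [4096 * 2**k for k in range(6)]  # 4 KB .. 128 KB
    for chunk in (SECTOR, 4 * 1024, 64 * 1024):
        print(f"chunk={chunk:>6} bytes  worst-case extra chunks per I/O="
              f"{worst_case_extra(chunk, 1024 * 1024, io_sizes)}")
```

In this model the 512-byte chunk size never adds an extra chunk for any offset or size tried, while the coarser chunk sizes can add one extra chunk per I/O.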

2. No Performance Contention Between Chunks

In traditional disk arrays, misalignment not only can cause additional chunk reads, but those reads can also interfere with one another from a performance perspective; in a striped RAID group, for example, adjacent chunks usually sit on different disks, so a single misaligned I/O can tie up two spindles. The Pure Storage FlashArray is a purpose-built all-flash storage array that is not constrained by the seek and rotational latencies of spinning hard drives. Any block on an SSD can be read or written in essentially the same time, regardless of where it resides. So the FlashArray not only minimizes the “additional chunks” required by any read, it also reads those chunks without them interfering with one another.

3. Perfect Write Allocation

The FlashArray has an additional advantage on the write side. In traditional disk arrays, host I/O blocks map directly to array chunks, so an unaligned write has the same problem: it can cross a chunk boundary and double the cost of the write. In the Pure Storage FlashArray, all writes are virtualized and packed into write segments, where they are stored in exactly the amount of space they require, independent of alignment. As a result, changing the host alignment does not increase the write workload on the FlashArray.
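The contrast between direct-mapped chunks and packed write segments can be shown with a deliberately simplified toy model in Python. This is not Pure Storage's implementation: the `WriteSegment` class, its index, and the 4 KB chunk size of the direct-mapped array are hypothetical and exist only to show that appending writes consumes the same space wherever the host write lands.

```python
from dataclasses import dataclass, field

CHUNK = 4 * 1024  # hypothetical fixed chunk size of a direct-mapped array


def direct_mapped_chunks_written(lun_offset: int, size: int, chunk: int = CHUNK) -> int:
    """Chunks a direct-mapped array must update for one host write."""
    return (lun_offset + size - 1) // chunk - lun_offset // chunk + 1


@dataclass
class WriteSegment:
    """Toy log-structured segment (hypothetical): writes are appended back to back."""
    data: bytearray = field(default_factory=bytearray)
    index: dict = field(default_factory=dict)  # lun_offset -> (position in segment, length)

    def append(self, lun_offset: int, payload: bytes) -> None:
        # Record where this host write landed in the segment, then append it as-is.
        self.index[lun_offset] = (len(self.data), len(payload))
        self.data.extend(payload)


if __name__ == "__main__":
    payload = b"x" * 4096
    for lun_offset in (1024 * 1024, 63 * 512):  # aligned vs. 63-sector offset
        seg = WriteSegment()
        seg.append(lun_offset, payload)
        print(f"offset={lun_offset}: direct-mapped chunks="
              f"{direct_mapped_chunks_written(lun_offset, len(payload))}, "
              f"segment bytes used={len(seg.data)}")
```

In this toy, a 4 KB write at the 63-sector offset updates two chunks on the direct-mapped array but still consumes exactly 4,096 bytes of the segment, the same as an aligned write.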

Because of these fundamental differences in how we have architected our storage array, the FlashArray incurs no penalty from misaligned block access. In short, data alignment is fundamentally a non-issue on the Pure Storage FlashArray.

Proving It in the Lab and with Customers

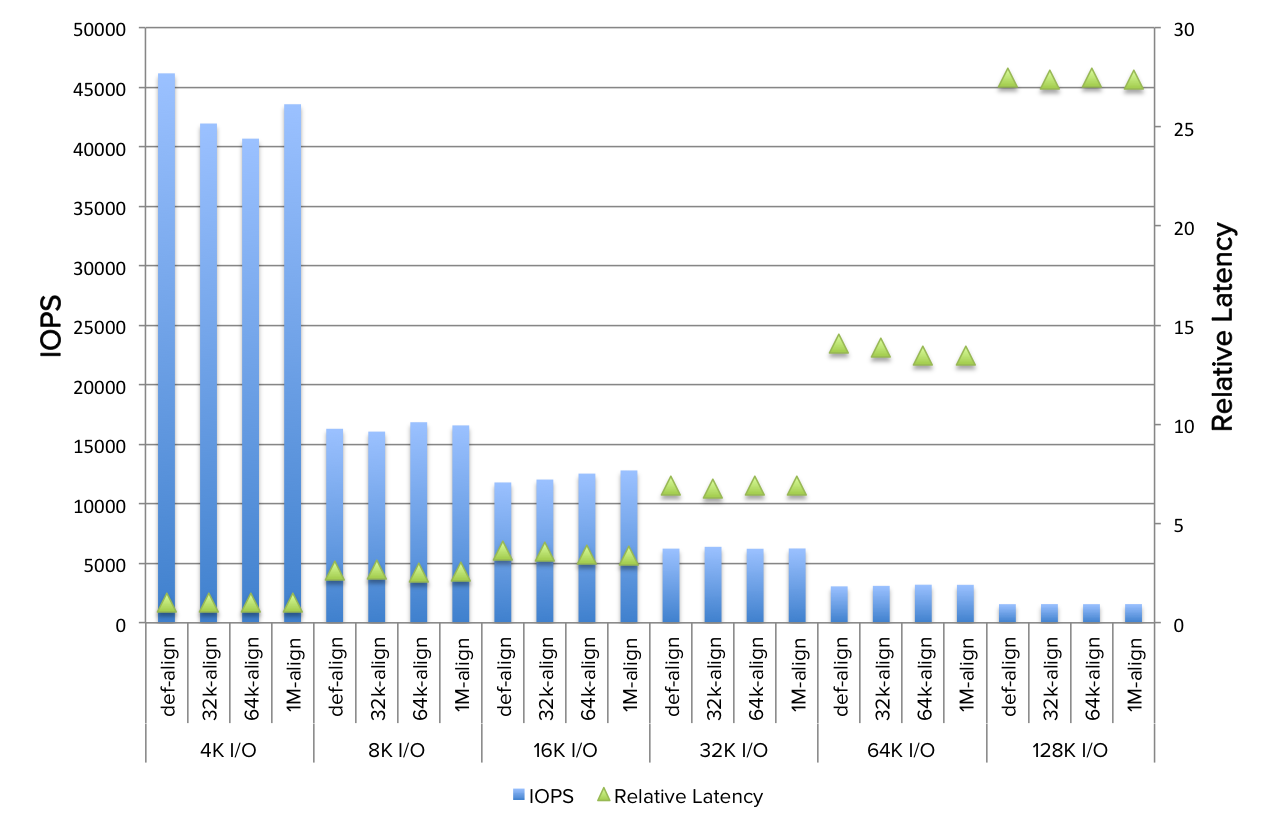

These claims have been validated extensively, both in the Pure Storage labs and in customer environments. In one such lab validation test, summarized below, we created NTFS partitions with different offsets on Windows 2003 and Windows 2008 VMs and ran IOmeter with different block sizes.

Test Overview:

- Windows 2003 Enterprise (x64) R2 SP2 VM

- 4 vCPUs, 8 GB of memory, 4 virtual disks exposed via a paravirtual driver

- IOmeter with 4 workers, each worker mapped to one volume

- IOmeter I/O sizes varied from 4K to 128K

- NTFS volumes manually aligned at the default (32,256 bytes), 32K, 64K, and 1024K
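The test matrix above can also be run through a simple chunk-overlap model to see which combinations would be penalized on an array with a coarser chunk size. This sketch computes arithmetic only, not measured IOPS or latency; it assumes the 4K to 128K sizes were powers of two, I/Os laid end to end within the first 1 MB of each partition, and a hypothetical 64 KB chunk for comparison. The helper names are illustrative.

```python
SECTOR = 512

# Partition offsets from the test overview (bytes).
TEST_OFFSETS = {"default (32,256 bytes)": 32256, "32K": 32 * 1024, "64K": 64 * 1024, "1024K": 1024 * 1024}
# Assumption: I/O sizes stepped in powers of two from 4 KB to 128 KB.
TEST_IO_SIZES = [4096 * 2**k for k in range(6)]


def extra_chunks(lun_offset: int, io_size: int, chunk_size: int) -> int:
    """Chunks touched beyond the minimum needed to hold io_size bytes."""
    touched = (lun_offset + io_size - 1) // chunk_size - lun_offset // chunk_size + 1
    minimum = -(-io_size // chunk_size)  # ceiling division
    return touched - minimum


def crossing_fraction(partition_offset: int, io_size: int, chunk_size: int, span: int = 1024 * 1024) -> float:
    """Fraction of io_size-aligned I/Os in the first `span` bytes of the partition that touch an extra chunk."""
    io_offsets = range(0, span, io_size)
    penalized = sum(1 for o in io_offsets if extra_chunks(partition_offset + o, io_size, chunk_size) > 0)
    return penalized / len(io_offsets)


if __name__ == "__main__":
    for chunk in (SECTOR, 64 * 1024):  # 512-byte chunks vs. a hypothetical 64 KB chunk
        print(f"chunk size {chunk} bytes (columns: 4K 8K 16K 32K 64K 128K)")
        for label, offset in TEST_OFFSETS.items():
            fractions = [crossing_fraction(offset, size, chunk) for size in TEST_IO_SIZES]
            print(f"  {label:>22}: " + " ".join(f"{f:.3f}" for f in fractions))
```

In this model the 512-byte chunk size yields 0.000 for every combination, whereas the hypothetical 64 KB chunk penalizes some I/O sizes at the 32,256-byte and 32K offsets. The model only counts extra chunk accesses; it makes no prediction about measured performance.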

Figure 4 below shows IOPS and latency across the test runs, varying the alignment and I/O size as summarized above. For the same workload, IOPS and latency are essentially unchanged across the four volumes.

Figure 4: IOPS and relative latency graph from IOmeter, run on four different volumes of varied host block alignment

The results confirm our argument that partition alignment, or misalignment, has no effect on either latency or IOPS on our system.

We also ran the validation on a Windows Server 2008 R2 SP1 VM, running IOmeter (4 workers, each mapped to one volume) with different I/O sizes and read/write mixes on NTFS volumes manually aligned at 32K, 64K, 128K, and 1024K, and saw similar results.

Conclusion

Data alignment is not an issue on the Pure Storage FlashArray. In our opinion, this has major implications for VM I/O performance, Storage vMotion, rapid provisioning of virtual machines from templates, and physical-to-virtual (P2V) conversions. For customers, it is not only one less thing to worry about during provisioning; it can also deliver a substantial performance improvement when moving to the Pure Storage FlashArray.

