Organizations with critical applications, databases, virtual environments, and data analytics are always looking for ways to improve performance. That includes speeding access, reducing latency, and saving power.

Purity 6.1 for FlashArray™ added support for NVMe over Fibre Channel (FC-NVMe) networks, complementing the existing support for NVMe over RoCE. If you’re using either storage network, you can take advantage of the higher-performance NVMe protocol to modernize your infrastructure, consolidate applications, and lower compute and storage requirements.

To quantify the performance benefit of FC-NVMe, Pure worked with networking solutions provider Brocade to test two commonly used databases: Oracle and SQL Server. We compared FC-NVMe to FC-SCSI. For load testing, we used HammerDB, the leading benchmarking and load-testing tool for databases. For CPU utilization, we used the Linux sar command to compare the processing overhead of SCSI with that of NVMe.
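As a rough illustration of how the sar measurement could be collected programmatically, the sketch below pulls one averaged column out of `sar -u` text output. It is not the tooling used in these tests. The function name `average_cpu_column` and the sample report are made up for this example, and the column label is an assumption: depending on the sar options used, the system-time column may be printed as `%system` or `%sys`.

```python
# Hypothetical sample of `sar -u` output; the values are invented, not test results.
SAMPLE_SAR_OUTPUT = """\
12:00:01 AM     CPU     %user     %nice   %system   %iowait    %steal     %idle
12:10:01 AM     all      0.50      0.00      0.20      0.01      0.00     99.29
Average:        all      0.50      0.00      0.20      0.01      0.00     99.29
"""


def average_cpu_column(sar_text, column="%system"):
    """Return the value on the 'Average:' row for one column of sar CPU output."""
    header = None
    for line in sar_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if column in tokens:
            # Remember the most recent header row that names the column.
            header = tokens
        elif tokens[0] == "Average:" and header is not None:
            # Align from the right-hand side: the timestamp on the header row
            # can be one token (24-hour clock) or two (with AM/PM), while the
            # 'Average:' row always starts with a single token.
            offset = len(header) - header.index(column)
            return float(tokens[len(tokens) - offset])
    raise ValueError(f"no 'Average:' row found for column {column!r}")


if __name__ == "__main__":
    print(average_cpu_column(SAMPLE_SAR_OUTPUT))
```

Aligning columns from the right keeps the parser independent of the clock format. Locales that print decimal commas would need extra handling that this sketch omits.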

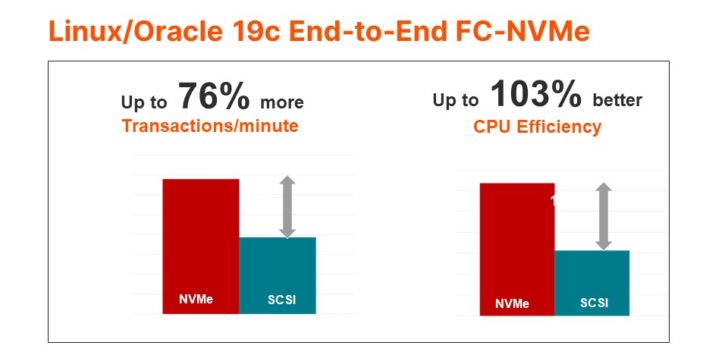

Test 1: Oracle Performance Benchmarks

We performed the first test with a single-node Oracle Database 19c instance on a server running Red Hat Enterprise Linux (RHEL) 8.3. We used HammerDB’s TPROC-C profile, for which the database performance metric is transactions per minute (TPM).

The purpose of our test was only to evaluate I/O performance. It didn’t determine database performance limits or make database comparisons.

We tested whether the Oracle database runs the TPROC-C test profile better when connected to SAN storage over classic FC-SCSI or over the FC-NVMe protocol on Pure Storage® FlashArray//X.

We used the test process described above and the configuration listed under Test Environment Details to find the maximum Oracle TPM while measuring server processor utilization with the server connected to the storage array using FC-NVMe. We then ran the test again using FC-SCSI, making no other configuration changes between the two runs.

The maximum Oracle TPM achieved using FC-NVMe was 76% greater than that achieved using FC-SCSI.

Oracle CPU efficiency was also better when using FC-NVMe: 103% better than with FC-SCSI. We used the Linux sar command to check CPU-related statistics. %sys is the percentage of processor time spent on operating system (kernel) tasks. To calculate CPU efficiency, we divided TPM by sar %sys.
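The efficiency metric above is simple enough to sketch in a few lines. The example below is a minimal illustration of the TPM / %sys calculation and of how a "percent better" figure follows from two such values. The function names are hypothetical, and the input numbers are placeholders chosen for easy arithmetic, not the measured results from these tests.

```python
def cpu_efficiency(tpm, sys_pct):
    """CPU efficiency as defined in the text: TPM divided by sar %sys."""
    if sys_pct <= 0:
        raise ValueError("sys_pct must be positive")
    return tpm / sys_pct


def percent_improvement(new, baseline):
    """Relative gain of `new` over `baseline`, expressed in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (new / baseline - 1.0) * 100.0


if __name__ == "__main__":
    # Placeholder inputs, NOT measured values from the tests described here.
    scsi = cpu_efficiency(tpm=100_000, sys_pct=10.0)  # 10,000 TPM per %sys point
    nvme = cpu_efficiency(tpm=150_000, sys_pct=12.0)  # 12,500 TPM per %sys point
    print(f"{percent_improvement(nvme, scsi):.1f}% better")  # prints "25.0% better"
```

Because efficiency is a ratio, the reported gains also constrain %sys itself. If both Oracle figures are read as relative gains over FC-SCSI, a 76% higher TPM combined with 103% higher efficiency implies a %sys ratio of about 1.76 / 2.03 ≈ 0.87, meaning the FC-NVMe run spent somewhat less time in the kernel while completing more transactions.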

These improvements on existing FC components and networks could provide significant CAPEX and OPEX savings, without ripping and replacing infrastructure. Also, reducing CPU core counts could lower Oracle license costs.

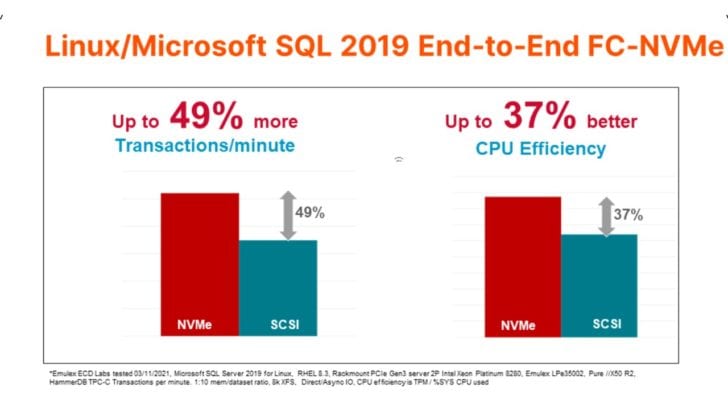

Test 2: SQL Server Performance Benchmarks

For the second test, we used Microsoft SQL Server 2019 on Linux, running on Red Hat Enterprise Linux (RHEL) 8.3. The server configuration, host bus adapter (HBA) parameters, and storage configuration were the same as those used for the Oracle test. The test processes used for SQL Server were somewhat different, however. As a result, Oracle and SQL Server results shouldn’t be compared directly, and such a comparison wasn’t the objective of this exercise.

We again used the HammerDB TPROC-C profile but with configuration parameters designed for Microsoft SQL Server.

Our goal was to see if the SQL Server database performs the TPROC-C test profile better when using classic FC-SCSI for connecting the SAN storage or when using the FC-NVMe protocol on FlashArray.

We achieved the maximum SQL Server TPM using FC-NVMe, which was 49% greater than the maximum TPM achieved using traditional FC-SCSI. We found that the SQL Server CPU efficiency was better using FC-NVMe: 37% better than using FC-SCSI.

These improvements on existing Fibre Channel components and networks could provide significant CAPEX and OPEX savings, without ripping and replacing infrastructure. Also, reducing CPU core counts could lower SQL Server license costs.

Test Environment Details

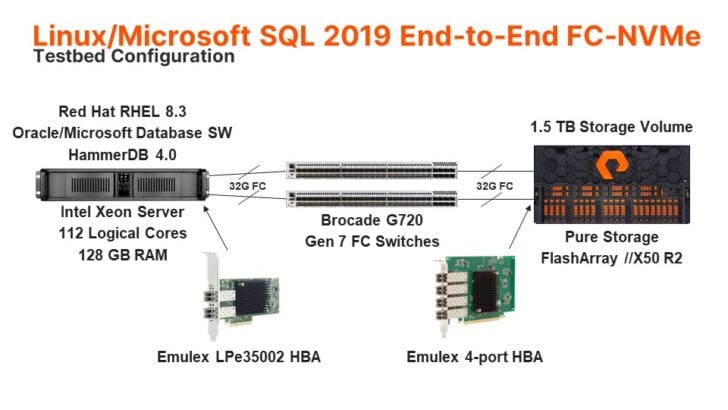

Our test environment had:

- A single database server, SAN-connected using the latest Emulex LPe35002 Gen7 HBA to a Brocade Gen7 G720 switch at 32G.

- A Pure FlashArray//X50 R2 array running Purity//FA 6.1.0, which was also connected to the SAN via 32G port connections. FlashArray//X50 R2 is a mid-range FlashArray and R2 is one generation old. Current-generation FlashArray//X70 and //X90 R3 will deliver higher-performance results.

- A storage volume provisioned on the array and mapped to the server. The volume was partitioned and mounted as a single XFS disk to be used as the storage volume for the database instance.

- A database scaled so that the data set is 10x the size of available server memory.

We’re Continuing to Listen to DBAs

Business-critical database application workloads continue to grow while IT budgets shrink. Pure and Brocade are working closely with DBAs to understand their changing mission-critical needs for 99.9999% uptime, business continuity, and global disaster recovery. When your applications run faster, users are more productive.

The combination of Pure FlashArray, Emulex Fibre Channel adapters, and Brocade Fibre Channel switches simplifies operations for DBAs, maximizes performance, and unlocks the full value of your data.

Put the “Me” in “NVMe”

Learn more about NVMe and how it can boost the performance of your critical enterprise applications.