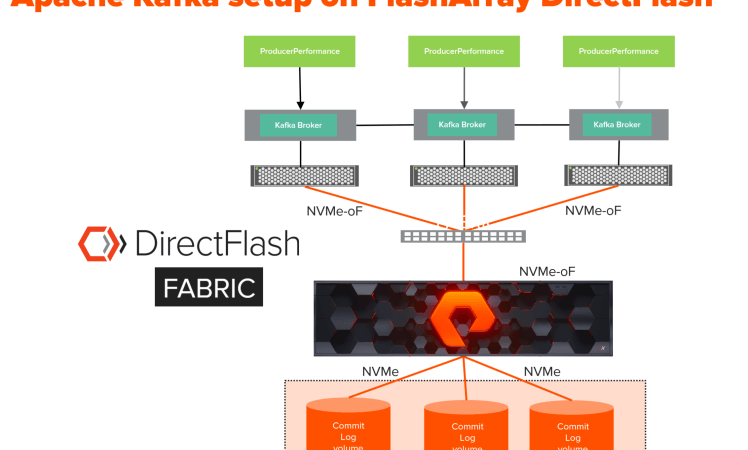

In this blog I use Apache Kafka to compare the performance of Pure Storage FlashArray//X with DirectFlash Fabric against a SAS SSD direct-attached storage (DAS) deployment. Apache Kafka is the epitome of fast, and it is commonly deployed on DAS. The best way to challenge the assumed performance advantage of typical DAS deployments is to show how an Apache Kafka cluster performs and scales on Pure Storage FlashArray//X with DirectFlash Fabric.

In February we announced DirectFlash™ Fabric, which uses the NVMe-oF protocol. Our initial NVMe-oF implementation runs over RoCE, which stands for RDMA over Converged Ethernet (RDMA = Remote Direct Memory Access). Our tests showed that FlashArray™//X with DirectFlash technology delivers better performance than SAS and SATA SSDs.

What is Apache Kafka? It is a distributed, highly scalable, fast messaging system used for streaming applications. Apache Kafka is built around a commit log: producers publish data to it, and any number of systems or real-time applications can subscribe to it.

To test the performance of Apache Kafka, I built two three-node clusters. One cluster was deployed on FlashArray//X with DirectFlash Fabric and the other on DAS SAS SSDs. Both clusters used identical compute nodes. Some of our competitors have tested Kafka with low-end, low-performance SAS disks, even without RAID or striping. In our testing we made sure that the DAS setup used SSDs configured for performance, with RAID 0 for striping and an optimal batch.size parameter in the Kafka producer, to deliver the best possible DAS I/O results.

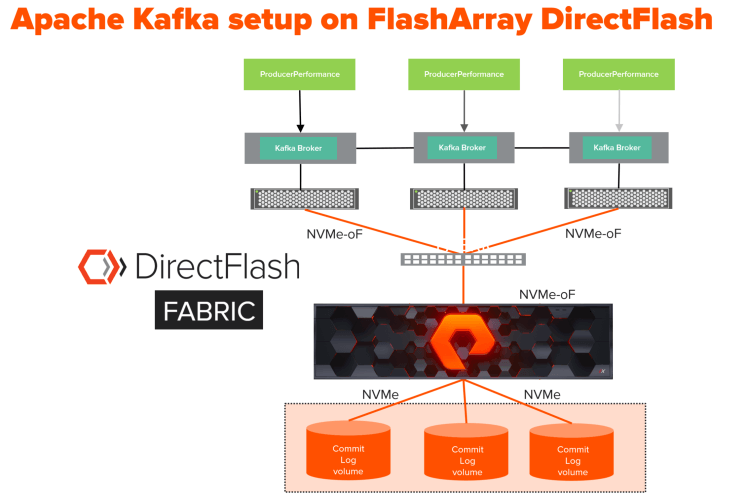

Configuration for FlashArray DirectFlash NVMe-oF based Apache Kafka cluster setup:

This cluster consists of three broker nodes. The commit logs (kafka-logs) were mounted on FlashArray//X volumes formatted with the XFS file system. FlashArray//X was configured with the NVMe-oF protocol for server (host) to FlashArray connectivity. Internally, FlashArray//X also provides NVMe connectivity between the disks and the host bus adapter (HBA), so the path is NVMe end to end: host to FlashArray to disk.

Here are the specifications of each host (x86) for the Apache Kafka cluster on the FlashArray//X DirectFlash Fabric setup:

- Operating System: CentOS Linux 7.6

- Server Host Bus Adapter (host to Pure Storage FlashArray//X90R2 connectivity): Mellanox ConnectX-4 Lx (Dual Port 25 GbE)

- Apache Kafka version: 2.2.0 (Scala 2.12 build, kafka_2.12-2.2.0)

- CPU: Intel(R) Xeon(R) Platinum 8160 CPU @ 2.10GHz (48 cores)

- Memory: 512GiB System Memory, DDR4 Synchronous 2666 MHz (0.4 ns)

- Server: Cisco UCSC-C220-M5SX

The configuration for SAS/DAS based Apache Kafka cluster setup:

This cluster consists of three broker nodes. The commit logs are mounted using SAS/DAS SSD drives with the XFS file system.

Here are the specifications of each host (x86) for the Apache Kafka cluster using SAS SSD DAS:

- Operating System: CentOS Linux 7.6

- DAS: 2x 1.6TB 12Gb/s SAS SSD (RAID 0)

- Apache Kafka version: 2.2.0 (Scala 2.12 build, kafka_2.12-2.2.0)

- CPU: Intel(R) Xeon(R) Platinum 8160 CPU @ 2.10GHz (48 cores)

- Memory: 512GiB System Memory, DDR4 Synchronous 2666 MHz (0.4 ns)

- Server: Cisco UCSC-C220-M5SX

Performance test setup and Results:

The Kafka producer performance tool (org.apache.kafka.tools.ProducerPerformance) was used to generate load on the three-node cluster. The topics used by the tool have 3 partitions each and a replication factor of either 1 or 3. The testing consisted of writes only, that is, Kafka producers writing to these topics.

Here is the information on the topics used by the Kafka producer performance tool:
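As a rough illustration of how such a run can be driven, the sketch below assembles a command line for `kafka-producer-perf-test.sh`, the script shipped with Kafka that wraps `org.apache.kafka.tools.ProducerPerformance`. The broker host names, the record count, and the `batch.size` value are placeholders chosen for the example, not the values used in these tests.

```python
import shlex

def producer_perf_command(topic, record_size, acks, bootstrap_servers,
                          num_records=1_000_000, batch_size=16384):
    """Build the argument list for Kafka's producer performance script.

    num_records and batch_size are illustrative assumptions
    (16384 bytes is Kafka's default batch.size).
    """
    return [
        "bin/kafka-producer-perf-test.sh",
        "--topic", topic,
        "--num-records", str(num_records),
        "--record-size", str(record_size),   # payload size in bytes
        "--throughput", "-1",                # -1 disables throttling
        "--producer-props",
        f"bootstrap.servers={','.join(bootstrap_servers)}",
        f"acks={acks}",
        f"batch.size={batch_size}",
    ]

# Hypothetical broker addresses for a three-node cluster.
brokers = ["broker1:9092", "broker2:9092", "broker3:9092"]
cmd = producer_perf_command("hello333", record_size=100, acks=1,
                            bootstrap_servers=brokers)
print(shlex.join(cmd))
```

Running one such command per producer host, and sweeping `record_size`, would give the kind of record-size comparison charted in this post.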

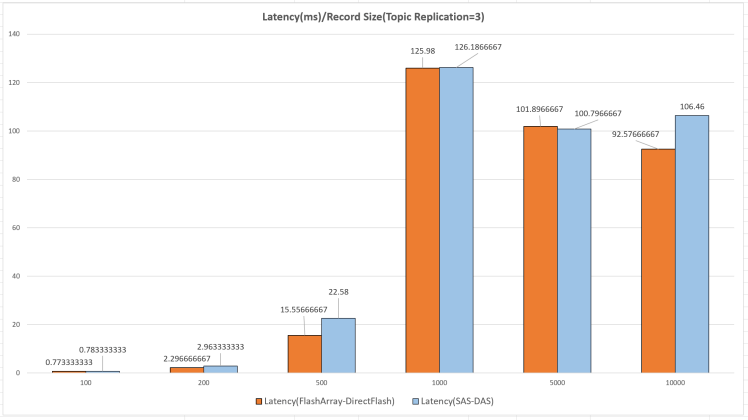

Replication Factor = 3: Performance testing

Topic:hello333 PartitionCount:3 ReplicationFactor:3

The chart below shows latency in ms versus record size on the Apache Kafka cluster based on NVMe-oF FlashArray and on the SAS-DAS cluster, using the topic hello333 shown above. It shows that write latency on the NVMe-oF FlashArray cluster is lower (up to 13% lower) than on the Apache Kafka cluster running on SAS-DAS.
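For readers who want to reproduce this kind of comparison from their own runs, here is a minimal sketch of how an "X% lower" figure is derived. The two latency values are made-up placeholders, not measurements from this test.

```python
def percent_lower(baseline, candidate):
    """Return how much lower `candidate` is than `baseline`, in percent."""
    return (baseline - candidate) / baseline * 100.0

# Hypothetical average latencies in milliseconds (not measured values).
das_latency_ms = 100.0
nvmeof_latency_ms = 87.0

reduction = percent_lower(das_latency_ms, nvmeof_latency_ms)
print(f"{reduction:.1f}% lower latency than the DAS baseline")
```

The DAS result is used as the baseline, so a positive number means the NVMe-oF cluster had lower latency.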

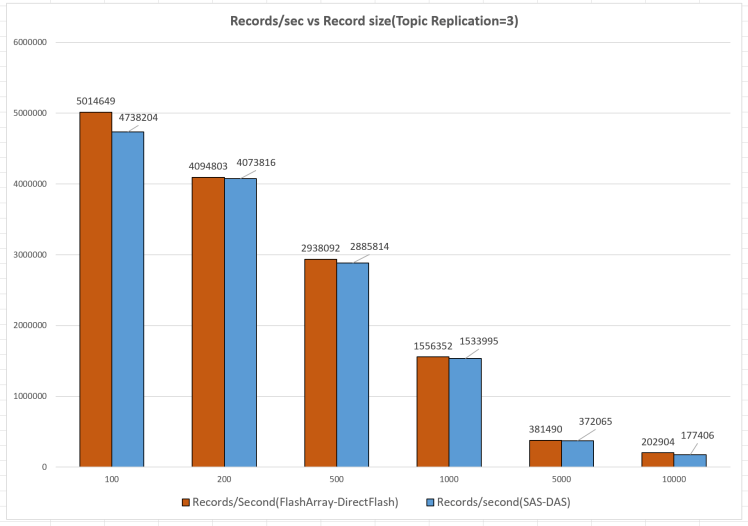

The next chart shows records/second versus message size for writes on the Apache Kafka cluster based on NVMe-oF FlashArray and on the Apache Kafka cluster based on SAS-DAS, using the topic hello333.

Here too, the Apache Kafka cluster based on NVMe-oF FlashArray delivers higher throughput (up to 6.3% higher) than SAS-DAS, even as the record/message size increases. For the 100-byte record size, we crossed 5 million records/second with 3 producers, even with a replication factor of 3!
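To put the 100-byte result in bandwidth terms, the sketch below converts a record rate into bytes per second. It assumes the 5 million records/second figure is the aggregate across the 3 producers, and it ignores per-record protocol overhead and any compression, so treat the numbers as a back-of-the-envelope estimate.

```python
def ingest_bandwidth(records_per_sec, record_size_bytes, replication_factor=1):
    """Return (producer payload bytes/s, bytes/s written across all replicas)."""
    producer_bytes = records_per_sec * record_size_bytes
    cluster_bytes = producer_bytes * replication_factor
    return producer_bytes, cluster_bytes

# 5 million records/s at 100 bytes each, replication factor 3.
producer_bps, cluster_bps = ingest_bandwidth(5_000_000, 100, replication_factor=3)
print(f"producer payload: {producer_bps / 1e6:.0f} MB/s")
print(f"written across replicas: {cluster_bps / 1e6:.0f} MB/s")
```

With a replication factor of 3, every record is stored three times, which is why the storage-side write volume is three times the producer payload.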

This was done with acks=1, which means the leader writes the record to its local log and responds without awaiting full acknowledgment from all followers. With acks=-1, throughput decreases significantly for both the Apache Kafka cluster based on FlashArray with DirectFlash and the one based on SAS-DAS. However, the relative performance difference remained very similar to what we saw above: FlashArray with DirectFlash performed better overall than the SAS-DAS setup.
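The difference between the acks settings can be pictured with a small model. This is a simplified sketch of the semantics, not Kafka's implementation: the function and parameter names are invented for illustration, and it ignores `min.insync.replicas`, retries, and timeouts.

```python
def producer_sees_success(acks, leader_appended, followers_acked, in_sync_followers):
    """Simplified model of when a producer treats a record as written.

    acks=0        : the producer does not wait for any broker response
    acks=1        : the leader has appended the record to its local log
    acks=-1/"all" : the leader and every in-sync follower have the record
    """
    if acks == 0:
        return True
    if acks == 1:
        return leader_appended
    if acks in (-1, "all"):
        return leader_appended and followers_acked >= in_sync_followers
    raise ValueError(f"unsupported acks value: {acks!r}")

# Replication factor 3 means one leader and two followers per partition.
print(producer_sees_success(1, True, followers_acked=0, in_sync_followers=2))
print(producer_sees_success(-1, True, followers_acked=1, in_sync_followers=2))
print(producer_sees_success(-1, True, followers_acked=2, in_sync_followers=2))
```

Because acks=-1 waits for follower replication before acknowledging, each record spends longer in flight, which is consistent with the throughput drop described above.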

Summary

Apache Kafka performance on FlashArray with DirectFlash is in the same range as, or greater than, DAS. Moreover, with FlashArray data services such as data reduction and data protection, Pure Storage FlashArray with NVMe-oF is a better choice than DAS for Apache Kafka deployments. I would highly recommend testing and deploying this architecture, based on FlashArray with DirectFlash and NVMe-oF, to take advantage of the modern, high-performance shared storage provided by Pure Storage FlashArray.
