Summary

New startup storage solutions are promising SDS-like benefits with extensive features, simple operations, and unlimited scale. But the complex initial implementations and ongoing stability challenges tell a different story.

This is Part 3 of a five-part series diving into the claims of new data storage platforms. Read Part 1 and Part 2.

For over a decade, software-defined storage (SDS) claimed to be a compelling alternative to complex and expensive enterprise data storage. Among its claims: unparalleled flexibility, hardware independence, and cost savings from commodity hardware.

Unfortunately, real-world implementations proved impractical. Even public clouds that initially built their infrastructure on commodity hardware components found that efficiencies at scale could only be achieved by engineering hardware and software together.

Fast-forward to today, and many new, unproven startup storage solutions are marketing themselves as “software-only” and “hardware agnostic,” promising SDS-like benefits with extensive features, simple operations, and unlimited scale. But can this be true?

The Hype: New SDS Solutions, Same Old Complexity

SDS-based startups promise seamless storage solutions, but those solutions are marred by complex initial implementations, ongoing stability challenges, and buyer regret. Deploying these systems often means non-stop troubleshooting, patching, and experimentation with different storage configurations, which causes frustration and wastes valuable resources.

These SDS solutions also struggle with inefficiencies. Commodity components are attractive for their low acquisition cost, but the lack of optimization between hardware and software shows up in the energy efficiency of these systems. With the world on the verge of an energy crisis, every watt saved is a watt that can be provisioned to new workloads. And with AI investments ramping up, these watts will be precious!

Let’s look at some of the enduring storage management burdens that tend to follow the implementation of these solutions.

1. Initial Deployment Is a Headache

The challenges start right at deployment. Installing these systems can feel a little like attempting to solve a Millennium Prize Problem. The hardware is a complicated concoction of compute boxes, storage boxes, switching fabric, and a lot of cables. Despite claims of being “hardware agnostic,” these solutions actually support only a narrow set of specific components from select “certified or qualified vendors.” Because those components still vary from one to the next, the initial configuration is complex, and organizations often spend weeks working with vendor-trained engineers to get the system up and running.

Unfortunately, the time to first I/O is only the beginning.

While these new storage solutions claim to be feature-rich, many of their features are drawn from various open-source software projects. Many of these features are not enterprise-ready, and configuring them correctly proves complex. Some key features are turned off by default, causing operational headaches down the road. For example, one vendor turns off Data-at-Rest Encryption (D@RE) by default, even though it is crucial to keeping customer data secure. D@RE can be enabled after the system is up and running, but doing so can impact ongoing performance unpredictably, perhaps even leaving the system unable to meet its performance SLAs. Self-encrypting drives (SEDs) can minimize the performance impact of encryption, but they tend to be significantly more expensive than regular drives. This vendor's benchmark results, run with encryption off, may suggest otherwise, but customers deploying this solution must choose between accepting a performance impact and paying extra to acquire SEDs.

2. Day-to-day Operations Are Challenging

Unfortunately, operating these new storage solutions doesn’t get any easier after the initial deployment is complete. Customers have to remain vigilant to ensure that their storage system is running optimally. That means dealing with a variety of third-party patches and even custom software fixes, which can increase downtime and risk and extend resolution times. Many of these solutions have limited monitoring capabilities: they provide some reactive information, such as array and user statistics and error alerts, but they cannot auto-detect problems, offer self-service solutions, or forecast future requirements.

These new storage solutions exhibit all the hallmark SDS issues with meeting performance, reliability, efficiency, and scaling objectives. In theory, you can scale compute and storage nodes independently, in different quantities as needed. In reality, the compute and storage nodes are scaled together, often leading to overprovisioned resources and workload-balancing problems. Even when scaled as suggested, these solutions often fail to deliver consistent performance in all scenarios, particularly with small I/O. As the clusters scale and become asymmetric, there’s even more chance of unpredictable and imbalanced performance.
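To make the overprovisioning point concrete, here is a minimal sketch using entirely hypothetical numbers. The per-node throughput and capacity figures, the function names, and the one-to-one pairing of compute and storage nodes are all assumptions made for illustration; they do not describe any particular vendor's product.

```python
import math

# Hypothetical per-node capabilities (illustrative assumptions only).
COMPUTE_NODE_GBPS = 10   # throughput one compute node can serve
STORAGE_NODE_TB = 100    # usable capacity one storage node provides


def independent_scaling(required_gbps, required_tb):
    """Nodes needed when compute and storage can be scaled separately."""
    compute = math.ceil(required_gbps / COMPUTE_NODE_GBPS)
    storage = math.ceil(required_tb / STORAGE_NODE_TB)
    return compute, storage


def coupled_scaling(required_gbps, required_tb):
    """Nodes needed when compute and storage must be added as 1:1 pairs."""
    compute, storage = independent_scaling(required_gbps, required_tb)
    pairs = max(compute, storage)  # the larger requirement dictates both
    return pairs, pairs


def overprovisioned(required_gbps, required_tb):
    """Extra (compute, storage) nodes bought only because scaling is coupled."""
    ind_c, ind_s = independent_scaling(required_gbps, required_tb)
    cpl_c, cpl_s = coupled_scaling(required_gbps, required_tb)
    return cpl_c - ind_c, cpl_s - ind_s


if __name__ == "__main__":
    # A capacity-heavy workload: modest throughput, lots of data.
    print("independent:", independent_scaling(40, 2000))
    print("coupled:    ", coupled_scaling(40, 2000))
    print("extra nodes:", overprovisioned(40, 2000))
```

Under these assumed figures, a capacity-heavy workload that needs only a few compute nodes still ends up with one compute node per storage node when the two are scaled together. Real clusters have more dimensions (network, metadata, rebuild headroom), so this is only a rough way to reason about the gap.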

It is no surprise that some of these new storage vendors fall back on a dedicated Slack channel and assign dedicated engineering experts to provide ongoing support for each of their installations. We understand why they need these efforts to mask instability and complexity, but the approach is misguided and does not benefit customers in the long term.

3. Long-term Ramifications Can Be Severe

These SDS-based storage solutions add unforeseen risk to their customers’ storage environments, from both a hardware and a software perspective. From a software perspective, these storage startups claim a rich feature set and all-inclusive support. Many of these features are even included at the time of purchase for no additional cost, but with a disclaimer that existing or future features may be licensed separately. From a hardware perspective, we discussed the complexity of deploying various specialized components. Some of these components have already proven not to be viable in the market long term, which undermines an organization’s ability to protect its investment by scaling and leveraging the architecture over time. Forklift upgrades and wholesale migrations are painful, so the long-term viability of any architecture is essential.

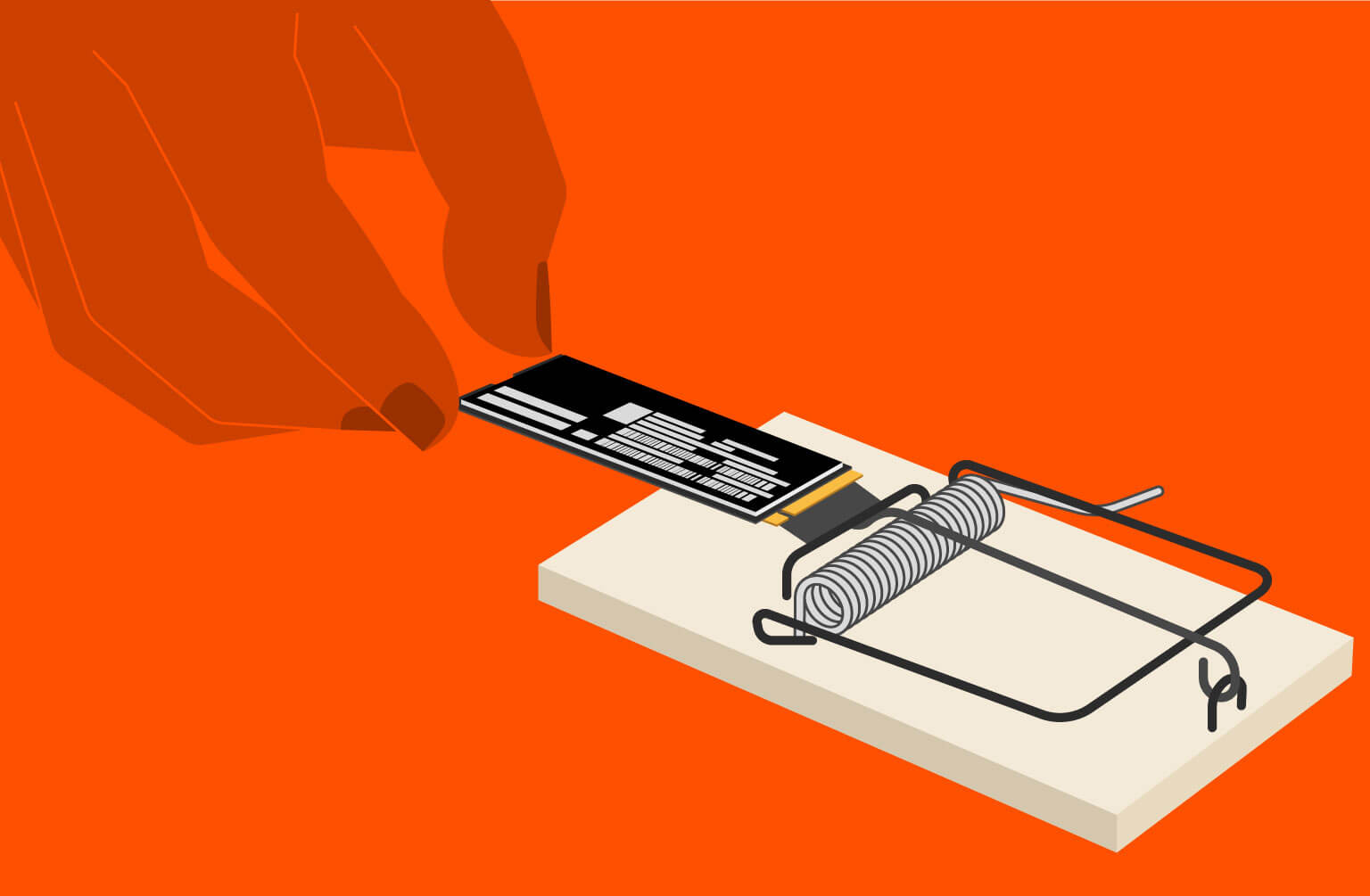

Here’s an example: One specific SDS storage solution uses “Optane” storage class memory (SCM) in its architecture as a critical enabler of performance. The SCM is used as a landing spot for writes, read backs, and metadata. With Intel discontinuing the Optane program, the storage startup claims that enhanced SLC can replace SCM without issue. The truth is that SLC has only one-third of the write performance of SCM and inconsistent latency, adversely impacting cluster performance and adding unforeseen costs. Lastly, the proprietary networking architecture used in these SDS solutions makes scaling to large capacities complex and time-consuming.

Simplicity as a Core Tenet

As modern workloads such as large language models (LLMs) and retrieval-augmented generation (RAG) take center stage, organizations need a performant data platform—not a storage solution that claims to be a modern SDS.

At Pure Storage, we handle these challenges by engineering simplicity into our products from day one. The Pure Storage Platform delivers an intuitive design and simplicity during setup, management, and scale, enabling organizations to get the most from their data. We also offer industry-leading density and energy efficiency driven by the tight integration between our Purity operating system and our DirectFlash® Modules.

Go beyond the hype. Discover how the Pure Storage Platform can future-proof your infrastructure and unlock the full potential of your AI initiatives.
