Summary

Pure Storage builds its own flash storage devices that are purpose-built for enterprise workloads and requirements. Our DirectFlash Modules are two to five times more efficient than COTS SSDs and deliver a much lower $/GB cost.

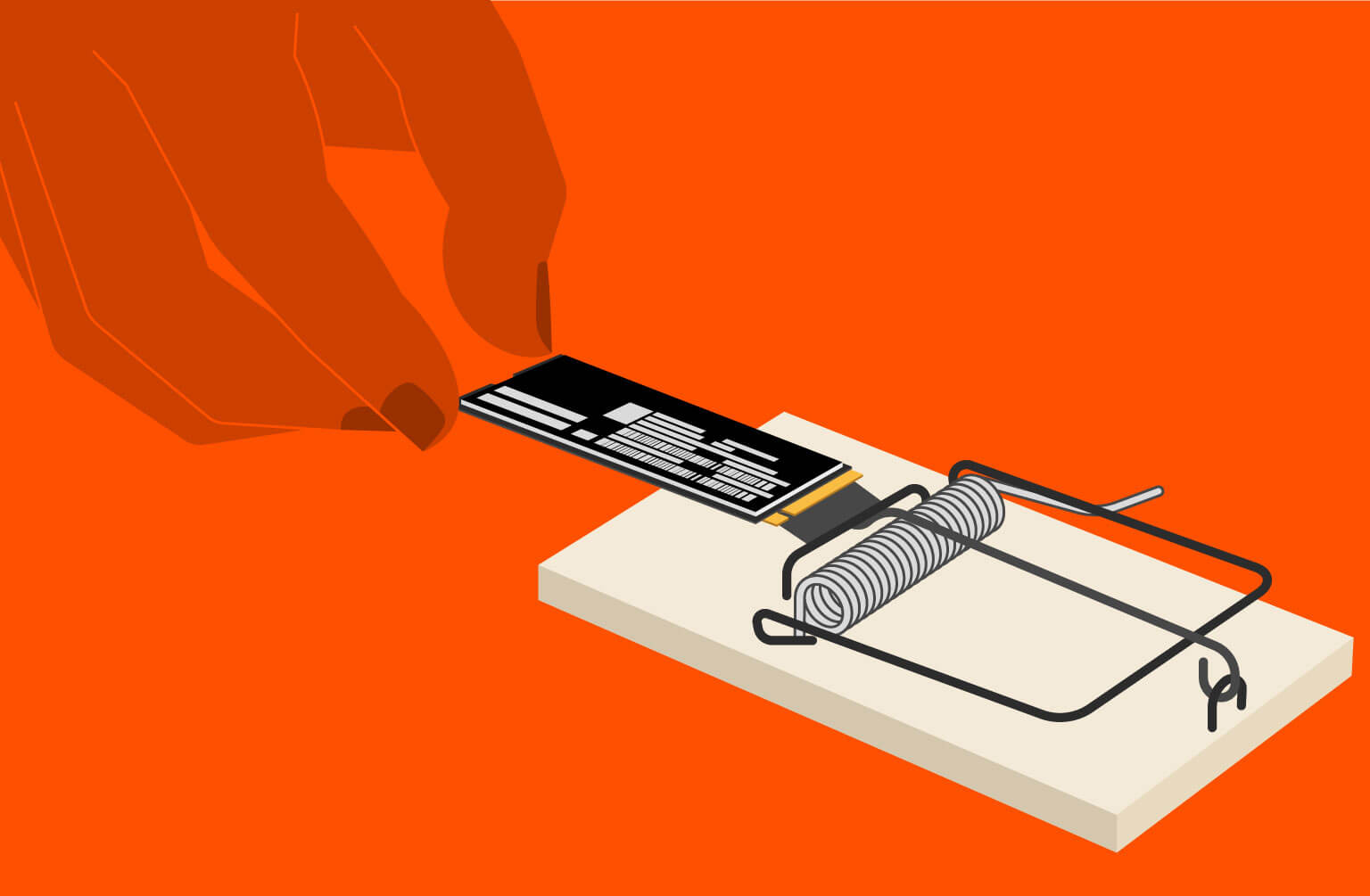

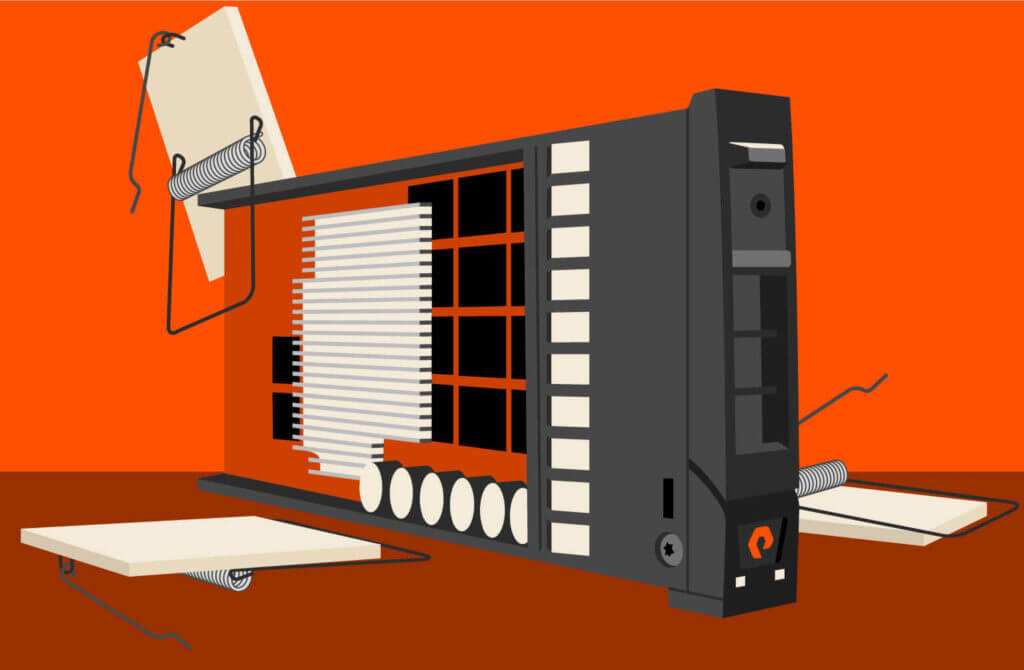

When it comes to the storage devices at the heart of all-flash array (AFA) systems, there are two product strategies: vendors either use commodity off-the-shelf (COTS) SSDs sourced from disk vendors or build their own devices. We’ve seen the implications of going down the COTS SSD path in Part 1 of this “Beyond the Hype” blog on “The SSD Trap.” How do these two approaches compare? We’ll look at that in more detail in Part 2 of this blog, but in a nutshell, purpose-built flash storage devices for enterprise use from Pure Storage, called DirectFlash® Modules (DFMs), are two to five times more efficient than COTS SSDs (and 10 times more efficient than HDDs, but we’re not going to spend any more time on that in this blog). That improved efficiency translates to more compact, less costly systems that offer a variety of advantages for enterprises and effectively sounds the death knell for HDDs in mainstream enterprise workloads.

Pure Storage builds its own flash storage devices primarily to overcome the limitations of COTS SSDs and enable the deployment of significantly more efficient storage infrastructure. Today, while disk vendors are shipping 15.72TB SSDs in volume, we’re shipping 75TB and 150TB DFMs, and we expect to ship 300TB DFMs by the end of 2025. Those large device sizes allow us to deliver a much lower $/GB cost than COTS SSDs, and because of the way we build and manage them, we also beat disk vendor products in a number of other ways:

- We deliver better performance consistency at scale, particularly as our storage devices fill up.

- We guarantee our flash media endurance for twice the extended warranty period available on COTS SSDs.

- Our reliability at the device level is six times better than enterprise COTS SSDs.

- Our AFAs today have the highest TB/watt metrics¹, and we guarantee that our systems are the most energy efficient in the industry.

- Our AFAs today have the highest TB/U (600TB/U with 75TB DFMs) in the industry, and that lead will be extended when we hit 2.4PB/U at the end of 2025.

- Our capacity utilization at the device level is higher (we’re achieving better than 82% in customer production environments today and expect to increase that to the mid to high 80s by the end of this year).

How can we do this and make these devices viable for enterprise storage system use? Let’s take a look at that.

Designed for Enterprise Workloads

Pure Storage doesn’t sell consumer products; we only sell enterprise storage solutions. So when we designed our DFMs, we didn’t have to worry about consumer features and capabilities that would block our ability to build the best devices for enterprise use. This is a major reason why we achieve the performance, endurance, reliability, low energy consumption, storage density, and overall efficiency that enterprise workloads require. The power and thermal management challenges of creating even a 61.44TB device while constrained by the limits of 2.5” COTS SSD packaging are daunting. Our DFM packaging offers significantly more capability to pack, power, and cool much higher densities than could fit into SSD packaging.

When we say “purpose-built” for enterprise workloads and requirements, we really mean it.

Optimal Flash Media Utilization

Our devices are not limited by HDD form factors. We can configure up to four DFMs on each blade and can insert up to 10 blades into a 5U chassis (that’s on our FlashBlade//S™ systems). Unlike COTS SSDs, which have a full controller, DRAM, and flash media in each device, our DFMs hold little besides flash media. This lets us pack more flash media into each device and deliver a lower $/GB cost for flash storage devices than our competitors.
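To make the density math concrete, here is a minimal sketch that multiplies out the configuration described above (four DFMs per blade, 10 blades per 5U chassis). It assumes a fully populated chassis and counts raw capacity only; the function and constant names are ours, purely for illustration.

```python
# Configuration figures from the article: up to 4 DFMs per blade,
# up to 10 blades per 5U chassis. Assumes every slot is populated.
DFMS_PER_BLADE = 4
BLADES_PER_CHASSIS = 10
CHASSIS_RACK_UNITS = 5


def chassis_density(dfm_capacity_tb):
    """Return (raw TB per chassis, raw TB per rack unit)."""
    total_tb = dfm_capacity_tb * DFMS_PER_BLADE * BLADES_PER_CHASSIS
    return total_tb, total_tb / CHASSIS_RACK_UNITS


for capacity_tb in (75, 150, 300):
    total_tb, tb_per_u = chassis_density(capacity_tb)
    print(f"{capacity_tb}TB DFM: {total_tb:.0f}TB per chassis, {tb_per_u:.0f}TB/U")
```

With 75TB DFMs this works out to 600TB/U, and with 300TB DFMs to 12PB in 5U, which lines up with the figures quoted elsewhere in this post.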

Our DFMs do not need embedded controllers. Our AFA design moves most of the tasks done in firmware on systems that use COTS SSDs into our Purity storage operating system software, which runs on our controllers. Each of our DFMs still has a NAND flash controller with firmware that provides direct memory access to the NAND. Purity maps and presents the flash media in each DFM, giving us the ability to manage it directly with software (not firmware) and the global visibility to manage the flash media across the entire system optimally. Our significantly reduced firmware content relative to systems using COTS SSDs is a major contributor to our six times better reliability at the device level.

Our DFMs also don’t need on-board DRAM. We use DRAM on our controllers, which is shared between all devices, allowing us to make much more efficient use of expensive memory capacity. This means each of our systems needs far less DRAM, saving on both energy consumption and cost and resulting in improved reliability.²

The ability to schedule all I/O operations at the system level allows us to manage the flash media to deliver more consistent performance, higher endurance and reliability, and higher capacity utilization than COTS SSDs. For example, a COTS SSD starts garbage collection whenever it decides it needs to. Any I/Os to that SSD are delayed until garbage collection is complete, and the system has no view into when garbage collection might start or end; it only knows that I/O requests to that device are delayed. In our case, garbage collection is scheduled to occur in each DFM based on what is happening in the entire system, so we don’t have cases where read or write I/O is unexpectedly blocked.
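The difference between device-local and system-level garbage collection can be illustrated with a toy scheduler. This is only a sketch under our own assumptions, not how Purity is implemented: the `Device` fields, the thresholds, and the "idle devices first" policy are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    pending_io: int      # queued host I/Os (hypothetical metric)
    reclaimable: float   # fraction of blocks awaiting garbage collection


def pick_gc_candidates(devices, max_concurrent=1, min_reclaimable=0.2):
    """Toy system-level policy: only idle devices may collect garbage,
    and the ones with the most reclaimable space go first."""
    eligible = [
        d for d in devices
        if d.pending_io == 0 and d.reclaimable >= min_reclaimable
    ]
    eligible.sort(key=lambda d: d.reclaimable, reverse=True)
    return [d.name for d in eligible[:max_concurrent]]


fleet = [
    Device("dfm0", pending_io=12, reclaimable=0.45),  # busy: skipped for now
    Device("dfm1", pending_io=0, reclaimable=0.10),   # idle, little to reclaim
    Device("dfm2", pending_io=0, reclaimable=0.30),   # idle and worth collecting
]
print(pick_gc_candidates(fleet))
```

The point of the sketch is that the decision is made with visibility across all devices, so the busiest device is never the one that pauses to collect garbage.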

Because we can manage the flash media more efficiently, we need far less overprovisioning. Our DFMs have 3%-5% media overprovisioning compared to the 15%-20% in COTS SSDs. Our design increases storage density and saves on flash $/GB costs per DFM.
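As a rough illustration of what those overprovisioning ranges mean for usable capacity, the sketch below assumes the common definition of overprovisioning as (raw capacity - user capacity) / user capacity. The 100TB raw figure is a hypothetical round number, not a product specification.

```python
def user_capacity_tb(raw_tb, overprovisioning):
    """User-visible capacity, assuming OP = (raw - user) / user."""
    if overprovisioning < 0:
        raise ValueError("overprovisioning must be non-negative")
    return raw_tb / (1.0 + overprovisioning)


RAW_TB = 100.0  # hypothetical raw media per device, for illustration only

for label, op in (("DFM, 3% OP", 0.03), ("DFM, 5% OP", 0.05),
                  ("COTS SSD, 15% OP", 0.15), ("COTS SSD, 20% OP", 0.20)):
    print(f"{label}: {user_capacity_tb(RAW_TB, op):.1f}TB usable of {RAW_TB:.0f}TB raw")
```

Under that assumption, the same raw media yields noticeably more usable capacity at 3%-5% overprovisioning than at 15%-20%.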

In enterprise storage systems in general, the two most common sources of failures are the firmware (distinct from the software) and the storage devices themselves. Systems that use COTS SSDs have a lot of firmware, both in the SSD itself and in the flash translation layer (FTL). Our DFMs, on the other hand, have very little firmware. The flash media is managed directly by the Purity storage operating system software, and we don’t have any controller or DRAM on the DFMs that require firmware (although we do have a NAND flash media controller). This is one contributing factor to our reliability, which, based on our monitoring of DFMs in the field since 2017, is on average six times better than that of COTS SSDs. The annual failure rate (AFR) on our DFMs is 0.15%, while it’s 0.90% on COTS SSDs.³ So we have far fewer cases where a storage device fails and needs to be replaced.
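To put those AFR figures in operational terms, here is a small sketch that converts them into expected device replacements per year. The fleet size of 10,000 devices is a hypothetical number chosen for easy arithmetic, and the calculation treats AFR as a simple per-device annual probability.

```python
DFM_AFR = 0.0015       # 0.15% annual failure rate, from the article
COTS_SSD_AFR = 0.0090  # 0.90% annual failure rate, from the article


def expected_annual_failures(device_count, afr):
    """Expected number of device failures per year for a fleet."""
    return device_count * afr


FLEET_SIZE = 10_000  # hypothetical fleet size, for illustration only

dfm_failures = expected_annual_failures(FLEET_SIZE, DFM_AFR)
ssd_failures = expected_annual_failures(FLEET_SIZE, COTS_SSD_AFR)
print(f"DFMs: about {dfm_failures:.0f} failures per year")
print(f"COTS SSDs: about {ssd_failures:.0f} failures per year")
print(f"Ratio: {COTS_SSD_AFR / DFM_AFR:.1f}x")
```

Note that this compares equal device counts. Because far fewer high-capacity DFMs are needed for the same capacity, the per-system gap would be wider still.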

So we’ve seen how we address the capacity utilization issue through our direct and global management of the flash media and our DFM design, but what about rebuild times? How long does it take to rebuild a 75TB DFM? While that is a critical question for COTS SSDs, it is not the right question for DFMs. As part of our direct flash management, we have significant visibility into exactly what is happening within a DFM, covering everything from I/O load to an evolving need for garbage collection. This includes a much more granular ability to diagnose failures.

On a flash device, an I/O error most often occurs because of a failure at the block, page, or die level. COTS SSDs cannot diagnose the error and communicate it to the system at that level; the system just sees that there was a device error. After a certain number of device errors, a system will recommend that a device be replaced, which for a COTS SSD would require a rebuild of the entire device. In our case, we can isolate the failure to the specific component within a DFM, while all the other components are known to be good. The only data at risk is the data on the component that failed, so we focus on moving only that data to a safe location. On our 75TB device, a die would at most have a couple of terabits of data. Moving that data to a known good location (either in that device or on another device) would take on the order of tens of minutes. The same holds for a 150TB DFM. So it’s our ability to diagnose and respond to failures much more granularly, and to understand which components are still operating properly within a device, that allows us to remove rebuild times as a reason why enterprises can’t deploy our larger devices.
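A back-of-the-envelope sketch shows why moving one die's worth of data is so different from rebuilding a whole device. The 200MB/s sustained copy rate is purely our assumption, picked to keep the arithmetic simple; it is not a measured or published figure, and a real rebuild also involves parity computation that this ignores.

```python
BITS_PER_BYTE = 8
ASSUMED_COPY_RATE_MB_S = 200.0  # hypothetical sustained rate, not a measured figure


def copy_time_seconds(data_bytes, rate_mb_per_s=ASSUMED_COPY_RATE_MB_S):
    """Time to move data_bytes at a constant rate given in decimal MB/s."""
    return data_bytes / (rate_mb_per_s * 1e6)


die_bytes = 2e12 / BITS_PER_BYTE  # "a couple of terabits" taken as 2 Tb
device_bytes = 75e12              # a full 75TB device

die_minutes = copy_time_seconds(die_bytes) / 60
device_hours = copy_time_seconds(device_bytes) / 3600
print(f"One die:     about {die_minutes:.0f} minutes")
print(f"Full device: about {device_hours:.0f} hours")
```

Whatever rate you plug in, the ratio stays the same: the at-risk data on one die is a few hundred times smaller than the full device.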

When RAID-protected COTS SSDs need to be replaced and their data rebuilt, there is both computational and storage work to recreate the data and write it to new devices. This must be done because these devices do not have the diagnostic granularity to know which data is good and which is not within the device itself. In our case, we know which data is still good (which in most cases is almost all of the data) and we can evacuate instead of rebuild that data onto a new device in the event we actually need to replace a DFM. In those rare cases where we actually have to replace a DFM, this cuts the time to restore the system to a fully protected state by 95% or more.

Both of these capabilities are critical because it’s our ability to create usable, high-density flash storage devices that drives down the $/GB cost of DFMs. And it’s the low cost, combined with all the performance, reliability, and energy and floorspace consumption advantages of flash, that enables the comprehensive replacement of HDDs. And there is one more point to make here as well about extremely high-density flash devices. In 2025, when the industry may be shipping a 61.44TB SSD in volume, we’ll be shipping a 300TB DFM (which, by the way, will enable 12PB in 5U). That means that an enterprise would need to buy roughly five times as many COTS SSDs as DFMs, along with all the energy-sucking, rack space-consuming supporting infrastructure, to meet their performance and capacity requirements. If you run that comparison using 30TB HDDs, you would have to buy 10 times as many. A flash storage device twice as large as the largest usable HDD isn’t enough to cause an extinction-level event for HDDs, but one that is 10 times as large is.
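The device-count comparison is simple division, sketched below for a hypothetical 12PB raw capacity target. It ignores data protection overhead, spares, and performance requirements, all of which would change the real numbers.

```python
import math

# Device capacities in TB, as quoted in the article.
DEVICE_CAPACITY_TB = {
    "300TB DFM": 300.0,
    "61.44TB COTS SSD": 61.44,
    "30TB HDD": 30.0,
}

TARGET_TB = 12_000  # hypothetical raw capacity target (12PB)


def devices_needed(target_tb, capacity_tb):
    """Smallest whole number of devices whose raw capacity meets the target."""
    return math.ceil(target_tb / capacity_tb)


counts = {name: devices_needed(TARGET_TB, cap)
          for name, cap in DEVICE_CAPACITY_TB.items()}
for name, count in counts.items():
    print(f"{name}: {count} devices")
```

Every additional device also needs a slot, power, and cooling, which is where the supporting-infrastructure cost comes from.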

This is why Pure Storage characterizes enterprise storage vendors who base their systems on COTS SSDs as having—to quote the decrepit crusader from Indiana Jones and the Last Crusade—“chosen poorly.” And why we’re all in with a product strategy based on flash storage devices that are purpose-built for enterprise workloads.

¹ A 30TB QLC SSD consumes around 25 watts, driving a TB/watt of 1.2. A Pure Storage 75TB DFM consumes around 15 watts, driving a TB/watt of 4.88. Source: https://www.storagereview.com/news/pure-storage-adds-75tb-flash-module-flasharray-e-and-new-flasharray-x-c-r4-nodes

² A paper published by Facebook (“Revisiting Memory Errors in Large-Scale Production Data Centers: Analysis and Modeling of New Trends from the Field,” Justin Meza et al, June 2015) indicated that higher DIMM capacity in servers in Facebook’s IT infrastructure was correlated with significantly higher server failure rates.

³ https://www.backblaze.com/blog/ssd-edition-2023-mid-year-drive-stats-review