Summary

Some newer NVMe-based, software-defined storage (SDS) startups are leveraging commodity off-the-shelf (COTS) SSDs in their solutions. However, using COTS SSDs poses limitations for these vendors and for customers who choose these systems.

As quad-level cell (QLC) NAND flash media becomes more prevalent in storage systems, SSDs are becoming more cost-effective and, with that, deployments of traditional HDDs are dropping. All of the same advantages that drove the historic displacement of HDDs for more performance-intensive workloads are now being applied to all workloads, driven by the dropping $/GB costs of QLC media. At the same time, the AI opportunity has brought urgency to enterprises that want to activate and monetize their data in an operationally efficient way.

The swan song of HDDs has begun, but is it realistic at this point to schedule the wake?

In this two-part installment of our “Beyond the Hype” blog series, we’ll discuss why using commodity off-the-shelf (COTS) SSDs, proposed by some newer NVMe-based, software-defined storage (SDS) startups, is a suboptimal alternative to all-flash arrays (AFAs) that use purpose-built flash storage devices to replace HDDs. In Part 2, we’ll get to more of a direct comparison between COTS SSDs and Pure Storage® DirectFlash® Modules.

Sustainability Concerns Will Hasten the Demise of HDDs

When compared to legacy HDD storage systems, flash-based storage offers overwhelming benefits for all workloads: superior performance, higher reliability, increased storage density, lower power consumption, and lower operational overhead. From 2012 to 2019, AFAs rose in popularity, and they now drive approximately 80% or more of all storage shipments for performant application environments.

HDDs have essentially been left in the magnetic dust.

Why? As more enterprises prioritize sustainability as a key criterion for new AFA purchases, metrics like energy efficiency (TB/watt) and storage density (TB/U) become critical in evaluating the cost of new systems. SSDs’ higher density (relative to HDDs) and significantly higher performance are key contributing factors: they result in better efficiency and ultimately lower cost because far fewer media devices (as well as far less supporting infrastructure for those devices, like controllers, enclosures, fans, power supplies, cables, switches, etc.) are needed to build a system to meet any given performance and capacity requirements.

At Pure//Accelerate® 2023, we put a stake in the ground that 2028 would be the last year when new storage systems built around HDDs would be sold for enterprise use. What was lost amidst the hullabaloo generated by that statement was this: Pure Storage’s aggressive prediction was based on enterprises using our flash devices, not commodity off-the-shelf (COTS) SSDs. We’re bullish on flash, but even we don’t think COTS SSDs are going to drive out HDDs this decade.

There’s something very different and innovative about how we, at Pure Storage, deploy flash in our enterprise storage systems. We know this because Pure Storage DirectFlash Modules (DFMs) are proven to be two to five times better than COTS SSDs on every meaningful metric. And they’re 10 times better than HDDs on every metric except one: the raw acquisition cost.

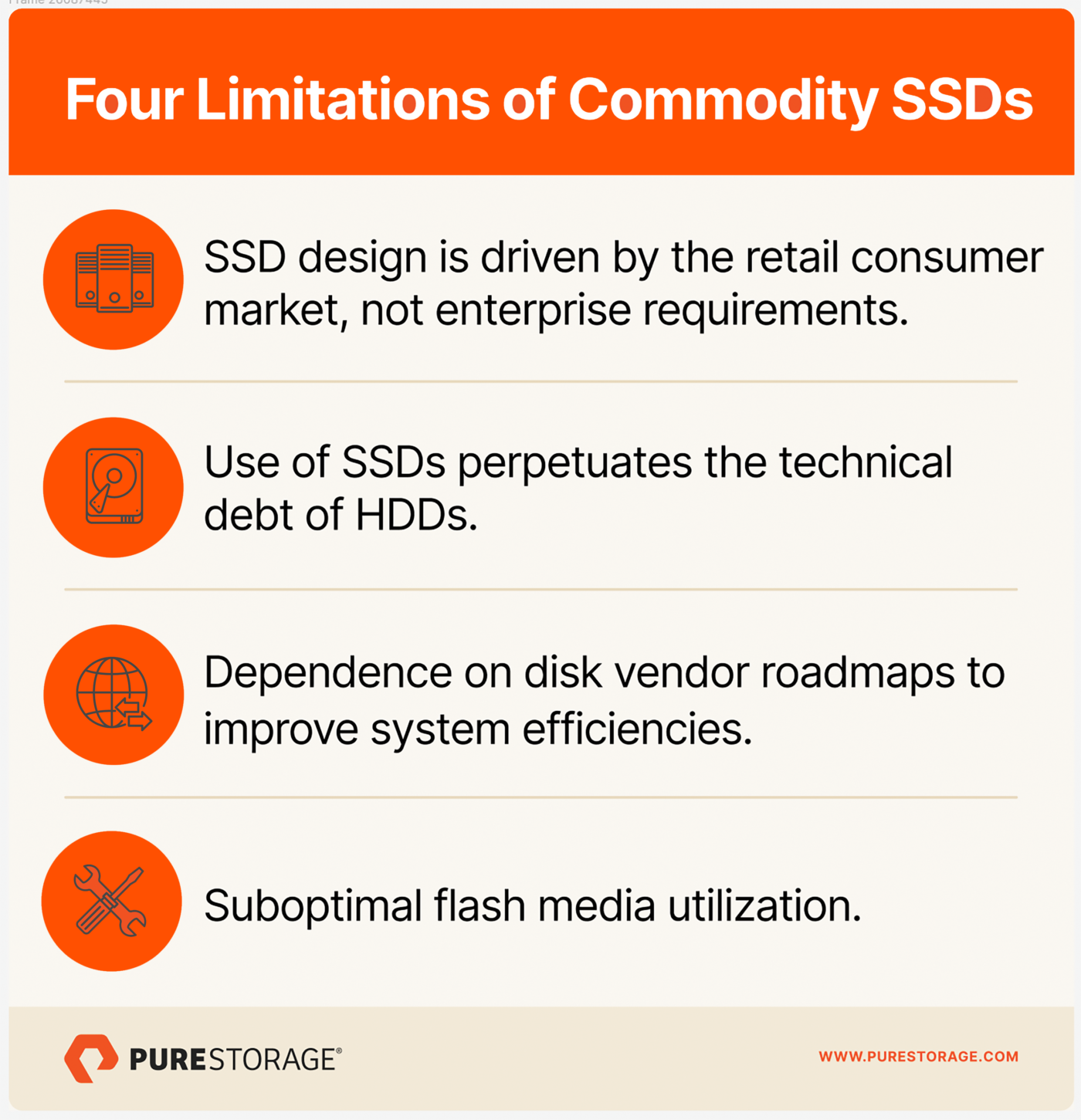

Limitations of Vendors Who Leverage COTS SSDs

Four things work against vendors using COTS SSDs, even when those are NVMe-based. Let’s take a look at each of them:

- SSD design is driven by the volume of the retail consumer markets rather than enterprise requirements.

- Use of COTS SSDs perpetuates the technical debt of HDDs.

- It creates dependence on disk vendor roadmaps to improve system efficiencies.

- It results in suboptimal flash media utilization.

SSD Design Is Driven by the Volume of the Retail Consumer Markets Rather than Enterprise Requirements

In the world of storage media, manufacturing cost efficiencies are directly correlated to production volumes. Consumer (PCs and mobile), not enterprise, SSDs comprise the volume market. In fact, enterprise SSDs comprise roughly only 15% of the overall SSD market. Following the volume, key innovations for COTS SSD technology are driven by the consumer market, which values low cost and lower capacities, not enterprise requirements. Conversely, enterprises value performance, endurance, reliability, and data integrity—all characteristics that require deep engineering beyond the core consumer SSDs. This dichotomy continues to hamstring the innovation cycle for COTS SSDs and their application to enterprise markets.

Use of COTS SSDs Perpetuates the Technical Debt of HDDs

SSDs were designed so that they could be easily inserted into storage systems that had been designed for HDDs. Specifically, COTS SSDs are largely designed to fit into 2.5” Small Form Factor (SFF) drive enclosures. Most enterprise AFAs were developed specifically to use these COTS SSDs. Enterprise AFAs using devices that have to fit into 2.5” SFF footprints are constrained in their ability to increase density, deliver lower watts/TB, and use flash media optimally.

Just what are those limitations? COTS SSDs include an internal controller, DRAM for caching to help improve performance, and the flash media itself. Architecturally, a COTS SSD needs 1GB of DRAM for every 1TB of flash capacity, primarily to drive the flash translation layer (FTL). Creating a larger capacity SSD means you have to find the space within the device for the controller, the DRAM, and the flash media, as well as cool the device. It’s becoming increasingly difficult to be able to do that and stay within the confines of 2.5” packaging while driving density increases.
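To get a feel for how that DRAM requirement scales, here is a minimal Python sketch that applies the 1GB-per-1TB rule of thumb stated above. The helper name `ftl_dram_gb` and the 200-drive system size are illustrative assumptions, not figures from any vendor.

```python
# Rule of thumb from the text: about 1 GB of DRAM per 1 TB of flash,
# primarily to drive the flash translation layer (FTL).
DRAM_GB_PER_FLASH_TB = 1.0


def ftl_dram_gb(drive_capacity_tb: float, drive_count: int = 1) -> float:
    """Estimate the total on-drive DRAM (GB) for a set of identical SSDs.

    Hypothetical helper for illustration only.
    """
    if drive_capacity_tb < 0 or drive_count < 0:
        raise ValueError("capacity and drive count must be non-negative")
    return drive_capacity_tb * DRAM_GB_PER_FLASH_TB * drive_count


if __name__ == "__main__":
    for capacity_tb in (15.36, 30.72, 61.44):
        print(f"{capacity_tb:.2f}TB SSD: ~{ftl_dram_gb(capacity_tb):.2f}GB of DRAM")
    # Assumed system size of 200 drives, chosen only as an example.
    print(f"200 x 15.36TB SSDs: ~{ftl_dram_gb(15.36, 200):.0f}GB of DRAM")
```

Under this rule, doubling the device capacity doubles the DRAM that has to fit (and be powered and cooled) inside the same package.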

First, let’s look at the controller and its resulting firmware requirements. The SSDs themselves have embedded controllers that handle logical block addressing, free space management, and background maintenance tasks, as well as the FTL. They also include embedded NAND flash controllers which handle the direct memory access to the NAND. These controllers all have firmware, and each enterprise storage system can easily have hundreds of SSDs so there is a lot of firmware. Firmware is one of the top two causes of failure in AFAs (with device failures themselves being the most common). This maze of firmware complexity poses reliability risks.

If you’ve ever wondered why most system vendors don’t recommend upgrading SSD-level firmware online, it’s because of the risk associated with that given the firmware complexity. The net result of this is that it is rare for an enterprise to even attempt a disk firmware upgrade during the useful life of a system, effectively relegating the system to using older, less efficient disk firmware that initially shipped with the system. It would be nice to be able to non-disruptively upgrade firmware over the useful life of a system to improve performance, capacity utilization, endurance, power efficiency, and other metrics, but on systems using COTS SSDs, that rarely happens unless forced by some sort of data integrity issue.

Next, let’s look at DRAM. DRAM is much more expensive than NAND flash media, draws a lot of power relative to the flash media itself, and takes up space in the device that does not directly contribute to its usable capacity. The amount of DRAM required in larger capacity SSDs is a limiting factor when you need to fit into a 2.5” form factor. And in systems with hundreds of SSDs, that’s a lot of DRAM—all of which is drawing far more energy on a per GB basis than the NAND flash media itself. DRAM is also subject to failures which affect device reliability. Larger SSDs requiring more DRAM increase DRAM reliability concerns.

While a new disk packaging approach referred to as Enterprise and Data Center Standard Form Factor (EDSFF) has emerged and does allow for slightly denser storage capacity, it’s still limited by the same pesky “HDD packaging” factors and currently challenged to go beyond 30.72TB in size while maintaining reliability.

But wasn’t NVMe developed specifically for flash devices? Yes, but it was primarily engineered to reduce latency and increase bandwidth on flash systems. On those metrics, it clearly outperforms SAS, but it has far less impact on endurance, reliability, capacity utilization, or energy efficiency when used with COTS SSDs that are still managed by individual controllers working in isolation.

Dependence on Disk Vendor Roadmaps to Improve Efficiencies

A storage appliance architecture based around COTS SSDs means the storage system vendor is reliant on the SSD roadmaps of the disk vendors for improvements in energy efficiency and storage density. At first blush, this might sound good—the media vendors can focus on making the “best” SSDs and the storage appliance vendors using COTS SSDs in their systems can focus on making the best systems, right? Let’s take a closer look.

Today, 15.36TB SSDs are available in volume, 30.72TB SSDs are available (but not in volume yet, so the prices are still relatively high), and 61.44TB SSDs are on the price list of at least one disk vendor (although availability seems limited and prices are sky high). At a market level, there are questions about whether 30.72TB SSDs will achieve the price drop associated with high-volume shipments, and even more questions about whether the 61.44TB devices ever will. There is no doubt that larger capacity flash devices make a huge difference in the energy efficiency of a storage platform: they lower power and rack space consumption on a per terabyte basis, and they open up the opportunity to buy fewer devices to hit a target performance and capacity goal at the system level. In theory, this improves reliability as well since a system with fewer devices also requires far less supporting infrastructure (controllers, enclosures, fans, power supplies, cables, switches, etc.).

However, creating a larger capacity SSD is not just a simple matter of fitting more flash media into the device. Depending on form factor, you have to fit the controller, DRAM (1GB per TB), and flash media into the package. Assuming a vendor can achieve that, there are valid enterprise concerns about SSDs beyond 15.36TB in size, specifically capacity utilization and disk rebuild times.

Regardless of disk vendors’ claims about capacity utilization at the device level, most storage system vendors don’t recommend that you fill SSDs in a storage system more than 60%-70% full. (It’s even worse with HDDs, however, since system vendors suggest not filling those devices more than 50%-60% full.) This inability to fully utilize all the capacity of those SSDs means that you have to buy more of them to meet any given performance and capacity goals.
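The effect of a fill ceiling on device count can be sketched in a few lines of Python. This is a rough illustration only: the 1,000TB usable target is an assumed figure, `drives_needed` is a hypothetical helper, and the calculation ignores RAID/EC overhead, data reduction, and performance requirements.

```python
import math


def drives_needed(target_usable_tb: float,
                  drive_capacity_tb: float,
                  max_fill_fraction: float) -> int:
    """Number of identical drives needed to reach a usable-capacity target
    when each drive may only be filled to max_fill_fraction.

    Hypothetical helper; ignores protection overhead and performance sizing.
    """
    if not 0 < max_fill_fraction <= 1:
        raise ValueError("max_fill_fraction must be in (0, 1]")
    if drive_capacity_tb <= 0:
        raise ValueError("drive_capacity_tb must be positive")
    usable_per_drive_tb = drive_capacity_tb * max_fill_fraction
    return math.ceil(target_usable_tb / usable_per_drive_tb)


if __name__ == "__main__":
    target_tb = 1000  # assumed capacity goal, for illustration only
    for fill in (1.0, 0.7, 0.6):
        count = drives_needed(target_tb, 15.36, fill)
        print(f"fill ceiling {fill:.0%}: {count} x 15.36TB drives")
```

With these assumed inputs, a 70% ceiling raises the count from 66 drives to 94, and a 60% ceiling raises it to 109.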

To increase the capacity utilization of the flash media in each device, vendors can add a large cache layer. This cache acts as a higher-performance write buffer and its large size allows it to perform write coalescing to increase the endurance and capacity utilization of the backing flash better than conventional systems. But this approach has its risks. The cache usually consists of extremely high-performance storage (i.e., Optane or SLC NAND flash) which is significantly more expensive on a $/GB basis and draws far more power than QLC NAND flash. The write performance of that cache must be high enough to keep the system from hitting a write cliff as it simultaneously ingests new writes, services metadata requests, handles any read traffic, and manages how data is de-staged to lower-cost flash media. What exactly is that high-performance media, what does it cost, and where is the write cliff experienced with different workloads?

The ratio of reads to writes the high-performance media can deliver is a critical success factor in successfully handling high-performance workloads, and the ratio of the cache to the flash media will vary with the write intensity of the workload. There are performance impacts from not getting the ratio right and cost impacts from the large cache size.

Now let’s look at the rebuild time issue. Most storage system vendors implement some form of in-line, on-disk data protection like RAID or erasure coding (EC) to protect data from individual SSD failures. After a failure, enterprises worry that a second disk could fail before the rebuild of the first is complete, resulting in data unavailability or loss, and for that reason they are very concerned about disk rebuild times. When an SSD fails and must be replaced, all of the data on that device must be rebuilt. COTS SSDs are much faster than HDDs, so enterprises were more comfortable with deploying larger SSDs. Storage system vendors commonly quote an 8- to 12-hour rebuild time for a 15.36TB SSD, whereas the estimate would be 25 to 30 hours for an HDD of that size. But this assumes nothing else is going on in the system. In actual practice, the rebuild rate for an HDD using a 20-stripe EC approach that continues to service normal I/O is about 1TB per day, which means that rebuilding a 24TB HDD could take over three weeks. SSDs can rebuild much faster, but for larger capacity devices, we’re still talking about potentially many days.
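The rebuild arithmetic in this paragraph is simple division, and a short Python sketch makes the quoted numbers easy to check. Both helper names are hypothetical, and the model assumes a constant rebuild rate, which real systems under load will not hold.

```python
def rebuild_days(capacity_tb: float, rebuild_rate_tb_per_day: float) -> float:
    """Days needed to rebuild a device at a constant rate (simplified model)."""
    if rebuild_rate_tb_per_day <= 0:
        raise ValueError("rebuild rate must be positive")
    return capacity_tb / rebuild_rate_tb_per_day


def implied_rate_tb_per_hour(capacity_tb: float, rebuild_hours: float) -> float:
    """Rebuild rate implied by a quoted rebuild time for a given capacity."""
    if rebuild_hours <= 0:
        raise ValueError("rebuild_hours must be positive")
    return capacity_tb / rebuild_hours


if __name__ == "__main__":
    # HDD under normal I/O, per the text: about 1TB per day.
    days = rebuild_days(24, 1.0)
    print(f"24TB HDD at 1TB/day: {days:.0f} days ({days / 7:.1f} weeks)")

    # Idle-system SSD quotes, per the text: 8 to 12 hours for 15.36TB.
    for hours in (8, 12):
        rate = implied_rate_tb_per_hour(15.36, hours)
        print(f"15.36TB SSD in {hours}h implies ~{rate:.2f}TB/hour")
```

The 24-day result is what "over three weeks" refers to. The SSD quotes imply roughly 1.3 to 1.9TB per hour on an otherwise idle system, a rate that production I/O would reduce.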

Due to concerns about rebuild times, enterprises tend to want to deploy devices of smaller capacities in systems that have any data availability sensitivity. That has in particular impacted disk vendors’ ability to sell 30.72TB devices. In fact, I’ve seen customers deploy 30.72TB devices in systems and then later decide to move to 15.36TB or 7.68TB devices because they weren’t able to utilize enough of the capacity while meeting their performance requirements to make them economically attractive.¹ I’ve also seen vendors bid 30.72TB SSDs to win a deal on cost but then move the customer to smaller capacity SSDs at install for similar reasons.

So with COTS SSDs, a smaller device size allays concerns about rebuild times but leads to a more expensive and potentially less reliable system. Larger device sizes lead to a more energy- and space-efficient system but raise usability concerns. In fact, for performance at scale and capacity utilization reasons, disk vendors may not even be willing to produce the extremely large capacity (75TB+) SSDs that will be required for flash to replace HDDs in enterprise workloads. That’s because even if vendors can make the larger capacity devices, there’s still a question of whether enterprises are going to actually use them in production systems. And if customers aren’t buying them very often, vendors can’t get to the high volume manufacturing necessary to drive prices down. So that makes enterprise customers even less likely to buy them.

That leads to what our brethren across the pond might refer to as “batting on a sticky wicket” for disk vendors and enterprises depending on COTS SSDs.

Suboptimal Flash Media Utilization

What does it mean to design a system to use flash media optimally? It means dispensing with HDD baggage, optimizing system design around a protocol that was built specifically for flash (NVMe), and mapping and managing all the flash media in a system both globally and directly.

Enterprise SSDs employ a small controller in each disk that manages the media in just that device, coordinating the read and write I/O with free space management and dealing with I/O errors. This was basically the way HDDs were built and COTS SSDs are built the same way. In making decisions about media utilization, the disk controller has no visibility of what else is happening in the system. In optimizing media management within a single disk, it ends up managing it suboptimally from a systems point of view. The result? Higher write amplification, which is an issue because of flash endurance concerns, and less efficient garbage collection, which has an impact on performance consistency, particularly as SSDs fill up. To try to offset this, flash is “overprovisioned” (generally by 15%-20%) in the COTS SSD itself to help improve performance and increase endurance. This overprovisioning of course increases cost and takes away from the “usable capacity” that a drive can actually provide (since the overprovisioned capacity takes up space but is only visible to the controller of that disk, not the system).
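To put the overprovisioning figure in perspective, the sketch below estimates how much physical flash sits behind a drive's advertised capacity. It assumes the percentage is measured relative to the host-visible capacity, which is a common convention but is not specified here, and `raw_flash_tb` is a hypothetical helper.

```python
def raw_flash_tb(visible_capacity_tb: float, op_ratio: float) -> float:
    """Physical flash needed for a given host-visible capacity.

    Assumption: op_ratio = (raw - visible) / visible, so raw = visible * (1 + op_ratio).
    """
    if visible_capacity_tb < 0 or op_ratio < 0:
        raise ValueError("inputs must be non-negative")
    return visible_capacity_tb * (1 + op_ratio)


if __name__ == "__main__":
    visible_tb = 15.36
    for op in (0.15, 0.20):
        raw = raw_flash_tb(visible_tb, op)
        hidden = raw - visible_tb
        print(f"{op:.0%} overprovisioning: ~{raw:.2f}TB raw flash, "
              f"~{hidden:.2f}TB visible only to the drive controller")
```

Under that assumption, a 15.36TB drive carries roughly 2.3TB to 3.1TB of flash that the system pays for but cannot address.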

There are still storage systems on the market that are basically just updated versions of systems designed in the 1990s and 2000s for HDDs that are now running SSDs. There are also newer systems, some of which were designed around NVMe, that have less HDD baggage and are more efficient. The “HDD baggage” that is still there with these NVMe systems, though, is that they still use devices built around the original HDD design with an internal controller, DRAM, and media that all have to fit within an HDD form factor. And accessing disk media through internal disk controllers that don’t have a global view of what the system is doing presents challenges when trying to get the most out of the flash media across many metrics: performance consistency, endurance, reliability, energy consumption, density, and capacity utilization.

In SSDs, the internal controller maps and presents the media to the FTL, which then presents it to a storage controller that presents it to servers running various applications. A 15.36TB SSD that operates with a 70% capacity utilization rate presents just under 11TB of usable capacity. That is better than an HDD with a 60% capacity utilization rate that would only present 9.2TB of usable capacity from a device of the same size. But how does this compare to a system using flash storage devices (not COTS SSDs) that have no consumer heritage and were built specifically to drive performance and efficiency in enterprise environments? That is exactly what we have done at Pure Storage with our DirectFlash Modules (DFMs), and we will answer this question in Part 2 of this blog.
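The usable-capacity comparison above can be reproduced with a one-line calculation. This Python sketch uses the 15.36TB capacity point cited earlier in the post, which is the size that yields the 9.2TB HDD figure, and `usable_tb` is a hypothetical helper name.

```python
def usable_tb(raw_capacity_tb: float, utilization: float) -> float:
    """Usable capacity of a device at a given capacity utilization rate."""
    if not 0 <= utilization <= 1:
        raise ValueError("utilization must be between 0 and 1")
    return raw_capacity_tb * utilization


if __name__ == "__main__":
    device_tb = 15.36  # capacity point assumed for both the SSD and the HDD
    print(f"SSD at 70%: {usable_tb(device_tb, 0.70):.2f}TB usable")
    print(f"HDD at 60%: {usable_tb(device_tb, 0.60):.2f}TB usable")
```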

In summary, investing in an enterprise storage system built around COTS SSDs forces customers to accept inefficient infrastructure that negatively impacts them in terms of performance consistency, media endurance, device reliability, energy consumption, storage density, capacity utilization, and ultimately system cost. But since the points of comparison for so many of them are all-HDD systems, COTS SSD-based systems appear attractive.

Preparing for Part 2

We’ve seen the implications of the use of COTS SSDs. For so many enterprise workloads, they are much better than HDDs. But what if a storage vendor could build flash storage devices that were two to five times better than COTS SSDs in performance consistency, endurance, reliability, energy consumption, density, and capacity utilization, and lower in $/GB cost? We’ll take a look at that in Part 2.

¹Paying for the 30% of a 30TB SSD that you can’t use is more expensive than paying for the 30% of a 7.68TB SSD you can’t use, a factor discouraging the purchase of larger capacity COTS SSDs.
