What are the top 10 reasons why flash changes everything? Read on to find out.

(10) Parallelism rules. Disks are essentially serial devices: each drive services one request at a time, reading or writing wherever its head happens to be, so parallelism is achieved by pooling disks together. Algorithms for optimizing disk performance therefore strive to balance contiguity (within disks) and parallelization (across disks). Flash memory affords a much higher degree of parallelism within a single device: the flash equivalent of one disk can contain more than 100 dies that work in parallel. For flash, then, the trick is to maximize parallelism at every level.
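A toy model makes the point concrete. The sketch below assumes logical pages are striped round-robin across dies, every die reads one page per fixed time slot, and nothing else (bus, controller, queue depth) is a bottleneck. The 50 µs page read time and the 128-die count are illustrative numbers, not measurements.

```python
import math

def read_time_us(num_pages: int, num_dies: int, page_read_us: float) -> float:
    """Time to read num_pages striped across num_dies, all dies working concurrently.
    Each die reads one page per round, so the read takes ceil(pages / dies) rounds."""
    if num_pages <= 0:
        return 0.0
    rounds = math.ceil(num_pages / num_dies)
    return rounds * page_read_us

def die_for_page(logical_page: int, num_dies: int) -> int:
    """Round-robin placement: consecutive logical pages land on different dies."""
    return logical_page % num_dies

serial = read_time_us(256, 1, 50.0)      # one die doing all the work: 12800 us
parallel = read_time_us(256, 128, 50.0)  # 128 dies sharing it: 100 us
print(serial, parallel)
print([die_for_page(p, 128) for p in range(4)])  # [0, 1, 2, 3]
```

The same 256-page request finishes 128 times sooner only because the placement keeps every die busy, which is why flash software aims to spread I/O at every level rather than keep it contiguous.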

(9) Don’t optimize for locality. For disk, the goal is to read or write a significant amount of data, typically 16KB to 128KB, once the head reaches the right spot on the platter. The reason is to maximize productive transfer time (time spent reading or writing) relative to wasted seek time (moving the head) and rotational latency (spinning the platter). Since seek times are typically measured in milliseconds and transfer times in microseconds, performance is optimized by contiguous reads and writes (think thicker stripe sizes) and by sophisticated queuing and scheduling to minimize seek and rotational latency. For flash, all these optimizations add unnecessary overhead and complexity, since sequential and random access perform essentially the same.
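The trade-off between transfer time and positioning time can be put into numbers. This sketch assumes a 15K RPM drive with a 4 ms average seek, 2 ms average rotational latency (half of a 4 ms revolution), and a 150 MB/s media transfer rate; real drives vary.

```python
def transfer_efficiency(io_bytes: int, overhead_s: float, bandwidth_bps: float) -> float:
    """Fraction of an I/O's service time spent actually moving data.
    overhead_s is the positioning cost (seek + rotational latency for disk)."""
    transfer_s = io_bytes / bandwidth_bps
    return transfer_s / (overhead_s + transfer_s)

DISK_OVERHEAD_S = 0.004 + 0.002  # assumed 4 ms average seek + 2 ms rotational latency
DISK_BW = 150e6                  # assumed 150 MB/s media transfer rate

for size in (4 * 1024, 16 * 1024, 128 * 1024):
    eff = transfer_efficiency(size, DISK_OVERHEAD_S, DISK_BW)
    print(f"{size // 1024} KB: {eff:.1%} of the time spent transferring data")
```

Under these assumptions a random 4 KB read spends well under 1% of its time transferring data and a 128 KB read only about 13%, which is why disk software works so hard at contiguity. Flash has a per-operation latency too, but it does not depend on where the data sits, so there is no positioning term to amortize.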

(8) No need to manage volume layout and workload isolation. For traditional disk storage, the system software and the storage administrator generally need to keep careful track of how logical volumes are mapped to physical disks. The issue, of course, is that a physical disk can only do one thing at a time, so the way to minimize contention (spikes in latency, drops in throughput) is to isolate workloads across different disks as much as possible. With flash memory, all volumes are fast. After all, do you care which DRAM addresses your application is mapped to?

(7) No costly RAID geometry trade-offs. Of course, flash still requires that additional parity be maintained across devices to ensure data is not lost in the face of hardware failures. In traditional disk RAID, there is an essential trade-off between performance, cost, and reliability. Wide RAID geometries leveraging many spindles and dual parity are the “safest”: they reduce the chance of data loss with low space overhead, but performance suffers because every small write triggers extra parity reads, calculations, and writes. Higher-performance schemes use narrower stripes and more mirroring, but buy that performance with more wasted space. Flash’s performance enables the best of both worlds: ultra-wide striping with multiple parity levels for protection at very low overhead, while maintaining the highest levels of performance, plus dramatically faster rebuild times when a failure does occur.
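The disk-side trade-off can be quantified with two simple formulas. The sketch below computes the share of raw capacity spent on redundancy for a k+p parity stripe and for two-way mirroring, plus the back-end I/O count for a small read-modify-write (read old data and old parity, write new data and new parity). It deliberately ignores full-stripe writes and write caching, and the stripe widths are illustrative.

```python
from fractions import Fraction

def parity_overhead(data_disks: int, parity_disks: int) -> Fraction:
    """Fraction of raw capacity consumed by redundancy in a k+p parity stripe."""
    return Fraction(parity_disks, data_disks + parity_disks)

def small_write_ios(parity_disks: int) -> int:
    """Back-end I/Os for a read-modify-write of one data block:
    read old data + old parity blocks, then write new data + new parity blocks."""
    return 2 * (1 + parity_disks)

MIRROR_OVERHEAD = Fraction(1, 2)  # two-way mirroring stores every block twice
MIRROR_WRITE_IOS = 2              # write both copies; no reads needed

print(parity_overhead(8, 2))   # 1/5: 20% overhead for an 8+2 dual-parity stripe
print(parity_overhead(22, 2))  # 1/12: about 8% for a wide 22+2 stripe
print(MIRROR_OVERHEAD)         # 1/2: 50% overhead for mirroring
print(small_write_ios(2), MIRROR_WRITE_IOS)  # 6 vs 2 back-end I/Os per small write
```

Wide dual parity wins on space, mirroring wins on small-write I/O. On disk each of those extra I/Os costs milliseconds of positioning; on flash they are cheap enough that the wide, low-overhead geometry no longer carries a large performance penalty.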

(6) No update in place. Traditional storage solutions often strive to keep data in the same place: then there is no need to update the map defining the location of that data, and concurrent reads and writes are easier to manage. For flash, such a strategy is the worst possible, because a dataset’s natural hot spots would burn out the associated flash as the data is repeatedly rewritten. Since any flash solution will need to move data around for such wear leveling anyway, why not take advantage of this within the storage software stack to amortize the write workload more broadly across the available flash?
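The difference in wear between the two strategies shows up even in a tiny simulation. The sketch below is a simplified model, not any product’s algorithm: update-in-place maps each logical block to a fixed physical page, while the append-style variant writes every update to the next page of a circular log and repoints a logical-to-physical map. It assumes garbage collection (not modeled) has already freed each page before the log head reaches it again.

```python
from collections import Counter

def update_in_place(writes: list[int]) -> Counter:
    """Each logical block always lives at the same physical page, so rewrites hit the same cells."""
    wear: Counter = Counter()
    for lba in writes:
        wear[lba] += 1  # physical page number == logical block number
    return wear

def append_with_remap(writes: list[int], num_pages: int) -> tuple[Counter, dict[int, int]]:
    """Each write goes to the next page of a circular log and the map is repointed.
    Assumes garbage collection (not modeled) freed the page at the head before reuse."""
    wear: Counter = Counter()
    l2p: dict[int, int] = {}
    head = 0
    for lba in writes:
        wear[head] += 1
        l2p[lba] = head  # the previous copy of lba becomes garbage
        head = (head + 1) % num_pages
    return wear, l2p

# A hot spot: logical block 0 is rewritten 1,000 times, block 1 is written once.
workload = [0] * 1000 + [1]
in_place = update_in_place(workload)
wear, l2p = append_with_remap(workload, num_pages=100)
print(max(in_place.values()))  # 1000 writes concentrated on one page
print(max(wear.values()))      # 11: the same writes spread over 100 pages
print(l2p)                     # {0: 99, 1: 0}
```

The cost of the remapping approach is the map itself and the garbage it leaves behind, which is exactly the machinery a flash system needs for wear leveling in any case.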

(5) Forget about misalignment. Existing disk arrays implement a virtualization scheme whose sector/block geometry is tied to an architecture born of rotating disks. Virtualized disk-based storage pays a substantial performance penalty if the file system, LUNs, and physical disks are not optimally aligned: what could have been single reads or writes turn into multiple I/Os because they cross underlying boundaries, degrading performance by 10-30%. With flash, the additional I/O capacity and the potential for much thinner stripe sizes (the amount of data read or written per disk access) make the administrative effort of avoiding misalignment unnecessary.
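Why misalignment multiplies I/O comes down to one integer calculation: how many underlying blocks a request spans. The sketch assumes a 4 KB underlying block and uses the legacy layout in which a partition starts at sector 63 (a byte offset of 63 × 512) as the misaligned case.

```python
def blocks_touched(offset: int, length: int, block_size: int) -> int:
    """Number of underlying blocks spanned by an I/O of `length` bytes at byte `offset`."""
    first = offset // block_size
    last = (offset + length - 1) // block_size
    return last - first + 1

BLOCK = 4096  # assumed underlying block size

print(blocks_touched(0, 4096, BLOCK))    # aligned 4 KB I/O: 1 block
print(blocks_touched(512, 4096, BLOCK))  # shifted by one 512-byte sector: 2 blocks

# Partition starting at sector 63: every 4 KB I/O straddles a block boundary.
start = 63 * 512
misaligned = sum(blocks_touched(start + i * 4096, 4096, BLOCK) for i in range(100))
print(misaligned)  # 200 block accesses for 100 logical I/Os
```

On disk, each extra block access can mean extra positioning time, which is where the 10-30% penalty comes from; with flash’s spare I/O capacity and thin stripes the occasional extra access matters far less.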

(4) Fine-grained virtualization, and forget the in-memory map. With disk, stripe sizes tend to be large so that the entire virtualization map from logical data to physical location fits in the array controller’s memory. This is simply because you do not want to pay the latency of an additional disk read just to find out where the data you are looking for lives. With flash, metadata reads have extremely low latency (10s to 100s of microseconds), so you can afford very thin stripe sizes, i.e., virtualizing your storage into trillions of chunks, and still guarantee sub-millisecond reads.
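A back-of-the-envelope calculation shows why chunk size and map residency are linked. This sketch assumes a flat map with one 8-byte entry per chunk, 100 TB of logical capacity, roughly 100 µs per flash read and roughly 6 ms per random disk read; all of these are illustrative assumptions, and real systems use more compact or hierarchical metadata.

```python
def map_bytes(capacity_bytes: int, chunk_bytes: int, entry_bytes: int) -> int:
    """Size of a flat logical-to-physical map holding one fixed-size entry per chunk."""
    chunks = -(-capacity_bytes // chunk_bytes)  # ceiling division
    return chunks * entry_bytes

def read_latency_us(metadata_read_us: float, data_read_us: float) -> float:
    """Latency of a read whose map entry is not cached: fetch the entry, then the data."""
    return metadata_read_us + data_read_us

CAPACITY = 100 * 10**12  # assumed 100 TB of logical capacity
ENTRY = 8                # assumed 8-byte map entry

coarse = map_bytes(CAPACITY, 1 << 20, ENTRY)  # 1 MiB chunks: 762,939,456 bytes (~0.76 GB)
fine = map_bytes(CAPACITY, 4096, ENTRY)       # 4 KiB chunks: 195,312,500,000 bytes (~195 GB)
print(coarse, fine)

print(read_latency_us(100, 100))    # flash: 200 us, still sub-millisecond
print(read_latency_us(6000, 6000))  # disk: 12000 us, a second positioning delay per read
```

Shrinking chunks 256-fold grows the map 256-fold, past what a controller can comfortably hold in DRAM; flash makes that acceptable because a metadata lookup that misses memory costs one more fast read rather than another seek.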

(3) Managing garbage collection, the TRIM command, and write amplification. Unlike disk, flash cannot be rewritten in place: data is written in pages, but a page can only be reprogrammed after the entire erase block containing it has been erased. Thus the controller software managing updates to flash must be careful to avoid write amplification, wherein a single update cascades into other writes because both the data and its metadata must be moved and updated. The problem is exacerbated when the flash is unaware that data being moved to free up a block may already have been deleted (this is what the TRIM command reports). The result is that entirely different algorithms are needed to optimize writes to flash than to disk.
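A common simplified model of garbage collection shows how write amplification depends on how much live data a victim block still holds. If a block is reclaimed while a fraction u of its pages are valid, those pages must be copied before the erase and only (1 − u) of the block is freed for new host data, giving a write amplification of 1 / (1 − u). The sketch below assumes steady state and ignores metadata writes; it is not a description of any particular controller.

```python
def gc_write_amplification(valid_fraction: float) -> float:
    """Physical writes per host write when each reclaimed block still has
    `valid_fraction` of its pages live: those pages are copied before the erase,
    and only the remaining (1 - valid_fraction) of the block is freed for host data."""
    if not 0.0 <= valid_fraction < 1.0:
        raise ValueError("valid_fraction must be in [0, 1)")
    return 1.0 / (1.0 - valid_fraction)

# Without TRIM, deleted-but-unreported pages still look live and get copied.
print(gc_write_amplification(0.75))  # 4.0 physical writes per host write
# If TRIM reveals that a third of those "live" pages were already deleted:
print(gc_write_amplification(0.5))   # 2.0
```

Halving the write amplification both speeds up writes and halves the wear caused by garbage collection, which is why accurate knowledge of dead data matters so much to flash-specific algorithms.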

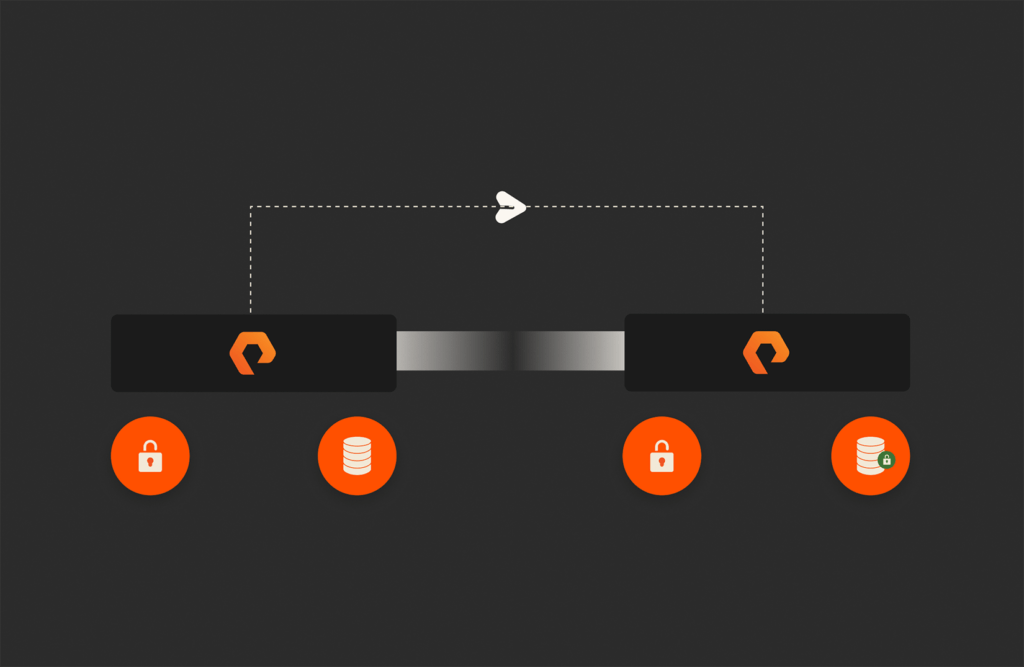

(2) Managing data retention and recovering from failures. Unlike disk, flash exhibits data fade (the charge that stores each bit gradually leaks away), so an optimal storage controller needs to track when data was written and how often it is read, to ensure that it is refreshed (rewritten) periodically, and to do so carefully to avoid write amplification. And unlike disk, flash pays no penalty for non-sequential writes, so restoring parity after losing an SSD can be efficiently amortized across all available flash rather than rebuilding a single hard drive in its entirety after a disk failure.
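The bookkeeping described above can be sketched as a small refresh policy. Everything here is a hypothetical illustration: the 90-day age limit, the 100,000-read limit (standing in for read disturb), and the per-pass refresh budget are made-up parameters, and real controllers tune such thresholds to the flash type, temperature, and wear.

```python
from dataclasses import dataclass

# Hypothetical thresholds: real values depend on the flash type, temperature, and wear.
MAX_AGE_S = 90 * 24 * 3600  # refresh anything written more than ~90 days ago
MAX_READS = 100_000         # refresh after this many reads (read disturb)

@dataclass
class BlockState:
    written_at: float    # time (seconds) when the block was last programmed
    read_count: int = 0  # reads since it was last programmed

def needs_refresh(block: BlockState, now: float) -> bool:
    """Due if its charge has had a long time to fade or it has been read heavily."""
    return (now - block.written_at) > MAX_AGE_S or block.read_count > MAX_READS

def refresh_candidates(blocks: dict[int, BlockState], now: float, budget: int) -> list[int]:
    """Return at most `budget` due blocks, oldest first, so refresh rewrites are
    rate-limited instead of arriving as a burst of extra writes."""
    due = [bid for bid, b in blocks.items() if needs_refresh(b, now)]
    due.sort(key=lambda bid: blocks[bid].written_at)
    return due[:budget]

now = 100 * 24 * 3600.0
blocks = {
    1: BlockState(written_at=0.0),                             # 100 days old
    2: BlockState(written_at=now - 3600, read_count=200_000),  # young but read-heavy
    3: BlockState(written_at=now - 3600),                      # young and quiet
}
print(refresh_candidates(blocks, now, budget=10))  # [1, 2]
print(refresh_candidates(blocks, now, budget=1))   # [1]
```

The budget is the knob that keeps refresh from turning into a write-amplification spike: due blocks wait their turn rather than all being rewritten at once.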

(1) High-performance data reduction. Data reduction techniques like deduplication and compression have been available for disk storage for many years, but so far have failed to gain traction for performance-intensive workloads.

Deduplication effectively replaces a contiguous block of data with a series of pointers to pre-existing segments stored elsewhere. On read, each of those pointers typically represents a random I/O, forcing many disks to seek and rotate to fetch the scattered pieces needed to reconstruct the original data. Disk is hugely inefficient at random I/O (wasting >95% of its time and energy on seeks and rotations rather than data transfers). It is better to keep the data contiguous, so that the disk can stream larger blocks once the head is in the right place.
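The read penalty can be seen by counting positioning operations. In this sketch a deduplicated object is described by a “recipe”, the list of physical segment numbers in logical order; reading the segment physically adjacent to the previous one is assumed to stream without a seek, and any other jump costs one. The segment numbers are invented for illustration.

```python
def count_seeks(recipe: list[int]) -> int:
    """Positioning operations needed to read segments in recipe order, assuming
    segment n+1 streams right after segment n and every other jump needs a seek."""
    if not recipe:
        return 0
    seeks = 1  # initial positioning
    for prev, cur in zip(recipe, recipe[1:]):
        if cur != prev + 1:
            seeks += 1
    return seeks

contiguous = [100, 101, 102, 103, 104, 105, 106, 107]  # written sequentially, no dedupe
deduped = [100, 7, 3051, 102, 88, 89, 4410, 12]        # pointers into existing segments
print(count_seeks(contiguous), count_seeks(deduped))   # 1 7
```

At an assumed 6 ms or so per positioning, the deduplicated read costs on the order of 40 ms against roughly 6 ms for the contiguous one. On flash, segment order has no such cost, so the two recipes read in similar time.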

There is also the challenge of validating duplicates on write. If hashing alone is used to verify duplicate segments, then a hash collision, albeit very low probability, could cause data corruption. For backup and archive datasets, such risk is more acceptable than it is for primary storage. In our view, primary storage should never rely on probabilistic correctness. This is why Pure Storage always compares candidate dedupe segments byte for byte before reducing them, but this too leads to random I/O.
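A minimal dedupe write path with verification might look like the sketch below. It is a toy, not Pure Storage’s implementation: it assumes fixed 4 KB segments, SHA-256 fingerprints, and an in-memory dictionary standing in for the on-media index. The key property is that a hash match only nominates a candidate, and a segment is shared only after a byte-for-byte comparison confirms it, so a collision results in storing the data rather than corrupting it.

```python
import hashlib

class DedupStore:
    """Toy content-addressed segment store with byte-for-byte duplicate verification."""

    def __init__(self) -> None:
        self.segments: list[bytes] = []          # physical segments, addressed by index
        self.index: dict[bytes, list[int]] = {}  # digest -> segment ids with that digest

    def write_segment(self, data: bytes) -> int:
        digest = hashlib.sha256(data).digest()
        for seg_id in self.index.get(digest, []):
            # Verification read: on disk, this comparison is an extra random I/O.
            if self.segments[seg_id] == data:
                return seg_id  # confirmed duplicate: store only a pointer
        # New data, or a hash collision with different bytes: store it.
        self.segments.append(data)
        seg_id = len(self.segments) - 1
        self.index.setdefault(digest, []).append(seg_id)
        return seg_id

    def write(self, data: bytes, seg_size: int = 4096) -> list[int]:
        """Split data into fixed-size segments and return the pointer list (the recipe)."""
        return [self.write_segment(data[i:i + seg_size]) for i in range(0, len(data), seg_size)]

    def read(self, recipe: list[int]) -> bytes:
        """Reassemble data by following each pointer (a random read per segment)."""
        return b"".join(self.segments[s] for s in recipe)

store = DedupStore()
first = b"A" * 8192 + b"B" * 4096
r1 = store.write(first)               # [0, 0, 1]
r2 = store.write(b"B" * 4096 + b"A" * 4096)  # [1, 0]
print(r1, r2, len(store.segments))    # 3 + 2 logical segments, 2 stored
```

Both the verification read and every pointer followed on read are random accesses, which is harmless on flash and expensive on disk.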

Since deduplication depends on random I/O, on disk it inevitably leads to spindle contention, driving down throughput and driving up latency. No wonder dedupe success in disk-centric arrays has been limited to workloads that are not performance-intensive, like backup and archiving.

With flash there is no random-access penalty. In fact, random I/O may be even faster, as it enlists more parallel I/O paths. And because flash writes are more expensive than reads, dedupe can actually accelerate performance and extend flash life by eliminating writes.

Compression presents different challenges. For a disk array that does not use an append-only data layout (the majority of SANs rely on update in place), compression complicates updates: read, decompress, modify, recompress, and now the result may no longer fit back into the original block. Besides accommodating compression, append-only data layouts (in which data is always written to a new place) are generally much friendlier to flash, because they help avoid flash cell burn-out by spreading writes more evenly across all of the flash. (Placing this burden on individual SSD controllers instead shortens the life of those SSDs that hold hot data.)
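The “no longer fits” problem is easy to reproduce with Python’s standard `zlib` module. The sketch assumes compressed data is allocated in 512-byte sectors and that an in-place update may only reuse the sectors originally allocated to the block; the data and sector size are illustrative.

```python
import random
import zlib

SECTOR = 512  # assumed allocation unit for compressed data on the media

def sectors_needed(payload: bytes) -> int:
    """Whole sectors required to hold a compressed payload (ceiling division)."""
    return -(-len(payload) // SECTOR)

# Initial write: a highly compressible 4 KB block shrinks to a single sector.
stored = zlib.compress(b"A" * 4096)
slot_sectors = sectors_needed(stored)

# Update in place: read, decompress, modify, recompress.
data = bytearray(zlib.decompress(stored))
rng = random.Random(0)  # seeded so the example is repeatable
data[:1024] = bytes(rng.getrandbits(8) for _ in range(1024))  # 1 KB of incompressible bytes
recompressed = zlib.compress(bytes(data))
fits_in_place = sectors_needed(recompressed) <= slot_sectors
print(slot_sectors, sectors_needed(recompressed), fits_in_place)

# Append-only alternative: write the new version to fresh space and repoint the map;
# the old entry becomes garbage for later collection.
log: list[bytes] = [stored]
l2p = {0: 0}  # logical block 0 -> log entry 0
log.append(recompressed)
l2p[0] = len(log) - 1
print(zlib.decompress(log[l2p[0]]) == bytes(data))
```

An update-in-place array would now have to find new space and relocate the block anyway; an append-only layout never has that problem, because every write already goes to a new place.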

Finally, flash is both substantially faster and more expensive than disk. For backup, data reduction drove a media change, with disk replacing tape, by making disk cost-effective. Over the next decade the same thing is going to happen in primary storage. Pure Storage is routinely achieving 5-20X data reduction on performance workloads like virtualization and databases (all without compromising sub-millisecond latency). At 5X data reduction, the data center MLC flash we use hits price parity with performance disk (think 15K Fibre Channel or SAS drives). At 10X, which we routinely approach for our customers’ database workloads, we are about half the price of disk; at 15X, a third. And at 20X, which we typically approach for our customers’ virtualization workloads, we are roughly one quarter the cost!
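The price ratios above follow from one assumption implied by the 5X parity point: raw flash costs about five times as much per GB as performance disk, and disk gets no data reduction. Under that assumption, the effective cost of flash per usable GB relative to disk is simply 5 divided by the reduction ratio, as this short sketch computes.

```python
from fractions import Fraction

PARITY_RATIO = 5  # from the text: 5X reduction brings flash to price parity with performance disk

def cost_vs_disk(reduction_ratio: int) -> Fraction:
    """Effective flash cost per usable GB relative to performance disk, assuming raw flash
    costs PARITY_RATIO times as much per GB and disk stores data unreduced."""
    return Fraction(PARITY_RATIO, reduction_ratio)

for r in (5, 10, 15, 20):
    print(f"{r}X -> {cost_vs_disk(r)} of disk cost")  # 1, 1/2, 1/3, 1/4
```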