Whilst we await the official Pure Storage FlashBlade™ OpenStack® drivers to be released I thought it might be interesting to show you how you can use your FlashBlade system in an existing OpenStack environment, leveraging drivers that already exist.

The cool thing is that even though the FlashBlade is a scale-out NAS system, offering NFS connectivity, I’m going to show you how to use the system under Cinder, the block storage project within OpenStack. I’m also going to show you how you can use the same FlashBlade system to hold your Glance images.

This blog is based on a 2-node Devstack deployment of OpenStack Newton: a controller node (running Nova) and a compute-only node. Some of the directory names mentioned are therefore Devstack-specific; where appropriate I will give the directory name for a full OpenStack deployment.

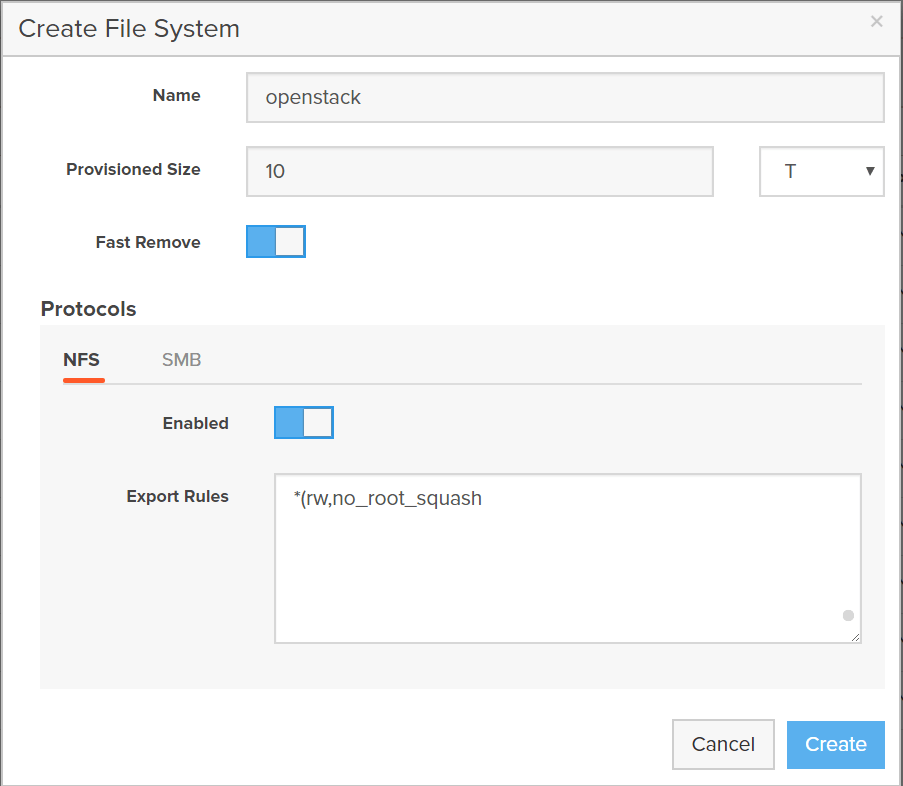

The first thing we are going to do is set up an NFS share on the FlashBlade. From the FlashBlade GUI, select the Storage tab in the left pane and then the + symbol at the top right. This will open the Create File System window, which we are going to complete as below.

Here I’m creating a 10TB file system called openstack and allowing anyone to access the export. I could add a specific IP address, or series of addresses, for the OpenStack controller nodes that are running the cinder-volume service, but for ease of description, I’ll stick with a wildcard.

I could create two separate shares, one for Cinder and one for Glance, but I’m going to only have one share and create a couple of directories in there and use those as mount points.

The first thing I need to do is mount the newly created NFS share (this can be done from any host) and create two subdirectories in it, called cinder and glance, ensuring that both directories have full read/write permissions.
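As a rough sketch of that step, the helper below creates the two subdirectories once the share is mounted. The function name `prepare_share_dirs` and the mount point `/mnt/openstack` are hypothetical, and the address `10.21.97.47` is assumed to be the same FlashBlade data address used in the Cinder stanza later in this post.

```shell
# prepare_share_dirs MOUNTPOINT
# Create the cinder and glance subdirectories under an already-mounted
# share and give them full read/write permissions.
prepare_share_dirs() {
    share_root="$1"
    mkdir -p "$share_root/cinder" "$share_root/glance" || return 1
    # 777 matches the "full read/write" wording above; tighten it if you can.
    chmod 777 "$share_root/cinder" "$share_root/glance"
}

# Example (hypothetical mount point; needs root and an NFS client):
#   sudo mkdir -p /mnt/openstack
#   sudo mount -t nfs -o vers=3 10.21.97.47:/openstack /mnt/openstack
#   prepare_share_dirs /mnt/openstack
#   sudo umount /mnt/openstack
```

The temporary mount is only needed for this one-off setup, so it can be unmounted again afterwards.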

Now we have our NFS share ready we can configure our OpenStack controller nodes.

On the controller node running Glance, mount the glance subdirectory of the share on the Glance image store directory, remembering to add an appropriate entry to your /etc/fstab file so that the mount survives a reboot.
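A minimal sketch of the Glance mount and its fstab entry might look like this. The values are assumptions: the address is taken from the Cinder stanza in this post, `/opt/stack/data/glance/images` is the usual Devstack image directory (a full deployment typically uses `/var/lib/glance/images`), and the `_netdev` option is my own addition. The function names are hypothetical.

```shell
# Assumed values; adjust all three for your environment.
FB_HOST="10.21.97.47"
GLANCE_EXPORT="/openstack/glance"
GLANCE_DIR="/opt/stack/data/glance/images"

# Mount the glance subdirectory of the share on the image store directory.
mount_glance_store() {
    sudo mount -t nfs -o vers=3 "$FB_HOST:$GLANCE_EXPORT" "$GLANCE_DIR"
}

# Print a matching /etc/fstab line so the mount can be made persistent.
glance_fstab_entry() {
    printf '%s:%s %s nfs vers=3,_netdev 0 0\n' \
        "$FB_HOST" "$GLANCE_EXPORT" "$GLANCE_DIR"
}
```

Running `mount_glance_store` and then `glance_fstab_entry | sudo tee -a /etc/fstab` would mount the store and persist it in one go.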

As far as Cinder is concerned we don’t need to do anything as the cinder-volume service will handle the mounting automatically based on the changes we are going to make to the cinder configuration file now.

To allow Cinder to use the FlashBlade as a backend we need to implement the Cinder NFS (Reference) driver, more details of which can be found here. In my Cinder configuration file (/etc/cinder/cinder.conf) I’m going to add a backend stanza for the FlashBlade and ensure that Cinder enables this backend as follows:

    [fb-1]
    volume_driver = cinder.volume.drivers.nfs.NfsDriver
    nfs_mount_options = vers=3
    nas_host = 10.21.97.47
    nas_share_path = /openstack/cinder
    volume_backend_name = fb-1
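The stanza on its own does not enable the backend: Cinder only loads backends named in the `enabled_backends` option, normally set in the `[DEFAULT]` section. As a hedged sanity check, the hypothetical helper below confirms that a configuration file both lists the backend and contains its section.

```shell
# check_fb_backend CONF [BACKEND]
# Return 0 when CONF lists BACKEND in enabled_backends and contains a
# [BACKEND] section; otherwise print what is missing and return 1.
check_fb_backend() {
    cb_conf="$1"
    cb_backend="${2:-fb-1}"
    cb_status=0

    # enabled_backends is a comma-separated list, e.g. "lvm,fb-1".
    if ! grep -Eq "^enabled_backends[[:space:]]*=(.*[,[:space:]])?${cb_backend}([,[:space:]].*)?\$" "$cb_conf"; then
        echo "enabled_backends does not list ${cb_backend}"
        cb_status=1
    fi

    # The backend also needs its own stanza, e.g. [fb-1].
    if ! grep -Fxq "[${cb_backend}]" "$cb_conf"; then
        echo "no [${cb_backend}] section found"
        cb_status=1
    fi
    return "$cb_status"
}
```

For example, `check_fb_backend /etc/cinder/cinder.conf` prints nothing and returns 0 once both pieces are in place.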

I will also create an appropriate volume type that points to the FlashBlade backend to ensure the default setting is supported. After restarting the Cinder services (API, Scheduler and Volume), the FlashBlade mountpoint is created automatically, with no requirement for you to add it to your /etc/fstab file. We can see this by looking at the mounted filesystems on the controller.
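The two steps above could be sketched as follows, using the standard `openstack` client. The function names are hypothetical, and I am assuming the volume type is simply named after the backend (`fb-1`).

```shell
# create_fb_volume_type [TYPE] [BACKEND]
# Create a volume type and tie it to a backend via volume_backend_name.
create_fb_volume_type() {
    vt_name="${1:-fb-1}"
    vt_backend="${2:-fb-1}"
    openstack volume type create "$vt_name" || return 1
    openstack volume type set \
        --property "volume_backend_name=$vt_backend" "$vt_name"
}

# list_share_mounts SHARE [MOUNTS_FILE]
# Print every mount point where SHARE (host:/path) is currently mounted.
list_share_mounts() {
    awk -v share="$1" '$1 == share { print $2 }' "${2:-/proc/mounts}"
}
```

After restarting the Cinder services, `list_share_mounts 10.21.97.47:/openstack/cinder` should print the directory that cinder-volume mounted for the backend.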

In fact, Cinder will ensure that this mountpoint is made available across every Nova compute node in your OpenStack deployment, even without the Cinder services being deployed on those nodes. All that is required is to ensure the nfs-common package has been installed on all your controller and compute nodes.

So now we are done and FlashBlade is integrated into your OpenStack environment providing a Cinder backend and a Glance image store.

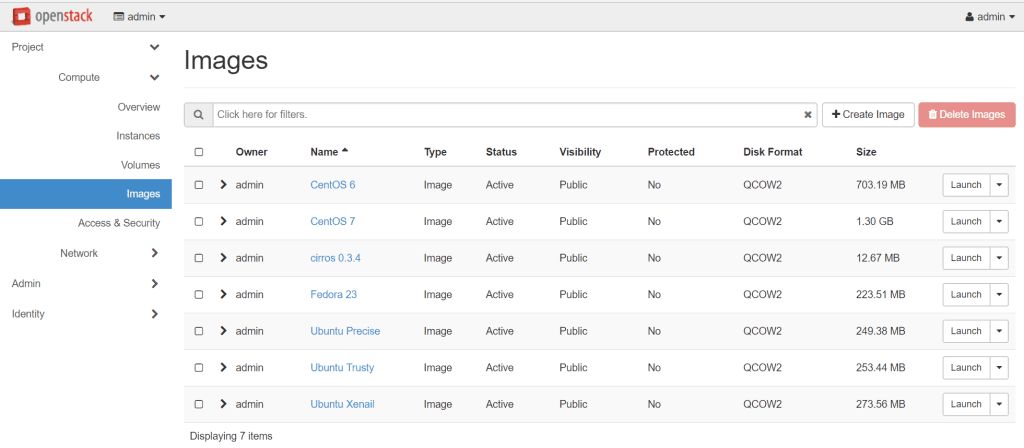

Just so we can see the integrated FlashBlade and OpenStack environment, I have uploaded a number of Glance images using the standard glance image-upload command, as you can see in this screenshot of Horizon:

and on the Glance controller node this is the Glance image store directory that we created on the FlashBlade NFS mountpoint:

Now let’s create a couple of Cinder volumes, one created from an image and the other empty.
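As an illustration, creating the two volumes from the command line could look like the sketch below. The function name, the volume names `empty-vol` and `image-vol`, and the 1 GB size are all hypothetical, and the image name is passed in because it depends on what you have uploaded to Glance.

```shell
# create_demo_volumes IMAGE [TYPE]
# Create one empty volume and one volume populated from a Glance image,
# both on the given volume type.
create_demo_volumes() {
    dv_image="$1"
    dv_type="${2:-fb-1}"

    # Empty 1 GB volume.
    openstack volume create --type "$dv_type" --size 1 empty-vol || return 1

    # 1 GB volume built from an image; the size must be large enough
    # to hold the image.
    openstack volume create --type "$dv_type" --size 1 \
        --image "$dv_image" image-vol
}
```

Calling `create_demo_volumes <image-name>` then leaves both volumes visible in `openstack volume list`.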

We can see that these two volumes have been created as files on the NFS share, which Cinder and any Nova instances that use them will see as block devices.

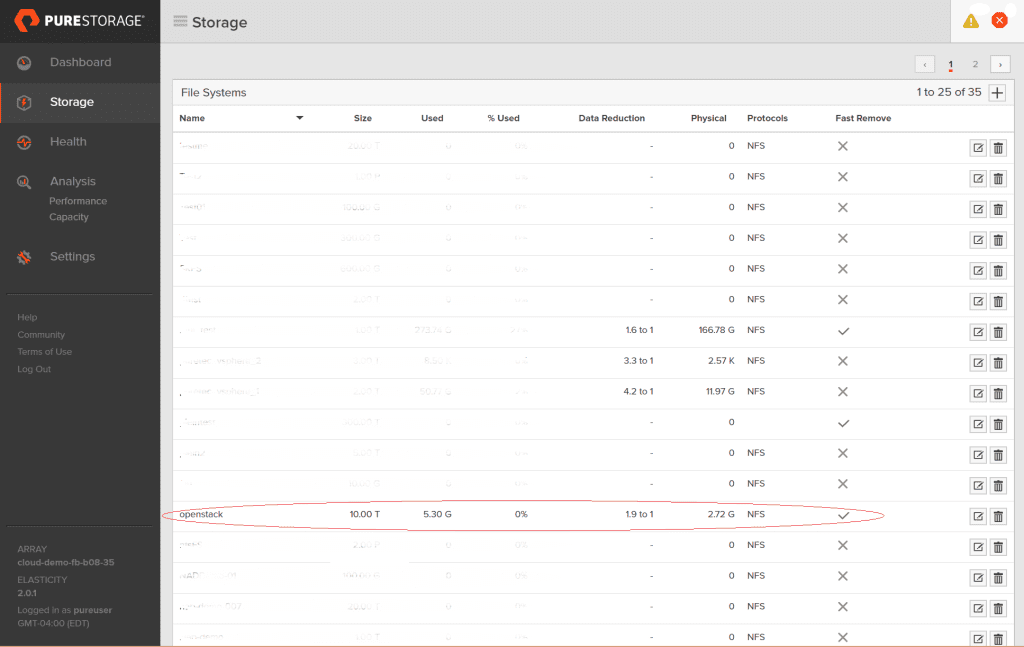

All we need to do now is look at what the FlashBlade GUI is showing for the openstack share, which now holds these Glance images and Cinder volumes.

We can see that the share is giving a compression ratio of around 1.9:1 for the small amount of data we have in that share.