Direct-attached storage (DAS) has been used in personal computers and servers for decades. Innovations in data-transfer protocols and data-protection methods such as RAID have extended the useful life of DAS in servers, but those extensions are now reaching their limits. DAS is beginning to show its age.

As you scale your workload, the limits of DAS become more apparent: IOPS drop and latency rises. SSDs have helped, but are they good enough at handling these issues? Installing another RAID controller or adding more disks only masks the problem. Adding another server is not always possible, and administering hundreds of servers is difficult. So how do you meet these challenges with an elegant, manageable solution? The answer: shared storage from Pure Storage® provides a stable, predictable, scalable, and reliable place to store your data.

But what about performance? DAS has typically outperformed storage arrays, but that has changed as all-flash storage has evolved, and we have data to prove it. Pure has run many tests with MySQL 8 comparing the performance of DAS and Pure FlashArray™//X90 R3.

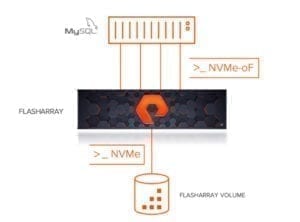

Not surprisingly, the FlashArray//X90 outperformed DAS in the majority of our test cases. Before exploring the results, let's take a look at the specifics of the test bed (Figure 1).

Figure 1

FlashArray vs. DAS Test Bed

The test environment included the following:

Single server with:

- Two Intel® Xeon® Platinum 8160 CPUs @ 2.10GHz, each with 24 cores, hyperthreading enabled

- 512GB of memory

- 12G Modular RAID controller with 2GB cache

- 2 x 800GB SAS SSDs – RAID 0

- Mellanox ConnectX-4 Lx dual-port NIC for NVMe-oF RoCE

Software:

- CentOS 7.7

- Sysbench 1.0.19

- MySQL 8.0.19 Community Edition with InnoDB engine

Shared Storage:

- FlashArray//X90 R3 with four 50Gb/s NVMe-oF RoCE ports (two per controller)

- Purity 5.3.2

- Four paths to the volume

FlashArray vs. DAS Test Setup

Storage

For both DAS and FlashArray, we built the database on a single volume formatted with XFS. For DAS, the volume consisted of two SAS SSDs configured as RAID 0 (striping). RAID 0's excellent performance makes it a popular choice for testing; however, its lack of fault tolerance makes it unsuitable for production databases. Fortunately, storage administrators don't need to choose between availability and performance when creating volumes on FlashArray: data is always protected with RAID-HA.
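To make the RAID 0 trade-off concrete, here is a minimal Python sketch of how a two-disk stripe maps logical offsets to member disks. The 64 KiB stripe unit is an assumption for illustration (the controller's actual setting isn't stated here), and the function names are hypothetical.

```python
from __future__ import annotations

STRIPE_UNIT = 64 * 1024  # bytes per stripe unit (assumed; the real value is set on the RAID controller)
NUM_DISKS = 2            # two SAS SSDs, as in the DAS test bed


def locate(logical_offset: int, stripe_unit: int = STRIPE_UNIT,
           num_disks: int = NUM_DISKS) -> tuple[int, int]:
    """Map a byte offset on the RAID 0 volume to (member disk, byte offset on that disk)."""
    if logical_offset < 0:
        raise ValueError("offset must be non-negative")
    unit_index, within_unit = divmod(logical_offset, stripe_unit)
    disk = unit_index % num_disks  # stripe units rotate round-robin across members
    # Each member stores every num_disks-th stripe unit back to back.
    disk_offset = (unit_index // num_disks) * stripe_unit + within_unit
    return disk, disk_offset


def disks_touched(offset: int, length: int, stripe_unit: int = STRIPE_UNIT,
                  num_disks: int = NUM_DISKS) -> set[int]:
    """Return the member disks that an I/O of `length` bytes at `offset` hits."""
    if length <= 0:
        raise ValueError("length must be positive")
    first = offset // stripe_unit
    last = (offset + length - 1) // stripe_unit
    return {unit % num_disks for unit in range(first, last + 1)}


def usable_capacity(disk_bytes: int, num_disks: int = NUM_DISKS) -> int:
    """RAID 0 keeps no parity or mirror copy, so capacity is the sum of the members.

    The flip side: data is spread across every member, so the failure of any
    one disk leaves the volume unusable.
    """
    return disk_bytes * num_disks
```

A 128 KiB request starting at offset 0 spans both SSDs, which is where RAID 0's throughput gain comes from; the same layout is why it offers no fault tolerance.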

Workload

To generate the workload, we installed sysbench 1.0.19 on the MySQL host. Sysbench is a freely available, scriptable performance benchmarking tool with a built-in MySQL driver and many database workloads available. For this set of tests, we used a TPC-C-like set of scripts from Percona.

Database (MySQL)

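We configured the database server with a 32GB InnoDB buffer pool. Limiting the size of the buffer pool means less of the working set can be cached in memory, so more reads and writes go to storage, which increases the I/O pressure.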
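One way to confirm that a smaller buffer pool is pushing reads to storage is to compare two InnoDB status counters from SHOW GLOBAL STATUS: Innodb_buffer_pool_reads (logical reads the buffer pool could not satisfy, so InnoDB read from disk) and Innodb_buffer_pool_read_requests (all logical reads). The sketch below is a hypothetical helper that works on snapshots taken before and after a run; collecting them (for example, with the mysql client in batch mode) is assumed rather than shown.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BufferPoolCounters:
    """Two cumulative counters from SHOW GLOBAL STATUS."""
    read_requests: int  # Innodb_buffer_pool_read_requests: all logical reads
    disk_reads: int     # Innodb_buffer_pool_reads: reads the buffer pool could not satisfy


def parse_status(text: str) -> BufferPoolCounters:
    """Parse tab-separated `name<TAB>value` rows, as printed by e.g.

    mysql -N -B -e "SHOW GLOBAL STATUS LIKE 'Innodb_buffer_pool_read%'"
    """
    values: dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split("\t")
        if len(parts) == 2 and parts[1].strip().isdigit():
            values[parts[0].strip()] = int(parts[1])
    return BufferPoolCounters(
        read_requests=values["Innodb_buffer_pool_read_requests"],
        disk_reads=values["Innodb_buffer_pool_reads"],
    )


def miss_ratio(before: BufferPoolCounters, after: BufferPoolCounters) -> float:
    """Fraction of logical reads during the interval that had to go to storage."""
    requests = after.read_requests - before.read_requests
    if requests <= 0:
        raise ValueError("no buffer pool read requests between the two snapshots")
    return (after.disk_reads - before.disk_reads) / requests
```

Because both counters are cumulative since server start, the ratio is computed over the difference between snapshots, so it reflects only the benchmark interval.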

Test Execution

Running the benchmark consists of the following steps:

- Create the database. From the mysql prompt, run:

  ```sql
  create database <database_name>;
  ```

- Prepare the tables and data. From the shell, run:

  ```shell
  sysbench <path_to_tpcc.lua>/tpcc.lua --threads=<threads> --tables=10 --scale=100 --db-driver=mysql --mysql-db=<database_name> --mysql-user=<mysql_user> --mysql-password=<password> prepare
  ```

  For example:

  ```shell
  /usr/bin/sysbench /usr/share/sysbench/tpcc.lua --threads=48 --tables=10 --scale=100 --db-driver=mysql --mysql-db=sysbench10x100W --mysql-user=admin --mysql-password='password' prepare
  ```

- Execute the benchmark. From the shell, run:

  ```shell
  sysbench <path_to_tpcc.lua>/tpcc.lua --threads=<threads> --time=<run_time[s]> --tables=<number_of_tables> --scale=<number_of_warehouses> --db-driver=mysql --mysql-db=<database_name> --mysql-user=<mysql_user> --mysql-password=<password> run > <output_file>
  ```

  For example:

  ```shell
  /usr/bin/sysbench /usr/share/sysbench/tpcc.lua --threads=48 --time=300 --tables=10 --scale=100 --db-driver=mysql --mysql-db=sysbench10x100W --mysql-user=admin --mysql-password='password' run > ~/results/5min/r3/32GBP10x100WFA48.out
  ```
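Because the prepare and run steps differ only in a few options, they are easy to script. The following is a minimal Python sketch, assuming the sysbench and tpcc.lua paths from the examples above; sysbench_cmd and execute are hypothetical helper names, and dry_run=True returns the command without running it.

```python
from __future__ import annotations

import shlex
import subprocess
from pathlib import Path

# Paths taken from the examples above; adjust for your host.
SYSBENCH = "/usr/bin/sysbench"
TPCC_LUA = "/usr/share/sysbench/tpcc.lua"


def sysbench_cmd(action: str, threads: int, db: str, user: str, password: str,
                 tables: int = 10, scale: int = 100,
                 time_s: int | None = None) -> list[str]:
    """Build the argv for a sysbench TPC-C-like `prepare` or `run` step."""
    if action not in ("prepare", "run"):
        raise ValueError(f"unsupported action: {action}")
    cmd = [SYSBENCH, TPCC_LUA, f"--threads={threads}"]
    if time_s is not None:
        cmd.append(f"--time={time_s}")  # run duration in seconds; used for `run`
    cmd += [
        f"--tables={tables}",
        f"--scale={scale}",
        "--db-driver=mysql",
        f"--mysql-db={db}",
        f"--mysql-user={user}",
        # Passed as one argv element, so no shell quoting is needed.
        f"--mysql-password={password}",
        action,
    ]
    return cmd


def execute(cmd: list[str], output_file: Path | None = None,
            dry_run: bool = False) -> list[str]:
    """Run the command, optionally sending stdout to a file (like `> <output_file>`)."""
    if not dry_run:
        if output_file is None:
            subprocess.run(cmd, check=True)
        else:
            output_file.parent.mkdir(parents=True, exist_ok=True)
            with output_file.open("w") as out:
                subprocess.run(cmd, stdout=out, check=True)
    return cmd


if __name__ == "__main__":
    # Print the run command from the example above instead of executing it.
    cmd = sysbench_cmd("run", 48, "sysbench10x100W", "admin", "password", time_s=300)
    out = Path.home() / "results/5min/r3/32GBP10x100WFA48.out"
    print(shlex.join(execute(cmd, out, dry_run=True)))
```

Passing the arguments as a list rather than a shell string avoids quoting issues with the password and other values.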

Our test database consisted of 10 table sets with 100 warehouses each. The test set included eight runs, at 8, 16, 24, 32, 48, 64, 96, and 128 threads (users), to better estimate the impact of configuration changes. Each sysbench test ran for five minutes on the MySQL server, with a 30-second pause between tests.
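Eight 300-second runs separated by 30-second pauses take 8 × 300 + 7 × 30 = 2,610 seconds (43.5 minutes) per storage configuration, not counting the prepare step. A minimal Python sketch of that sweep follows; the output-file naming is a hypothetical scheme modeled on the example file name above, and base_cmd is assumed to hold every option except --threads, --time, and the command.

```python
from __future__ import annotations

import subprocess
import time
from pathlib import Path
from typing import Callable

THREAD_COUNTS = [8, 16, 24, 32, 48, 64, 96, 128]
RUN_SECONDS = 300   # five minutes per run
PAUSE_SECONDS = 30  # pause between runs


def total_duration(n_runs: int = len(THREAD_COUNTS), run_s: int = RUN_SECONDS,
                   pause_s: int = PAUSE_SECONDS) -> int:
    """Wall-clock seconds for the sweep, with no pause after the final run."""
    return n_runs * run_s + max(n_runs - 1, 0) * pause_s


def sweep(base_cmd: list[str], results_dir: Path, label: str,
          dry_run: bool = True,
          sleep: Callable[[float], None] = time.sleep) -> list[tuple[list[str], Path]]:
    """Run (or, with dry_run, only plan) one sysbench `run` per thread count.

    base_cmd holds everything except --threads, --time and the command, e.g.
    [sysbench, tpcc.lua, --tables=10, --scale=100, --db-driver=mysql, ...].
    """
    plan = []
    for i, threads in enumerate(THREAD_COUNTS):
        cmd = [*base_cmd, f"--threads={threads}", f"--time={RUN_SECONDS}", "run"]
        # Hypothetical naming modeled on the example file 32GBP10x100WFA48.out
        out = results_dir / f"32GBP10x100W{label}{threads}.out"
        plan.append((cmd, out))
        if dry_run:
            continue
        out.parent.mkdir(parents=True, exist_ok=True)
        with out.open("w") as fh:
            subprocess.run(cmd, stdout=fh, check=True)
        if i < len(THREAD_COUNTS) - 1:
            sleep(PAUSE_SECONDS)
    return plan
```

Running the same sweep with one label for the FlashArray volume and another for DAS keeps the two result sets side by side for comparison.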

We repeated the steps for the DAS configuration but modified the MySQL datadir parameter to reflect the DAS database volume mount point.
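The option file in Appendix A keeps the DAS path commented out next to the FlashArray path, so switching configurations amounts to toggling which datadir line is active. A minimal sketch of that toggle as a text edit, assuming the /r3data/mysql and /das/mysql paths from Appendix A and that datadir appears only in the [mysqld] group; select_datadir is a hypothetical name. Because datadir cannot be changed at runtime, MySQL has to be restarted afterward.

```python
from __future__ import annotations

import re

FA_DATADIR = "/r3data/mysql"   # from Appendix A
DAS_DATADIR = "/das/mysql"     # from Appendix A (commented out there)

# Matches "datadir=/path" with or without a leading "#" comment marker.
_DATADIR_LINE = re.compile(r"^\s*(#\s*)?datadir\s*=\s*(\S+)\s*$")


def select_datadir(cnf_text: str, active: str) -> str:
    """Uncomment the datadir line for `active` and comment out every other one."""
    out = []
    found = False
    for line in cnf_text.splitlines():
        m = _DATADIR_LINE.match(line)
        if m is None:
            out.append(line)
            continue
        path = m.group(2)
        if path == active:
            out.append(f"datadir={path}")
            found = True
        else:
            out.append(f"# datadir={path}")
    if not found:
        raise ValueError(f"no datadir line for {active!r}")
    return "\n".join(out) + "\n"
```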

Appendix A shows the MySQL option file used for the tests.

Results

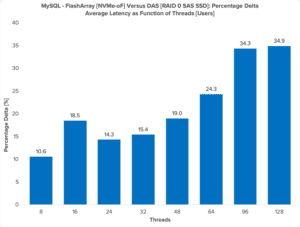

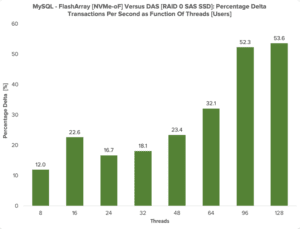

It's quite simple: FlashArray//X outperforms DAS! As shown in Figures 2 and 3, MySQL with its database volume on Pure's latest-generation FlashArray//X R3 outperformed a performance-optimized DAS configuration running a TPC-C-like workload. The performance gap became even more apparent as the number of threads, representing active database users, increased. In fact, we achieved more than 50% more transactions per second with up to 34% lower latency.

Figure 2: Performance difference in percentages between FlashArray//X R3 and DAS on the same host.
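Percentages like those in Figure 2 are relative differences between two runs at the same thread count. A minimal sketch of deriving them from sysbench's text output, assuming the "transactions: N (X per sec.)" line and the "avg:" line under "Latency (ms):" that sysbench 1.0 prints in its end-of-run summary; the helper names are hypothetical.

```python
from __future__ import annotations

import re

# Summary lines printed by sysbench 1.0 at the end of a run, in this shape:
#     transactions:                        <count> (<rate> per sec.)
#          avg:                                   <milliseconds>
_TPS = re.compile(r"transactions:\s+\d+\s+\(([\d.]+) per sec\.\)")
_AVG_LATENCY = re.compile(r"^\s*avg:\s+([\d.]+)", re.MULTILINE)


def parse_summary(text: str) -> dict[str, float]:
    """Extract transactions/sec and average latency (ms) from sysbench output."""
    tps = _TPS.search(text)
    avg = _AVG_LATENCY.search(text)
    if tps is None or avg is None:
        raise ValueError("not a sysbench summary")
    return {"tps": float(tps.group(1)), "avg_latency_ms": float(avg.group(1))}


def compare(flasharray: dict[str, float], das: dict[str, float]) -> dict[str, float]:
    """Percent differences relative to DAS (positive means FlashArray is better)."""
    tps_gain = (flasharray["tps"] - das["tps"]) / das["tps"] * 100.0
    latency_cut = ((das["avg_latency_ms"] - flasharray["avg_latency_ms"])
                   / das["avg_latency_ms"] * 100.0)
    return {"tps_gain_pct": tps_gain, "latency_reduction_pct": latency_cut}
```

Note that the two metrics use the same baseline (DAS) but opposite directions: higher is better for transactions per second, lower is better for latency.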

MySQL on FlashArray//X with end-to-end NVMe performs better than on standard SAS SSD DAS storage. Add the rich set of data services that Purity//FA delivers, including proven high availability, effortless business continuity and disaster recovery options, and industry-leading data reduction, and choosing to deploy production MySQL on FlashArray//X is a no-brainer. Consider moving your MySQL databases from DAS to Pure to discover all these benefits.

More About FlashArray//X R3

- Product page: Learn more about how FlashArray//X can help simplify your work life.

- Blog post: Improving SAP HANA Performance—Again

- Blog Post: Pure FlashArray Outperforms DAS!

- Blog Post: Comparing Customer Experiences with FlashArray//X Competitors

- Blog Post: Accelerate Oracle Database with the Next-Gen FlashArray//X


Appendix A

```ini
# For advice on how to change settings please see
# https://dev.mysql.com/doc/refman/8.0/en/server-configuration-defaults.html

[mysqld]
default-authentication-plugin=mysql_native_password
datadir=/r3data/mysql
# datadir=/das/mysql
socket=/var/lib/mysql/mysql.sock
bind-address=0.0.0.0
log-error=/var/log/mysqld.log
pid-file=/var/run/mysqld/mysqld.pid

# general
max_connections=4000
table_open_cache=8000
table_open_cache_instances=16
back_log=1500
default_password_lifetime=0
ssl=0
performance_schema=OFF
# binlog_row_image=minimal
# max_prepared_stmt_count=128000
max_prepared_stmt_count=512000
skip_log_bin=1
character_set_server=latin1
collation_server=latin1_swedish_ci
skip-character-set-client-handshake
transaction_isolation=REPEATABLE-READ
# innodb_page_size=32768

# files
innodb_file_per_table
# 32 x 1024M = 32GB of redo log in total
innodb_log_file_size=1024M
innodb_log_files_in_group=32
innodb_open_files=4000
innodb_log_compressed_pages=off

# buffers
# deliberately limited to 32GB to increase I/O pressure
innodb_buffer_pool_size=32G
innodb_buffer_pool_instances=16
innodb_log_buffer_size=32M

# tune
# doublewrite buffer disabled (no protection against torn page writes)
innodb_doublewrite=0
innodb_thread_concurrency=0
# redo log written and flushed at every commit
innodb_flush_log_at_trx_commit=1
innodb_max_dirty_pages_pct=90
innodb_max_dirty_pages_pct_lwm=10
join_buffer_size=32K
sort_buffer_size=32K
innodb_use_native_aio=1
innodb_stats_persistent=1
innodb_spin_wait_delay=6
innodb_max_purge_lag_delay=300000
innodb_max_purge_lag=0
# O_DIRECT for data files, without fsync() after each write
innodb_flush_method=O_DIRECT_NO_FSYNC
# page checksums are not computed
innodb_checksum_algorithm=none
innodb_io_capacity=10000
innodb_io_capacity_max=30000
innodb_lru_scan_depth=9000
innodb_change_buffering=none
innodb_read_only=0
innodb_page_cleaners=4
innodb_undo_log_truncate=off

# perf special
innodb_adaptive_flushing=1
innodb_flush_neighbors=0
innodb_read_io_threads=16
innodb_write_io_threads=16
innodb_purge_threads=4
innodb_adaptive_hash_index=0

# monitoring
innodb_monitor_enable='%'
```
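To sanity-check the option file against the text (for example, that the buffer pool really is 32GB), it can be read with Python's configparser, provided interpolation is disabled (otherwise the % in innodb_monitor_enable would be treated as an interpolation marker) and allow_no_value is enabled for bare flags such as innodb_file_per_table. This is a minimal sketch with hypothetical helper names; configparser is not MySQL's own option-file parser and does not understand MySQL-specific features such as !include directives.

```python
from __future__ import annotations

import configparser

# MySQL size suffixes are powers of 1024.
_UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def parse_size(value: str) -> int:
    """Convert a MySQL size such as '32G' or '1024M' to bytes."""
    v = value.strip().strip("'\"")
    if v and v[-1].upper() in _UNITS:
        return int(v[:-1]) * _UNITS[v[-1].upper()]
    return int(v)


def load_mysqld_options(text: str) -> dict[str, str | None]:
    """Read the [mysqld] group of an option file into a dict.

    interpolation=None keeps values like '%' literal; allow_no_value accepts
    bare flags such as innodb_file_per_table (their value is None).
    """
    parser = configparser.ConfigParser(allow_no_value=True, interpolation=None)
    parser.read_string(text)
    return dict(parser["mysqld"])


def redo_log_bytes(opts: dict[str, str | None]) -> int:
    """Total redo log size: innodb_log_file_size x innodb_log_files_in_group."""
    return parse_size(opts["innodb_log_file_size"]) * int(opts["innodb_log_files_in_group"])
```

Applied to the settings above, innodb_buffer_pool_size=32G comes to 34,359,738,368 bytes, and the redo log totals 32 × 1024M, also 32GB.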
