For healthcare providers, applications that keep pace with the velocity of care are a must. Storage latency is critical to the performance and efficiency of mission-critical healthcare applications. With thousands of patients and healthcare providers simultaneously accessing and updating EMR information 24/7, whether inside the hospital campus or across the community, database response times determine how quickly data arrives in surgery or customer support. Few industries outside the financial sector are likely to be as sensitive to storage latency as healthcare.

We’re confident that no storage array delivers lower latency at a lower overall cost and with higher reliability and efficiency than the Pure Storage FlashArray//M. Yet the recently introduced Pure Storage FlashArray//X is taking the response times and availability of flash even further.

Epic is one of the main electronic health record (EHR) systems in use at U.S. healthcare organizations today. Epic’s production OLTP database server (also known as the Operational DataBase, or ODB) has well-defined storage response requirements for both reads and writes. These requirements exist to keep response times low for interactive user workflows, so that the health system can meet the needs of providers and patients.

The underlying InterSystems Caché database on which the Epic application runs has a write cycle of 80 seconds. To keep things humming, the flush of writes from the database must complete within 45 seconds, well before the next write cycle begins, even under the heaviest of workloads. And to maintain great response times and user experience, the high volume of ongoing random application reads must be reliably fast. The existing FlashArray//M, when configured properly, meets and exceeds Epic’s requirements, but we wanted to see how the FlashArray//X compared to the //M.

//M vs //X: Performance test with Epic’s IO Simulator

For this test we compared a FlashArray//M70 R2 and a FlashArray//X70, both with 10 Flash Modules of the same capacity (2 TB). For the //M70 this means 20 SSDs were used (there are 2 SSDs inside each Flash Module); for the //X70 it translates to 10 DirectFlash modules. The server we used for both tests was a Cisco UCS B200 M4 blade running Red Hat Enterprise Linux (RHEL) 7.2 on VMware ESXi 6.5.

Epic uses its “Epic IO Simulator” tool (aka GenerateIO) to validate the performance of a new storage configuration at the customer site, and we are using it here to evaluate the performance of the storage array. Pure Storage FlashArray//M shows exceptional results with Epic’s IO Simulator, meeting the service-level agreement (SLA) requirements for write I/Os and exceeding the read latency expectations:

- For Write IO: Longest write cycle must complete within 45 seconds

- For Read IO: Random Read latencies (aka RandRead) must average below 2 ms
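The two thresholds above can be expressed as a simple pass/fail check. The sketch below is only an illustration: the `SimulatorRun` structure and its field names are hypothetical and do not reflect the actual output format of Epic’s IO Simulator, and the sample values are made up.

```python
from dataclasses import dataclass

# Thresholds quoted in the list above.
MAX_WRITE_CYCLE_SECONDS = 45.0   # longest write cycle must complete within this
MAX_AVG_RANDREAD_MS = 2.0        # average random read latency must stay below this


@dataclass
class SimulatorRun:
    """Hypothetical summary of one simulator run (not the tool's real output)."""
    longest_write_cycle_s: float
    avg_randread_latency_ms: float


def meets_sla(run: SimulatorRun) -> bool:
    """Return True only if both the write and the read requirement hold."""
    write_ok = run.longest_write_cycle_s <= MAX_WRITE_CYCLE_SECONDS
    read_ok = run.avg_randread_latency_ms < MAX_AVG_RANDREAD_MS
    return write_ok and read_ok


# Illustrative values only, not measurements from either array.
print(meets_sla(SimulatorRun(longest_write_cycle_s=30.0, avg_randread_latency_ms=0.5)))
print(meets_sla(SimulatorRun(longest_write_cycle_s=46.0, avg_randread_latency_ms=0.5)))
```

A run passes only when both conditions hold, which mirrors how the test stops ramping load as soon as either limit is reached.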

The “Total IOPS” number is the number of Read and Write IOs (8K IO at a 75/25 Read/Write ratio) generated by the tool before it reached either the read or the write SLA limit. However, we found that the new FlashArray//X offers a significant improvement, even when compared to the already exceptional performance of the FlashArray//M.

These results demonstrate that the //X70 reached Epic’s SLA limit for write cycles (45 s) much later than the //M70R2 at 175,000 Total IOPS. The read latency with the //X was 2.9x lower than on the //M70R2. This demonstrates the improvement in performance density, meaning that the //X70 accomplishes more with the same amount of flash as the //M70R2.
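To put the 175,000 Total IOPS figure in context, the short sketch below splits a Total IOPS number into reads and writes using the 75/25 mix, and converts it to bandwidth. It assumes that “8K” means 8 KiB (8,192 bytes) per IO; the function names are ours, not part of Epic’s tooling.

```python
from typing import Tuple

IO_SIZE_BYTES = 8 * 1024   # assumption: "8K" IO means 8 KiB
READ_FRACTION = 0.75       # 75/25 Read/Write ratio from the test description


def split_iops(total_iops: int, read_fraction: float = READ_FRACTION) -> Tuple[int, int]:
    """Split a Total IOPS figure into (read IOPS, write IOPS)."""
    reads = round(total_iops * read_fraction)
    return reads, total_iops - reads


def bandwidth_gb_per_s(total_iops: int, io_size_bytes: int = IO_SIZE_BYTES) -> float:
    """Aggregate bandwidth in decimal GB/s for a given IOPS rate and IO size."""
    return total_iops * io_size_bytes / 1e9


reads, writes = split_iops(175_000)
print(f"reads: {reads:,} IOPS, writes: {writes:,} IOPS")
print(f"aggregate bandwidth: {bandwidth_gb_per_s(175_000):.2f} GB/s")
```

Under these assumptions, 175,000 Total IOPS corresponds to 131,250 read IOPS and 43,750 write IOPS, or roughly 1.43 GB/s of combined traffic.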

//M vs //X: Performance test: Data generation

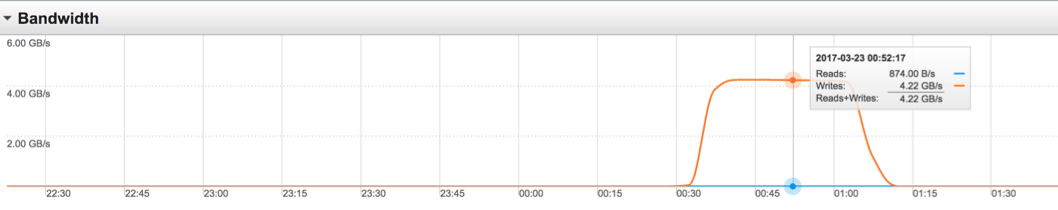

Dgen.pl is a Perl data-generator script for Epic’s IO Simulator, and a test with this tool demonstrated the improvements that the //X offers for writes against the database. The goal of Dgen.pl is to generate data with the desired compressibility. In this case we simulated the 3:1 data reduction for the ODB that is common at existing Epic customer sites, and measured the time it takes to write the same amount of data to the two arrays. Figure 2 describes the //M testing results, while Figure 3 covers the //X.
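For readers unfamiliar with the idea of generating data at a target compressibility, here is a minimal Python sketch of one common approach: fill part of each block with random bytes (which barely compress) and the rest with zeros (which compress almost completely). This is not how Dgen.pl itself is implemented, which we do not show here; the function names are hypothetical, and zlib is used only as a stand-in for measuring compressibility, since an array’s data reduction works differently.

```python
import random
import zlib


def make_block(block_size: int, reduction_ratio: float, rng: random.Random) -> bytes:
    """Build one block that should compress by roughly `reduction_ratio`.

    The first block_size / reduction_ratio bytes are random (nearly
    incompressible); the remainder is zero-filled (almost free to compress).
    """
    random_len = int(block_size / reduction_ratio)
    # random.Random.randbytes requires Python 3.9 or later.
    return rng.randbytes(random_len) + bytes(block_size - random_len)


def measured_ratio(data: bytes) -> float:
    """Ratio of original size to zlib-compressed size."""
    return len(data) / len(zlib.compress(data))


rng = random.Random(42)  # fixed seed so the sample is reproducible
sample = b"".join(make_block(8192, 3.0, rng) for _ in range(128))
print(f"target 3:1, measured about {measured_ratio(sample):.2f}:1")
```

The measured ratio lands close to, but not exactly at, the target because the compressor adds a small amount of overhead to the random portion of each block.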

Write test //M70R2 with dgen.pl:

Test Started 0:33:12 and finished at 2:03:12 = 90 minutes

Running at 1.5 GB/s Write Average

Write test //X70 with dgen.pl:

Test Started 0:33:12 and finished at 1:12:16 = 39 minutes

Running at 4.2 GB/s Write Average

For writes, the benefits of the new FlashArray//X with NVMe and DirectFlash are even more apparent. In this test, the load time, which is a function of latency, is 2.3x shorter on the //X70 than on the //M70 (39 minutes versus 90 minutes). But the new array really shines on throughput, where NVMe technology gives it an even bigger advantage: it sustained an average of 4.2 GB/s of writes, a 2.8x improvement over the 1.5 GB/s of the //M70.
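The 2.3x and 2.8x figures can be reproduced from the start and finish times and the average write rates reported above. The sketch below simply redoes that arithmetic; the helper name is ours.

```python
from datetime import datetime


def elapsed_minutes(start: str, end: str) -> float:
    """Minutes between two H:MM:SS timestamps on the same day."""
    fmt = "%H:%M:%S"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return delta.total_seconds() / 60


# Timestamps and write averages as reported for the two dgen.pl runs.
m70_minutes = elapsed_minutes("0:33:12", "2:03:12")
x70_minutes = elapsed_minutes("0:33:12", "1:12:16")
m70_gb_per_s, x70_gb_per_s = 1.5, 4.2

print(f"//M70R2 load time: {m70_minutes:.0f} min")
print(f"//X70 load time:   {x70_minutes:.0f} min")
print(f"load time ratio:   {m70_minutes / x70_minutes:.1f}x")
print(f"throughput ratio:  {x70_gb_per_s / m70_gb_per_s:.1f}x")
```

The load time ratio comes out at about 2.3x and the throughput ratio at 2.8x, matching the figures quoted in the text.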

Conclusion

These two test scenarios and their results demonstrate that the new FlashArray//X70 is setting a new bar in density and performance for Epic workloads, delivering higher performance from the same amount of flash. For most Epic customers seeking to migrate their workloads to all-flash to meet SLA requirements, both the //M and //X arrays are great solutions. The new //X provides the greatest density and cutting-edge performance for the largest Epic customers, while the //M provides a great option that exceeds Epic’s SLAs for most health systems today. Pure’s Evergreen Storage program means existing customers on //M arrays can upgrade non-disruptively to the //X and avoid re-buying the same TBs. New customers have even more options for configuration and performance with the //X when sourcing storage from Pure, allowing them to take advantage of the next step in technology with NVMe and DirectFlash.