Continuous Integration (CI) is a process to identify (not fix) bugs in the code very early in the development lifecycle. This allows developers to fix the bugs quickly and test the code iteratively, thus improving code quality. Development is the head of the continuous pipeline, where changes are checked in and integrated into the main code base after rigorous testing. The final build from the CI process is always ready to be deployed into production.

More and more businesses are transitioning to a “shift-left” testing practice, where code changes and integrations are automated and iteratively tested before the final application build is deployed. Running appropriate and specific tests (not the entire test suite) on every code change during the CI process allows the developer to be more creative and innovative while making changes or adding new features to the application. This process saves time and cost, and reduces bugs introduced by human error.
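Running only the relevant tests for a change can be sketched as a lookup from changed paths to the tests that cover them. The following is a minimal illustration, not the method used in this validation: the `TEST_MAP` directory-to-test mapping and the `select_tests` helper are hypothetical names introduced here.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from source directories to the test suites that cover
# them. A real project would derive this from coverage data or conventions.
TEST_MAP = {
    "wp-includes": ["tests/includes"],
    "wp-content/themes": ["tests/themes"],
    "wp-admin": ["tests/admin"],
}


def select_tests(changed_files, test_map=TEST_MAP):
    """Return the sorted test suites that cover the changed files."""
    selected = set()
    for changed in changed_files:
        path = PurePosixPath(changed)
        for source_dir, tests in test_map.items():
            # A change is covered when it lives under a mapped directory.
            if PurePosixPath(source_dir) in path.parents:
                selected.update(tests)
    return sorted(selected)


if __name__ == "__main__":
    print(select_tests(["wp-content/themes/pure/header.php"]))
```

A change that touches no mapped directory selects nothing, so a real pipeline would likely fall back to a broader suite in that case.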

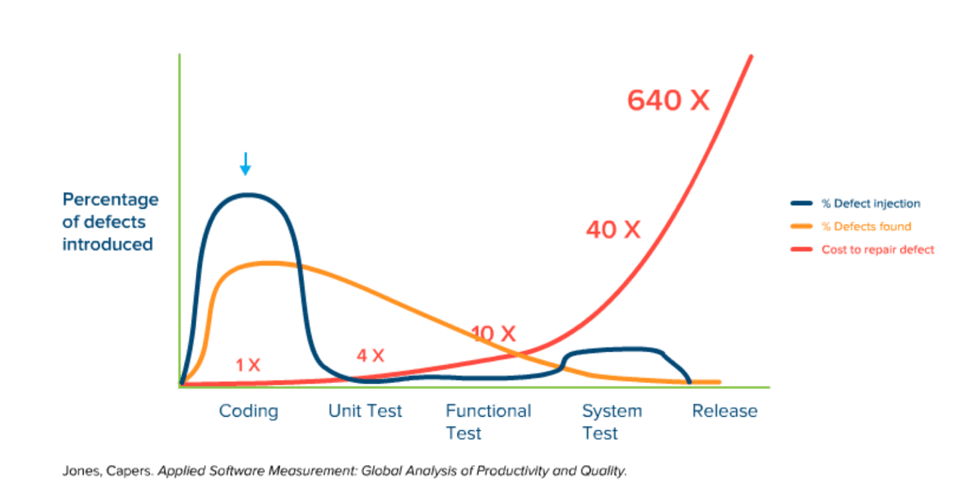

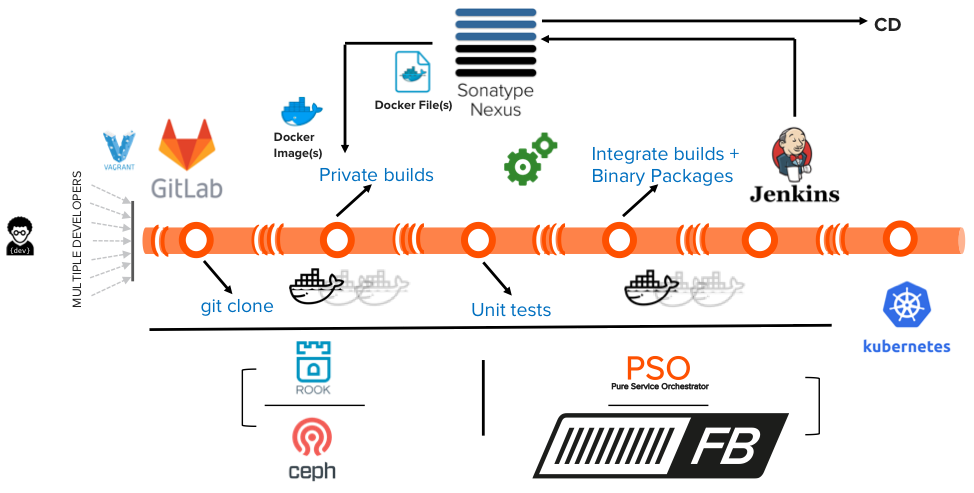

Figure 1) Shift-left testing process

Source: What is shift left testing?

The “shift-left” process does not happen overnight. The software development practice has to transition to a more automated and efficient development pipeline. As Figure 1 shows, the testing that used to happen on the right is now moving to the left, so developers run more unit tests and private builds in their workspaces while coding, before checking changes into the main code base. Final integration builds should have minimal failures from the CI tests before they are promoted to the Continuous Delivery (CD) phase.

Apart from optimizing and automating the CI pipeline, the underlying platform and infrastructure play an important role. In many development environments, design, code, test, and build are all done in silos. A siloed development environment leads to the following challenges:

- Concurrency and reliability

- Performance and capacity scaling

- Manageability overhead with high server sprawl

- Heterogeneous platforms – Linux, Windows, Bare Metal, VMs

- Unpredictable service level objectives (SLOs) – IOPS, throughput, latency

- Data movement across different silos, which increases resource utilization and time

In today’s distributed software development, developers pull the source code from GitLab to their own portable devices (such as laptops). In a small development team with fewer than 25 members, this may be acceptable. However, as teams grow in size, business owners become more concerned about protecting proprietary source code that travels outside the organization without any security controls.

Leveraging containers in the CI process in a Kubernetes environment allows developers to proceed through the different stages of the CI pipeline: git clone, private builds, unit tests, integration builds, and final binary packages. The containers can be stateless or stateful depending on the development phase. Running different unit tests on code changes can be stateless, but the user workspaces and CI build environments have to be stateful. Applications and workloads using stateful containers provision persistent storage on demand and keep moving forward in the CI pipeline.

Pure Storage® FlashBlade™ provides a standard data platform that supports the different workloads in the CI process. This removes the silos and eliminates the need to move data between the different phases of the CI pipeline. Development and delivery tools like GitLab, Jenkins, and Nexus can be configured over NFS on a FlashBlade array from the Kubernetes cluster to provide resiliency and scalability for the automated jobs in the CI pipeline.

FlashBlade also provides data disaggregation for applications running in containers with Pure Service Orchestrator™ (PSO). PSO provides different storage classes (pure-file is used in the CI pipeline) so that the pods running the development and delivery tools and the Jenkins jobs can provision persistent storage on demand, over Network File System (NFS), for every Persistent Volume Claim (PVC) created during the CI process.
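To make the on-demand provisioning concrete, the sketch below builds a PVC manifest that requests storage from the pure-file storage class. Only the storage class name comes from this post. The claim name, the requested size, and the `ReadWriteMany` access mode are assumptions chosen for illustration, and `build_pvc` is a hypothetical helper.

```python
import json


def build_pvc(name, size="10Gi", storage_class="pure-file"):
    """Build a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # Storage class named in the post; PSO provisions the volume.
            "storageClassName": storage_class,
            # Assumption: shared NFS-backed workspaces use ReadWriteMany.
            "accessModes": ["ReadWriteMany"],
            # Assumption: the size is a placeholder, not a tested value.
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical claim name for a Jenkins job workspace.
    print(json.dumps(build_pvc("jenkins-workspace-demo"), indent=2))
```

The JSON output can be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts manifests in JSON as well as YAML.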

For more information, see the white paper: A Modern CI/CD Pipeline on Pure: Continuous Integration/Continuous Delivery (CI/CD) on Pure Storage.

As an example of a CI process, we took Pure’s internal blog publishing development pipeline and, for the purpose of validation, used the WordPress source code to build a new version of our blog application.

The objective was to change the welcome page to show a Pure Storage logo and to include a comment section for every blog post. The automated jobs performed during the CI process include:

- Create user workspaces

- Sync source code from GitLab to user workspaces

- Pull the dependencies from Nexus to complete a private build

- Perform unit tests

- Merge the changes to the main code base in GitLab

- Trigger an automated integration build and finally push the binary package to the Nexus repository.
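The sequence above is a chain of stages in which each stage runs only if the previous one succeeded. The sketch below shows that control flow in plain Python. It is not the Jenkins configuration used in the validation: the stage functions are placeholders that always succeed, and `run_pipeline` is a hypothetical helper.

```python
from typing import Callable, List, Optional, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order and stop at the first one that fails.

    Returns the names of the completed stages and the name of the failed
    stage, or None when every stage succeeded.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            return completed, name
        completed.append(name)
    return completed, None


def placeholder() -> bool:
    """Stand-in for real work such as a git clone or a build."""
    return True


# Stage names follow the list above; the actions are placeholders.
CI_STAGES: List[Stage] = [
    ("create workspace", placeholder),
    ("sync source from GitLab", placeholder),
    ("private build with Nexus dependencies", placeholder),
    ("unit tests", placeholder),
    ("merge to main code base", placeholder),
    ("integrate build and push to Nexus", placeholder),
]

if __name__ == "__main__":
    done, failed = run_pipeline(CI_STAGES)
    print("completed:", done)
    print("failed:", failed)
```

Stopping at the first failure keeps a broken private build or failing unit test from ever reaching the merge step, which is the point of testing early.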

As illustrated in Figure 2 below, all jobs for the above sequence are automated in the CI pipeline using Jenkins. Automated tests were performed in the CI pipeline with PSO and FlashBlade and compared with Rook and Ceph (block) on local SSDs in the Kubernetes cluster. The tests indicated that the CI phases performed better on local SSDs with 50 concurrent jobs. However, as the number of CI jobs scaled to 100-200 and beyond, the local SSDs did not sustain concurrency and reliability, resulting in a high job failure rate. Performance scaling and predictable SLOs were also a challenge: the builds and unit tests took longer to complete as the number of CI jobs in the pipeline scaled beyond 50.

Figure 2) CI pipeline validation with Kubernetes on comparable storage backends
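A comparison of this kind comes down to running the same job at increasing concurrency and recording how many jobs fail and how long they take. The following sketch shows one way to collect those two numbers with the Python standard library. It is not the harness used for these tests: `run_batch` and `placeholder_job` are hypothetical, and the placeholder does no real build work.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_batch(job, job_count):
    """Run job(i) for i in range(job_count) concurrently and summarize."""
    if job_count < 1:
        raise ValueError("job_count must be at least 1")

    def timed(index):
        # Time each job and record whether it raised an exception.
        start = time.perf_counter()
        try:
            job(index)
            ok = True
        except Exception:
            ok = False
        return ok, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=job_count) as pool:
        results = list(pool.map(timed, range(job_count)))

    failures = sum(1 for ok, _ in results if not ok)
    mean_seconds = sum(seconds for _, seconds in results) / job_count
    return {
        "jobs": job_count,
        "failure_rate": failures / job_count,
        "mean_seconds": mean_seconds,
    }


def placeholder_job(index):
    """Stand-in for a CI job; a real job would clone, build, and test."""
    time.sleep(0.01)


if __name__ == "__main__":
    # Concurrency levels mentioned in the post.
    for count in (50, 100, 200):
        print(run_batch(placeholder_job, count))
```

Pointing the real job at each storage backend in turn and comparing the failure rate and mean duration per concurrency level gives the kind of data summarized in the figure.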

FlashBlade as external shared storage provides better concurrency and reliability, with no failures as the number of Jenkins jobs scales in the CI pipeline. The Jenkins jobs complete much faster with PSO and FlashBlade than with Rook and Ceph on local SSDs. The private builds triggered from “git clone” in the user workspaces completed 45% faster on FlashBlade. At scale (from 200 jobs), the integration builds, packaging, and pushing of the final binary package into Nexus were 25% faster than on local SSDs. The unit tests were 13% faster on FlashBlade.

From the test results, it looks like a smaller number of jobs is the sweet spot for local SSDs. At scale, FlashBlade with PSO provides better performance. There was a 27% overall improvement in the CI pipeline on FlashBlade with a 2:1 data reduction. This means a greater number of workloads can scale on FlashBlade with less storage and management overhead. With shift-left becoming more popular, these performance improvements and data space savings on FlashBlade accelerate the CI pipeline and shorten the development cycle with a quick feedback loop.

With the final binary package pushed into Nexus, the next blog post will highlight the benefit of setting up Continuous Delivery (CD) on flash to release the application into production.