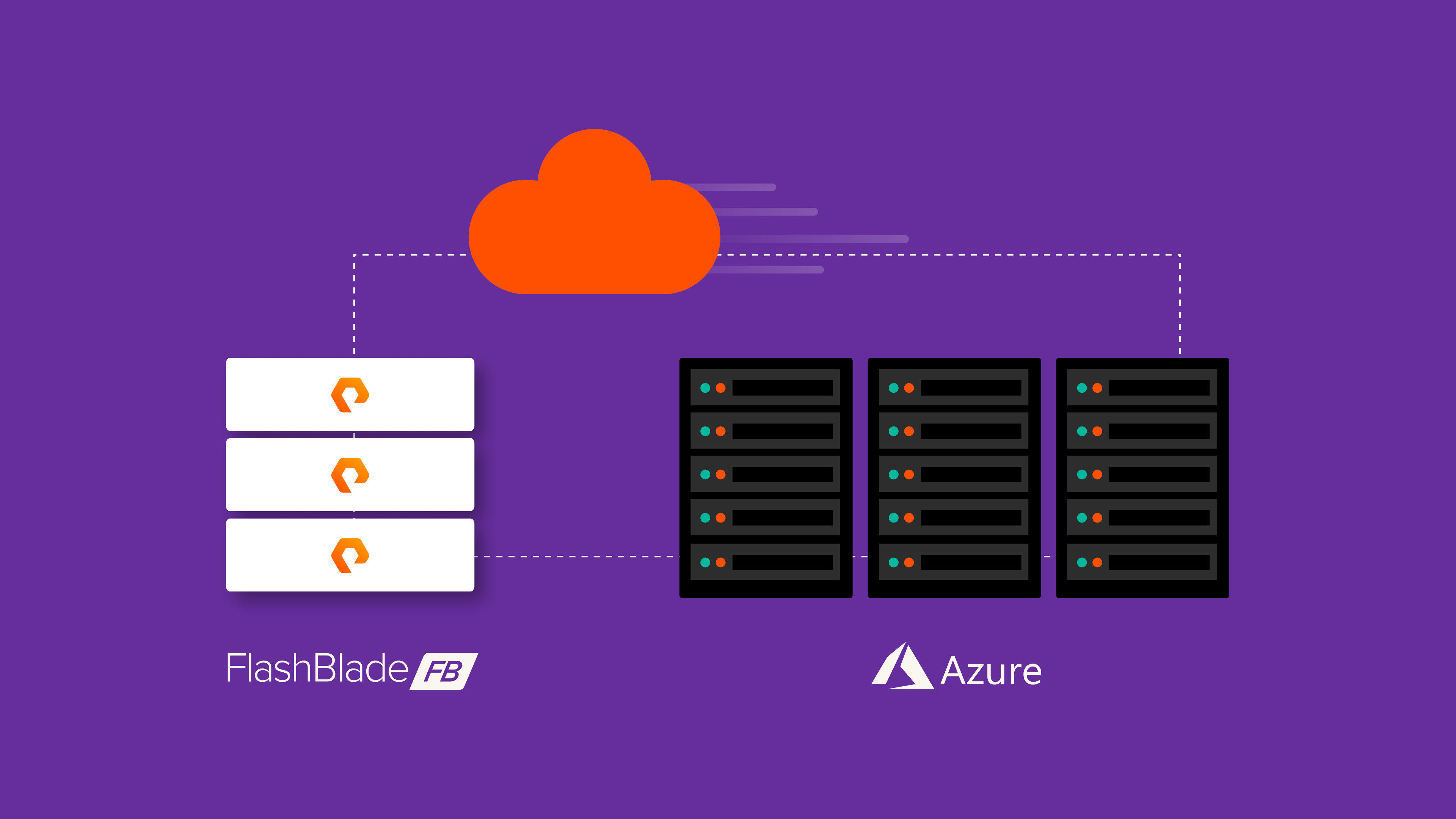

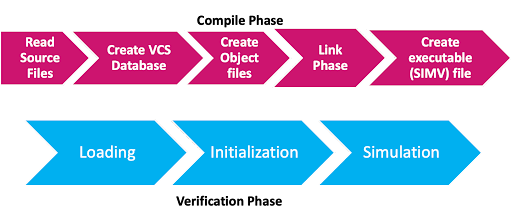

Logic verification is a critical part of the digital chip design process. Synopsys VCS is a high-performance functional verification tool used by semiconductor companies. In the workflow diagram below, Synopsys VCS compiles a set of Verilog files into an executable simulation binary (SIMV), which is then run to validate the IC design and the performance of the system on a chip (SoC).

Figure 1: Synopsys VCS workflow.

The steady increase in design complexity for sub-10nm SoCs drives up compute requirements and the need for storage at scale, as large volumes of data are created, processed, and eventually stored. Engineering teams are challenged to provide more compute resources on demand for the many long-running jobs waiting in the queue. This pushes work beyond the boundaries of the local data center and into hyperscalers such as Azure, where VMs can be provisioned on demand for the design process. Synopsys allows customers to run VCS in the Azure cloud.

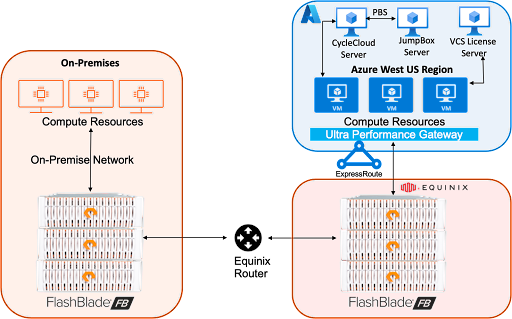

A hybrid cloud architecture that dynamically extends the local data center into the Azure cloud can be augmented by moving data to Pure Storage® FlashBlade® in an Azure-adjacent Equinix data center. A FlashBlade device in the Equinix location gives the business owner more control over where data resides, for data sovereignty and security, and delivers higher performance at the data layer when serving Azure VMs. Customers can use the extensive compute resources in Azure while keeping their data on customer-owned storage. The diagram below shows how compute resources can be provisioned in Azure on demand while design data is moved from the local data center to the cloud-connected environment and shared there, for both performance and capacity scalability.

Testing Synopsys VCS performance at scale with a 2ms round-trip time between the Azure VMs and the FlashBlade device validates the viability of the cloud-connected Azure architecture with FlashBlade. This blog post highlights the performance of Synopsys VCS on Azure VMs accessing the data set over NFSv3 from a customer-owned and customer-managed FlashBlade device.

Figure 2: Azure cloud connected on FlashBlade architecture.
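The 2ms round-trip time matters because of the bandwidth-delay product: to keep a 10Gb/s link busy, enough data must be in flight to cover one full round trip. The minimal sketch below computes that figure from the two numbers quoted above; the function name is our own, and the calculation ignores NFS, TCP, and IP overhead.

```python
def bandwidth_delay_product_bytes(link_bits_per_sec: float, rtt_sec: float) -> float:
    """Bytes that must be in flight to keep a link of the given speed full."""
    return link_bits_per_sec * rtt_sec / 8


# 10Gb/s ExpressRoute and 2ms round-trip time, as quoted for the test setup.
bdp = bandwidth_delay_product_bytes(10e9, 0.002)
print(f"{bdp / 1e6:.2f} MB in flight")
```

In other words, roughly 2.5MB of outstanding reads and writes must be in flight at any moment to saturate the link; spreading NFS traffic across many VMs is one way to reach that level of concurrency.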

Pure FlashBlade can be purchased as a CAPEX investment or consumed through Pure as-a-Service™ in an Equinix colocation data center. Customers without a data center of their own can instead use Pure Storage® on Equinix Metal™, a fully hosted offering delivered on a cloud consumption model. With Pure as-a-Service or Pure Storage on Equinix Metal, end users and business owners pay only for the capacity they consume on FlashBlade, with limited term and capacity commitments.

During our testing, two filesystems were configured on the FlashBlade device, one for the SIMV tests and one for the compile tests. Each filesystem was mounted on multiple Azure E64ds_v5 VMs to scale the number of cores. The FlashBlade device was connected to the Azure VMs through a 10Gb/s ExpressRoute circuit and an Ultra Performance Gateway.

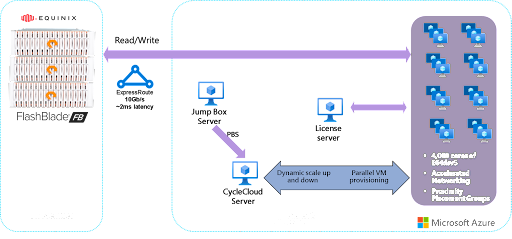

Figure 3: VCS test environment details.

Users connect to the jump box server through SSH or Azure Bastion and submit VCS jobs through OpenPBS. Azure CycleCloud then provisions enough compute VMs in parallel and scales them up and down automatically. The compute VMs access FlashBlade storage in the Equinix data center across the 10Gb/s ExpressRoute.

We used VCS version 2021.09 SP2 and a UVM testbench suite to generate randomly seeded SIMV and compile jobs for the scale test. Compute nodes use the E64ds_v5 SKU, which provides 64 vCPUs on Intel 8370C CPUs, 512GB of memory, and 2.4TB of local SSD storage. All VCS tools, libraries, scratch, and output files were stored on and accessed from FlashBlade filesystems over NFSv3 in the Equinix location, which was connected to Azure through the 10Gb/s ExpressRoute. All virtual machines ran CentOS 7.9, with OpenPBS as the job scheduler.
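The seed-based job fan-out described above can be sketched in Python. This is a hypothetical helper, not the script used in the test: it assumes a compiled `simv` binary on the FlashBlade mount (the path below is made up), one core per job, OpenPBS's `qsub [options] -- executable [args]` form, and the VCS `+ntb_random_seed` runtime option for seeding. Check each of these against your own VCS and OpenPBS documentation. The helper only builds command strings, so nothing is submitted.

```python
import random
import shlex


def build_qsub_commands(simv_path: str, num_jobs: int, base_seed: int = 1) -> list[str]:
    """Build one OpenPBS qsub command per randomly seeded SIMV run.

    Hypothetical layout: simv_path points at a compiled simv binary on the
    FlashBlade NFS mount, and each job asks PBS for a single core.
    """
    rng = random.Random(base_seed)  # fixed base seed makes the seed list reproducible
    commands = []
    for i in range(num_jobs):
        seed = rng.randrange(1, 2**31)
        argv = [
            "qsub",
            "-N", f"simv_{i}",          # job name shown in qstat
            "-l", "select=1:ncpus=1",   # assumption: one core per simulation
            "--",                       # everything after "--" is the executable and its args
            simv_path,
            f"+ntb_random_seed={seed}",
        ]
        commands.append(shlex.join(argv))  # quote safely for a shell
    return commands


for cmd in build_qsub_commands("/mnt/flashblade/simv/simv", num_jobs=3):
    print(cmd)
```

Keeping the seed list reproducible from a single base seed makes it possible to rerun exactly the same random tests at a different core count.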

During the VCS test, we set out to:

- Measure job completion time at scale.

- Run SIMV simulation and compile jobs while scaling to thousands of cores, and confirm that they finish within the expected completion time.

- Validate whether the 10Gb/s ExpressRoute can meet the performance requirements of the VCS tests at scale in the hybrid model without increasing total completion time.

- Characterize FlashBlade performance in terms of IOPS, throughput, and capacity as the VCS runs scale.

Below is how Azure CycleCloud scaled up to 63 VMs to provide 4,000 cores; it scales back down to zero automatically when the jobs finish.

Figure 4: Azure CycleCloud dashboard.
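The 63-VM figure follows from the E64ds_v5 size: each VM contributes 64 vCPUs, so 4,000 cores round up to 63 VMs (4,032 vCPUs). The sketch below reproduces that arithmetic for the core counts used in the tests. It assumes one core per vCPU and ignores any capacity CycleCloud might hold back, which is our simplification.

```python
import math

VCPUS_PER_VM = 64  # E64ds_v5: 64 vCPUs per VM


def vms_needed(cores: int, vcpus_per_vm: int = VCPUS_PER_VM) -> int:
    """Smallest number of VMs whose combined vCPUs cover the requested cores."""
    return math.ceil(cores / vcpus_per_vm)


for cores in (16, 256, 1024, 2048, 4000):
    n = vms_needed(cores)
    print(f"{cores:>5} cores -> {n:>2} VMs ({n * VCPUS_PER_VM} vCPUs)")
```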

Both the SIMV and compile tests ran without errors while scaling. Both runs scaled linearly across thousands of cores, which shows that VCS workloads scale properly with the hybrid implementation. As a result, designers and engineers can more easily estimate completion times, and finance and management teams can more easily forecast the total cost of a burst run.
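Linear scaling is what makes such forecasts simple. The sketch below is a hypothetical planning model, not a tool from the test: it assumes one job per core, jobs that run in waves of `cores` at a time, and every VM billed for the whole run. It ignores VM boot and scale-down time as well as storage and network charges, and `price_per_vm_hour` is a placeholder, not an Azure list price.

```python
import math
from dataclasses import dataclass


@dataclass
class BurstEstimate:
    wall_hours: float
    vm_hours: float
    cost: float


def estimate_burst(num_jobs: int, hours_per_job: float, cores: int,
                   vcpus_per_vm: int, price_per_vm_hour: float) -> BurstEstimate:
    """Estimate wall time and cost of a burst run, assuming linear scaling.

    Jobs run in waves of `cores` jobs, so wall time is the number of waves
    times the per-job runtime. All inputs are placeholders, not measured values.
    """
    waves = math.ceil(num_jobs / cores)
    wall_hours = waves * hours_per_job
    vms = math.ceil(cores / vcpus_per_vm)
    vm_hours = vms * wall_hours
    return BurstEstimate(wall_hours, vm_hours, vm_hours * price_per_vm_hour)


est = estimate_burst(num_jobs=8_000, hours_per_job=0.5, cores=4_000,
                     vcpus_per_vm=64, price_per_vm_hour=1.0)
print(est)
```

Doubling `cores` in this model halves `wall_hours` while keeping `vm_hours` roughly constant, which is the property that makes a burst run easy to budget, as long as the network and storage keep up.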

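In addition, total runtime continued to scale linearly as the 10Gb/s ExpressRoute became saturated. The average CPU time on the Azure VMs remained consistent at scale, which implies that both tests were I/O-bound: additional run time went to waiting on storage over the network rather than to computation.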
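One way to make the I/O-bound reasoning concrete is to compare each job's CPU time with its wall-clock time, for example from the `resources_used.cput` and `resources_used.walltime` values OpenPBS records per job. If CPU time stays flat while wall time stretches, the difference is time spent waiting. The sketch below assumes single-core jobs; the 0.8 threshold and the sample numbers are illustrative assumptions, not results from the test.

```python
def cpu_efficiency(cpu_seconds: float, wall_seconds: float) -> float:
    """Fraction of a single-core job's wall-clock time spent running on the CPU."""
    if wall_seconds <= 0:
        raise ValueError("wall_seconds must be positive")
    return cpu_seconds / wall_seconds


def looks_io_bound(cpu_seconds: float, wall_seconds: float,
                   threshold: float = 0.8) -> bool:
    """Heuristic: flag a job as I/O-bound when it spends much of its time off-CPU.

    The 0.8 threshold is an arbitrary assumption, not a value from the test.
    """
    return cpu_efficiency(cpu_seconds, wall_seconds) < threshold


# Illustrative numbers only: CPU time stays flat while wall time grows.
for wall in (330.0, 450.0, 600.0):
    print(wall, round(cpu_efficiency(300.0, wall), 2), looks_io_bound(300.0, wall))
```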

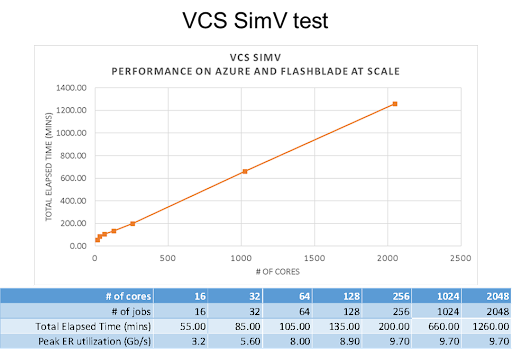

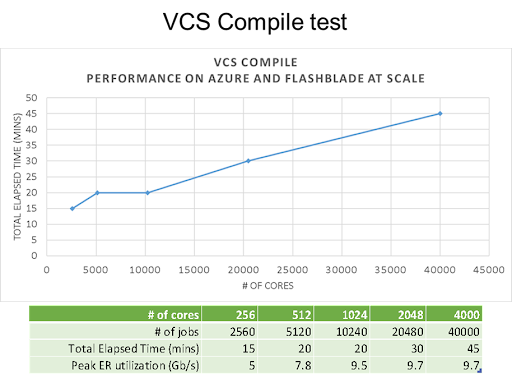

The following tables show how job completion time and ExpressRoute utilization scaled linearly with the number of cores in the SIMV and compile test runs.

Figure 5: Results of VCS SIMV test.

Figure 6: Results of VCS compile test.

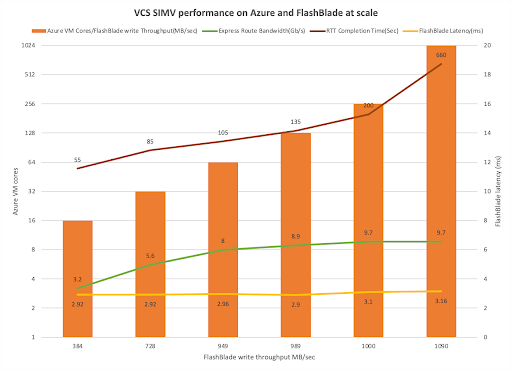

The following graph shows the full-stack validation: the job completion time and ExpressRoute utilization from the tables above, along with the throughput and IOPS reported by the FlashBlade device.

Figure 7: SIMV workload performance at scale.

The SIMV test scaled from 16 to 1,024 cores, until the ExpressRoute reached saturation. Job completion time scaled linearly until the network was saturated. Latency on the FlashBlade device averaged around 3ms at write throughput of up to 1GB/s during this scale test. The FlashBlade device reported a data reduction ratio of 5.2:1 for the sample SIMV data set.
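A quick unit conversion shows why the SIMV run reached the network ceiling: 10Gb/s is 1.25GB/s of raw line rate, so 1GB/s of write throughput alone already uses about 80% of it, before NFS, TCP, and IP overhead and any concurrent reads. The sketch assumes decimal units (1GB = 10^9 bytes); the function name is our own.

```python
def link_utilization(throughput_bytes_per_sec: float, link_bits_per_sec: float) -> float:
    """Share of a link's raw line rate consumed by the given payload throughput."""
    return throughput_bytes_per_sec * 8 / link_bits_per_sec


# 1GB/s of writes over the 10Gb/s ExpressRoute (decimal units assumed).
print(f"{link_utilization(1e9, 10e9):.0%}")
```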

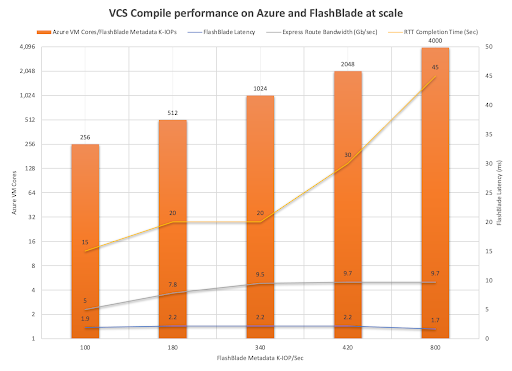

As the following graph shows, the compile tests scaled from 256 to 4,000 cores, although the ExpressRoute saturated beyond 2,048 cores. Job completion time scaled with the number of cores. For this sample data set, the FlashBlade device reported an average latency of ~2ms, IOPS peaking at 800K, and a data reduction ratio of 2.3:1.

Figure 8: Compile workload performance at scale.
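The 800K IOPS peak also says something about the I/O mix. If every operation crossed the 10Gb/s ExpressRoute, the average payload per operation could not exceed about 1.5KB, which points to a compile workload dominated by small reads, writes, and metadata operations rather than large streaming transfers. This is our own back-of-the-envelope inference under decimal units, not a measurement from the test.

```python
def max_avg_bytes_per_op(link_bits_per_sec: float, iops: float) -> float:
    """Upper bound on average payload per operation if every op crosses the link."""
    return link_bits_per_sec / 8 / iops


# 10Gb/s ExpressRoute and the 800K IOPS peak reported for the compile test.
print(f"{max_avg_bytes_per_op(10e9, 800_000):.1f} bytes per op")
```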

To conclude, connecting Azure to FlashBlade provides cloud-bursting capability that lets VCS logic simulation workloads scale into the cloud. The VCS workload scale test on Azure connected to FlashBlade over NFSv3 validated the following:

- There were no job failures as the VCS workloads scaled from 16 to 1,024 cores (SIMV) and from 256 to 4,000 cores (compile).

- Beyond consistent and scalable performance, the FlashBlade device delivered a data reduction ratio of at least 2:1 across the tests (5.2:1 for SIMV and 2.3:1 for compile).

- The FlashBlade device had headroom to accommodate additional workload had the ExpressRoute bandwidth been increased beyond 10Gb/s.
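Data reduction translates directly into capacity: at a ratio of R:1, a data set occupies 1/R of its logical size on flash. The sketch below applies the two ratios FlashBlade reported in these tests to a hypothetical 10TB logical data set; the data set size and function name are our own assumptions.

```python
def physical_capacity(logical_bytes: float, reduction_ratio: float) -> float:
    """Physical capacity consumed for a logical data set at a given reduction ratio."""
    if reduction_ratio <= 0:
        raise ValueError("reduction_ratio must be positive")
    return logical_bytes / reduction_ratio


# Ratios reported by FlashBlade in these tests; the 10TB data set is hypothetical.
logical = 10e12
for test, ratio in (("SIMV", 5.2), ("compile", 2.3)):
    used = physical_capacity(logical, ratio)
    print(f"{test}: {used / 1e12:.2f} TB physical for 10 TB logical")
```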