Summary

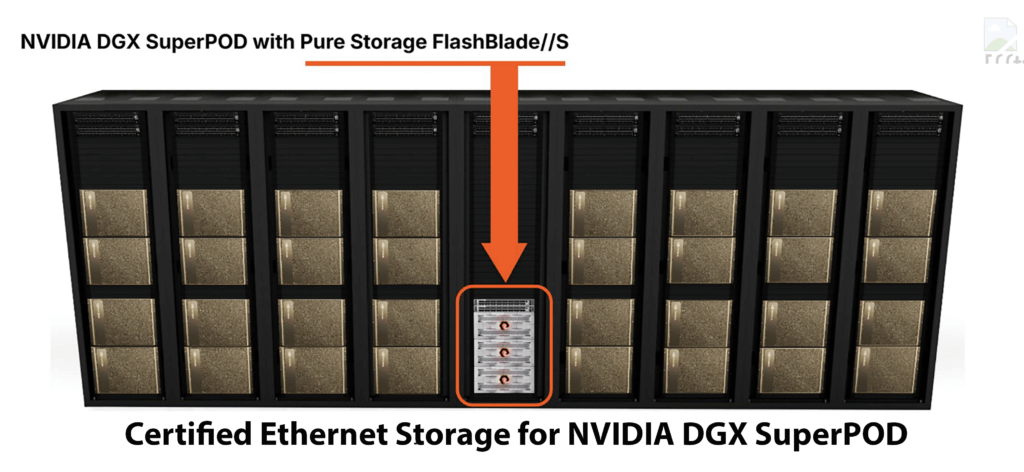

Pure Storage FlashBlade is now a certified storage solution for NVIDIA DGX SuperPOD. Together, they provide powerful accelerated compute, a high-performance and efficient storage platform, and AI software and tools to address the most demanding AI and HPC workloads.

The promise of faster innovation, improved operational efficiency, and increased competitive edge comes with getting AI data outcomes right. According to Gartner, “More than 60% of CIOs say AI is part of their innovation plan, yet fewer than half feel the organization can manage its risks.” Successful deployment of AI means cleansing and curating data properly; building high-quality code; providing high-performance, redundant, and secure infrastructure; and maintaining effective data pipelines, DevOps practices, and algorithms.

To achieve all of this, an AI-ready infrastructure that has been tested, benchmarked, and validated by industry leaders gives enterprises confidence that what they’re building will support their AI-powered business requirements immediately and in the future.

As of November 2024, Pure Storage® FlashBlade® is a certified storage solution for NVIDIA DGX SuperPOD, giving customers a simplified, quick-to-deploy, and efficient infrastructure offering. Rather than tackling the complexity of building everything on your own, fast-track your success and reduce risk with a fully validated, turnkey enterprise AI solution with Pure Storage and NVIDIA.

FlashBlade//S™ with NVIDIA DGX SuperPOD: Enterprise AI-Ready

FlashBlade with NVIDIA DGX SuperPOD represents an ideal combination of powerful NVIDIA accelerated compute, a high-performance and efficient storage platform, and NVIDIA AI software and tools to address the most demanding AI and HPC workloads. This certification enables enterprise customers to harness best-in-class performance, power and space density, and simplicity in data management, while leveraging their existing investments in ubiquitous Ethernet networking infrastructure, without compromising on performance, interoperability, or ease of operation.

What Exactly Makes Pure Storage Stand Out in the Crowd?

Let’s look at some of the capabilities and features that set Pure Storage apart.

Reduced Training Time at Scale

Teams of AI engineers and data scientists strive to reduce time to insight. To do that, they require consistent performance that reduces training time at scale, allows them to iterate more frequently, and shortens time to high-quality results. FlashBlade//S, powered by the Purity operating system, provides multi-dimensional performance well-suited for AI training and inference workloads, including small and sequential scenarios and high-throughput workloads with sequential or random access.

Enterprise AI Requires Enterprise Data Services

When scaling from pilot to production, mature and proven data services are critical. To ensure success, AI teams require guaranteed uptime, security, and data protection. Purity provides a rich set of capabilities for production AI that include compression, global erasure coding, always-on encryption, immutable SafeMode™ Snapshots, file replication, object replication, and other enterprise data features.

Parallel Architecture Benefits Multi-stream AI Workloads

Building foundational models with complex data input requires powerful, scale-out accelerated compute. With the parallel architecture of FlashBlade//S, the entire DGX SuperPOD can process multiple data streams simultaneously, reducing the time spent waiting for data to load and accelerating the entire model training process.

This is thanks to a distributed transactional database, the heart of FlashBlade//S software innovation, which enables powerful parallelism to eliminate bottlenecks on AI training workflows. The Purity OS can speak to each flash chip directly, using a massively parallel data path to accelerate any data access for AI training.

AI Performance, Power, and Space Efficiency

For customers running hundreds to thousands of GPUs to power AI in large-scale training clusters, it can be challenging to optimize utilization while also containing energy costs and data center footprint. FlashBlade//S couples high-throughput, low-latency performance with industry-leading energy efficiency of 1.4TB effective capacity per watt. This enables customers to eliminate storage congestion that slows compute while reducing power consumption and space by a factor of two to five versus comparable storage systems. Lower cost, fewer watts, and less space for storage enable customers to invest in more accelerated compute.
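To put the 1.4TB-per-watt figure in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the headline ratio applies linearly to any capacity, which real configurations may not follow, and it reads the two-to-five-times comparison above as a simple multiplier on power. The function names and the 1,400TB example capacity are illustrative only, not taken from any Pure Storage tool or sizing guide.

```python
# Illustrative arithmetic only: assumes the quoted 1.4 TB effective capacity
# per watt scales linearly, which actual deployments may not do.
TB_PER_WATT = 1.4  # figure quoted in this post


def storage_power_watts(effective_capacity_tb: float,
                        tb_per_watt: float = TB_PER_WATT) -> float:
    """Estimate storage power draw (watts) for a given effective capacity."""
    if tb_per_watt <= 0:
        raise ValueError("tb_per_watt must be positive")
    return effective_capacity_tb / tb_per_watt


def comparable_power_range_watts(effective_capacity_tb: float) -> tuple[float, float]:
    """Power range for a system drawing two to five times as much (per the post)."""
    base = storage_power_watts(effective_capacity_tb)
    return 2 * base, 5 * base


if __name__ == "__main__":
    capacity_tb = 1400  # hypothetical example capacity
    low, high = comparable_power_range_watts(capacity_tb)
    print(f"Estimated draw: {storage_power_watts(capacity_tb):.0f} W")
    print(f"Comparable systems: {low:.0f} W to {high:.0f} W")
```

Under these assumptions, every watt saved on storage is a watt of power budget that can go toward accelerated compute instead.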

Scale Compute and Storage, Independently

When you need the flexibility to adapt to AI initiative growth and evolving storage needs, a modular architecture is key to increasing capacity or performance easily. FlashBlade//S is a customizable platform that lets you expand your configuration non-disruptively for specific workload requirements, whether you need more performance or more capacity. When your AI and data science teams aren’t hampered by IT upgrades, they can deliver AI results faster.

Ethernet-networked Storage for High Throughput AI and HPC

Ethernet networking is a cornerstone of enterprise data centers due to its low total cost of ownership (TCO), extensive interoperability, and proven reliability. FlashBlade//S connected via Ethernet and NVIDIA ConnectX NICs to the DGX SuperPOD storage fabric delivers exceptional performance for larger AI clusters. The use of RDMA over Converged Ethernet (RoCE) further enhances this setup by minimizing latency and offloading CPU and GPU data movement workloads, resulting in faster data transfers and improved overall efficiency for demanding AI applications. Ethernet networking connectivity between AI compute and FlashBlade//S represents a massive advantage for high-performance AI and HPC workloads that is cost-effective and simplified at scale.

Eliminate the Unpredictability of AI

Pure Storage offers Evergreen//One™, a storage-as-a-service (STaaS) subscription that transforms how organizations consume and manage their AI data storage. This consumption model lets companies pay only for what they use, making it easier to scale their AI projects up or down based on actual needs. It also provides future-proof capability for AI workloads that are unpredictable and inconsistent.

Evergreen//One for AI adds to our comprehensive set of SLAs with guaranteed bandwidth based on maximum requirements for GPUs. This ensures valuable GPUs and data scientists aren’t left idle waiting for storage as they train models and accelerate iterations that deliver higher-quality results without delays.

Built-in Enterprise Reliability and Guaranteed Uptime

With all Pure Storage systems, organizations receive automatic updates and performance improvements without any disruption. This enables your team to continue focusing on developing the right AI solution rather than worrying about planned downtime or managing upgrades. Evergreen//One offers a 99.9999% uptime guarantee so your critical AI environment is always on and always delivering insight.
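For a sense of scale, a 99.9999% uptime figure can be converted into a downtime budget with simple arithmetic. The short Python sketch below does that conversion. It assumes a 365-day year and treats the percentage as a plain availability ratio; the actual measurement terms of the Evergreen//One guarantee are not described here, and the function name is illustrative only.

```python
# Illustrative arithmetic: converts an availability percentage into the
# maximum downtime it allows over a period (default: a 365-day year).
SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def max_downtime_seconds(availability_percent: float,
                         period_seconds: int = SECONDS_PER_YEAR) -> float:
    """Return the downtime budget in seconds for the given availability."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    return (1 - availability_percent / 100) * period_seconds


if __name__ == "__main__":
    budget = max_downtime_seconds(99.9999)
    print(f"99.9999% uptime allows about {budget:.1f} seconds of downtime per year")
```

Under these assumptions, the budget works out to roughly half a minute of downtime per year.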

Fast-track AI Initiatives Today with Pure Storage and NVIDIA DGX SuperPOD

The NVIDIA DGX SuperPOD architecture is designed around a 32-node NVIDIA DGX system cluster with the ability to scale up from there. If your AI infrastructure doesn’t require that large a deployment, Pure Storage has been certified with smaller designs that can grow as your requirements grow. These include NVIDIA DGX BasePOD, NVIDIA OVX systems, and Cisco FlashStack® for AI and AI Pod options.

All of these solutions have validated, full-stack reference architectures that reduce deployment risk and fast-track your AI infrastructure needs for your AI journey. We’ve also built reference architectures for retrieval-augmented generation and vertical solutions for drug discovery, medical imaging, and financial services applications that go well beyond core hardware and software.

In the first half of 2025, we’ll also offer full-stack, turnkey Pure Storage GenAI Pods with single-click deployment and streamlined Day 2 operations for vector databases and foundation models. With the integration of Portworx® Data Services, the GenAI Pods provide automated deployments of NVIDIA NeMo and NVIDIA NIM microservices, part of the NVIDIA AI Enterprise software platform, as well as the Milvus vector database, while further simplifying Day 2 operations. Future blogs will dive into this new set of solutions in much more detail.

Reach out to your Pure Storage representative and get in touch with one of our experts to see how we can help you simplify and accelerate your AI initiatives.

Learn more about the Pure Storage platform for AI.

Check out FlashBlade//S—unified fast file and object storage optimized for AI.

Download the FlashBlade//S with NVIDIA DGX SuperPOD reference architecture.
