We live in an age where new tech toys are abundant, yet LEGO®, an 80-year-old toy, is still as popular as ever. One of its most enduring appeals is that it fires the imagination of kids and adults alike. As one avid LEGO fan once said: “I am still amazed by some of the things that are made, and these people clearly didn’t follow the instructions in a set.”

But what explains LEGO’s timeless success? We can narrow it down to three key attributes of LEGO bricks:

- They are made of simple shapes

- They have a well-defined interface so that they can combine easily

- They require only a very basic skillset; everything else comes from your imagination

We believe this is exactly the direction enterprise AI needs to head, too. We at Pure, together with NVIDIA and Mellanox, have architected Hyperscale AIRI on the same basic principles as LEGO.

But first, let’s cover some background and the current state of the real-world AI landscape. In March 2018 we announced AIRI, the industry’s first comprehensive AI-Ready Infrastructure, architected by NVIDIA and Pure Storage. Since then, AIRI has been widely deployed around the globe across many industries, including healthcare, financial services, automotive, technology, higher education and research. As AIRI paved the way for these organizations to turn data into innovation at an unprecedented pace, it also created the need for a highly scalable solution spanning multiple racks of DGX servers and FlashBlade storage. However, such rack-scale infrastructure brings additional complexity.

Hyperscale AIRI: A LEGO-like AI Infrastructure Built for Pioneering Enterprises of AI

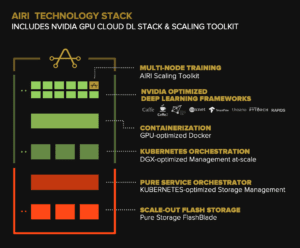

Hyperscale AIRI is the industry’s first AI-ready infrastructure architecture to deliver supercomputing capabilities for enterprises pioneering real-world AI. The three key components of Hyperscale AIRI are:

- Simple, modular components for compute (NVIDIA DGX-1 and DGX-2 servers), storage (Pure Storage FlashBlade) and networking (Mellanox Ethernet fabrics)

- A well-defined software stack with Kubernetes integration for the end-to-end AI pipeline, keeping data teams productive at any scale

- A streamlined approach to building multi-rack AI infrastructure, scalable to 64 racks, to support any AI initiative

Hyperscale AIRI has already been validated and deployed in production by pioneering enterprises of real-world AI, including a large security company running multiple racks of DGX-2 servers and FlashBlade systems interconnected by a Mellanox InfiniBand network.

Hyperscale AIRI: Designed to scale to 64 racks of DGX servers and FlashBlades

Hyperscale AIRI brings together all the benefits of the DGX-1 and DGX-2 servers and Pure Storage FlashBlade, wrapping them in a high-bandwidth, low-latency Ethernet or InfiniBand fabric from Mellanox that unifies storage and compute over an RDMA-capable 100 Gb/s network. AIRI enables seamless scaling of both GPU servers and storage systems. As computational demands grow, additional DGX-1 and DGX-2 servers can be provisioned in the high-performance fabric, with instant access to all available datasets. Similarly, as storage capacity or performance demands grow, additional blades can be added to the FlashBlade systems, addressing petabytes of data in a single namespace with zero downtime or reconfiguration.

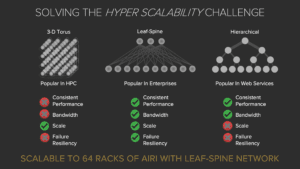

One of the biggest challenges in building a multi-rack AI platform is designing the network architecture and topology. Engineers at Pure, Mellanox and NVIDIA worked to solve these challenges by studying the communication patterns of multi-node GPU training across various neural network models, as well as the storage access patterns of the entire AI pipeline. What we discovered was that while deep learning compute shares the characteristics of HPC applications, the same cannot be said for storage and network architecture. In fact, NERSC’s data team has already uncovered the inefficiencies of HPC storage and its inability to support large-scale AI initiatives.

Specifically, for network design we compared the three most popular networking topologies: 3-D torus (popular in large HPC clusters), hierarchical (popular among large web-scale companies) and leaf-spine (popular in enterprise IT). While one might expect 3-D torus or hierarchical to be the most likely choice, our study found that leaf-spine is the only one that addresses all the challenges of deploying a large-scale AI initiative.
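One property often cited in favor of leaf-spine can be illustrated with a small model: because every leaf switch connects to every spine switch, traffic between any two racks crosses the same two switch hops, and it can be spread across as many equal-cost paths as there are spines. The Python sketch below is illustrative only. The `LeafSpine` class, the switch counts and the link speeds are hypothetical assumptions, not values from the Hyperscale AIRI reference architecture, and the model does not reproduce the topology study described above.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class LeafSpine:
    """Hypothetical two-tier leaf-spine fabric in which every leaf links to every spine."""
    leaves: int          # e.g. one top-of-rack leaf switch per rack (assumption)
    spines: int          # number of spine switches
    hosts_per_leaf: int  # host-facing ports used on each leaf (hypothetical)
    host_gbps: int       # speed of each host-facing port (hypothetical)
    uplink_gbps: int     # speed of each leaf-to-spine uplink, one per spine (hypothetical)

    def hops(self, leaf_a: int, leaf_b: int) -> int:
        """Switch-to-switch links crossed between hosts attached to leaf_a and leaf_b."""
        if leaf_a == leaf_b:
            return 0  # traffic stays inside the same leaf switch
        return 2      # leaf -> any spine -> leaf, whichever two leaves are involved

    def max_hops(self) -> int:
        """Worst case over all leaf pairs; stays at 2 however many leaves are added."""
        pairs = combinations(range(self.leaves), 2)
        return max((self.hops(a, b) for a, b in pairs), default=0)

    def equal_cost_paths(self, leaf_a: int, leaf_b: int) -> int:
        """Distinct leaf -> spine -> leaf paths between two different leaves."""
        return 1 if leaf_a == leaf_b else self.spines

    def oversubscription(self) -> float:
        """Host-facing bandwidth divided by uplink bandwidth on one leaf (1.0 = non-blocking)."""
        return (self.hosts_per_leaf * self.host_gbps) / (self.spines * self.uplink_gbps)

    def total_hosts(self) -> int:
        return self.leaves * self.hosts_per_leaf


# Hypothetical 64-rack fabric: 16 spines, 16 host ports per leaf, all links 100 Gb/s.
fabric = LeafSpine(leaves=64, spines=16, hosts_per_leaf=16, host_gbps=100, uplink_gbps=100)
print(fabric.max_hops())               # 2
print(fabric.equal_cost_paths(0, 63))  # 16
print(fabric.oversubscription())       # 1.0
print(fabric.total_hosts())            # 1024
```

In this model, adding leaves (racks) leaves `max_hops()` unchanged at 2, while adding spines raises the number of equal-cost paths and lowers the oversubscription ratio; that predictable behavior is what makes a two-tier design easy to extend rack by rack.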

[Download the reference architecture to learn more]

Hyperscale AIRI can be configured to support up to 64 racks of DGX-1 and DGX-2 servers and FlashBlade storage in a leaf-spine topology: that’s more than 500 petaFLOPS of deep learning performance and more than 100 petabytes of data storage.
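The headline compute figure is simple aggregation: racks × servers per rack × peak performance per server. The sketch below assumes NVIDIA’s published peak mixed-precision (Tensor Core) figures of roughly 1 petaFLOPS per DGX-1 (V100) and 2 petaFLOPS per DGX-2. The per-rack server counts are hypothetical layouts chosen for illustration, not configurations from the reference architecture, and the results are peak numbers, not sustained training throughput.

```python
# Assumed peak mixed-precision (Tensor Core) performance per server, in petaFLOPS,
# based on NVIDIA's published figures for DGX-1 (V100) and DGX-2.
PEAK_PFLOPS = {"DGX-1": 1.0, "DGX-2": 2.0}


def peak_pflops(racks: int, servers_per_rack: dict[str, int]) -> float:
    """Aggregate peak petaFLOPS for `racks` identical racks.

    `servers_per_rack` maps a server model to how many of it sit in one rack;
    the layouts passed in below are hypothetical.
    """
    per_rack = sum(PEAK_PFLOPS[model] * count for model, count in servers_per_rack.items())
    return racks * per_rack


# Hypothetical layout A: four DGX-2 servers per rack.
print(peak_pflops(64, {"DGX-2": 4}))              # 512.0
# Hypothetical layout B: two DGX-2 and four DGX-1 servers per rack.
print(peak_pflops(64, {"DGX-2": 2, "DGX-1": 4}))  # 512.0
```

Both hypothetical layouts land at 512 petaFLOPS across 64 racks, consistent with the figure above; other rack layouts would give different totals.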

Hyperscale AIRI: Keeping Data Teams Productive at Any Scale

The strength of Hyperscale AIRI is that it is a complete solution integrating software and hardware, designed to keep data science teams productive at any scale. With an easy-to-use API, data scientists can train, test and deploy their AI projects on a cluster of any size. With NVIDIA’s NGC container registry, popular AI frameworks such as TensorFlow and PyTorch, as well as the open-source RAPIDS libraries, are containerized and optimized to work out of the box. The AIRI scaling toolkit lets data scientists train ML models across multiple DGX servers in a few simple steps. Finally, with Kubernetes integration for DGX and FlashBlade, data teams can quickly automate the deployment and management of containerized AI workflows across the entire pipeline, including single-node jobs such as feature extraction and data collection, verification and analysis, as well as multi-node model training jobs across large datasets.

Start with AIRI “Mini” and Be Hyperscale-Ready!

While many enterprises want to jumpstart their AI initiatives, the challenges of building a scalable, AI-optimized infrastructure often hold them back. Engineers at NVIDIA, Mellanox and Pure Storage partnered to architect a scalable and powerful infrastructure for enterprises pushing the boundaries of AI innovation. With AIRI’s modularity and simplicity, just like LEGO bricks, enterprises can start with AIRI “Mini” and easily grow into Hyperscale AIRI as their teams and projects expand. AIRI also aims to remove infrastructure complexity, giving enterprises pioneering AI a solution with supercomputing capabilities. Now every enterprise can focus on developing powerful new insights with AI by deploying the simple, scalable and robust AIRI system.

To learn more, visit our booth #1217 at GTC San Jose or go to www.purestorage.com/airi