Apache Kafka is synonymous with speed: it is a distributed, highly scalable, fast messaging platform for streaming applications. In one respect, however, Kafka is not fast: resyncing replicas with Kafka's native replication protocol can take a long time. Replicas can fall out of sync, for example when a broker fails, and this can slow down or pause producer writes. Bringing the replicas back in sync is also a major maintenance task for Kafka administrators, and if it is not done, it can eventually lead to data loss. Sometimes it can even take days for the replicas to catch up with the leader.

In such cases, replica.lag.time.max.ms has to be set to a very high value so that a follower that is still catching up is not removed from the in-sync replica (ISR) list.

In this blog, I am going to show you how to bring up a new broker and sync it almost instantly using FlashArray//X snapshots. Pure Storage FlashArray//X snapshots are instantaneous and initially consume no additional disk space, so they make Apache Kafka replica sync fast as well.

Why Use FlashArray//X Snapshots to Replace or Recover a Kafka Broker?

There are two ways to replace an Apache Kafka broker:

- Using Apache Kafka replication: This is a slow process in which Kafka administrators have to set replica.lag.time.max.ms to a very high value so that the new replica can sync with the leader using ReplicaFetcher threads.

- Using Pure Storage FlashArray//X instant snapshots: As explained above, this is the fastest and most efficient method for replacing Kafka brokers.

Let us compare the time the two methods take using 1 TB of messages. Kafka replication takes 181 minutes to sync 1 TB of messages, whereas with FlashArray snapshots the replicas sync in less than a minute.
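The 181-minute figure implies an average replication rate of roughly 92 MB/s. The sketch below does that arithmetic and extrapolates a full resync to larger data sizes. It assumes decimal units (1 TB = 10^12 bytes) and that full-resync time scales linearly with data size; neither assumption comes from the measurement itself.

```python
from typing import Optional

# Figures from the measurement above. Assumption: decimal units,
# 1 TB = 10**12 bytes and 1 MB = 10**6 bytes.
MEASURED_BYTES = 10**12
MEASURED_MINUTES = 181


def effective_rate_mb_per_s(num_bytes: float = MEASURED_BYTES,
                            minutes: float = MEASURED_MINUTES) -> float:
    """Average replication throughput implied by a measured resync, in MB/s."""
    return num_bytes / (minutes * 60) / 10**6


def full_resync_minutes(num_bytes: float,
                        rate_mb_per_s: Optional[float] = None) -> float:
    """Time to replicate num_bytes from scratch at a constant rate.

    Assumption: full-resync time scales linearly with data size."""
    rate = effective_rate_mb_per_s() if rate_mb_per_s is None else rate_mb_per_s
    return num_bytes / (rate * 10**6) / 60


if __name__ == "__main__":
    print(f"effective replication rate: {effective_rate_mb_per_s():.1f} MB/s")
    for tb in (1, 2, 5):
        print(f"{tb} TB -> about {full_resync_minutes(tb * 10**12):.0f} minutes")
```

Under the linear-scaling assumption, every extra terabyte adds another three hours of replication, which is the cost the snapshot approach is meant to avoid.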

Apache Kafka broker replacement/recovery process:

To test rapid replacement of a failed broker, I created a three-node Apache Kafka cluster.

As seen above, the Kafka cluster has three brokers: 0, 1, and 2. I failed broker 2, brought up another healthy node, and installed Kafka on it as broker 2. The cluster has a topic with replication factor 3 that is populated with data.

Here are the steps for replacing the node:

1. On all broker nodes, freeze the filesystem that holds the Kafka commit logs:

```shell
xfs_freeze -f /kafka/logs
```

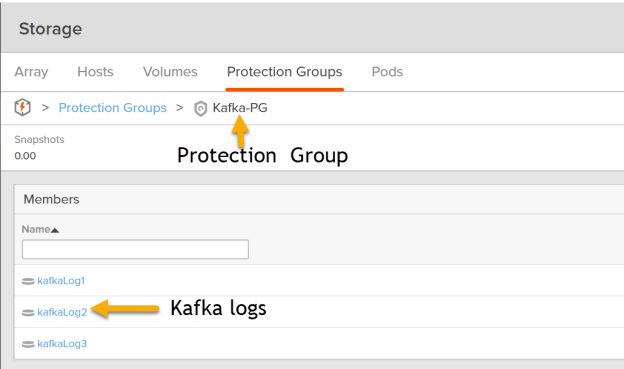

2. Take a FlashArray//X snapshot. We recommend creating a protection group for the Kafka commit log volumes, as shown below.

3. Schedule a snapshot of all the commit log volumes in the protection group every 5 minutes, and configure retention so that snapshots older than 1 hour are removed automatically.

4. Unfreeze the filesystem on the Kafka commit log volumes:

```shell
xfs_freeze -u /kafka/logs
```

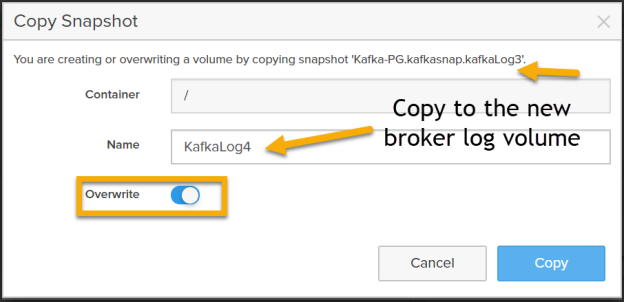

5. Install Kafka on the new, healthy node that will be added to the cluster, and change its server.properties configuration so that it can join the cluster. Copy the latest snapshot from the failed node (or the node being replaced) to the new node.
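The freeze, snapshot, and unfreeze steps above are easy to get wrong by hand: if the snapshot fails, the filesystems must still be unfrozen, or the brokers stay blocked on writes. Below is a minimal sketch of that sequence with a try/finally guard. It assumes passwordless root SSH to each broker, uses only the xfs_freeze commands shown above, and leaves the array snapshot as a caller-supplied function (the hypothetical take_snapshot) because the exact Purity CLI or REST call depends on your environment. It also includes a pre-start check for step 5: Kafka records the broker ID in a meta.properties file inside each log directory, so the broker.id in the new node's server.properties should match the ID stored in the restored snapshot, or the broker will refuse to start. The parser is a simplified stand-in for the full Java .properties format.

```python
import subprocess
from pathlib import Path
from typing import Callable, Dict, List, Sequence

# Runs one command on one broker host. Default: passwordless SSH (assumption).
Runner = Callable[[str, Sequence[str]], None]


def ssh_run(host: str, cmd: Sequence[str]) -> None:
    """Run cmd on host over SSH; raises CalledProcessError on failure."""
    subprocess.run(["ssh", host, *cmd], check=True)


def snapshot_commit_logs(hosts: Sequence[str],
                         take_snapshot: Callable[[], None],
                         mount_point: str = "/kafka/logs",
                         runner: Runner = ssh_run) -> None:
    """Freeze every broker's log filesystem, snapshot, then always unfreeze."""
    frozen: List[str] = []
    try:
        for host in hosts:                  # freeze on all brokers
            runner(host, ["xfs_freeze", "-f", mount_point])
            frozen.append(host)
        take_snapshot()                     # hypothetical array-side snapshot hook
    finally:
        for host in reversed(frozen):       # unfreeze only what was frozen
            runner(host, ["xfs_freeze", "-u", mount_point])


def parse_properties(text: str) -> Dict[str, str]:
    """Minimal key=value parser; skips blank lines and # or ! comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_broker_id(server_props: str, meta_props: str,
                    key: str = "broker.id") -> int:
    """Step 5 sanity check: server.properties must match the restored meta.properties."""
    configured = parse_properties(server_props).get(key)
    stored = parse_properties(meta_props).get(key)
    if configured is None or stored is None:
        raise ValueError(f"{key} missing from server.properties or meta.properties")
    if configured != stored:
        raise ValueError(f"{key} mismatch: configured {configured}, snapshot has {stored}")
    return int(configured)


def check_files(server_properties: Path, log_dir: Path) -> int:
    """Same check, reading both files from disk on the new node."""
    return check_broker_id(server_properties.read_text(),
                           (log_dir / "meta.properties").read_text())
```

To use it, call snapshot_commit_logs with your broker host names and a function that triggers the protection group snapshot; on the new node, call check_files with the path to server.properties and the restored log directory before starting the broker. One design point: an array-side schedule cannot coordinate with host-side freezes, so if every snapshot should be taken under a freeze, the script itself has to trigger the snapshot (for example, from a 5-minute timer).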

Now start the new Kafka broker. To see what the snapshot changes, we compare starting the broker with the snapshot applied to the Kafka commit logs against starting it without the snapshot. To illustrate this, I have a topic called test31 with a replication factor of 3. I loaded it with messages, and its offset is shown below:

I then started the broker on the healthy node with the snapshot applied. Because we take a snapshot every 5 minutes, there are fewer messages to sync: the broker only has to sync the messages added to the topic after the snapshot was taken. Let us look at the broker logs with the snapshot applied to the Kafka commit logs.

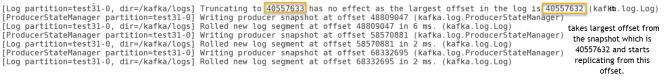

With snapshot: The most recent snapshot of the Kafka commit logs on the failed broker was taken when the offset was 40557632. As seen below from the broker logs, the ReplicaFetcher thread starts syncing from offset 40557633, which drastically decreases the sync time.

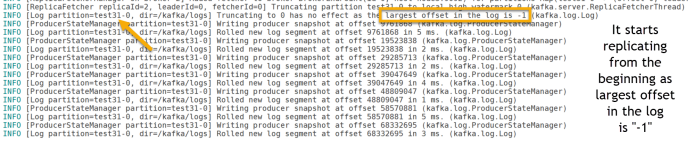

Without snapshot: As seen below from the broker logs, the largest offset in the local log is -1 (the log is empty), so the ReplicaFetcher thread starts syncing from the beginning and the sync takes much longer.
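The difference between the two cases comes down to where the follower starts fetching: its log end offset, which is the last offset in its local log plus one, up to the leader's log end offset. The sketch below shows that arithmetic for a single partition. It uses the snapshot offset from the logs above. The leader's log end offset in the example is hypothetical, and the default assumes the leader's log still starts at offset 0 (no segments deleted by retention).

```python
# Offset arithmetic for one partition (a simplification: each partition of each
# topic is fetched independently).

SNAPSHOT_LAST_OFFSET = 40557632  # last offset in the restored snapshot (from the logs above)
EMPTY_LOG_LAST_OFFSET = -1       # what an empty log reports as its largest offset


def fetch_start_offset(local_last_offset: int,
                       leader_log_start_offset: int = 0) -> int:
    """Offset the follower starts fetching from: its log end offset (last + 1).

    If the leader has already deleted older segments, the follower cannot start
    earlier than the leader's log start offset."""
    return max(local_last_offset + 1, leader_log_start_offset)


def messages_to_fetch(leader_log_end_offset: int, local_last_offset: int,
                      leader_log_start_offset: int = 0) -> int:
    """Messages the follower must copy to catch up with the leader's log end offset."""
    start = fetch_start_offset(local_last_offset, leader_log_start_offset)
    return max(leader_log_end_offset - start, 0)


if __name__ == "__main__":
    leader_leo = 41_000_000  # hypothetical leader log end offset, for illustration only
    print("with snapshot:   ", messages_to_fetch(leader_leo, SNAPSHOT_LAST_OFFSET))
    print("without snapshot:", messages_to_fetch(leader_leo, EMPTY_LOG_LAST_OFFSET))
```

With the snapshot, the work is proportional to what was written since the snapshot; without it, the work is proportional to the entire log.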

This shows that the FlashArray//X snapshot process stays fast even as data grows, because the new broker only has to fetch the messages written since the last snapshot. Other methods (scp or repair), by contrast, get slower as the amount of data per node increases. I highly recommend using Pure Storage FlashArray//X snapshots to replace or recover failed Kafka brokers, because they drastically reduce the sync time.
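Why the snapshot approach stays fast as data grows can be made concrete with a simple model. This is an assumption for illustration, not something measured in this test: while the new replica catches up, producers keep writing, so the backlog drains at the fetch rate minus the ingest rate. With snapshots, the backlog is bounded by what was written during one snapshot interval plus the time the broker was down; without them, it is the whole log. All rates below are hypothetical inputs.

```python
# Simple catch-up model (an assumption, not measured in this test): producers keep
# writing while the new replica fetches, so the backlog drains at fetch - ingest.


def catch_up_seconds(backlog_mb: float, fetch_mb_s: float,
                     ingest_mb_s: float) -> float:
    """Time for a follower to drain backlog_mb while producers keep writing."""
    if fetch_mb_s <= ingest_mb_s:
        return float("inf")  # the follower never catches up
    return backlog_mb / (fetch_mb_s - ingest_mb_s)


def snapshot_backlog_mb(ingest_mb_s: float, snapshot_interval_s: float,
                        downtime_s: float) -> float:
    """Worst-case backlog with snapshots: data written since the last snapshot.

    The last snapshot is at most one interval older than the failure, and writes
    continue while the replacement broker is being brought up."""
    return ingest_mb_s * (snapshot_interval_s + downtime_s)


if __name__ == "__main__":
    ingest, fetch = 10.0, 110.0  # hypothetical MB/s rates
    delta = snapshot_backlog_mb(ingest, snapshot_interval_s=300, downtime_s=0)
    for log_mb in (1_000_000, 2_000_000):  # 1 TB and 2 TB of existing log data
        full = catch_up_seconds(log_mb, fetch, ingest)
        snap = catch_up_seconds(delta, fetch, ingest)
        print(f"log {log_mb} MB: full resync {full:.0f} s, from snapshot {snap:.0f} s")
```

In this model, doubling the log doubles the full-resync time, while the snapshot-based catch-up depends only on the ingest rate, the snapshot interval, and the downtime. The infinite case is worth keeping in mind: a replica whose fetch rate cannot exceed the ingest rate never catches up, with or without a snapshot.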

Fast, Resilient Kafka Recovery at Scale

As Apache Kafka adoption continues to expand across industries—fueling real-time analytics, event-driven microservices, and data pipelines—the ability to recover quickly from broker failures has become a key requirement for operations teams.

FlashArray’s snapshot-based recovery remains a best-in-class approach for minimizing replica lag and restoring broker health without extended downtime or operational complexity. Recent innovations enhance this capability even further:

- Improved snapshot orchestration via REST API v2 and Ansible/Terraform automation, allowing for easier integration into DevOps pipelines.

- SafeMode™ Snapshot support for immutable, ransomware-resilient backup copies of Kafka commit logs.

- Enhanced performance with DirectFlash® and NVMe-oF, reducing latency for snapshot restore operations across high-throughput Kafka clusters.

- Support for containerized Kafka via Kubernetes and Portworx®, enabling stateful application recovery using volume snapshots in dynamic environments.

Combined with Pure1®’s visibility and predictive analytics, teams can now proactively monitor Kafka storage health, automate snapshot lifecycle management, and confidently restore broker nodes with minimal operational burden.

Whether running Kafka in virtualized infrastructure or containerized platforms, FlashArray continues to offer the performance, protection, and simplicity required to keep your messaging infrastructure resilient and ready.
